In this week’s installment, we further break down graph technology as a natural fit for AI and machine learning projects across any industry.

Graph Technology as a Fabric for Context and Connections

There are no isolated pieces of information in the natural world, only rich, connected domains all around us.

Graph theory and graph technologies were developed specifically to represent connected data and analyze relationships. Very simply, a graph is a mathematical representation of any type of network, and graph technologies are designed to treat the relationships between data as equally important as the data itself.

A graph database and platform stores and uses data as we might first sketch it out on a whiteboard – showing how each individual entity connects with or is related to others. It acts as a fabric for our data, imbuing it with context and connections.

Graph technologies enrich our data so it is more useful, much in the same way that individual stars in the sky become more meaningful as a constellation, or when we understand their context in the sky so as to use them for navigation.

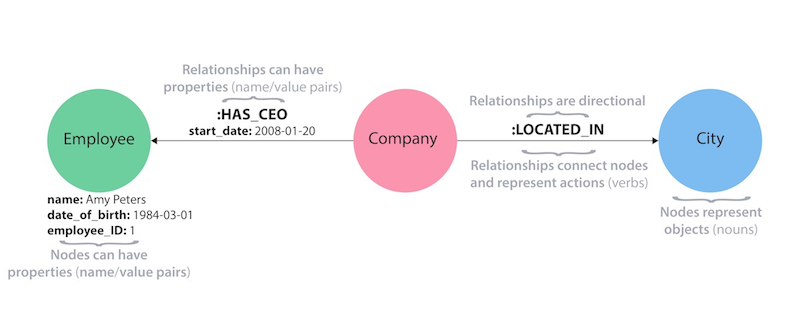

In a property graph model, nodes represent objects and directed relationships connect them; both nodes and relationships can have properties (attributes). Woven together, they create a fabric of context and connections that makes our data more meaningful and useful.
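To make the property graph model concrete, here is a minimal sketch in plain Python. It is an illustration only and not how any graph database stores data internally: the class names (`Node`, `Relationship`, `PropertyGraph`), the method names and the sample people and car are all assumptions made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """An object in the graph, with a label and arbitrary properties."""
    node_id: str
    label: str
    properties: dict = field(default_factory=dict)


@dataclass
class Relationship:
    """A directed, typed connection from one node to another."""
    start: str      # node_id of the source node
    end: str        # node_id of the target node
    rel_type: str
    properties: dict = field(default_factory=dict)


class PropertyGraph:
    """Hypothetical in-memory container for nodes and relationships."""

    def __init__(self):
        self.nodes = {}
        self.relationships = []

    def add_node(self, node_id, label, **properties):
        self.nodes[node_id] = Node(node_id, label, properties)

    def add_relationship(self, start, end, rel_type, **properties):
        if start not in self.nodes or end not in self.nodes:
            raise KeyError("both endpoints must exist before connecting them")
        self.relationships.append(Relationship(start, end, rel_type, properties))

    def outgoing(self, node_id, rel_type=None):
        """Return the nodes reached by following one outgoing relationship."""
        return [
            self.nodes[r.end]
            for r in self.relationships
            if r.start == node_id and (rel_type is None or r.rel_type == rel_type)
        ]


# Made-up sample data: two people and a car.
graph = PropertyGraph()
graph.add_node("ann", "Person", name="Ann")
graph.add_node("dan", "Person", name="Dan")
graph.add_node("car", "Car", brand="Volvo")
graph.add_relationship("ann", "dan", "LOVES", since=2015)
graph.add_relationship("dan", "car", "DRIVES")

# Follow the directed LOVES relationship starting from Ann.
loved_by_ann = [n.properties["name"] for n in graph.outgoing("ann", "LOVES")]
```

Because relationships are stored as first-class records with their own type and properties, a question such as "whom does Ann love, and since when?" is answered by following connections rather than by joining tables.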

Graph technologies were originally custom-built and used internally by game-changing companies like Facebook, Google, Uber, Netflix, Twitter and LinkedIn because relational databases fell short of their need to find connections between people, places, locations and systems in their data.

In 2002, Neo4j invented the property graph model and went on to build a graph database so organizations could quickly traverse millions of data connections per second.

Graphs are used across multiple industries, including government, financial services, healthcare, retail, manufacturing and more. Graph technology deployments encompass a broad variety of use cases that include fraud detection, cybersecurity, real-time recommendations, network & IT operations, master data management and customer 360.

More recently, graph technologies have been increasingly integrated with machine learning and artificial intelligence solutions. These applications include using connections to predict with better accuracy, to make decisions with more flexibility, to track verifiable data lineage, and to understand decision pathways for improved explainability.

Furthermore, graph technology has been recognized as a major leap forward in machine learning. In “Relational inductive biases, deep learning, and graph networks,” a recent paper from DeepMind, Google Brain, MIT and the University of Edinburgh, researchers advocate for “… building stronger relational inductive biases into deep learning architectures by highlighting an underused deep learning building block called a graph network, which performs computations over graph-structured data.”

The researchers found that graphs had an unparalleled ability to generalize about structure, which broadens applicability because, “… graph networks can support relational reasoning and combinatorial generalization, laying the foundation for more sophisticated, interpretable and flexible patterns of reasoning.”
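To give a feel for what "computations over graph-structured data" can mean, here is a heavily simplified sketch of one neighborhood-aggregation step in plain Python. It is not the graph network block defined in the paper, which also uses edge and global attributes and learned update functions; the function name `message_passing_step`, the averaging rule and the toy data are assumptions chosen purely for illustration.

```python
def message_passing_step(features, edges):
    """Replace each node's feature vector with the mean of its own vector
    and the vectors of the nodes that point to it."""
    incoming = {node: [] for node in features}
    for source, target in edges:
        incoming[target].append(source)

    updated = {}
    for node, value in features.items():
        neighborhood = [value] + [features[s] for s in incoming[node]]
        updated[node] = [
            sum(vec[i] for vec in neighborhood) / len(neighborhood)
            for i in range(len(value))
        ]
    return updated


# Toy graph: three nodes with two-dimensional features and directed edges.
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
edges = [("a", "b"), ("b", "c"), ("a", "c")]

updated = message_passing_step(features, edges)
```

After one step, node "c" carries information from both "a" and "b" even though its own features started at zero. The same function works unchanged on a graph of any size or shape, which is one intuition behind the generalization the researchers describe.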

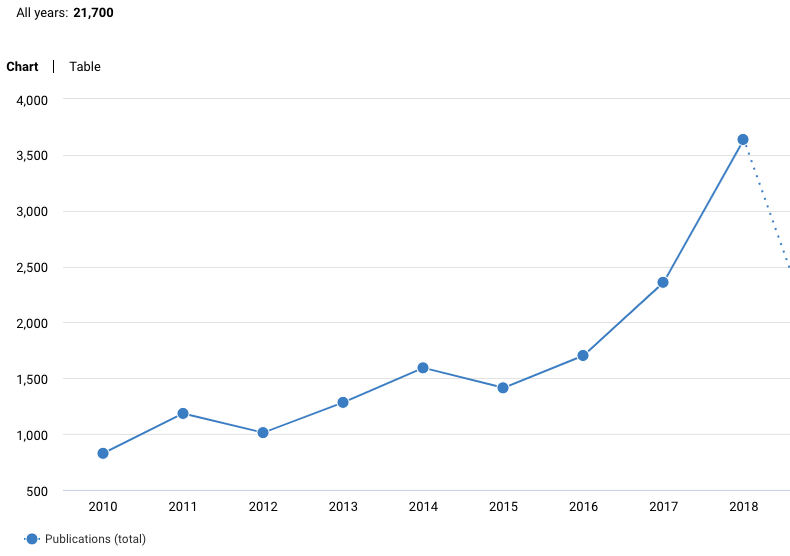

The amount of research published on the use of graphs with AI has also skyrocketed in recent years, as the chart of graph-related research from 2010 to 2018 shows.

Chart from the Dimensions knowledge system for research discovery using the search terms “graph neural network” OR “graph convolutional” OR “graph embedding” OR “graph learning” OR “graph attention” OR “graph kernel” OR “graph completion.”

Conclusion

For any machine learning or AI application, data quality – and not just quantity – is foundational. If we use contextual data, we create systems that are more reliable, robust and trustworthy.

We believe that graph technologies – which naturally store and analyze connections – allow us to holistically advance these goals.

Stay tuned for next week’s blog in this series, where you’ll learn how graph technology promotes better predictions and improved flexibility.

Catch up with the rest of the Toward AI Standards series:

Ready to learn more about how graph technology enhances your AI projects?

Read the white paper, Artificial Intelligence & Graph Technology: Enhancing AI with Context & Connections

