GenAI Stack

The GenAI Stack is a collaboration between Docker, Neo4j, LangChain and Ollama, launched at DockerCon 2023.

Installation

On macOS, install and start Ollama first; then you can use the GenAI Stack.
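To confirm that Ollama is up before starting the stack, a small check like the following may help. This is a minimal sketch, not part of the GenAI Stack: the function name `ollama_is_running` is hypothetical, and it assumes Ollama listens on its default local address, http://localhost:11434.

```python
import urllib.request


def ollama_is_running(base_url="http://localhost:11434", timeout=2.0):
    """Return True if an HTTP server answers with status 200 at base_url.

    Assumption: base_url is where Ollama listens (its default local port).
    """
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers refused connections, timeouts and HTTP errors
        # (urllib's URLError and HTTPError are OSError subclasses).
        return False
```

Calling `ollama_is_running()` before `docker compose up` gives a quick yes/no answer. If Ollama runs elsewhere, pass that address as `base_url`.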

    git clone https://github.com/docker/genai-stack
    cd genai-stack
    # optionally copy env.example to .env and add your OpenAI/Bedrock/Neo4j credentials for remote models
    docker compose up

Functionality Includes

-

Docker Setup with local or remote LLMs, Neo4j and LangChain demo applications

-

Pull Ollama Models and sentence transformer as needed

-

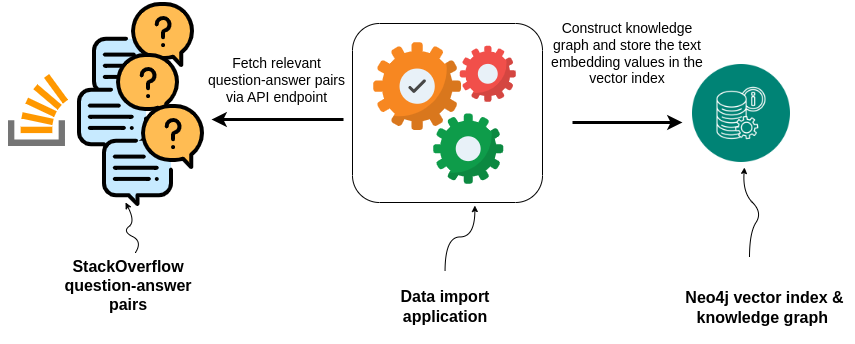

Import Stackoverflow Questions and Answers for a certain tag, e.g.

langchain -

Create Knowledge Graph and vector embeddings for questions and answers

-

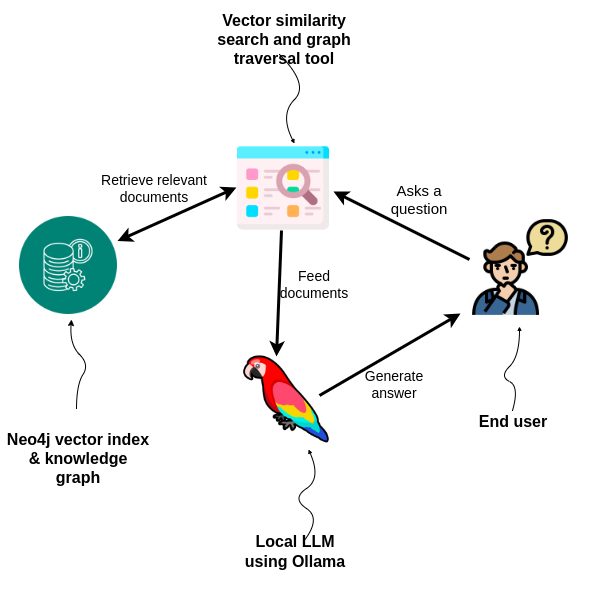

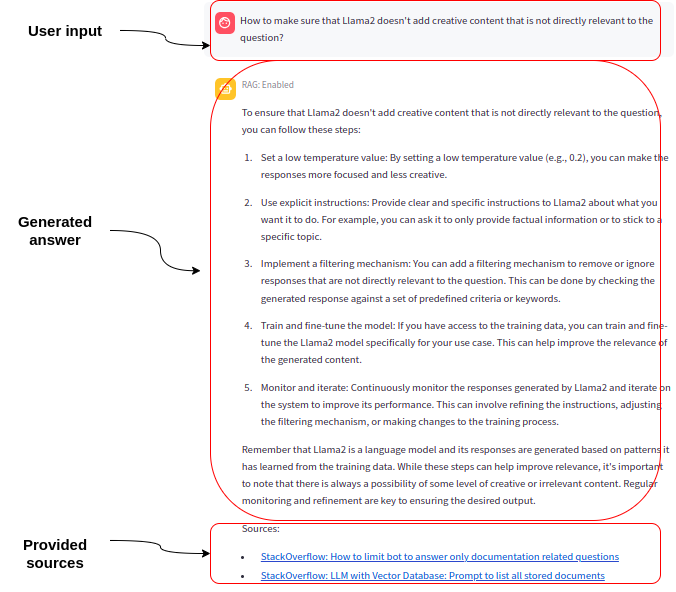

Streamlit Chat App with vector search and GraphRAG (vector + graph) answer generation

-

Creating "Support Tickets" for unanswered questions taking good questions from StackOverflow and the actual question into account

-

PDF chat with loading PDFs, chunking, vector indexing and search to generate answers

-

Python Backend and Svelte Front-End Chat App with vector search and GraphRAG (vector + graph) answer generation
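The chunking, vector search and graph steps named in the list can be illustrated with a toy example. This is a minimal sketch and not the GenAI Stack's implementation. The word-count "embedding" stands in for a real embedding model, a plain dictionary stands in for the Neo4j graph, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping character windows."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
        start += step
    return chunks


def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def vector_search(query, texts, k=2):
    """Return the k texts most similar to the query as (score, text) pairs."""
    q = embed(query)
    scored = [(cosine(q, embed(t)), t) for t in texts]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def graph_expand(hits, answers_by_question):
    """Graph step: attach the answers linked to each retrieved question."""
    return [(text, answers_by_question.get(text, [])) for _, text in hits]
```

In this sketch, plain vector search returns only the matching texts, while the vector + graph variant also follows links from each hit (here, question to answers) to collect more context for answer generation.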

Documentation

A detailed walkthrough of the GenAI Stack is available at https://neo4j.com/developer-blog/genai-app-how-to-build/.

Relevant Links

| Authors | Michael Hunger, Tomaz Bratanic, Oskar Hane and many contributors |
| Community Support | |
| Repository | https://github.com/docker/genai-stack |
| Issues | |