Using a Knowledge Graph to Implement a RAG Application

Graph ML and GenAI Research, Neo4j

8 min read

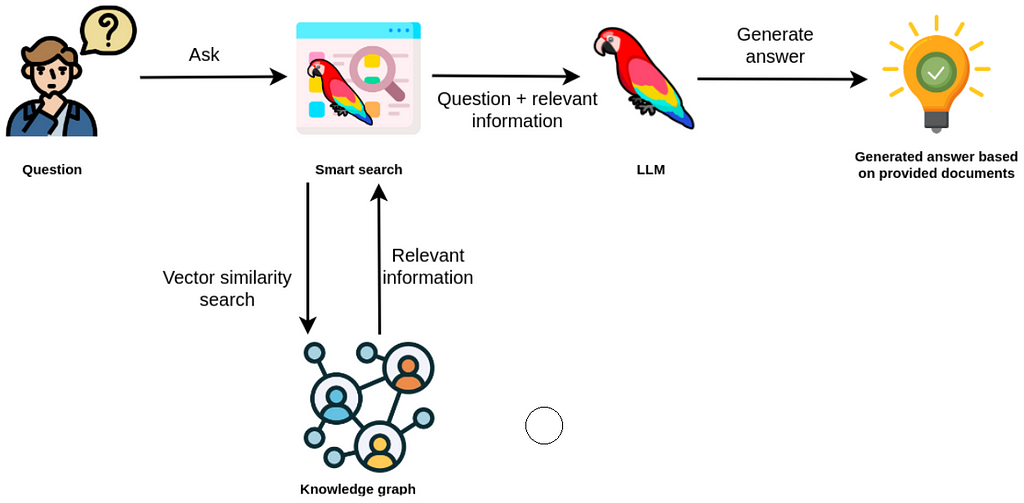

Forbes recently named RAG applications the hottest thing in AI. That comes as no surprise since Retrieval-Augmented Generation requires minimal code and helps build user trust in your LLM. The challenge when building a great RAG app or chatbot is handling structured text alongside unstructured text.

Unstructured text, which might be chunked or embedded, feeds easily into a RAG workflow, but other data sources require more preparation to ensure accuracy and relevancy. In these cases, you can create daily snapshots of your architecture and then transform those into text that your LLM will understand. This is another way, however — knowledge graphs can store both structured and unstructured text within a single database, reducing the work required to give your LLM the information it needs.

In this blog, we’ll look at an example of creating a chatbot to answer questions about your microservices architecture, ongoing tasks, and more using a knowledge graph.

What Is a Knowledge Graph?

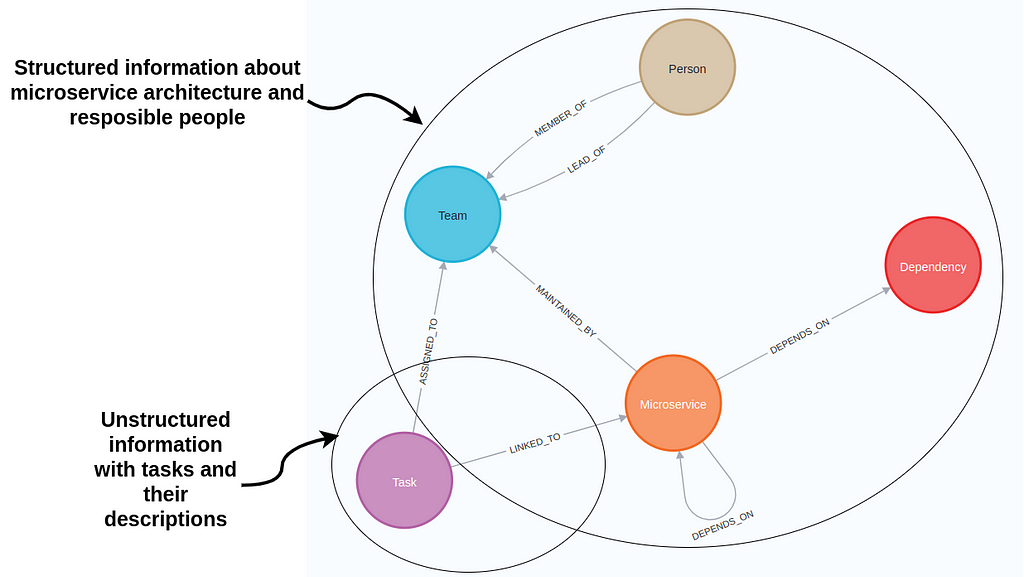

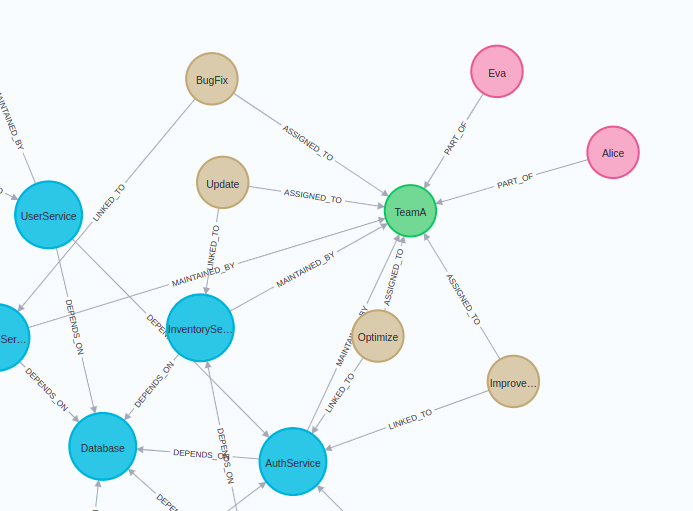

A knowledge graph captures information about data points or entities in a domain or a business and the relationships between them. Data is described as nodes and relationships within a knowledge graph.

Nodes represent data points or entities like people, organizations, and locations. In the microservice graph example, nodes describe people, teams, microservices, and tasks. Relationships are used to define the connections between these entities, like dependencies between microservices or task owners.

Both nodes and relationships can have property values stored as key-value pairs.

The Developer’s Guide: How to Build a Knowledge Graph

Get the free guide to building and using knowledge graphs for data-driven applications.

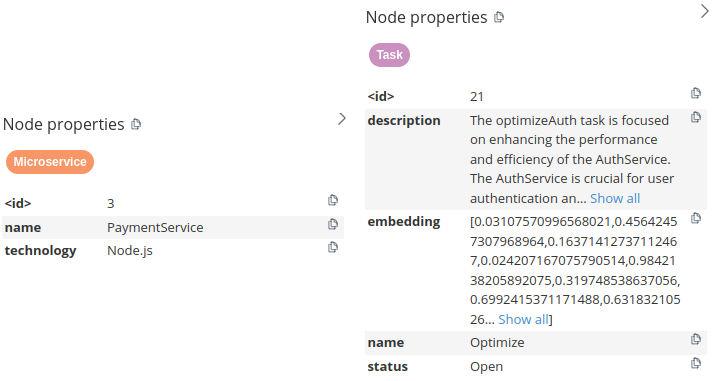

The microservice nodes have two node properties: name and technology. Task nodes are more complex: they have name, status, description, and embedding properties.

Storing text embedding values as node properties allows you to perform a vector similarity search of task descriptions the same as if the tasks were stored in a vector database.

GraphRAG is a technique that enhances RAG with knowledge graphs. We’ll walk you through a scenario that shows how to implement a GraphRAG application with LangChain to support your DevOps team. The code is available on GitHub.

Neo4j Environment Setup

First, you’ll need to set up a Neo4j 5.11 instance, or greater, to follow along with the examples. The easiest way is to start a free cloud instance of the Neo4j database on Neo4j Aura. Or, you can also set up a local instance of the Neo4j database by downloading the Neo4j Desktop application and creating a local database instance.

from langchain.graphs import Neo4jGraph

url = "neo4j+s://databases.neo4j.io"

username ="neo4j"

password = ""

graph = Neo4jGraph(

url=url,

username=username,

password=password

)Dataset

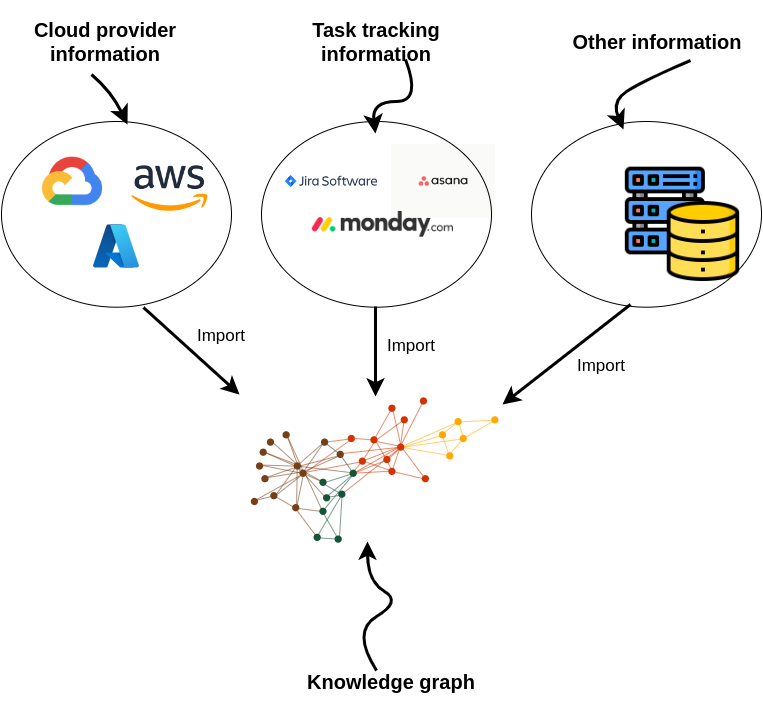

Knowledge graphs are excellent at connecting information from multiple data sources. When developing a DevOps RAG application, you can fetch information from cloud services, task management tools, and more.

Since this kind of microservice and task information is not public, we created a synthetic dataset. We employed ChatGPT to help us. It’s a small dataset with only 100 nodes, but enough for this tutorial. The following code will import the sample graph into Neo4j.

import requests

url = "https://gist.githubusercontent.com/tomasonjo/08dc8ba0e19d592c4c3cde40dd6abcc3/raw/da8882249af3e819a80debf3160ebbb3513ee962/microservices.json"

import_query = requests.get(url).json()['query']

graph.query(

import_query

)You should see a similar visualization of the graph in the Neo4j Browser.

Blue nodes describe microservices. These microservices may have dependencies on one another. It’s implied that one microservice’s ability to function or provide an outcome may be reliant on another’s operation.

The brown nodes represent tasks that directly link to these microservices. Together, our graph example shows how microservices are set up, their linked tasks, and the teams associated with each.

Neo4j Vector Index

We’ll begin by implementing a vector index search to find relevant tasks by their name and description. If you’re unfamiliar with vector similarity search, here’s a quick refresher. The key idea is to calculate the text embedding values for each task based on their description and name. Then, at query time, find the most similar tasks to the user input using a similarity metric like a cosine distance.

The retrieved information from the vector index can then be used as context to the LLM so it can generate accurate and up-to-date answers.

The tasks are already in our knowledge graph. However, we must calculate the embedding values and create the vector index. Here, we’ll use the from_existing_graph method.

import os

from langchain.vectorstores.neo4j_vector import Neo4jVector

from langchain.embeddings.openai import OpenAIEmbeddings

os.environ['OPENAI_API_KEY'] = "OPENAI_API_KEY"

vector_index = Neo4jVector.from_existing_graph(

OpenAIEmbeddings(),

url=url,

username=username,

password=password,

index_name='tasks',

node_label="Task",

text_node_properties=['name', 'description', 'status'],

embedding_node_property='embedding',

)In this example, we used the following graph-specific parameters for the from_existing_graph method.

index_name: name of the vector index.node_label: node label of relevant nodes.text_node_properties: properties to be used to calculate embeddings and retrieve from the vector index.embedding_node_property: which property to store the embedding values to.

Now that the vector index is initiated, we can use it as any other vector index in LangChain.

response = vector_index.similarity_search(

"How will RecommendationService be updated?"

)

print(response[0].page_content)

# name: BugFix

# description: Add a new feature to RecommendationService to provide ...

# status: In ProgressYou’ll see that we construct a response of a map or dictionary-like string with defined properties in the text_node_properties parameter.

Now, we can easily create a chatbot response by wrapping the vector index into a RetrievalQA module.

from langchain.chains import RetrievalQA

from langchain.chat_models import ChatOpenAI

vector_qa = RetrievalQA.from_chain_type(

llm=ChatOpenAI(),

chain_type="stuff",

retriever=vector_index.as_retriever()

)

vector_qa.run(

"How will recommendation service be updated?"

)

# The RecommendationService is currently being updated to include a new feature

# that will provide more personalized and accurate product recommendations to

# users. This update involves leveraging user behavior and preference data to

# enhance the recommendation algorithm. The status of this update is currently

# in progress.One general limitation of vector indexes is they don’t provide the ability to aggregate information like you would using a structured query language like Cypher. Consider the following example:

vector_qa.run(

"How many open tickets are there?"

)

# There are 4 open tickets.The response seems valid, in part because the LLM uses assertive language. However, the response directly correlates to the number of retrieved documents from the vector index, which is four by default. So when the vector index retrieves four open tickets, the LLM unquestioningly believes there are no additional open tickets. However, we can validate whether this search result is true using a Cypher statement.

graph.query(

"MATCH (t:Task {status:'Open'}) RETURN count(*)"

)

# [{'count(*)': 5}]There are five open tasks in our toy graph. Vector similarity search is excellent for sifting through relevant information in unstructured text, but lacks the capability to analyze and aggregate structured information. Using Neo4j, this problem is easily solved by employing Cypher, a structured query language for graph databases.

Graph Cypher Search

Cypher is a structured query language designed to interact with graph databases. It provides a visual way of matching patterns and relationships and relies on the following ascii–art type of syntax:

(:Person {name:"Tomaz"})-[:LIVES_IN]->(:Country {name:"Slovenia"})This pattern describes a node with the label Person and the name property Tomaz that has a LIVES_IN relationship to the Country node of Slovenia.

The neat thing about LangChain is that it provides a GraphCypherQAChain, which generates the Cypher queries for you, so you don’t have to learn Cypher syntax to retrieve information from a graph database like Neo4j.

The following code will refresh the graph schema and instantiate the Cypher chain.

from langchain.chains import GraphCypherQAChain

graph.refresh_schema()

cypher_chain = GraphCypherQAChain.from_llm(

cypher_llm = ChatOpenAI(temperature=0, model_name='gpt-4'),

qa_llm = ChatOpenAI(temperature=0), graph=graph, verbose=True,

)Generating valid Cypher statements is a complex task. Therefore, it is recommended to use state-of-the-art LLMs like gpt-4 to generate Cypher statements, while generating answers using the database context can be left to gpt-3.5-turbo.

Now, you can ask the same question about the number of open tickets.

cypher_chain.run(

"How many open tickets there are?"

)Result is the following:

You can also ask the chain to aggregate the data using various grouping keys, like the following example.

cypher_chain.run(

"Which team has the most open tasks?"

)Result is the following:

You might say these aggregations are not graph-based operations, and that’s correct. We can, of course, perform more graph-based operations like traversing the dependency graph of microservices.

cypher_chain.run(

"Which services depend on Database directly?"

)Result is the following:

Of course, you can also ask the chain to produce variable-length path traversals by asking questions like:

cypher_chain.run(

"Which services depend on Database indirectly?"

)Result is the following:

Some of the mentioned services are the same as in the directly dependent question. The reason is the structure of the dependency graph and not the invalid Cypher statement.

Knowledge Graph Agent

We’ve implemented separate tools for the structured and unstructured parts of the knowledge graph. Now we can add an agent to use these tools to explore the knowledge graph.

from langchain.agents import initialize_agent, Tool

from langchain.agents import AgentType

tools = [

Tool(

name="Tasks",

func=vector_qa.run,

description="""Useful when you need to answer questions about descriptions of tasks.

Not useful for counting the number of tasks.

Use full question as input.

""",

),

Tool(

name="Graph",

func=cypher_chain.run,

description="""Useful when you need to answer questions about microservices,

their dependencies or assigned people. Also useful for any sort of

aggregation like counting the number of tasks, etc.

Use full question as input.

""",

),

]

mrkl = initialize_agent(

tools,

ChatOpenAI(temperature=0, model_name='gpt-4'),

agent=AgentType.OPENAI_FUNCTIONS, verbose=True

)Let’s try out how well the agent works.

response = mrkl.run("Which team is assigned to maintain PaymentService?")

print(response)Result is the following:

Let’s now try to invoke the Tasks tool.

response = mrkl.run("Which tasks have optimization in their description?")

print(response)Result is the following:

One thing is certain. I have to work on my agent’s prompt engineering skills. There’s definitely room for improvement in the tool description. You can also customize the agent prompt.

Knowledge graphs are well-suited for use cases involving both structured and unstructured data. The approach shown here allows you to avoid polyglot architectures, where you must maintain and sync multiple types of databases. Learn more about graph-based search in LangChain here.

The code is available on GitHub.

Learning Resources

To learn more about building smarter LLM applications with knowledge graphs, check out the other posts in this blog series.

- LangChain Library Adds Full Support for Neo4j Vector Index

- Implementing Advanced Retrieval RAG Strategies With Neo4j

- Harnessing Large Language Models With Neo4j

- Knowledge Graphs & LLMs: Multi-Hop Question Answering

- Construct Knowledge Graphs From Unstructured Text

- The Developer’s Guide: How to Build a Knowledge Graph