Neo4j for GenAI

Unify vector search, knowledge graph, and data science to build breakthrough GenAI apps that deliver highly accurate responses, rich context, and deep explainability.

Integrate Domain Knowledge

Incorporate and connect organizational data and facts for tailored, accurate responses

Enrich Responses With Context

Connect related facts across your data for more accurate and meaningful answers

Explain Retrieval Logic

Trace sources and understand connections between knowledge and responses

Accelerate AI & Agent Development

Seamlessly integrate with MCP, leading orchestration frameworks, and multi-agent systems

The GraphRAG Manifesto: Adding Knowledge to GenAI

Discover why GraphRAG will subsume vector-only RAG and emerge as the default RAG architecture for most use cases

GraphRAG Explained

Discover a smarter way to build GenAI apps with Neo4j GraphRAG. By combining knowledge graphs and vector search, GraphRAG infuses your AI with deep context and multi-hop reasoning for more accurate, relevant, and explainable results.
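To make the combination concrete, here is a minimal plain-Python sketch of the retrieval pattern described above: vector similarity picks a starting entity, then a multi-hop walk over the graph gathers connected facts as context. It is an illustration only and does not use Neo4j's API. The data, the 3-dimensional "embeddings", and the function names (`vector_seed`, `expand`, `graphrag_context`) are all hypothetical.

```python
import math

# Hypothetical toy data: tiny vectors stand in for real embedding model output.
EMBEDDINGS = {
    "Acme Corp": [0.9, 0.1, 0.0],
    "Widget X": [0.1, 0.9, 0.0],
    "Jane Doe": [0.0, 0.2, 0.9],
}

# Hypothetical knowledge graph as (source, relationship, target) triples.
TRIPLES = [
    ("Acme Corp", "MAKES", "Widget X"),
    ("Jane Doe", "WORKS_AT", "Acme Corp"),
    ("Widget X", "USES", "Part 7"),
]


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_seed(query_vec):
    """Vector search step: pick the entity closest to the query."""
    return max(EMBEDDINGS, key=lambda name: cosine(query_vec, EMBEDDINGS[name]))


def expand(seed, hops):
    """Graph step: collect facts within `hops` steps of the seed, in either direction."""
    frontier, seen, facts = {seed}, {seed}, []
    for _ in range(hops):
        next_frontier = set()
        for s, r, t in TRIPLES:
            if (s in frontier or t in frontier) and (s, r, t) not in facts:
                facts.append((s, r, t))
                for node in (s, t):
                    if node not in seen:
                        seen.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return facts


def graphrag_context(query_vec, hops=2):
    """Return the seed entity and the connected facts to hand to an LLM as context."""
    seed = vector_seed(query_vec)
    return seed, expand(seed, hops)


seed, facts = graphrag_context([1.0, 0.0, 0.0], hops=2)
for fact in facts:
    print(fact)
```

With `hops=1` the sketch returns only facts that touch the seed directly. Raising `hops` pulls in facts further away, which is the multi-hop part. Because each fact is an explicit triple, the context passed to the model can be traced back to its source.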

Capabilities

Build Your Next GenAI Breakthrough

Transform data from multiple sources into a graph-based model of entities, their attributes, and how they relate. Continuously enrich the graph by adding new data to uncover patterns and hidden relationships.
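As a rough illustration of that model, the sketch below keeps entities, their attributes, and their relationships in plain Python, and shows how a second data source enriches nodes that already exist. The `PropertyGraph` class, its method names, and the sample records are hypothetical and are not part of any Neo4j library.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    properties: dict = field(default_factory=dict)


@dataclass
class PropertyGraph:
    nodes: dict = field(default_factory=dict)          # node id -> Node
    relationships: list = field(default_factory=list)  # (start id, type, end id)

    def merge_node(self, node_id, label, **properties):
        """Create the node if it is missing, otherwise update its properties."""
        node = self.nodes.setdefault(node_id, Node(label))
        node.properties.update(properties)
        return node

    def relate(self, start_id, rel_type, end_id):
        """Add a relationship once; both endpoints must already exist."""
        if start_id not in self.nodes or end_id not in self.nodes:
            raise KeyError("both endpoints must be merged before relating them")
        rel = (start_id, rel_type, end_id)
        if rel not in self.relationships:
            self.relationships.append(rel)

    def neighbors(self, node_id):
        """Return ids directly connected to node_id, in either direction."""
        outgoing = {e for s, _, e in self.relationships if s == node_id}
        incoming = {s for s, _, e in self.relationships if e == node_id}
        return outgoing | incoming


graph = PropertyGraph()

# Source 1: a hypothetical CRM export.
graph.merge_node("c1", "Customer", name="Ada")
graph.merge_node("p1", "Product", name="Widget X")
graph.relate("c1", "PURCHASED", "p1")

# Source 2: hypothetical support tickets, enriching the same graph.
graph.merge_node("c1", "Customer", tier="gold")
graph.merge_node("t1", "Ticket", status="open")
graph.relate("t1", "ABOUT", "p1")

print(graph.nodes["c1"].properties)
print(graph.neighbors("p1"))
```

Merging by id rather than inserting blindly is what lets new data add to an existing entity instead of duplicating it. After the second source loads, the product is linked to both a customer and a ticket, a connection neither source held on its own.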

Learn More

Build smarter apps with fast semantic search across various data types. Find contextually related information and recommend connections based on similarity metrics.
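For a sense of what a similarity metric does, the sketch below ranks a few items by cosine similarity to a query vector. It is a brute-force plain-Python illustration, not how a vector index is implemented. The vectors, item names, and the `top_k` helper are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def top_k(query, items, k=2):
    """Return the k item names most similar to the query, best first."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in items.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


# Hypothetical 3-dimensional vectors; real embeddings have hundreds of dimensions or more.
DOCS = {
    "return policy": [0.9, 0.1, 0.1],
    "refund form": [0.8, 0.3, 0.0],
    "office hours": [0.0, 0.1, 0.9],
}

print(top_k([1.0, 0.0, 0.0], DOCS))
```

A query that points in roughly the same direction as "return policy" and "refund form" ranks those two first, even though no keywords are compared.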

Learn More

Analyze data connections and uncover deeper insights using 65+ production-ready algorithms for improved predictions and decision-making.
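As one example of the kind of analysis a graph algorithm provides, the sketch below runs a simple PageRank by power iteration over a small directed graph. It is a teaching version in plain Python under assumed defaults (`damping=0.85`, 50 iterations), not the production implementation. The edge list is hypothetical.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Score nodes by how much rank flows into them along directed edges."""
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for source, target in edges:
        out_links[source].append(target)

    count = len(nodes)
    rank = {n: 1.0 / count for n in nodes}
    for _ in range(iterations):
        # Rank held by nodes with no outgoing links is spread evenly over all nodes.
        dangling = sum(rank[n] for n in nodes if not out_links[n])
        new_rank = {n: (1 - damping) / count + damping * dangling / count for n in nodes}
        for n in nodes:
            for target in out_links[n]:
                new_rank[target] += damping * rank[n] / len(out_links[n])
        rank = new_rank
    return rank


# Hypothetical graph: three nodes point at "c", and "c" points back at "a".
EDGES = [("a", "c"), ("b", "c"), ("d", "c"), ("c", "a")]

scores = pagerank(EDGES)
for node, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(node, round(score, 3))
```

The node with the most incoming links ends up with the highest score, and the node it points to inherits much of that importance. Counting direct links alone would miss this second effect.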

Learn More

Accelerate GenAI app development with our integrations for popular AI frameworks and tools. Seamlessly incorporate graph capabilities into your projects using LangChain, LlamaIndex, Hugging Face, and more.

Learn More

Develop GenAI applications using the LLMs and services that best fit your needs, including state-of-the-art models and GenAI services from OpenAI, Google (Gemini and Vertex AI), Microsoft Azure OpenAI, and Amazon Bedrock, as well as open-source models from Hugging Face, Ollama, and others. With Neo4j, you can build powerful, scalable, and context-driven GenAI solutions with minimal complexity.

Learn More

Top Use Cases for Graph & AI

Create Knowledge Graphs for Accurate and Explainable Results

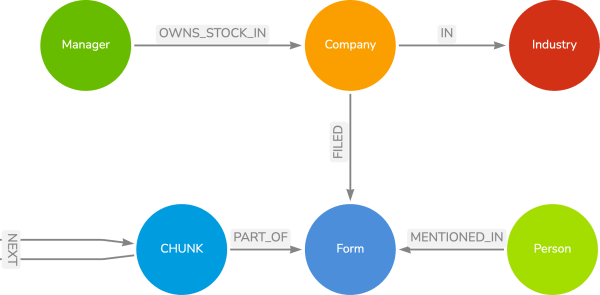

Quickly transform structured and unstructured data into a rich, connected knowledge graph. Our intuitive tools and workflows streamline the process of extracting entities, facts, and relationships from text, enabling you to create a powerful foundation for your GenAI app in minutes, not days.

Transform Interactions With GenAI-Powered Applications

Elevate customer engagement across all touchpoints with intelligent, context-aware chatbots built on Neo4j. By combining knowledge graphs, vector search, and LLMs, Neo4j enables you to create chatbots that deliver accurate, personalized, and efficient interactions, improving customer satisfaction and driving loyalty.

Enhance Search With GenAI and Knowledge Graphs

Deliver a better search experience with GenAI-powered semantic search, built on Neo4j knowledge graphs. Graph-backed search understands user intent, surfaces relevant results, and provides contextual recommendations for a more intuitive and satisfying experience.

Loved by Devs. Deployed Worldwide.

1,700+ organizations build on Neo4j for data breakthroughs.

Real World AI Innovations. Powered by Graph.

Explore GenAI Resources

Tools and guides for building cutting-edge GenAI-powered apps.

Neo4j & LLM Fundamentals

Learn the basics of Neo4j and the property graph model

4 hours

Importing Data Fundamentals

Learn how to import data into Neo4j

2 hours

Build a Neo4j-backed chatbot using Python

Build a chatbot using Neo4j, LangChain and Streamlit

2 hours

Build a Neo4j-backed chatbot with TypeScript

Build a chatbot using Neo4j, LangChain and Next.js

6 hours

Introduction to Vector Indexes and Unstructured Data

Understand and search unstructured data using vector indexes

2 hours

What is GraphRAG?

Discover how GraphRAG revolutionizes GenAI by using knowledge graphs to deliver accurate, reliable, and context-rich answers.

GraphRAG Python Package: GenAI With Knowledge Graphs

Transform unstructured data into knowledge graphs and enhance GenAI retrieval

The GraphRAG Manifesto: Adding Knowledge to GenAI

Discover why GraphRAG will surpass vector-only RAG as the default architecture

Get Started With GraphRAG: Neo4j’s Ecosystem Tools

Develop GenAI applications grounded with knowledge graphs

Neo4j Brings GraphRAG Capabilities for GenAI to Google Cloud

Learn about the native integrations with Google Cloud and Vertex AI

Using a Knowledge Graph to Implement a RAG Application

Learn about RAG and what Forbes recently named the hottest thing in AI

Unifying LLMs & Knowledge Graphs for GenAI: Use Cases

Learn how knowledge graphs and large language models (LLMs) can be used together

Implementing RAG: Write a Graph Retrieval Query in LangChain

Learn how to write the retrieval query that supplements or grounds the LLM’s answer

Accelerate GenAI using the GraphRAG Python package

Learn to build knowledge graphs, implement advanced retrievers, and create GraphRAG workflows

Kickstart GenAI Dev With Neo4j’s GraphRAG Ecosystem

Learn how to quickly start a knowledge graph from unstructured data

Go From GenAI Pilot to Production Faster with a Knowledge Graph

Learn to overcome challenges with hallucinations, search, data integrations, and more

Getting Started with GenAI

Learn how to build GenAI applications for real-world use cases

Building More Accurate GenAI Chatbots

Learn how knowledge graphs can back GenAI apps

Improved Results with Vector Search in Knowledge Graph

Learn the latest AI technologies in data analysis

Start Building GenAI Breakthroughs Today!

Neo4j unifies vector search, knowledge graph, and data science capabilities to accelerate building context-rich, explainable GenAI applications.