An AI agent is an application that uses GenAI models to think and act toward goals. It typically consists of a language model with instructions, access to tools, and an execution loop that allows it to reason, take action, and iterate until a task is complete.

What does that look like in the real world? Imagine you’re sick, and you share your symptoms with an AI agent. It pulls your lab results, evaluates diagnosis and treatment options in medical databases, checks for problematic drug interactions, and comes up with a treatment plan your doctor can refine.

This is the age of agentic AI. Agents can now go well beyond question-and-answer text generation. They can reason, plan, use tools, iteratively make progress, check their own work, and learn from experience. We increasingly trust them with rote, repetitive tasks, freeing human workers to focus on higher-value, judgment-driven projects and act as supervisors.

Today’s developers and AI engineers have shifted from asking “How do I get better outputs from an LLM?” to “How can I build agents that solve complex tasks?” It’s an enormous leap, and it’s already changing how we live and work.

More in this guide:

- The Recent History of AI: Why Are Agents Emerging Now?

- How Knowledge Graphs Provide Context for AI Agents

- What Are the Real-World Use Cases of AI Agents?

- What Are the Different Types of AI Agents?

- Can AI Agents Work Together?

- Challenges and Risks of AI Agents

- Best Practices for Building AI Agents

- What Are the Best AI Agent Frameworks?

- The Future of AI Agents

- Resources

- Frequently Asked Questions

The Recent History of AI: Why Are Agents Emerging Now?

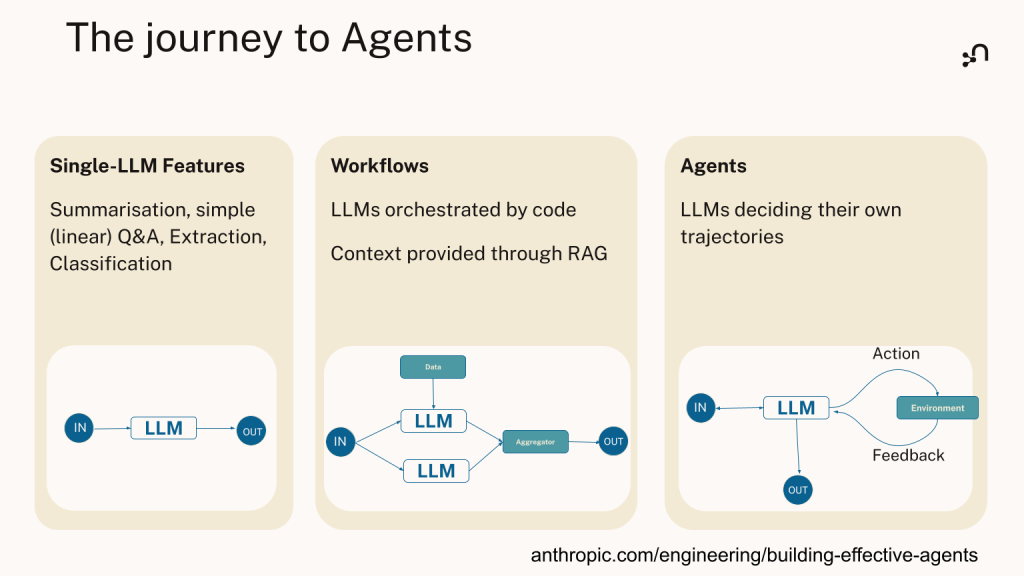

The path to agents makes sense when you look at the evolution of GenAI in recent years. You’ve probably lived through this progression yourself:

- Large language models (LLMs) gave us the text generation foundation. You could get great responses in text if you input a good prompt, but that was it.

- Retrieval-augmented generation (RAG) connected LLMs to data sources to improve accuracy and reduce hallucinations, but it was still reactive — you asked, it retrieved and responded.

- GraphRAG used knowledge graphs for more accurate context retrieval. Instead of just using similarity search, systems could understand how things were related to each other.

- Workflows brought orchestration and multi-step processes. Now you could chain together multiple LLM calls, add conditional logic, and create more complex, repeatable, automated processes. But they still followed predefined paths.

- Agents use LLMs for decision-making. They are capable of observing their environment, using tools, taking action, and learning from past interactions. Rather than just following fixed workflows, agents can dynamically plan and determine their path to completing tasks.

Agentic behaviors are possible thanks to recent advances in AI technology, including:

- Better reasoning: Modern LLMs, trained with reinforcement learning, can handle multi-step reasoning much more reliably. Reasoning models can use chains of thought to make logical deductions and justify their decisions. This also helps with breaking down complex tasks into smaller subtasks to be planned and executed as a workflow.

- Bigger context window: The context window capacity of LLMs has been increasing massively every year. Compared to a few years ago, LLMs are now capable of processing significantly larger amounts of input and output tokens.

- Tool selection: Modern LLMs have been extensively trained to improve their tool selection and function calling capabilities. This matters more as agents are given larger sets of tools, because choosing correctly among many options is harder.

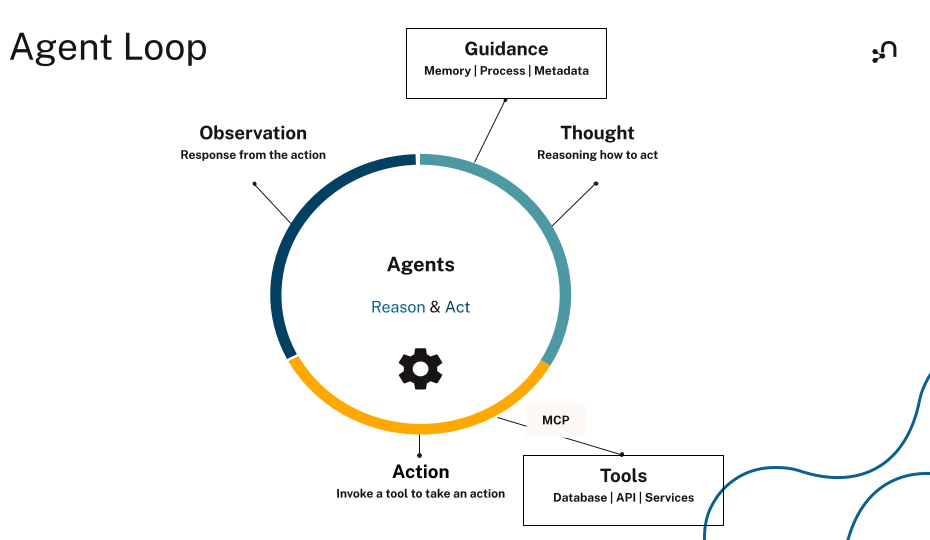

- Tool integration: Previously, integrating LLMs with APIs required extensive custom coding, complex authentication and response handling, and maintaining separate interfaces for each tool or service. Now, unified standards like the Model Context Protocol (MCP) make it easy to connect LLMs to external tools (databases, web services, APIs, etc.) through consistent, prebuilt connectors.

- Structured memory and context: Agents can now connect to structured memory systems (such as databases and knowledge graphs) and run queries to retrieve accurate, context-aware information along with its metadata. Intelligent agents need structured memory for contextual reasoning to avoid inference errors and hallucinations.

These breakthroughs transformed LLMs from simple text generators into systems capable of reasoning, performing complex tasks, and adapting to new contexts with minimal human oversight.

We used to build software in a deterministic way — inputs, conditions, outputs. Agents are different. They’re flexible, iterative, and designed for open-world tasks.

What Are the Components of an AI Agent?

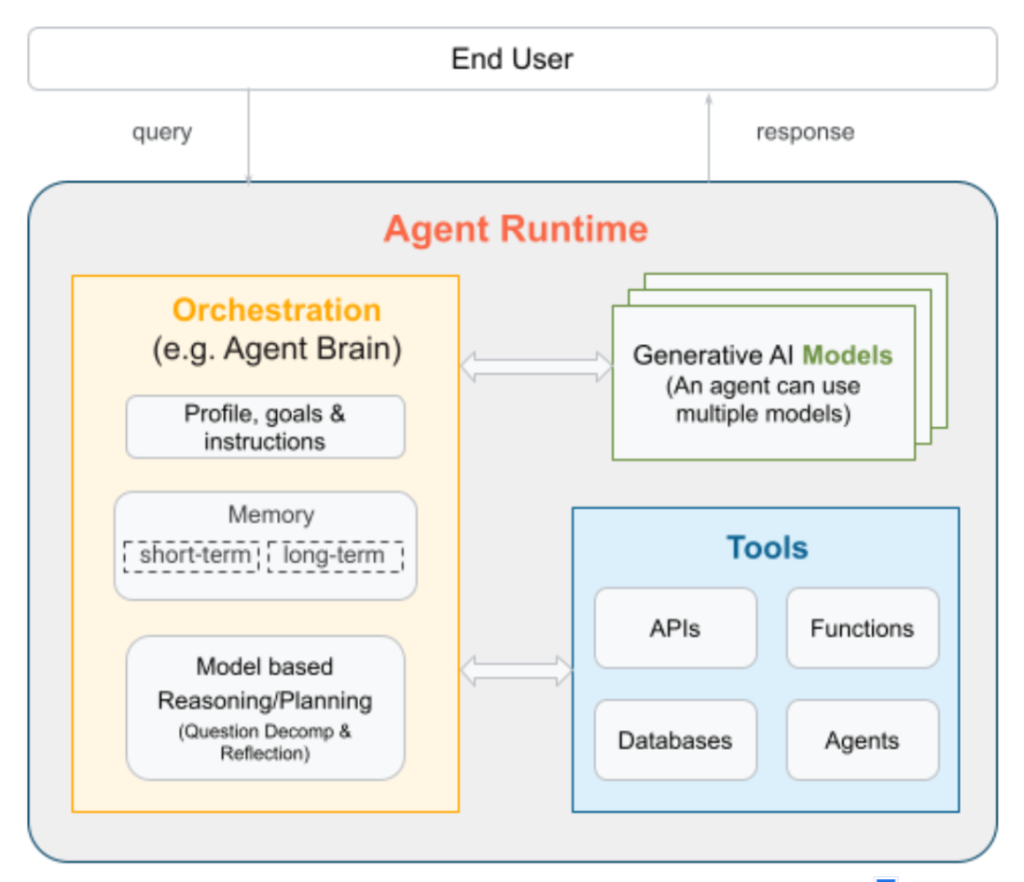

AI agents have three fundamental components: a GenAI model, tools, and orchestration.

The core of an AI agent is really an LLM with instructions and a set of tools that runs in a reasoning and execution loop to complete a goal.

Model

The AI model, such as an LLM, is the core engine of an AI agent. It plans, reasons, decides what to do, and observes the results. This includes:

- Interpreting and breaking down the user’s goal or instruction

- Analyzing any information provided (context, memory, retrieved documents, etc.)

- Planning what action to take, whether it’s answering directly, retrieving more info, or calling a tool

- Observing the outputs from tool calls and integrating them into the context for fulfilling the overall task

The model doesn’t have to know or do everything; it just needs to reason well with the information it has. It needs to be paired with structured memory systems, external tools, and guardrails to enable reliable decision-making and autonomous execution.

Tools

Tools make agents practically useful. They extend the model beyond language, letting it interact with external systems to retrieve relevant information and carry out tasks. Tools are how the agent actually gets things done — fetching data, making API calls, or even triggering workflows. For example:

- Data access: Agents can retrieve and manipulate data from databases or knowledge graphs using generated or parametrized queries.

- API: Agents can interact with internal or external systems over HTTP or RPC, including web search.

- Function calling: Agents can call predefined functions to perform specific tasks.

- Analytics and computation: Agents can analyze data, generate programs, perform calculations, and generate charts and other assets.

- Operators: Agents enabled with operators can use a browser or computer to run programs.

The Model Context Protocol (MCP) has made tool integration much easier by standardizing tool discovery, authorization, integration, and usage. Besides tools, it gives agents access to static resources and prompts, and it supports several input and output content types. The protocol includes definitions and annotations for metadata, parameter schemas, and behavior guidance that make tools easier for agents to understand and invoke dynamically across frameworks.
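To make the idea of standardized tool metadata concrete, here is a minimal Python sketch. It assumes tool definitions arrive in the shape MCP uses when a server answers a `tools/list` request (`name`, `description`, a JSON Schema `inputSchema`, and optional `annotations` such as `readOnlyHint`). The two tools and the `requires_approval` and `describe_for_model` helpers are hypothetical; a real client would receive the definitions from an MCP server rather than hard-code them.

```python
# Tool definitions in the shape MCP servers return from a tools/list request.
# Both tools and their parameters are hypothetical examples.
tools = [
    {
        "name": "get_lab_results",
        "description": "Return the most recent lab results for a patient.",
        "inputSchema": {
            "type": "object",
            "properties": {"patient_id": {"type": "string"}},
            "required": ["patient_id"],
        },
        # Annotations are behavior hints from the server, not enforced guarantees.
        "annotations": {"readOnlyHint": True},
    },
    {
        "name": "update_prescription",
        "description": "Change a patient's active prescription.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "patient_id": {"type": "string"},
                "drug": {"type": "string"},
                "dose_mg": {"type": "number"},
            },
            "required": ["patient_id", "drug", "dose_mg"],
        },
        "annotations": {"readOnlyHint": False, "destructiveHint": True},
    },
]


def requires_approval(tool: dict) -> bool:
    """Hypothetical client policy: ask a human before any tool not marked read-only."""
    return not tool.get("annotations", {}).get("readOnlyHint", False)


def describe_for_model(tool: dict) -> str:
    """Render a one-line summary an orchestrator could place in the model's prompt."""
    params = ", ".join(tool["inputSchema"]["properties"])
    return f"{tool['name']}({params}): {tool['description']}"


for tool in tools:
    print(describe_for_model(tool), "| needs approval:", requires_approval(tool))
```

Treating a missing `readOnlyHint` as “needs approval” is a deliberately conservative choice. The MCP specification also asks clients to treat annotations as untrusted unless they come from a trusted server, so a hint like this should inform a policy, not replace one.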

Orchestration

Orchestration engines manage and coordinate the components of AI agents and run their reasoning loops, allowing agents to:

- Reason and plan the next action

- Select tools with extracted parameters

- Execute the tools (possibly in parallel)

- Evaluate the results

- Determine the next iteration of the loop

They receive user input; retrieve memories, guidelines, metadata, and guardrails; create a list of applicable tools; and pass all of that as context with the appropriate system prompt to the LLM iteratively.
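The loop described above can be sketched in plain Python. This is an illustration under stated assumptions, not any framework’s API: `scripted_llm` is a hypothetical stand-in for a real model call that returns either tool calls or a final answer, the two tools return canned strings, and the message format only loosely mirrors common chat APIs.

```python
# Hypothetical tools with canned outputs; real tools would call APIs or databases.
TOOLS = {
    "get_weather": lambda city: f"Sunny in {city}",
    "get_events": lambda city: f"Jazz festival in {city}",
}


def scripted_llm(messages: list[dict]) -> dict:
    """Stand-in for a model call: returns tool calls to make, or a final answer."""
    observations = [m["content"] for m in messages if m["role"] == "tool"]
    if not observations:
        # Reason and plan: choose tools and extract their parameters from the request.
        return {"tool_calls": [("get_weather", "Lisbon"), ("get_events", "Lisbon")]}
    # Evaluate: the results cover the request, so finish instead of looping again.
    return {"final": "; ".join(observations)}


def run_agent(user_input: str, system_prompt: str, memories: list[str],
              max_iterations: int = 5) -> str:
    # Assemble context: system prompt, retrieved memories, and the applicable tools.
    context = "\n".join([
        system_prompt,
        "Known facts: " + "; ".join(memories),
        "Available tools: " + ", ".join(TOOLS),
    ])
    messages = [
        {"role": "system", "content": context},
        {"role": "user", "content": user_input},
    ]
    for _ in range(max_iterations):
        decision = scripted_llm(messages)
        if "final" in decision:
            return decision["final"]
        # Execute the selected tools (sequentially here; independent calls could run
        # in parallel) and append each result so the next iteration can see it.
        for name, argument in decision["tool_calls"]:
            messages.append({"role": "tool", "content": TOOLS[name](argument)})
    return "Stopped: iteration limit reached without a final answer."


print(run_agent("Plan my Saturday in Lisbon", "You are a travel assistant.",
                ["User prefers outdoor activities"]))
```

The `max_iterations` cap is what keeps a misbehaving model from looping forever; real orchestration engines add richer evaluation between iterations.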

Orchestration engines support AI agents in three key areas: instructions, profile, and goals; memory; and model-based reasoning and planning.

Instructions, Profile, and Goals

Instructions guide the agent in its main operations and interactions — what it’s meant to do, how it’s serving the user, how it should approach tasks, etc. They often contain examples of effective tool calls and successful answers with the reasoning and execution flow behind them. An agent profile determines its style, succinctness, and fallback behaviors, as well as how to interact with users in certain situations (e.g., when key information is missing). Goals steer the agent toward factual, valuable answers, so that ineffective chains of thought can be stopped and intermediate results can be evaluated.
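One way to keep instructions, profile, and goals separate and reviewable is to store them as structured fields and render them into the system prompt at run time. The sketch below assumes that approach; the `AgentSpec` class, the section headings, and the example content are hypothetical, and there is no standard format for this.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    """Hypothetical container for the instruction layers sent to the model as a system prompt."""
    instructions: str               # what the agent does and how it approaches tasks
    profile: str                    # style, succinctness, and fallback behavior
    goals: list[str]                # criteria for useful answers and when to stop
    examples: list[str] = field(default_factory=list)  # sample tool calls and answers

    def system_prompt(self) -> str:
        sections = [
            "## Instructions\n" + self.instructions,
            "## Profile\n" + self.profile,
            "## Goals\n" + "\n".join(f"- {goal}" for goal in self.goals),
        ]
        if self.examples:
            sections.append("## Examples\n" + "\n\n".join(self.examples))
        return "\n\n".join(sections)


spec = AgentSpec(
    instructions="Help clinicians draft treatment plans. Fetch patient data with tools before answering.",
    profile="Be concise. If key information such as current medications is missing, ask for it instead of guessing.",
    goals=[
        "Base every recommendation on retrieved patient data or cited guidelines.",
        "Stop and report if three consecutive tool calls add no new information.",
    ],
    examples=["User: Summarize labs for patient 42\nTool call: get_lab_results(patient_id='42')"],
)
print(spec.system_prompt())
```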

Memory

AI agents need memory to hold onto important knowledge, past interactions, and context. Agents usually have two types of memory:

- Short-term memory: Keeps track of the current task, ongoing conversation, recent results, etc.

- Long-term memory: Stores important information the agent should remember across tasks, such as past user interactions, business rules, and historical insights. It is generally divided into episodic memory (records of past interactions and executions), semantic memory (facts and structured knowledge), and procedural memory (rules, instructions, and learned ways of performing tasks), and it’s often provided by unstructured vector databases or structured knowledge graphs.

Reliable memory systems allow agents to keep conversations coherent, personalize responses, and learn from experience.
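The split between short-term and long-term memory can be illustrated with a small Python class. This is a simplified sketch: the short-term buffer is a bounded `deque`, and long-term recall uses naive word overlap as a hypothetical stand-in for the vector search or knowledge graph queries a production agent would use.

```python
from collections import deque


class AgentMemory:
    """Minimal sketch of the two memory types: a bounded short-term buffer and a long-term store."""

    def __init__(self, short_term_size: int = 4):
        # Short-term memory: only the most recent turns are kept; older ones fall off.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term memory: facts that persist across tasks and sessions.
        self.long_term: list[str] = []

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)

    def remember(self, fact: str) -> None:
        self.long_term.append(fact)

    def recall(self, query: str, limit: int = 3) -> list[str]:
        """Return stored facts sharing the most words with the query.

        Word overlap is a crude stand-in for vector similarity or a graph query.
        """
        words = set(query.lower().split())
        scored = [(len(words & set(fact.lower().split())), fact) for fact in self.long_term]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [fact for score, fact in ranked if score > 0][:limit]


memory = AgentMemory(short_term_size=2)
for turn in ["Hi there", "My name is Ana", "Please contact me by email"]:
    memory.add_turn(turn)
memory.remember("Ana prefers email over phone calls")
memory.remember("Refunds over $500 need manager approval")

print(list(memory.short_term))                     # ['My name is Ana', 'Please contact me by email']
print(memory.recall("how should we contact Ana"))  # ['Ana prefers email over phone calls']
```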

Model-Based Reasoning and Planning

Reinforcement learning applied to LLMs, especially on tasks whose success can be objectively measured (such as solving math problems or passing code tests), has allowed them to go beyond mere question answering and execute complex tasks successfully.

In an agentic setup, the AI model assumes multiple roles that are called and used independently with the appropriate context from previous steps and rounds:

- Planner/decider

- Gatherer/executor

- Observer/processor

- Judge

We’ll take a closer look at how agents reason and act in the next section.

How Do AI Agents Work?

There are many types of agents, and many of them reason and act, often in a loop, until they achieve their goal. ReAct, which combines reasoning and acting, is one of the most common AI agent patterns.

ReAct agents have advanced reasoning capabilities, which allow them to break goals down into component tasks, and they can use external tools (databases, transaction APIs, web browsers) to accomplish those tasks. They evaluate the end result, learn from mistakes they’ve made, and, if necessary, start over. Following is a breakdown of the ReAct cycle.

Reason

The reason step is the agent’s internal planning phase. Here, the LLM interprets the user’s request, checks what information it already has, and identifies gaps that need filling. Instead of rushing into tool calls or guesses, it uses the context available and outlines a path forward. This stage is about clarity: What is the user asking? And what will it take to get there?

Once the problem is framed, the agent decides on the smallest next step that will reduce uncertainty. It may set boundaries for itself, such as how many times to loop, what information will be “enough,” or which tools are relevant to the task. These constraints prevent wasted effort and give the process a clear direction.

For example, when asked to suggest a treatment plan for a patient with Type 2 diabetes, the agent thinks: “I need recent lab results, the patient’s medical history, and any current medications. I’ll start by retrieving the latest blood glucose levels and A1C results, then review lifestyle factors and comorbidities. I’ll stop once I can recommend a treatment plan that balances medications, diet, and exercise considerations.”
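The reason step’s bookkeeping (what is needed, what is already known, and how many loops remain) can be sketched as a small state object. The `ReasoningState` class and its field names are hypothetical; in a real agent, the model itself proposes the next step, and code like this only enforces the constraints it has set.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ReasoningState:
    """Hypothetical working state consulted during the reason step."""
    required: list[str]                        # information needed before answering
    known: dict = field(default_factory=dict)  # facts gathered so far
    max_loops: int = 5                         # self-imposed iteration budget
    loops_used: int = 0

    def gaps(self) -> list[str]:
        return [item for item in self.required if item not in self.known]

    def next_step(self) -> Optional[str]:
        """Pick the smallest next step that reduces uncertainty, or None to stop."""
        missing = self.gaps()
        if not missing or self.loops_used >= self.max_loops:
            return None
        return f"fetch:{missing[0]}"


state = ReasoningState(required=["lab_results", "medical_history", "medications"])
print(state.next_step())  # fetch:lab_results
state.known["lab_results"] = {"A1C": "elevated"}
print(state.next_step())  # fetch:medical_history
```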

Act

The act step is where the agent transforms its reasoning into a concrete action. The agent selects a tool or resource as determined in the reasoning step and interacts with it directly. This could be a search query, database retrieval, API request, function call, or calculation.

The key is that actions are deliberate and specific. A vague query often returns cluttered results, while a focused one reduces the noise and brings back exactly what the agent needs. By aligning actions tightly with reasoning, each move directly contributes to progress toward the goal.

Continuing the healthcare example, the agent queries an electronic health record (EHR) system for the patient’s most recent blood work, retrieves their medication list, and consults medical guidelines for managing Type 2 diabetes with similar profiles. Each of these actions supports the plan outlined in reasoning, keeping the process efficient.
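Here is a minimal sketch of the act step for the healthcare example, assuming three hypothetical lookup functions with canned results in place of real EHR and guideline services. Each action names one tool with explicit arguments, and because the lookups don’t depend on each other, they run concurrently with `concurrent.futures.ThreadPoolExecutor`.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical lookups with canned results, standing in for EHR and guideline services.
def get_recent_labs(patient_id: str) -> dict:
    return {"patient_id": patient_id, "A1C": "elevated"}


def get_medications(patient_id: str) -> list[str]:
    return ["metformin"]


def search_guidelines(condition: str, keywords: list[str]) -> str:
    return f"Guideline excerpt for {condition}: {', '.join(keywords)}"


# Each action is deliberate: one tool with explicit arguments, not an open-ended search.
actions = {
    "labs": (get_recent_labs, {"patient_id": "p-123"}),
    "medications": (get_medications, {"patient_id": "p-123"}),
    "guidelines": (search_guidelines,
                   {"condition": "type 2 diabetes", "keywords": ["elevated A1C", "metformin"]}),
}

# The three lookups are independent of each other, so they can run concurrently.
with ThreadPoolExecutor(max_workers=3) as pool:
    futures = {key: pool.submit(fn, **kwargs) for key, (fn, kwargs) in actions.items()}
    results = {key: future.result() for key, future in futures.items()}

print(results["guidelines"])
```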

Observe

The observe step is where the agent takes in the outcome of its actions and makes sense of it. It stores the results in memory and interprets them: what is useful, what fills knowledge gaps, and whether anything still needs clarification.

This interpretation is what allows the agent to be adaptive. A tool output may confirm a hypothesis, reveal an ambiguity, or even contradict expectations. By integrating this back into its working context, the agent keeps its plan dynamic and responsive to what it learns along the way.

In the healthcare scenario, the EHR data shows that the patient has elevated A1C levels and a family history of cardiovascular disease, and is currently prescribed metformin. The agent observes that these factors suggest a need for stronger glucose control and cardiovascular monitoring, which helps refine the treatment plan and identify potential next steps, such as considering additional medication or lifestyle adjustments.
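The observe step can be sketched as merging tool output into a working context while recording where each fact came from and flagging contradictions. The `observe` function and the two hypothetical sources (`ehr` and `pharmacy`) are assumptions for illustration; the second medication list is invented sample data.

```python
def observe(context: dict, source: str, output: dict) -> list[str]:
    """Merge one tool's output into the working context.

    Returns notes about contradictions with earlier observations so the next
    reason step can decide whether to clarify or re-check.
    """
    notes = []
    for key, value in output.items():
        previous = context.get(key)
        if previous is not None and previous["value"] != value:
            notes.append(
                f"conflict on {key}: {previous['value']!r} ({previous['source']}) "
                f"vs {value!r} ({source})"
            )
        # Keep the latest value, but record its source for later explanation.
        context[key] = {"value": value, "source": source}
    return notes


context = {}
observe(context, "ehr", {"A1C": "elevated", "medications": ["metformin"]})
notes = observe(context, "pharmacy", {"medications": ["metformin", "insulin glargine"]})
print(notes)
```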

Repeat or Finish

The final stage is a checkpoint. With the new information in hand, the agent decides whether it has enough to complete the task or whether another loop is needed. If gaps remain, it returns to reasoning with an updated context; if the requirements are met, it concludes and delivers the final response.

This decision is what keeps the loop both efficient and complete. Stopping too early risks giving an incomplete answer, while looping indefinitely wastes resources. By explicitly evaluating its progress, the agent stops at the right moment: as soon as it is confident in its answer, and no later.

In the healthcare example, the agent determines that it now has the patient’s lab data, medical history, and medication profile. It may decide to run a few more loops to cross-check drug interactions, validate the latest treatment guidelines, evaluate lifestyle recommendations, or check for possible adverse reactions to the proposed treatment. Once enough evidence is gathered, it produces an individualized care plan that combines medication adjustments, diet recommendations, and exercise guidance, without further looping.
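The repeat-or-finish checkpoint reduces to a small decision function. This sketch assumes requirements are tracked as a set of named facts and adds a hypothetical `escalate` outcome for when the loop budget runs out or progress stalls, so the agent hands off instead of looping indefinitely.

```python
def decide(known: set[str], required: set[str], loops_used: int,
           max_loops: int = 6, stalled_loops: int = 0) -> str:
    """Checkpoint at the end of an iteration: 'finish', 'continue', or 'escalate'."""
    if required <= known:
        return "finish"      # every requirement is met: deliver the answer now
    if loops_used >= max_loops or stalled_loops >= 2:
        return "escalate"    # out of budget or not making progress: hand off
    return "continue"        # gaps remain: return to the reason step with updated context


required = {"lab_results", "medical_history", "medications", "interaction_check"}
print(decide({"lab_results", "medical_history", "medications"}, required, loops_used=3))  # continue
print(decide(required, required, loops_used=4))                                        # finish
print(decide({"lab_results"}, required, loops_used=6))                                 # escalate
```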

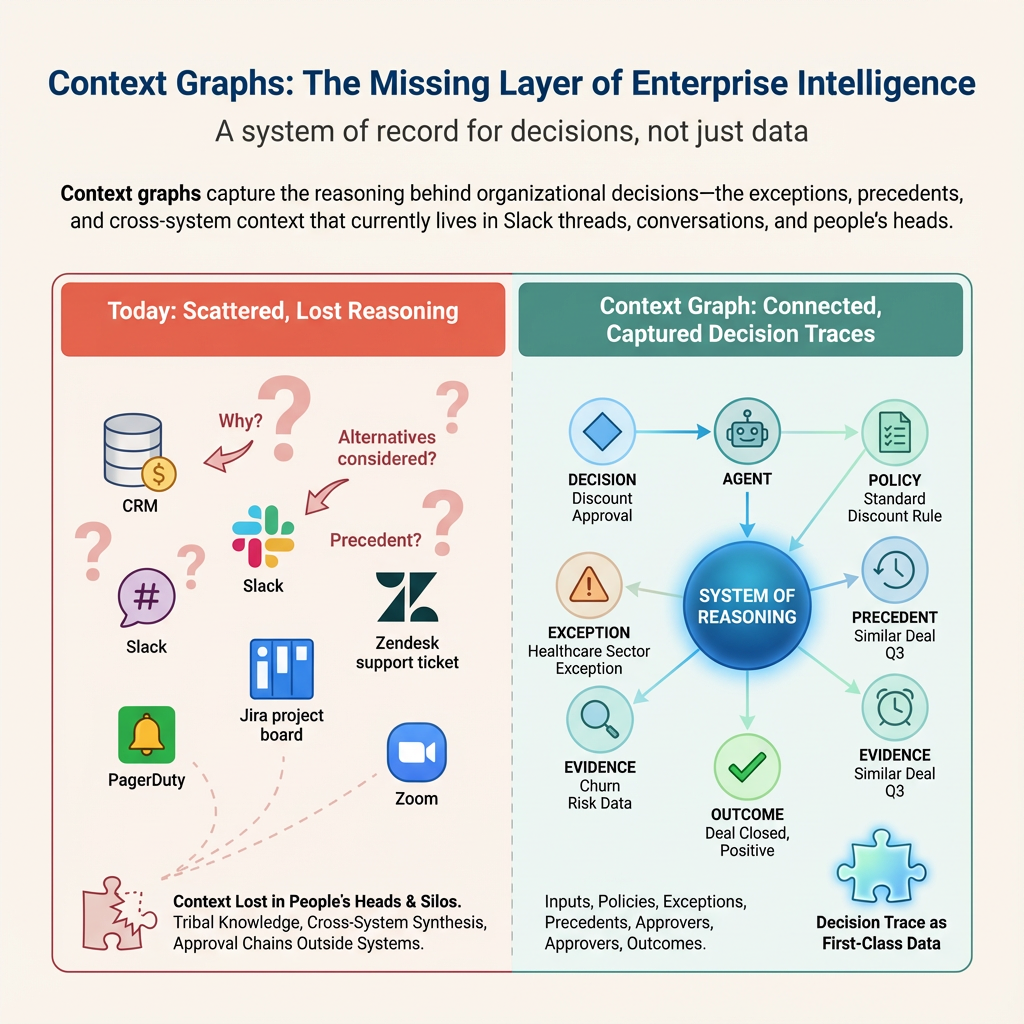

How Knowledge Graphs Provide Context for AI Agents

Knowledge graphs provide the structured memory and reasoning backbone that gives agents the ability to connect facts, reason across tasks, and explain their decisions.

AI agents today can look impressive on the surface. But when you try to build real production-grade agents, one challenge always comes up: context. It’s the single most critical factor in making agents reliable.

Agents need context to work well. Context is everything that gives them “situational awareness” — the tools they can use, the memory of what’s happened so far, their planning ability, and the reasoning “brain” that ties it all together. Without context, an agent is essentially guessing at every step.

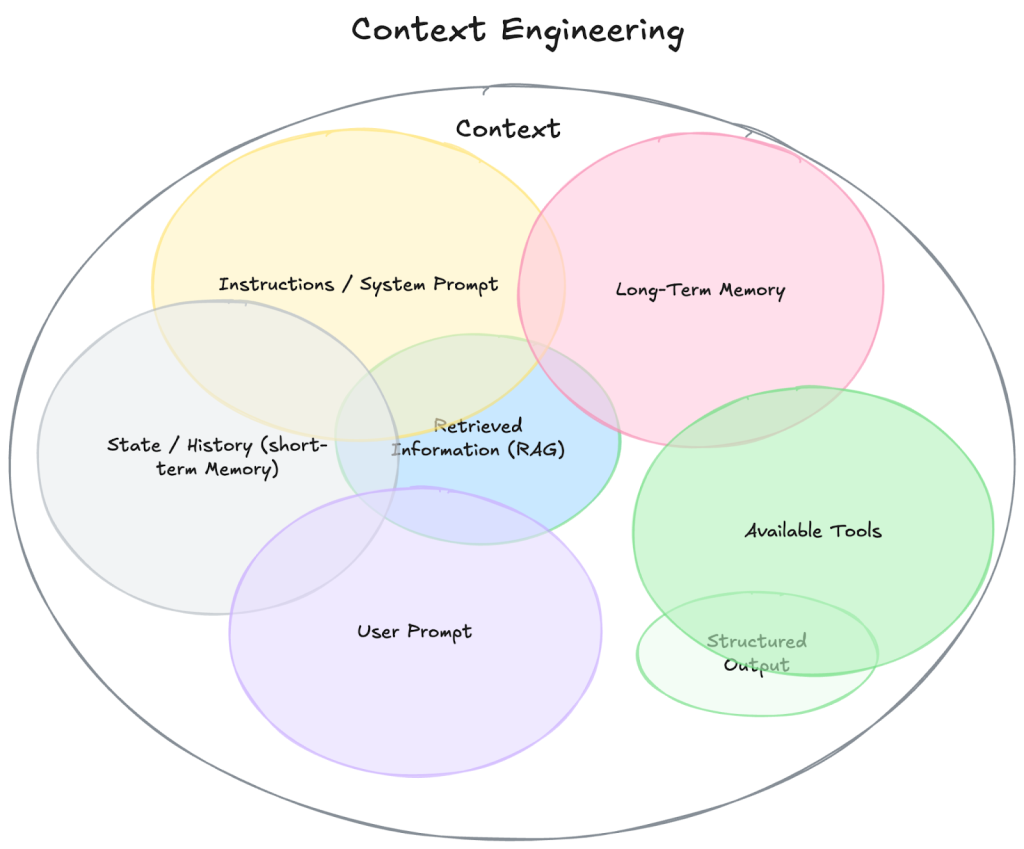

That’s why context engineering has emerged as a new skill for AI developers, much like prompt engineering was a few years ago. It’s about knowing how to give the agent the right information at the right time, both when it’s reasoning and planning what to do next, and when it’s actually executing and generating results.

This discipline of context engineering is quickly becoming a major focus in AI. Developers spend much of their time figuring out how to feed structured data, tool definitions, memory, and retrieved documents into the LLM’s context window — and how to keep that information relevant, fresh, and accurate as the agent moves through its steps.
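A core context-engineering task is deciding what fits in the context window. The sketch below assumes each candidate item carries a priority and a timestamp, and greedily keeps the most important, most recent items within a token budget. Counting words instead of tokens is a crude simplification; a real system would use the model’s tokenizer.

```python
def estimate_tokens(text: str) -> int:
    # Crude stand-in: one token per whitespace-separated word.
    return len(text.split())


def build_context(candidates: list[dict], budget_tokens: int) -> list[str]:
    """Keep the highest-priority, most recent items that fit within the budget."""
    ranked = sorted(candidates, key=lambda c: (c["priority"], c["timestamp"]), reverse=True)
    selected, used = [], 0
    for item in ranked:
        cost = estimate_tokens(item["text"])
        if used + cost <= budget_tokens:  # skip items that would overflow the budget
            selected.append(item["text"])
            used += cost
    return selected


candidates = [
    {"text": "System rule: never share patient identifiers", "priority": 3, "timestamp": 0},
    {"text": "Tool get_lab_results(patient_id) returns recent labs", "priority": 2, "timestamp": 5},
    {"text": "Old chat turn about scheduling a visit last month", "priority": 1, "timestamp": 1},
    {"text": "Latest A1C result retrieved from EHR", "priority": 2, "timestamp": 9},
]
print(build_context(candidates, budget_tokens=20))
```

With a 20-word budget, the stale chat turn is the item that gets dropped.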

Knowledge graphs give agents the structured context to think logically, connect the dots, and explain their actions.

A knowledge graph organizes information as entities and relationships, accommodating rich metadata and providing a context layer that can evolve with the agent as tasks change and data updates in real time.

By querying and retrieving from knowledge graphs through a method called GraphRAG, the agent can perform context engineering effectively, dramatically improving its reasoning and decision-making and reducing the inference errors and hallucinations that purely unstructured retrieval can cause.

Knowledge graphs provide many of the key features in advanced agentic AI systems, including:

- Structured memory: Knowledge graphs provide graph data structures for storing interconnected entities and relationships that serve as the agent’s long-term memory. This goes beyond simple vector stores by capturing complex networks of facts, experiences, and contextual information that the agent can navigate precisely when needed.

- Multi-hop GraphRAG: Knowledge graphs power advanced reasoning capabilities by enabling agents to traverse relationship pathways, retrieve facts, analyze patterns, and draw conclusions based on graph structures. This allows agents to hop through the graph, connecting seemingly unrelated information through intermediate links that would be invisible to traditional agents that rely on unstructured data sources.

- Metadata graphs: By converting source metadata into semantic knowledge graphs, agents gain contextual grounding that helps language models translate natural language into queries. This enables more accurate retrieval and reasoning over underlying data.

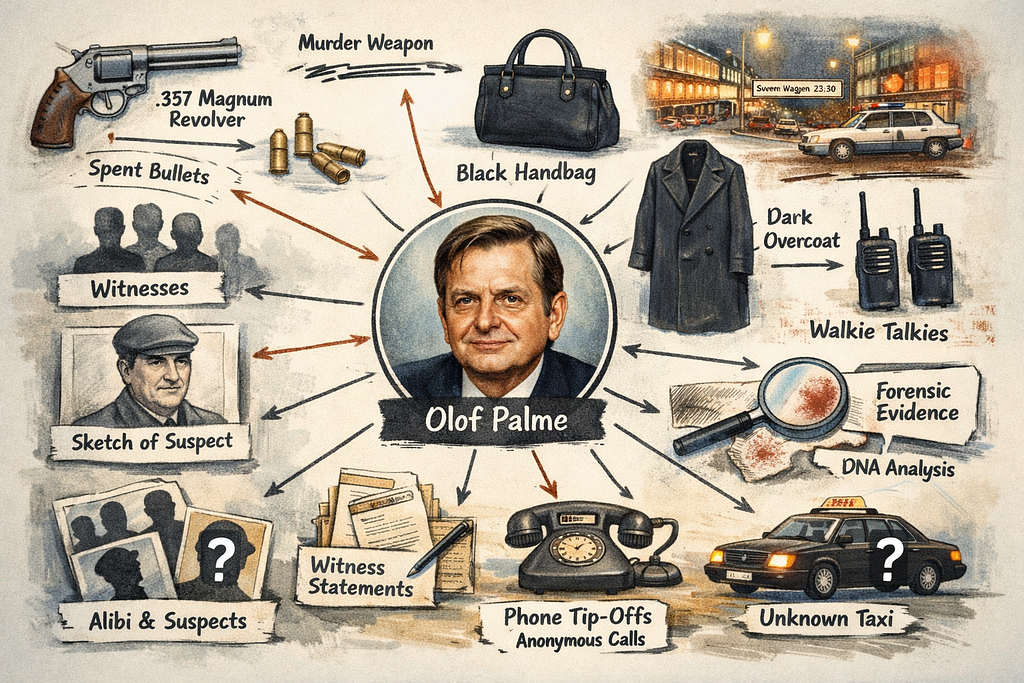

- Explainable decision-making: Graphs allow you to trace decision paths made by agents for debugging and auditing.

- Security and access control: If you’re using Neo4j graph databases, you can even enable role-based permissions that grant agents different levels of access to the knowledge graph, putting guardrails on what data they can retrieve or tamper with.
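Multi-hop retrieval and explainable decision paths can be illustrated without a database: a breadth-first search over a handful of triples returns the chain of relationships linking two entities. The graph contents are illustrative, not clinical data, and the edge labels only imitate Cypher’s arrow notation; in Neo4j you would typically express this as a Cypher variable-length or `shortestPath` pattern instead.

```python
from collections import deque
from typing import Optional

# A tiny knowledge graph as (subject, relationship, object) triples; the data is illustrative.
TRIPLES = [
    ("Patient:42", "TAKES", "Drug:Metformin"),
    ("Patient:42", "HAS_CONDITION", "Condition:Type2Diabetes"),
    ("Drug:Metformin", "TREATS", "Condition:Type2Diabetes"),
    ("Condition:Type2Diabetes", "RAISES_RISK_OF", "Condition:CardiovascularDisease"),
    ("Guideline:CardioMonitoring", "APPLIES_TO", "Condition:CardiovascularDisease"),
]


def neighbors(node: str):
    """Yield (edge label, neighbor) pairs, following relationships in both directions."""
    for subject, rel, obj in TRIPLES:
        if subject == node:
            yield f"-[:{rel}]->", obj
        elif obj == node:
            yield f"<-[:{rel}]-", subject


def find_path(start: str, goal: str, max_hops: int = 4) -> Optional[list[str]]:
    """Breadth-first search returning the shortest chain of relationships between two entities."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        if (len(path) - 1) // 2 >= max_hops:
            continue  # hop limit reached: don't expand this path further
        for edge, neighbor in neighbors(node):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [edge, neighbor])
    return None


path = find_path("Patient:42", "Guideline:CardioMonitoring")
print(" ".join(path))
```

The returned path is both the retrieved context and an explanation of how the agent connected the patient to the guideline, which is what makes it useful for debugging and auditing.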

What Are the Real-World Use Cases of AI Agents?

AI agents are already being deployed across industries in many business applications. They generally take on rote, repetitive work so humans can focus on higher-value responsibilities and act more as supervisors, while teams of agents or digital assistants support employees in their workflows, boosting productivity and efficiency. Here are some of the most common use cases:

- Customer Service: Handle complex support inquiries, process refunds, update accounts, troubleshoot issues, escalate cases, and detect complaint patterns.

- Marketing & Sales: Research prospects, personalize outreach, qualify leads, optimize campaigns, manage content and email sequences, and identify best outreach timing.

- Finance & Accounting: Reconcile complex transactions, flag unusual spending, generate compliance reports, and predict cash flow issues with real-time analysis.

- Human Resources: Screen resumes, conduct initial interviews, create personalized onboarding, manage time-off policies, and identify employee flight risks.

- Coding & Development: Automate routine coding tasks, generate code from prompts, write unit tests, detect bugs/security flaws, and perform multi-step workflows like refactoring and CI/CD integration.

- Healthcare: Analyze patient histories, cross-reference symptoms, suggest treatments, monitor patient progress, and alert providers to risks or interactions.

- Legal: Review agreements, flag risky clauses, suggest safer language, ensure jurisdiction compliance, and maintain regulatory audit trails.

- Supply Chain: Vet suppliers, predict inventory needs, coordinate inspections, troubleshoot production with IoT data, and optimize logistics routes in real time.

What Are the Different Types of AI Agents?

There are five main types of AI agents, classified based on their intelligence level, decision-making process, and how they interact with their environment. They range from simple automated systems with predefined rules all the way to highly autonomous systems that learn and adapt:

- Simple Reflex Agents: Operate on basic if-then rules without memory, responding only to immediate inputs (e.g., a thermostat).

- Model-Based Reflex Agents: Use predefined rules plus memory of past interactions to adapt decisions (e.g., a robot vacuum remembering cleaned areas).

- Goal-Based Agents: Act with explicit objectives, planning and reasoning about actions to achieve a defined goal (e.g., a flight booking agent).

- Utility-Based Agents: Extend goal-based reasoning by weighing trade-offs and choosing the outcome with the highest overall benefit (e.g., a financial trading agent).

- Learning Agents: Continuously improve by adapting to feedback and experience, making them effective in dynamic and changing environments.
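The difference between the first two agent types comes down to whether the agent keeps internal state. Here is a minimal Python sketch using the thermostat and robot vacuum examples from the list; the thresholds and action names are hypothetical.

```python
def thermostat(temperature_c: float, target_c: float = 20.0) -> str:
    """Simple reflex agent: a fixed if-then rule on the current reading, with no memory."""
    if temperature_c < target_c - 0.5:
        return "heat_on"
    if temperature_c > target_c + 0.5:
        return "heat_off"
    return "idle"


class VacuumAgent:
    """Model-based reflex agent: similar rules, plus an internal model of areas already cleaned."""

    def __init__(self) -> None:
        self.cleaned: set[tuple[int, int]] = set()

    def act(self, position: tuple[int, int], is_dirty: bool) -> str:
        if is_dirty:
            self.cleaned.add(position)
            return "suck"
        if position in self.cleaned:
            return "move_to_unvisited"  # memory says this area is done, so don't linger
        self.cleaned.add(position)      # clean on arrival: record it and keep exploring
        return "move_forward"


print(thermostat(18.0))  # heat_on
vacuum = VacuumAgent()
print(vacuum.act((0, 0), is_dirty=True))   # suck
print(vacuum.act((0, 0), is_dirty=False))  # move_to_unvisited
```

Goal-based, utility-based, and learning agents add planning, trade-off scoring, and feedback on top of this, which is where LLM-driven reasoning comes in.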

Can AI Agents Work Together?

In more advanced agentic systems, multiple agents work together. Two of the most common architectures are:

- Multi-agent systems coordinate specialized agents to solve complex problems. Each agent contributes unique capabilities while working toward shared objectives. For example, one agent handles data analysis, another generates reports, and a third manages communications.

- Hierarchical agent systems organize multiple agents in layers with supervisor-subordinate relationships. Higher-level agents delegate tasks to lower-level agents and coordinate their activities, like managers assigning tasks to specialized workers based on workload and experience.
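A hierarchical setup can be sketched as a supervisor that walks through a plan and delegates each subtask to a specialist, passing along the upstream result. The specialist functions, skill names, and plan below are hypothetical placeholders; in a real system each worker would be its own agent, and an LLM would usually produce the plan.

```python
from typing import Callable


# Hypothetical specialist agents. Each takes an instruction plus upstream context;
# in practice each would have its own prompt, model, and tools.
def analyst(instruction: str, context: str) -> str:
    return f"analysis({instruction})"


def reporter(instruction: str, context: str) -> str:
    return f"report({instruction}; based on {context})"


def communicator(instruction: str, context: str) -> str:
    return f"email({instruction}; attaching {context})"


class Supervisor:
    """Higher-level agent that delegates each subtask to the matching specialist."""

    def __init__(self, workers: dict[str, Callable[[str, str], str]]):
        self.workers = workers

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        results: list[str] = []
        for skill, instruction in plan:
            worker = self.workers.get(skill)
            if worker is None:
                # No specialist for this skill: record it for escalation instead of guessing.
                results.append(f"unassigned: {instruction}")
                continue
            upstream = results[-1] if results else ""
            results.append(worker(instruction, upstream))
        return results


supervisor = Supervisor({"analysis": analyst, "reporting": reporter, "communication": communicator})
# A real supervisor would ask an LLM to decompose the goal into this plan.
plan = [("analysis", "Q3 sales"), ("reporting", "Q3 summary"), ("communication", "notify leadership")]
for line in supervisor.run(plan):
    print(line)
```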

The Agent2Agent (A2A) protocol supports these systems by standardizing how agents communicate and collaborate. It provides a common framework that allows diverse agents to coordinate tasks securely and efficiently, enabling seamless interaction across multi-agent and hierarchical setups without the need for custom integrations.

Challenges and Risks of AI Agents

Although agents present significant benefits, they also pose real challenges you need to plan for. If you don’t think through these issues up front, your agentic systems may fail or even face bigger risks:

- Infinite loops: Agents can get stuck calling the same tools repeatedly or running in a loop without making progress. You need circuit breakers and loop detection built in from day one.

- Context hallucination: LLMs hallucinate due to limited training data, a narrow context window, and optimization for helpfulness over accuracy. In agentic use cases, reasoning and planning dominate, and answers are drawn from retrieved data and tool results rather than from the model’s own knowledge, which makes outputs easier to validate against enterprise systems.

- Tool hallucination: Tool hallucinations happen when provided tools are used for the wrong purpose, parameters aren’t provided in the right shape or with the right values, results are ignored, or nonexistent tools are made up. The last is the easiest to catch, because a call to a tool that doesn’t exist fails immediately; the others are trickier to spot and prevent.

- Tool invocation: Invoking untrusted tools with private information can lead to data exfiltration. Agent builders should always use tools from trusted vendors with minimal permissions, give them access only to the data that’s really required, and restrict any mechanisms those tools could use to publish or distribute data externally.

- Bias: Bias can enter through pre-training data, through post-training (especially reinforcement learning from human feedback), and through context guidance at inference time. Agents are no exception. Although agents rely less on the model’s own generated content as the source of answers, their selection of tools, the course of action they plan, and the way they post-process and judge results can all be biased.

- Prompt injection: Because LLMs can’t easily distinguish instructions from data, processing untrusted data sources that contain malicious instructions carries a high risk and can lead to data exfiltration or data loss. Specific agent architectures can partially mitigate this risk, at the cost of higher complexity and effort.

- Computational complexity: Running agents can be resource-intensive. The main cost driver for agents is still LLM inference (tokens in and out). That means the real challenge is controlling inference overhead from long contexts, repeated tool use, or multiple agents running at once.

- Multi-agent dependencies: When you have agents depending on each other, one failure can cascade through your entire system. Production systems have gone down because one agent in a chain hit a rate limit and everything downstream broke.

- Data privacy concerns: Agents often need access to sensitive data to be useful, but they also have memory systems that persist information. Make sure you understand what’s being stored where and for how long.

- Human trust and adoption: The biggest hurdle may not be technical. It might be getting people to trust autonomous systems. Start with low-stakes tasks and build confidence gradually rather than trying to automate everything at once.
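Two of these risks, infinite loops and tool hallucination, can be partly caught in code before a tool call ever runs. The sketch below assumes a hypothetical registry of tools and their required parameter types; it rejects invented tools, missing or mistyped parameters, repeated identical calls, and calls beyond a total budget. The error strings are meant to be fed back to the model so it can correct course.

```python
import json
from collections import Counter
from typing import Optional

# Hypothetical tool registry: tool name -> required parameters and their expected types.
TOOL_SCHEMAS = {
    "get_lab_results": {"patient_id": str},
    "search_guidelines": {"condition": str, "limit": int},
}


class ToolCallGuard:
    """Vets each proposed tool call before it runs: schema checks plus a circuit breaker."""

    def __init__(self, max_identical_calls: int = 2, max_total_calls: int = 10):
        self.max_identical_calls = max_identical_calls
        self.max_total_calls = max_total_calls
        self.calls = Counter()

    def check(self, name: str, args: dict) -> Optional[str]:
        """Return an error to feed back to the model, or None if the call may run."""
        if name not in TOOL_SCHEMAS:
            return f"unknown tool '{name}'"  # invented tool
        schema = TOOL_SCHEMAS[name]
        missing = [p for p in schema if p not in args]
        if missing:
            return f"missing parameters: {missing}"
        wrong = [p for p, expected in schema.items() if not isinstance(args[p], expected)]
        if wrong:
            return f"wrong parameter types: {wrong}"
        # Serialize arguments so identical calls map to the same hashable key.
        key = (name, json.dumps(args, sort_keys=True, default=str))
        if self.calls[key] >= self.max_identical_calls:
            return "loop detected: identical call repeated; change approach or stop"
        if sum(self.calls.values()) >= self.max_total_calls:
            return "circuit breaker: tool-call budget exhausted"
        self.calls[key] += 1
        return None


guard = ToolCallGuard()
print(guard.check("get_patient_vitals", {}))
print(guard.check("search_guidelines", {"condition": "type 2 diabetes"}))
for _ in range(3):
    print(guard.check("get_lab_results", {"patient_id": "42"}))  # None, None, then loop detected
```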

Best Practices for Building AI Agents

Building effective AI agents requires careful design with clear scope, structured planning, and strong context management. By embedding safeguards like validation, logging, and human oversight, agents can remain reliable, safe, and aligned with business goals. For example:

- Narrow scope: Agents shouldn’t be generalists. Give each agent a scope narrow enough to allow clear instructions, a focused tool set, thorough vetting, and valuable outcomes. More complex scenarios or workflows can be handled, if needed, by multi-agent setups.

- High-quality planning guidance: Planning is a crucial step for agents. High-quality guidance — such as memory, metadata, guardrails, policies, and tool dependencies — ensures that agents act consistently, avoid redundant work, and remain aligned with business goals. Well-structured planning also helps agents break down tasks into manageable steps and handle dependencies effectively.

- Structured context management: Agents need robust mechanisms to manage and retrieve context across sessions, tools, and workflows. This includes maintaining relevant history, state, and environmental factors while avoiding overload from irrelevant details. Strong context management with structured knowledge helps agents make coherent decisions, sustain multi-turn interactions, and adapt to evolving situations.

- Validation/Vetting: At least once in the agent loop, there should be a judging and validation step where decisions and context data are vetted and reviewed from a less biased point of view (e.g., with a different model or prompt). This can also be a human, if necessary.

- Activity logging: Log key decisions, tool calls, and failures in a structured way so you can debug issues, audit behavior, and explain outcomes.

- Human-in-the-loop design: Build approval workflows for critical decisions and maintain oversight processes. No matter how good your agent is, humans should be able to step in when needed.

- Interruption capabilities: Agents need graceful shutdown mechanisms. When something goes wrong, you want to be able to stop the agent cleanly without leaving your systems in an inconsistent state.

- Role-based access control: Limit agent permissions to only what they need. Just because an agent can access your entire database doesn’t mean it should.
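Several of these practices (activity logging, human approval for critical actions, and clean interruption) can share one execution wrapper. The sketch below is a minimal illustration: `AgentRunner`, the `CRITICAL_TOOLS` set, and the approval callback are hypothetical, and the runner only records the steps it would execute instead of calling real tools.

```python
import json
import logging
import threading

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("agent")

# Hypothetical actions that always require a human decision.
CRITICAL_TOOLS = {"issue_refund", "delete_record"}


class AgentRunner:
    """Executes planned tool calls with structured logs, approval gates, and a stop switch."""

    def __init__(self, approve):
        self.approve = approve               # callable(tool, args) -> bool, e.g. a review queue
        self.stop_event = threading.Event()  # set from any thread to interrupt the run

    def execute(self, steps: list[tuple[str, dict]]) -> list[str]:
        completed = []
        for tool, args in steps:
            if self.stop_event.is_set():
                self._log("interrupted", tool, args)
                break  # stop between steps, never in the middle of one
            if tool in CRITICAL_TOOLS and not self.approve(tool, args):
                self._log("rejected", tool, args)
                continue
            # A real runner would invoke the tool here; this sketch only records it.
            self._log("executed", tool, args)
            completed.append(tool)
        return completed

    def _log(self, event: str, tool: str, args: dict) -> None:
        # One JSON object per line keeps decisions searchable for debugging and audits.
        log.info(json.dumps({"event": event, "tool": tool, "args": args}))


runner = AgentRunner(approve=lambda tool, args: args.get("amount", 0) <= 100)
steps = [("lookup_order", {"order_id": "A1"}), ("issue_refund", {"order_id": "A1", "amount": 250})]
print(runner.execute(steps))  # ['lookup_order']
```

Checking the stop flag only between steps is what makes the shutdown graceful: a tool call that has started is allowed to finish, so the system is never left halfway through an action.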

What Are the Best AI Agent Frameworks?

Here are some of the most popular open-source frameworks for developing AI agents today:

- LangGraph (or LangChain)

- Google Agent Development Kit (ADK)

- LlamaIndex Agent Workflow

- AWS Strands Agents SDK

- Microsoft AutoGen

- CrewAI

For managed enterprise-ready services, Microsoft Copilot Studio, Google Vertex AI Agent Builder, and Amazon Bedrock Agents are solid options.

You can integrate Neo4j’s knowledge graph capabilities into any of these frameworks through MCP to build powerful AI agents that can read and write knowledge graphs.

The Future of AI Agents

We’re seeing a fundamental shift from using AI as a text-generation tool to agentic systems that can understand our goals and work autonomously to achieve them.

Looking ahead, most traditional business applications will probably evolve into agent workspaces. Instead of fixed user interfaces, users will describe what they want to accomplish, and agents will create the interfaces and workflows needed to complete those tasks.

For developers ready to start building, the opportunity is significant. For organizations willing to invest in this transformation, the competitive advantages will be substantial. The future of AI isn’t just about better models — it’s about better systems that can act, learn, and adapt in the real world.

To make this future possible, agents need to evolve beyond unstructured memory and vector search, which can return related information but struggle to capture logic and relationships. Agents need to be equipped with structured knowledge graphs that enable them to reason with context.

Learn to Develop AI Agents with Knowledge Graphs

Check out our GraphAcademy courses in the AI development roadmap.

Resources

Free books:

- The Developer’s Guide: How to Build a Knowledge Graph

- The Developer’s Guide to GraphRAG

- Essential GraphRAG

Free GraphAcademy courses for AI agent development:

- Neo4j & Generative AI Fundamentals

- Introduction to Vector Indexes and Unstructured Data

- Building Knowledge Graphs with LLMs

- Using Neo4j with LangChain

- Developing with Neo4j MCP Tools

- Building a Neo4j-backed Chatbot Using Python

We have upcoming courses that will cover Building GraphRAG agents with LangGraph, LlamaIndex, Google ADK, and CrewAI, and evaluating GraphRAG with RAGAS. Check out the complete roadmap.

Frequently Asked Questions

Are AI agents the same as chatbots?

No, chatbots are primarily focused on conversation. They’re designed to communicate with humans through text or voice. AI agents are broader, proactive, goal-oriented systems that can plan multi-step tasks and use tools to achieve goals. Conversation might be just one of their capabilities, not necessarily their primary function.

What’s the difference between an AI agent and an LLM?

LLMs generate text responses to prompts, while AI agents use LLMs as their “brain” but add memory, planning, tool access, and goal-oriented behavior to autonomously complete tasks.

Do you need coding skills to build AI agents?

Basic agents can be built using low-code platforms, but sophisticated agents with custom integrations and complex reasoning require development skills.

What do you need to start building AI agents?

Start with LangGraph, access to an LLM (OpenAI, Anthropic, etc.), and integration tools for APIs and databases. For complex memory and retrieval, consider adding a knowledge graph like Neo4j.

Are AI agents safe to use in production?

With proper safeguards such as human oversight, comprehensive logging, access controls, and approval workflows for high-stakes decisions, agents can be deployed safely in production environments.

Do AI agents need internet access?

Not necessarily. Agents can run locally with offline LLMs and local data sources, though cloud-based LLMs and external API integrations obviously require internet connectivity.

Can AI agents learn from experience?

Yes, sophisticated agents can learn from their experiences by storing outcomes in memory systems and adjusting their strategies based on what worked or failed in past interactions.

Do AI agents need knowledge graphs?

Knowledge graphs are not necessary for simple use cases or hobby projects. But they’re essential in mission-critical enterprise applications where agents are required to understand complex relationships, reason with context, and explain their actions.

Can an AI agent use multiple tools and handle different tasks?

Absolutely. Agents are designed to work with multiple tools and can switch between different tasks based on context, though more specialized agents often perform better than highly generalist ones.

What’s the difference between RAG and agent orchestration?

RAG retrieves and augments information for responses, while agent orchestration coordinates multiple agents and tools to execute complex workflows and achieve specific goals autonomously.