AI Ethics: What Is It and Why Should You Pay Attention?

Senior Staff Writer, Neo4j

AI is no longer just a breakthrough technology with tons of potential. It’s helping companies automate complex systems, improve their decision-making, reduce expenses, and make processes safer, fairer, and better able to operate at scale. Business use of AI has become integral across industries, including healthcare, banking, fraud prevention, retail, and manufacturing. It even has a promising application for nuclear power. IDC expects spending on AI solutions to surpass $500 billion worldwide by 2027, and by 2030, the market for AI technologies is expected to top $1.8 trillion.

The Rise of Ethical AI

AI is evolving so rapidly that it’s difficult to keep up with the latest advances, let alone the ethical considerations around its use. Yet this topic is making headlines in the business world because creating and rolling out AI-aided apps involves considerable risk, and companies are becoming aware of a number of possible negative ramifications. If technology leaders don’t handle the use of AI in an ethically sound way, their company’s reputation and bottom line are at risk.

As executives, data scientists, and developers work on AI projects, they must be aware of certain challenges associated with putting this technology into practice and do whatever’s possible to minimize adverse outcomes. They also need to be up to date on recently passed laws that impact companies globally.

In this blog post, we look at the ethical issues associated with AI technology, the latest AI regulations and emerging corporate strategies, and key guidance every company using AI needs to know for managing ethical risk.

What Is AI Ethics?

Classic ethics is a multidisciplinary field geared toward ensuring human safety, security, and well-being, for individuals and for society as a whole. Along those lines, the ethics of AI comprises guiding principles for taking precautions while developing and using AI. Ethical concerns include creating algorithms that treat people fairly, ensuring sound decision-making processes, being able to clearly see and explain how AI arrives at its conclusions, maintaining data accuracy, avoiding the spread of disinformation through generative AI, and keeping people’s sensitive information safe.

Algorithmic Bias

It’s typical to use AI models that learn from historical data; in this case, algorithms ingest past data to analyze patterns and predict potential future outcomes. “Part of the appeal of algorithmic decision-making is that it seems to offer an objective way of overcoming human subjectivity, bias, and prejudice,” points out Harvard professor Michael Sandel.

However, a biased algorithm can lead to an AI system making decisions that are unfair to certain individuals and groups. Why? Because the system can then reflect — and even amplify — real-world biases that exist in data sets and, as a result, unintentionally cause harm such as discrimination. “We are discovering that many of the algorithms that decide who should get parole, for example, or who should be presented with employment opportunities or housing … replicate and embed the biases that already exist in our society,” says Sandel.

Examples of bias perpetuated by AI algorithms have shown up in:

- Job recruitment. Software may reject applicants due to biases against their gender, age, or other characteristics. Amazon discovered that its recruiting software was biased toward men in technical positions; it was partial to terminology on resumes that men more often used, such as “executed.”

- Healthcare treatment. People of color are underrepresented in AI training data sets, and some algorithms have been found to be biased against Black patients.

- Chatbot language. After being introduced on Twitter in 2016 and talking with people, Microsoft’s Tay chatbot started making misogynist and racist comments.

- Social media content. An experiment with new social media accounts, which had no user input that could be used for reference in populating content, found that “social media automatically delivers troubling content to young men, largely without oversight.”

- Law enforcement. Racial bias has been seen with police patrols: some neighborhoods have been unfairly targeted as crime locales.
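One common way to check a system’s outcomes for this kind of skew is to compare how often each group receives a favorable decision. The Python sketch below is a minimal illustration, not a complete fairness audit. The records, the group labels, and the 0.8 threshold (borrowed from the “four-fifths” rule of thumb used in US employment contexts) are assumptions chosen for the example.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system produced a favorable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected at all, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group, was the applicant shortlisted?)
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold; choose one that fits your context
print(rates, round(ratio, 2), flagged)
```

A low ratio does not prove discrimination on its own, but it is a signal that the training data and the model’s features deserve a closer look.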

Lack of Accuracy, Transparency, and Explainability

Accuracy

Is your AI output accurate? Particularly when it comes to generative AI, the answer may not always be a resounding yes. To date, AI-promulgated inaccuracies have ranged from mildly entertaining falsehoods to off-base suggestions, such as when an algorithm comes up with alarming recipes.

If you use generative AI for your business and need to ensure accuracy, a graph database such as Neo4j is a good place to start. A graph can improve data accuracy by adding context and peripheral details that serve to “ground” the information in facts.

Transparency

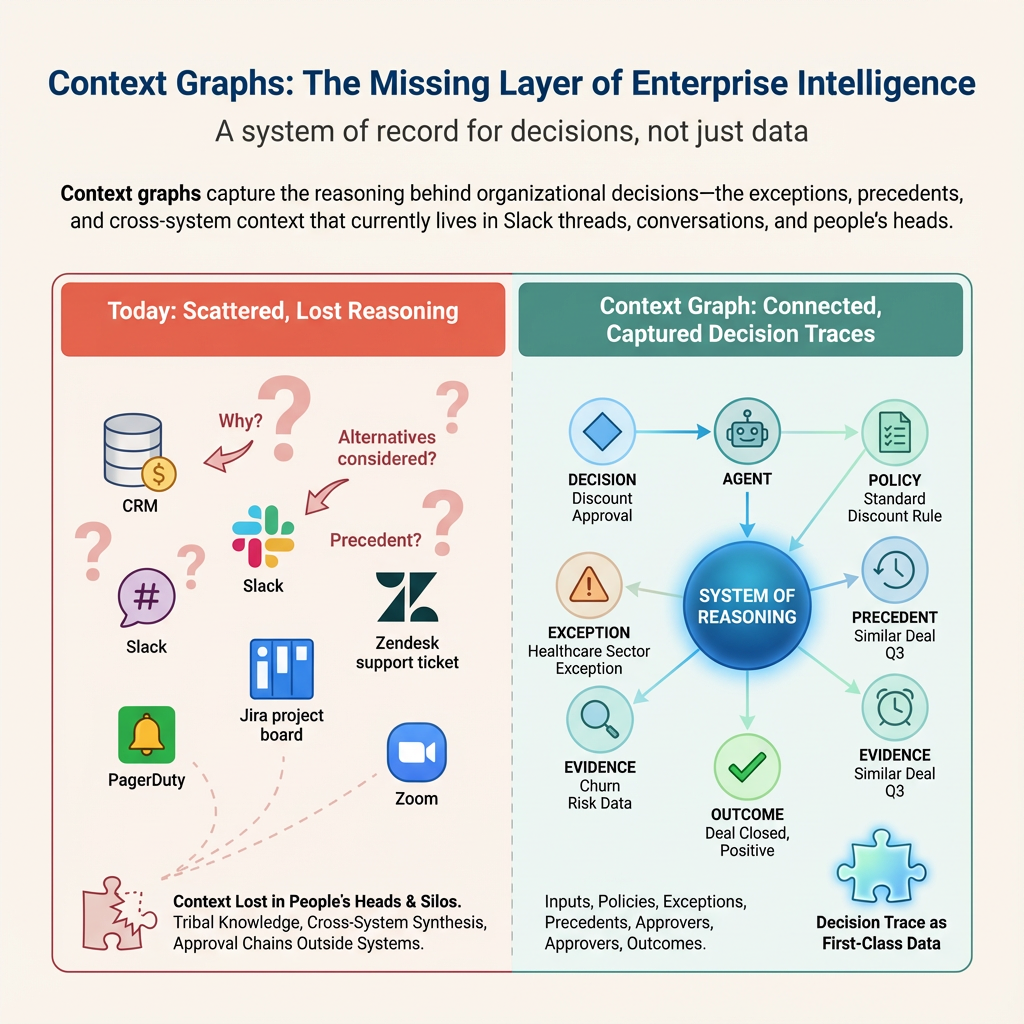

Many AI systems are “black boxes,” meaning that engineers, data scientists, and executives don’t know how AI conclusions are made. Interpretable machine learning that provides transparency upfront is superior to trying to explain what’s in an AI black-box model afterward.

Human-made decisions, even those that don’t prove to be “right,” can often be explained or traced to a decision point, which engenders trust. Similarly, having the ability to interpret a machine learning model’s “thinking” increases trust in the model and also lets it learn from a mistake. In fields such as finance and healthcare, this is essential. Ideally, you know what influences every decision AI makes, and you have the ability to intervene if you suspect problems with the accuracy of an explanation.

One way to build transparency into an AI process is to incorporate the context of data relationships by using a graph database. Peripheral and contextual information facilitates understanding of the logic by giving AI more to work with. Graph databases allow you to identify the data that was used in decision processing, see any patterns in the source data, and catch any sources of bias.
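To make the idea of tracing a decision back to its data more concrete, here is a minimal in-memory sketch in Python. It is not Neo4j code and does not use any Neo4j API. The node names, the `DERIVED_FROM` mapping, and the `trace_sources` helper are all hypothetical, chosen only to show how following relationships can surface the inputs behind a decision.

```python
# Hypothetical provenance graph: each key points to the items it was derived from.
# The sketch assumes the graph has no cycles.
DERIVED_FROM = {
    "decision:loan_denied": ["feature:debt_to_income", "feature:zip_code_risk"],
    "feature:debt_to_income": ["source:credit_bureau_report"],
    "feature:zip_code_risk": ["source:regional_default_table"],
}


def trace_sources(node, graph):
    """Walk the graph depth-first and return every path from `node` to a raw source."""
    parents = graph.get(node, [])
    if not parents:
        return [[node]]  # nothing upstream, so this node is a raw source
    paths = []
    for parent in parents:
        for path in trace_sources(parent, graph):
            paths.append([node] + path)
    return paths


for path in trace_sources("decision:loan_denied", DERIVED_FROM):
    print(" <- ".join(path))
```

A path that runs through something like `feature:zip_code_risk` is the kind of input a reviewer might flag as a possible proxy for a protected characteristic.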

Explainability

Transparency goes hand in hand with AI explainability. It allows you to look back at the “breadcrumbs” that led to an AI decision and explain it in a way that people (for example, customers) can comprehend.

Traditional AI processing doesn’t generally provide explanations, or, if it does at the technical level, the details aren’t easy for humans to decipher. If you’re an executive, you feel the weight of AI decisions nonetheless, not just because of the legal ramifications but because your company prioritizes fairness and ethical standards. And if you’re a regulator, you may both desire and require a crystal-clear explanation.

According to McKinsey, improved AI explainability has led to better adoption of AI. Their research also found that when companies prioritize digital trust for customers, such as by incorporating explainability in algorithmic models, they’re more likely to grow annual revenue by 10 percent or more.

Graph technology can also help with explainability. Graphs can facilitate descriptions of the retrieval logic by showing data sources and illuminating why the system selected the source information.

Privacy Violation

With digital data, the protection of consumer privacy rights may be overlooked or not prioritized. The news is full of alerts about cyber attacks compromising personally identifiable information (PII), such as at Yahoo, where sensitive data in 3 billion user accounts was compromised, releasing people’s names, email addresses, phone numbers, birthdays, and hashed passwords.

Adding insult to injury, a company in this situation typically faces damage to its reputation and a drop in customer trust that can have a far-reaching impact. In Yahoo’s case, Verizon was able to reduce its purchase price by $350 million, while Yahoo also incurred regulatory scrutiny, fines, and lawsuits.

With the advent of ChatGPT, personal data isn’t the only type of information at risk, as anyone — such as employees who don’t realize the possible consequences — can release proprietary corporate data to an AI system. To compound the situation, according to a 2024 survey by Microsoft and LinkedIn, 78% of AI users bring their own AI tools to work, which may violate companies’ tool-use policies.

Once again, a graph database can help a company interested in the ethical use of AI keep data secure, as mandated by laws such as the California Consumer Privacy Act and European data privacy law.

An AI Ethics Boom

According to ScienceDirect, we’re in an “AI ethics boom.” Worldwide, there’s unprecedented demand for AI regulation and guidance. Heading into 2025, that’s abundantly clear as government and intergovernmental entities step up the pace of enacting ethical AI laws.

To date, these AI-related legal milestones have been reached:

- In 2024, the European Union passed the sweeping Artificial Intelligence Act. It affects American companies that have global reach or produce output for the European market, and it spans AI development, use, importation, and distribution. (American companies must also comply with existing laws related to equal employment opportunities.)

- Also in 2024, the National Institute of Standards and Technology (NIST), after soliciting and receiving input from AI-oriented companies including Neo4j, drafted guidelines for the responsible use of AI technology.

- In the absence of formalized US AI ethics laws, in 2023, President Biden put forth the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

- In effect since 2018, Europe’s General Data Protection Regulation (GDPR) was billed as “the toughest privacy and security law in the world.”

- Also passed in 2018, the California Consumer Privacy Act (CCPA) gave California consumers more control over their personal information that’s collected by businesses; it also issued guidance for companies on putting its tenets into effect.

It Takes a (Tech) Village

As companies put AI into real-world use, they’ve realized that the process is complex. When it comes to AI ethics, “leading organizations now realize the need for more systematic approaches,” observes the Institute for Experiential AI. “Deploying AI and, likewise, Responsible AI, demands a strategy that spans across teams and verticals, with new expertise, creative design workflows, and compliance with emerging regulations.”

AI ethics expert Reid Blackman adds: “Companies are quickly learning that AI doesn’t just scale solutions — it also scales risk. In this environment, data and AI ethics are business necessities, not academic curiosities.”

With that awareness, some companies are moving ahead with ethical AI strategies, doing due diligence to operate responsibly and retain trust. Many engineers, data scientists, and product managers know to ask practical, business-relevant, risk-related questions, though they may not always get clear answers. Together, this array of stakeholders can cover most or all necessary bases.

In other organizations, management and employees are still attempting to find their footing in how to implement ethical principles and conform to emerging, evolving standards.

If you’re unsure where to start with AI ethics, here are some tips.

How to Manage AI Risk

When you’re looking for ways to integrate AI in your private-sector business model, managing the potential risks that may surround it means making an effort to protect against human harm on both individual and societal levels. You can minimize risk by creating an AI code of ethics, being proactive, protecting data privacy, designing ethically, and adding context to your data to ensure accuracy, transparency, and explainability.

1. Create an AI Code of Ethics

With responsible AI practices, your efforts should reflect a desire to do the right thing and your inherent values as a company. You can get off to a positive start by creating your own inspired approach to honoring AI ethics.

- Want inspiration? Check out what companies like Indeed and HPE have done

- Create an AI ethics handbook for your employees and associates

- Start with an AI policy statement

- Define the roles and responsibilities for handling AI-related decisions

- Detail requirements for how your AI technology must be deployed and monitored

- Detail how employees can and should guard against bias in machine learning algorithms

- Provide training and continuing education to facilitate compliance

2. Be Proactive

With global and local AI ethics regulations coming more sharply into focus, you don’t want to get bogged down in implementation issues at the last minute. It pays to generate your own momentum and try to anticipate what’s coming. In that spirit, you can:

- Focus on embracing principles as opposed to pushing forward and possibly making avoidable mistakes

- Treat AI as a partner, with humans retaining control, recommends Raj Mukherjee of Indeed, as AI can’t replace human judgment and oversight

- Form a team of business leaders, data scientists, and developers who are committed to jointly adhering to best practices for data use that ensure safe AI deployment

- Be curious: Critically evaluate your AI tools, looking at the data and assumptions behind them, suggests business behavior expert Dr. Diane Hamilton

- Build in ethical parameters on your initiatives early; don’t risk causing problems that could raise red flags and invite investigation

- Survey your systems once they’re up and running to make sure they’re operating in accordance with responsible AI principles and as the latest laws require

3. Keep Your Data Safe

If you use data sets that contain personally identifiable information, you want to double down on keeping that information safe. You’re fulfilling a moral obligation to the customers and stakeholders who trust you, and you can’t afford to lose their data or their (and others’) trust.

- Get informed. Looking for guidance on data safekeeping? Emulate European AI ethics approaches

- Be forthcoming about how you’re using and protecting data, such as by notifying home-page visitors of your policies

- Embed security and privacy measures by using a Neo4j graph database: schema-based security enforces protection, whether you have multiple self-managed graph databases or shards of a large graph in multiple cloud repositories
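As one small, concrete example of protecting data before it leaves your hands, the Python sketch below masks email addresses and US-style phone numbers in text before it is pasted into an external AI tool. It is an illustration under stated assumptions, not a complete PII solution: the two regular expressions and the `redact` helper are hypothetical and deliberately simple, and real PII detection needs to cover far more than these two patterns.

```python
import re

# Deliberately simple patterns for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


message = "Contact Dana at dana@example.com or 555-010-1234 about the renewal."
print(redact(message))
```

Note that the name in the message passes through untouched, which is a reminder that pattern matching alone leaves gaps and that policy and training still matter.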

4. Design Ethically

The best time to consider ethical implications is at the beginning of the development process. You’re less likely to go wrong by creating AI applications from the ground up with fairness in mind and the intention to make the process transparent.

Evaluation: Ask key questions

- What’s the purpose of the AI app — is it truly worth creating?

- For your use case, what principles should you embrace?

- Even if your AI solution is safer than the human version it’s replacing (for instance, an autonomous driving system that still can’t eliminate fatalities), what’s the least damaging option?

- What could conceivably go wrong?

Assessment: Look at your data

- Define fairness as it relates to the app

- How was the training data collected?

- How well does the data set represent the experiences of affected groups?

- Does the data reflect social disparities that could result in unfair conclusions?

- Are you being inclusive? You may need more or different data

- How will you equitably treat different individuals and groups?
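The question of how well a data set represents affected groups can be checked with simple arithmetic. The Python sketch below compares each group’s share of a training set with its share of a reference population. The group names, counts, reference shares, and the 10-point tolerance are all assumptions made up for the example, and `representation_gaps` is a hypothetical helper rather than part of any library.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Compare each group's share of the data set with its reference share.

    Returns {group: sample_share - reference_share}. A negative value means
    the group is underrepresented relative to the reference population.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts[group] / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical training set labels and reference population shares.
training_groups = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5
reference = {"group_a": 0.50, "group_b": 0.30, "group_c": 0.20}

gaps = representation_gaps(training_groups, reference)
tolerance = 0.10  # assumed: flag groups more than 10 points below their reference share
underrepresented = [group for group, gap in gaps.items() if gap < -tolerance]
print(underrepresented)
```

A flagged group is a prompt to collect more or different data, in line with the inclusivity question above.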

Testing: Check your system for safety

- Monitor your end-to-end lifecycle with an AI governance tool that lets you train your AI models, manage the data you use, track your metrics, and apply safeguards to prevent bias

- Be open about the results of your testing

- Adjust as needed, such as to eliminate bias, then retest

5. Add Data Context for Accuracy, Transparency, and Explainability

As companies continue to rely on AI algorithms to make significant business choices, having the ability to produce accurate data, understand decision logic, and clearly explain decisions will build trust and acceptance. If you responsibly create and supervise your machine learning models, you can promote fair choices, objectivity, and inclusivity, making your outcomes robust and trustworthy.

Graph database technology allows you to embrace responsible artificial intelligence practices that reflect societal norms. The way graphs do this best? Adding meaningful context, which:

- Promotes data accuracy through “grounding” data alongside peripheral information

- Enables AI transparency through the tracing of data paths back to sources, providing an understanding of the data used to make decisions and the connections between knowledge and AI responses

- Can improve LLM response accuracy with retrieval augmented generation (RAG) by retrieving relevant source information from external data stores

- Protects against data manipulation and enables verifiability

- Provides explainability by letting you point to source data, resulting data, and what the algorithm did and why

- Lets you identify potential biases, such as inequities caused by collecting data for only one gender, in your existing data, as well as in how you collect new data and train models

- Facilitates making your algorithm fair in the ways it treats individuals and groups

- Lets you document your ethical framework: how you trained the system, your data collection processes, what training data was used, and what went into the algorithm’s recommendations

- Lets you scale data acquisition while seamlessly adapting to changing compliance requirements
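The grounding and source-tracing ideas in this list can be sketched in a few lines of Python. This is a toy stand-in, not a RAG implementation and not Neo4j code: the `FACTS` store, the word-overlap scoring in `retrieve`, and the prompt wording in `build_prompt` are all hypothetical. A real system would use a graph or vector lookup for retrieval and then pass the prompt to an LLM.

```python
import re

# Hypothetical knowledge store: each fact carries the source it came from.
FACTS = [
    {"text": "Order 1042 was shipped on 2024-03-02.", "source": "orders_db"},
    {"text": "Order 1042 contains two items.", "source": "orders_db"},
    {"text": "Refunds are issued within 14 days of a return.", "source": "refund_policy_v3"},
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, facts, top_k=2):
    """Rank facts by the number of words they share with the question."""
    query = tokenize(question)
    scored = [(len(query & tokenize(fact["text"])), fact) for fact in facts]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated facts
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [fact for _, fact in scored[:top_k]]


def build_prompt(question, retrieved):
    """Assemble a prompt that asks the model to answer only from cited context."""
    context = "\n".join(
        f"[{i}] {fact['text']} (source: {fact['source']})"
        for i, fact in enumerate(retrieved, start=1)
    )
    return (
        "Answer using only the numbered context and cite the numbers you used.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


question = "When was order 1042 shipped?"
hits = retrieve(question, FACTS)
print(build_prompt(question, hits))
```

Because every retrieved fact keeps its source label, an answer can be traced back to the records that supported it, which is the property the transparency and explainability points above depend on.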

Adopt Ethical AI Practices with Neo4j

Want to be proactive and create ethically sound applications, ensuring data accuracy, transparency, and explainability? That’s eminently doable with Neo4j graph technology. Contact us to learn more and get started.

For More Information

These organizations provide abundant AI ethics guidance and inspiration:

- AlgorithmWatch: a nonprofit research and advocacy organization that develops “well-founded demands to policymakers, companies, and public authorities”

- AI Now Institute: a nonprofit focused on research about the social implications of AI and responsible AI

- CHAI: The Center for Human-Compatible Artificial Intelligence is focused on promoting trustworthy AI. One project involves testing social-media AI algorithms to reduce polarization and increase news knowledge and feelings of well-being.

- DARPA: The Defense Advanced Research Projects Agency is an arm of the US Department of Defense that promotes explainable AI and AI research

- HAI: Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) promotes best practices for human-centered AI

- ITEC: The Institute for Technology, Ethics and Culture’s focus is creating a roadmap for technology ethics

- NIST: The National Institute of Standards and Technology, an agency of the US Department of Commerce, works to garner trust in AI technologies