Implementing Neo4j GraphRAG Retrievers as MCP Server

Graph ML and GenAI Research, Neo4j

6 min read

Expand your MCP toolbox with VectorCypher search and other GraphRAG retrievers

The Model Context Protocol (MCP) is an open standard that defines how applications provide context to LLMs, enabling them to access external data and functions through standardized tools.

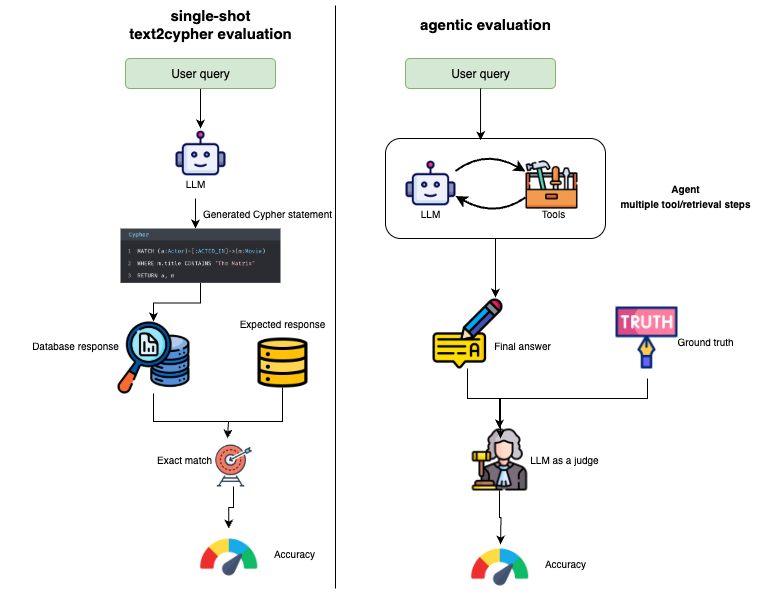

One such MCP server is the MCP Neo4j Cypher server, which enables the agent to query a Neo4j database. This MCP server makes it possible to retrieve database schema information and execute both read and write Cypher queries generated by LLM (Text2Cypher) without needing to learn Cypher syntax directly. In this way, the Text2Cypher approach provides a transparent and effective bridge between natural language and traditional graph queries.

However, vector search introduces a different challenge. Unlike Text2Cypher, vector search does not simply translate natural language into Cypher queries. Instead, it requires generating embeddings for the data and the queries, then comparing them in a vector index. Because of this, the process is less transparent: to interpret results meaningfully, it’s important to know which text embedding model was used to create the embeddings and how queries are matched against them. Designing effective vector search requires not just Cypher generation but also embedding management and careful query formulation.

To address this, Neo4j provides the GraphRAG VectorCypherRetriever, which combines vector search with Cypher. In this blog post, we’ll walk through how to expose the retriever as an MCP server, making it available as an additional tool for our agent.

The MCP code is available on GitHub.

Exposing GraphRAG Retriever as an MCP Server

As mentioned, we need a custom retriever because we also need to generate text embeddings. You might wonder why we don’t simply provide the LLM with an embedding model as a tool and let it combine that with Text2Cypher. The reason is that the only way to pass the output of one tool to another today is through the LLM context itself. But embeddings are high-dimensional vectors, and routing them through the LLM is inefficient and costly in terms of tokens and latency.

As shown above, a better approach is to let the retriever handle the entire workflow, generating embeddings and performing vector search, so that no embeddings ever pass through the LLM and everything is cleanly abstracted behind a single tool interface.

GraphRAG VectorCypher MCP

Now it’s time to implement the retriever. I’m not an expert in MCP frameworks, so I started by copying the structure of the MCP Neo4j Cypher server, which is built with FastMCP, and adapted it.

We need a flexible way to implement our embedding model. To make the VectorCypherRetriever MCP-compatible with any model without needing code modifications, we’ll leverage LangChain’s init_embeddings function. This function uses a simple provider:model string, which makes it incredibly easy to switch between different embedding models:

# Example

embedding_model = init_embeddings("openai:text-embedding-3-small")For the retriever to work, we need to define the following components:

- The Neo4j database connection

- The vector index name

- The Cypher query for retrieval

After configuring these settings, we pass the retriever object to the function that builds the server:

embedding_model = init_embeddings(embedding_model)

driver = GraphDatabase.driver(db_url, auth=(username, password))

retriever = VectorCypherRetriever(

driver,

index_name=index_name,

embedder=embedding_model,

retrieval_query=retrieval_query,

neo4j_database=database

)

mcp = create_mcp_server(retriever, namespace)An MCP server acts as a bridge between an LLM and external functionalities, which are exposed as tools. By defining these tools, the server allows the LLM to request their execution to help answer user queries.

In this case, we’ll expose a single tool: a function that performs a vector search.

@mcp.tool(

name=namespace_prefix + "neo4j_vector",

annotations=ToolAnnotations(

title="Neo4j vector",

readOnlyHint=True,

destructiveHint=False,

idempotentHint=True,

openWorldHint=True,

),

)

async def vector_search(

query: str = Field(..., description="Natural language question to search for.")

) -> list[ToolResult]:

"""Find relevant documents based on natural language input"""

try:

result = vector_retriever.search(query_text=query, top_k=5)

text = "\n".join(item.content for item in result.items)

return ToolResult(content=[TextContent(type="text", text=text)])

except Neo4jError as e:

logger.error(f"Neo4j Error executing read query: {e}\n{query}")

raise ToolError(f"Neo4j Error: {e}\n{query}")

except Exception as e:

logger.error(f"Error executing read query: {e}\n{query}")

raise ToolError(f"Error: {e}\n{query}")In short, the function acts as a semantic search tool for a Neo4j database. When an LLM decides to use this tool, it passes a natural language query to it. The function then uses a vector retriever to find the top five most relevant documents in the database and returns their combined text content to the LLM.

This @mcp.tool decorator is what formally registers the vector_search function as an available tool. A tool’s description is automatically generated from its docstring to provide clear instructions for the LLM. About the tool:

- The tool’s purpose (the “what”): The function’s main docstring becomes the tool’s overall description. This high-level summary tells the LLM what the tool does, helping it decide when to use it.

- The tool’s inputs (the “how”): Each parameter the function requires must also be described. These specific descriptions tell the LLM exactly what information to provide for each input, ensuring that it calls the tool correctly.

Additionally, we can define tool annotations:

name: It gives the tool a unique name.readOnlyHint=True: This is a promise that the tool will only read data and won’t make any changes to the database.destructiveHint=False: Explicitly states the tool is not destructive.idempotentHint=True: This means calling the tool multiple times with the exact same query will yield the same result without causing additional side effects. Searching for “cats” twice produces the same results and doesn’t change anything.openWorldHint=True: This informs the LLM that the tool’s knowledge source (the Neo4j database) can be updated independently. The answer it gets today might be different from the one it gets tomorrow if new data is added.

Testing in Claude Desktop

We can now test the server using the following configuration in the Claude desktop application.

This setup is pre-configured to connect to a public, read-only Neo4j movie recommendations database. You only need to fill in the OPENAI_API_KEY for the text embedding model.

{

"mcpServers": {

"neo4j-dev": {

"command": "uv",

"args": ["--directory", "/path/to/servers/mcp-neo4j-vector-graphrag", "run", "mcp-neo4j-vector-graphrag", "--transport", "stdio", "--namespace", "dev"],

"env": {

"NEO4J_URI": "neo4j+s://demo.neo4jlabs.com:7687",

"NEO4J_USERNAME": "recommendations",

"NEO4J_PASSWORD": "recommendations",

"NEO4J_DATABASE": "recommendations",

"OPENAI_API_KEY": "sk-proj-",

"INDEX_NAME": "moviePlotsEmbedding",

"EMBEDDING_MODEL": "openai:text-embedding-ada-002",

"RETRIEVAL_QUERY": "RETURN 'Title: ' + coalesce(node.title,'') + 'Plot: ' + coalesce(node.plot, '') AS text, {imdbRating: node.imdbRating} AS metadata, score"

}

}

}

}The moviePlotsEmbedding index enables natural language searches across embedded movie plot data. Let’s test it.

Summary

Last year’s retrieval systems aren’t becoming obsolete — they’ve just gotten a new form. In the agentic era, the retrievers are wrapped as modular, efficient, and standardized tools. By abstracting the complexity of embedding generation and vector search behind a clean MCP interface, we empower the LLM to focus on reasoning and orchestration, rather than the low-level mechanics of data retrieval.

The agent doesn’t need to know the intricacies of text-embedding-3-small or the structure of a vector index; it just needs to know it has a powerful semantic search tool it can call upon. You can apply the same principles to expose other GraphRAG retrievers, or any custom data access logic, to create a versatile and powerful toolbox for your agent.

The MCP code is available on GitHub.

Resources

- Free Essential GraphRAG book

- Everything a Developer Needs to Know About the Model Context Protocol (MCP)

- What Is GraphRAG?

Implementing Neo4j GraphRAG Retrievers as MCP Server was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.