Production-Proofing Your Neo4j Cypher MCP Server

Graph ML and GenAI Research, Neo4j


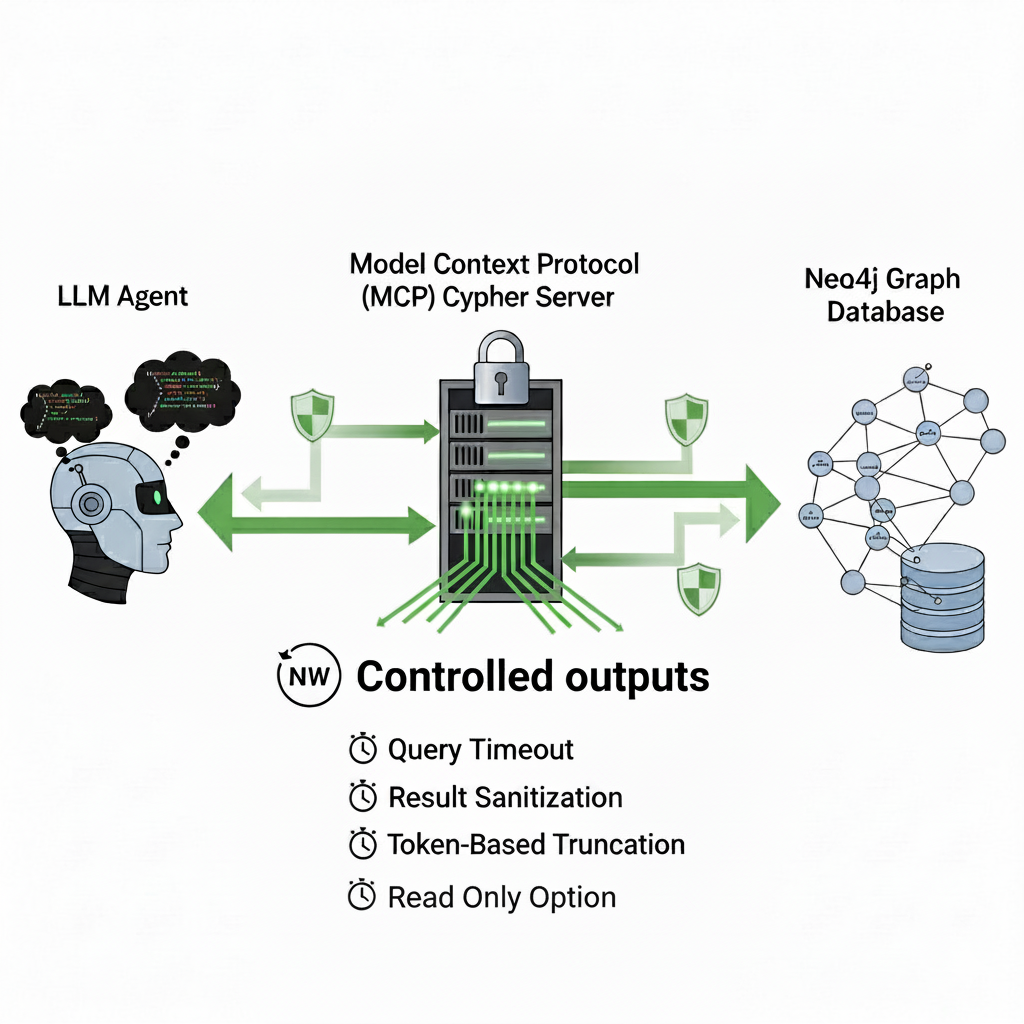

MCP Cypher server introduces token limits, read-only execution, timeouts, and more

Large language models connected to your Neo4j graph through the Neo4j MCP Cypher server gain a great deal of flexibility: They can generate any Cypher query a task calls for. This makes it possible to dynamically generate complex queries, explore database structure, and even chain multi-step agent workflows.

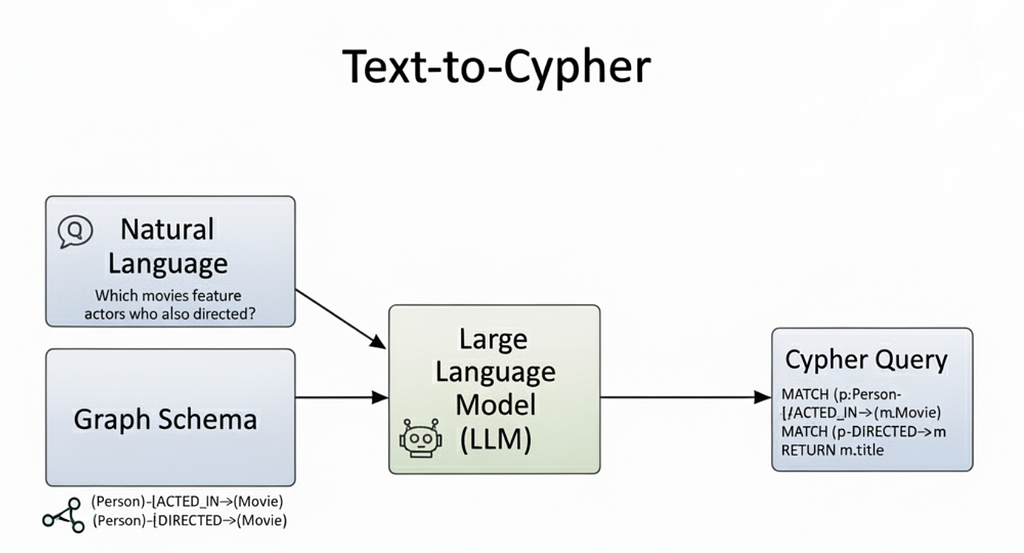

To generate meaningful queries, the LLM needs the graph schema as input: the node labels, relationship types, and properties that define the data model. With this context, the model can translate natural language into precise Cypher, discover connections, and chain together multi-hop reasoning.

For example, if it knows about (Person)-[:ACTED_IN]->(Movie) and (Person)-[:DIRECTED]->(Movie), it can turn “Which movies feature actors who also directed?” into a valid query. The schema gives it the information needed to adapt to any graph and produce Cypher statements that are both correct and relevant.

But this freedom comes at a cost. Left unchecked, an LLM can produce Cypher that runs far longer than intended or returns enormous datasets with deeply nested structures. The result is not just wasted computation but also a serious risk of overwhelming the language model itself. Currently, every tool invocation returns its output through the LLM’s context, so when you chain tools together, all intermediate results must flow back through the model. Feeding thousands of rows or embedding-like values into that loop quickly turns into noise, bloating the context window and degrading the quality of the reasoning that follows.

This is why throttling responses matters. Without controls, the same power that makes the Neo4j MCP Cypher server so compelling also makes it fragile. Crucially, introducing a read-only database user provides a foundational layer of security, preventing the LLM from executing any unwanted write queries that could corrupt the database. By also introducing timeouts, output sanitization, row limits, and token-aware truncation, we can keep the system responsive and ensure that query results stay useful to the LLM instead of drowning it in irrelevant detail.

The server is available on GitHub.

Security Improvements

Let’s first walk through the security improvements introduced in recent versions of the server.

👓 Read-Only Mode

The freedom for an LLM to generate any Cypher query is powerful, but it also creates a significant risk: The model could inadvertently generate a query that modifies or even deletes critical data. For production environments, preventing unwanted database writes is a non-negotiable requirement. You enable this layer of security by setting the NEO4J_READ_ONLY environment variable to true. When this mode is active, the server does not expose the tool responsible for write operations at all.

This simple but effective constraint ensures that the LLM can only act as an observer, safely exploring and retrieving data without any possibility of altering the state of the graph.
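The effect of this flag on the tool list can be sketched as follows. This is a minimal illustration, not the server’s source: the tool names are assumed for illustration, and env_flag and exposed_tools are hypothetical helpers that assume common truthy spellings such as "true" or "1".

```python
import os

# Hypothetical tool names, assumed for illustration only.
SCHEMA_AND_READ_TOOLS = ["get_neo4j_schema", "read_neo4j_cypher"]
WRITE_TOOLS = ["write_neo4j_cypher"]


def env_flag(name: str, default: bool = False) -> bool:
    """Treat "true", "1", or "yes" (any case) as enabled; unset means default."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"true", "1", "yes"}


def exposed_tools() -> list[str]:
    """List the tools to register; read-only mode never registers write tools."""
    tools = list(SCHEMA_AND_READ_TOOLS)
    if not env_flag("NEO4J_READ_ONLY"):
        tools.extend(WRITE_TOOLS)
    return tools
```

Because the write tool is never registered, the model cannot discover or call it. A database user with only read privileges, as mentioned earlier, adds a second line of defense: a write statement that reached Neo4j through some other path should still be rejected by the database.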

🛡️ DNS Rebinding Protection

To prevent DNS rebinding attacks, the server uses TrustedHostMiddleware to validate all incoming Host headers. This ensures that only authorized domains can access the server. Benefits:

- Secure by default: Out of the box, only requests to localhost and 127.0.0.1 are permitted. This default configuration prevents malicious websites from tricking a user’s browser into accessing your local server instance.

- Configuration: You can easily extend this protection to include your own trusted domains by setting an environment variable.
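To make the mechanism concrete, here is a minimal, stdlib-only sketch of the kind of Host-header check that TrustedHostMiddleware performs. It is a simplification, not Starlette’s implementation: allowed_hosts and is_trusted_host are hypothetical names, IPv6 literals are not handled, and it assumes a non-empty NEO4J_MCP_SERVER_ALLOWED_HOSTS value replaces the localhost defaults rather than adding to them (the full configuration later in this post lists localhost and 127.0.0.1 explicitly, which fits that reading).

```python
import os

DEFAULT_ALLOWED_HOSTS = ["localhost", "127.0.0.1"]


def allowed_hosts() -> list[str]:
    """Parse NEO4J_MCP_SERVER_ALLOWED_HOSTS as a comma-separated list.

    Assumption: a non-empty value replaces the defaults.
    """
    raw = os.environ.get("NEO4J_MCP_SERVER_ALLOWED_HOSTS", "")
    hosts = [h.strip() for h in raw.split(",") if h.strip()]
    return hosts or list(DEFAULT_ALLOWED_HOSTS)


def is_trusted_host(host_header: str, allowed: list[str]) -> bool:
    """Accept a request only if its Host header, minus any port, is allow-listed."""
    # "localhost:8000" -> "localhost"; IPv6 literals such as "[::1]" are not handled.
    hostname = host_header.split(":")[0].strip().lower()
    return hostname in {h.lower() for h in allowed}
```

In a DNS rebinding attack, the attacker’s domain is re-resolved to 127.0.0.1, but the browser still sends that domain in the Host header. With the defaults, is_trusted_host("attacker.example:8000", allowed_hosts()) returns False, so the request is rejected before it reaches any tool.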

```shell
NEO4J_MCP_SERVER_ALLOWED_HOSTS="example.com,www.example.com"
```

🌐 CORS Protection

By default, the server enforces a strict Cross-Origin Resource Sharing (CORS) policy. This blocks all browser-based requests originating from a different domain, protocol, or port, effectively preventing unauthorized web applications from interacting with the MCP server. Details:

- Configuration: To allow specific web applications to communicate with the server, you can add their origins to an allow list using an environment variable.
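The allow-list decision itself fits in a few lines. This is a simplified illustration, not the server’s code or Starlette’s CORSMiddleware: it covers only whether to send Access-Control-Allow-Origin for a simple request and ignores preflight (OPTIONS) handling; allowed_origins and cors_headers are hypothetical names.

```python
import os
from typing import Optional


def allowed_origins() -> set[str]:
    """Parse NEO4J_MCP_SERVER_ALLOW_ORIGINS; empty by default, so no origin is allowed."""
    raw = os.environ.get("NEO4J_MCP_SERVER_ALLOW_ORIGINS", "")
    # Origins are compared as scheme://host[:port]; drop a trailing slash if present.
    return {o.strip().rstrip("/") for o in raw.split(",") if o.strip()}


def cors_headers(origin: Optional[str], allowed: set[str]) -> dict[str, str]:
    """Return CORS response headers for a request's Origin header.

    Without Access-Control-Allow-Origin, the browser withholds the response
    from a cross-origin page.
    """
    if origin is not None and origin in allowed:
        # Vary: Origin keeps caches from reusing this response for other origins.
        return {"Access-Control-Allow-Origin": origin, "Vary": "Origin"}
    return {}
```

Because an origin is the scheme, host, and port together, http://app.example.com does not match an allow-list entry of https://app.example.com.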

```shell
NEO4J_MCP_SERVER_ALLOW_ORIGINS="https://app.example.com,https://www.example.com"
```

Controlled Outputs

So how do we prevent runaway queries and oversized responses from overwhelming our LLM? The answer is not to limit what kinds of Cypher an agent can write, because the whole point of the Neo4j MCP server is to expose the full expressive power of the graph. Instead, we place smart constraints on how much comes back and how long a query is allowed to run. In practice, that means introducing three layers of protection: timeouts, result sanitization, and token-aware truncation.

Controlling Execution Time With Timeouts

An LLM has no inherent concept of computational cost, meaning it can inadvertently generate a runaway query — like a massive Cartesian product or a deep traversal across millions of nodes — that could stall the entire system. The most fundamental safeguard against this is to enforce a strict time budget on every query.

This is managed through the NEO4J_READ_TIMEOUT environment variable, which defaults to 30 seconds. If a query exceeds this limit, it’s terminated immediately. This “fail-fast” approach ensures that a single expensive operation won’t hang the workflow, allowing the system to remain responsive and stable.
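One way to apply such a budget with the official Neo4j Python driver is to attach a transaction timeout to each query through neo4j.Query. The sketch is synchronous and assumes a driver created with GraphDatabase.driver; it is not the server’s actual code (which may use the async driver), and read_timeout_seconds and run_read_query are hypothetical helpers.

```python
import os
from typing import Any, Optional


def read_timeout_seconds(default: float = 30.0) -> float:
    """Read NEO4J_READ_TIMEOUT in seconds, falling back to the 30-second default."""
    raw = os.environ.get("NEO4J_READ_TIMEOUT")
    if not raw:
        return default
    value = float(raw)
    if value <= 0:
        raise ValueError("NEO4J_READ_TIMEOUT must be a positive number of seconds")
    return value


def run_read_query(driver: Any, cypher: str, params: Optional[dict] = None,
                   database: Optional[str] = None) -> list[dict]:
    """Run a read query in an auto-commit transaction bounded by the timeout."""
    # Imported here so the helper above works even without the neo4j package.
    from neo4j import READ_ACCESS, Query

    query = Query(cypher, timeout=read_timeout_seconds())
    with driver.session(database=database, default_access_mode=READ_ACCESS) as session:
        # If the transaction exceeds the timeout, the database terminates it and
        # the driver raises an exception instead of returning rows.
        return session.run(query, params or {}).data()
```

For example, given driver = GraphDatabase.driver("bolt://localhost:7687", auth=("neo4j_reader", "<your-secure-password>")), calling run_read_query(driver, "MATCH (m:Movie) RETURN m.title AS title LIMIT 5") returns plain dictionaries. Because the timeout is a transaction setting enforced by the database, the expensive work stops on the server as well, not just on the client.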

Sanitizing Noisy Values

Modern graphs often attach embedding vectors to nodes and relationships. These vectors can contain hundreds or even thousands of floating-point numbers per entity. They’re essential for similarity search, but when passed into an LLM context, they’re pure noise. The model can’t reason over them directly, and they consume a large number of tokens.

To solve this, we recursively sanitize results with a simple Python function: oversized lists are dropped, nested dictionaries are pruned the same way, and only lists within a reasonable bound (by default, fewer than 128 items) are preserved.
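A minimal version of that idea might look like the following. It is an approximation written for illustration, not the server’s exact function: sanitize and sanitize_records are hypothetical names, and it assumes results arrive as a list of plain dictionaries (for example, from the driver’s Result.data()). Sanitizing per record means the list of rows itself is never discarded; only oversized lists nested inside a row are.

```python
from typing import Any

DEFAULT_LIST_LIMIT = 128  # lists with at least this many items are treated as noise


def _is_oversized(value: Any, list_limit: int) -> bool:
    return isinstance(value, list) and len(value) >= list_limit


def sanitize(value: Any, list_limit: int = DEFAULT_LIST_LIMIT) -> Any:
    """Recursively drop oversized lists (such as embedding vectors) nested in a value."""
    if isinstance(value, dict):
        return {
            key: sanitize(item, list_limit)
            for key, item in value.items()
            if not _is_oversized(item, list_limit)  # drop the key entirely
        }
    if isinstance(value, list):
        return [
            sanitize(item, list_limit)
            for item in value
            if not _is_oversized(item, list_limit)
        ]
    return value  # scalars (strings, numbers, None) pass through unchanged


def sanitize_records(records: list[dict], list_limit: int = DEFAULT_LIST_LIMIT) -> list[dict]:
    """Sanitize each result row; the number of rows is left untouched."""
    return [sanitize(record, list_limit) for record in records]
```

For a movie row carrying a 1,536-dimension embedding property, the title and short lists such as genres survive while the vector disappears.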

Token-Aware Truncation: Guaranteeing a Manageable Output

A Cypher query can easily return results that are too verbose for an LLM’s finite context window, especially when records contain long text properties or complex nested data. To manage this, the server relies on token-aware truncation as the primary safeguard for controlling output size.

Before returning any result, the server uses a tokenizer, specifically OpenAI’s tiktoken library, to measure its length in tokens. The maximum token count is configured with the NEO4J_RESPONSE_TOKEN_LIMIT environment variable; if the result exceeds this limit, it’s truncated. This safeguard ensures that the data passed back to the model is always a manageable size, preventing context overflow and preserving the integrity of the agent’s workflow.
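The sketch below separates the truncation logic from the tokenizer so the idea is visible on its own. It assumes the response is serialized to JSON before counting and uses tiktoken’s cl100k_base encoding as an assumed default; truncate_to_tokens, tiktoken_codec, and format_response are hypothetical names, and the server’s own implementation may differ in these details.

```python
import json
from typing import Callable, Optional

Encoder = Callable[[str], list[int]]
Decoder = Callable[[list[int]], str]


def truncate_to_tokens(text: str, token_limit: int, encode: Encoder, decode: Decoder) -> str:
    """Return text unchanged if it fits; otherwise decode only its first token_limit tokens."""
    tokens = encode(text)
    if len(tokens) <= token_limit:
        return text
    return decode(tokens[:token_limit])


def tiktoken_codec(encoding_name: str = "cl100k_base") -> tuple[Encoder, Decoder]:
    """Build encode/decode callables backed by OpenAI's tiktoken (must be installed)."""
    import tiktoken  # imported lazily so the rest of the module works without it

    enc = tiktoken.get_encoding(encoding_name)

    def encode(text: str) -> list[int]:
        # Treat special-token strings found in the data as plain text instead of raising.
        return enc.encode(text, disallowed_special=())

    return encode, enc.decode


def format_response(rows: list[dict], token_limit: Optional[int],
                    encode: Encoder, decode: Decoder) -> str:
    """Serialize rows to JSON, then enforce the token budget if one is configured."""
    text = json.dumps(rows, default=str)  # default=str covers dates and other non-JSON types
    if token_limit is None:
        return text
    return truncate_to_tokens(text, token_limit, encode, decode)
```

In use, encode, decode = tiktoken_codec() followed by format_response(rows, 8000, encode, decode) caps the payload at 8,000 tokens. One consequence of cutting at a token boundary is that the truncated string is generally no longer valid JSON, so the model receives a prefix of the data rather than a well-formed document.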

Tying It Together

With these layers combined (read-only mode, DNS rebinding protection, CORS policies, timeouts, sanitization, and truncation), the Neo4j MCP Cypher server remains fully capable but far more disciplined. The LLM can still attempt any query it needs, but the responses are always bounded, secure, and context-friendly.

Instead of flooding the model with overwhelming amounts of data or risking unintended database modifications, you return just enough structure to keep it smart and safe. This disciplined approach transforms the server from a powerful but potentially risky tool into one that feels purpose-built for production LLM workflows.

Here’s a complete configuration with all security and performance controls enabled:

```json
{
  "mcpServers": {
    "neo4j-database": {
      "command": "uvx",
      "args": ["mcp-neo4j-cypher@0.4.1", "--transport", "stdio"],
      "env": {
        "NEO4J_URI": "bolt://localhost:7687",
        "NEO4J_USERNAME": "neo4j_reader",
        "NEO4J_PASSWORD": "<your-secure-password>",
        "NEO4J_DATABASE": "production",
        "NEO4J_READ_ONLY": "true",
        "NEO4J_READ_TIMEOUT": "30",
        "NEO4J_RESPONSE_TOKEN_LIMIT": "8000",
        "NEO4J_MCP_SERVER_ALLOWED_HOSTS": "localhost,127.0.0.1,api.yourcompany.com",
        "NEO4J_MCP_SERVER_ALLOW_ORIGINS": "https://app.yourcompany.com,https://dashboard.yourcompany.com"
      }
    }
  }
}
```

Summary

The result of all of this is a server that gives LLMs the freedom to explore your graph database intelligently while staying safe, responsive, and production-ready.


Production-Proofing Your Neo4j Cypher MCP Server was originally published in Neo4j Developer Blog on Medium.