Why I’m Replacing My RAG-Based Chatbot With Agents

Developer Experience Engineer at Neo4j

“The rumors of RAG’s demise have been greatly exaggerated.”

That was the response from Alexy Khrabrov, Neo4j’s eyes and ears on the ground in San Francisco, when I asked him whether I should be worried about our GenAI curriculum on GraphAcademy.

That said, public opinion has turned on retrieval-augmented generation (RAG), and I can’t move for thought pieces on how RAG is dead. I see this as mostly hyperbole, but I do think the position of RAG within a GenAI stack is changing.

Breaking With Traditions

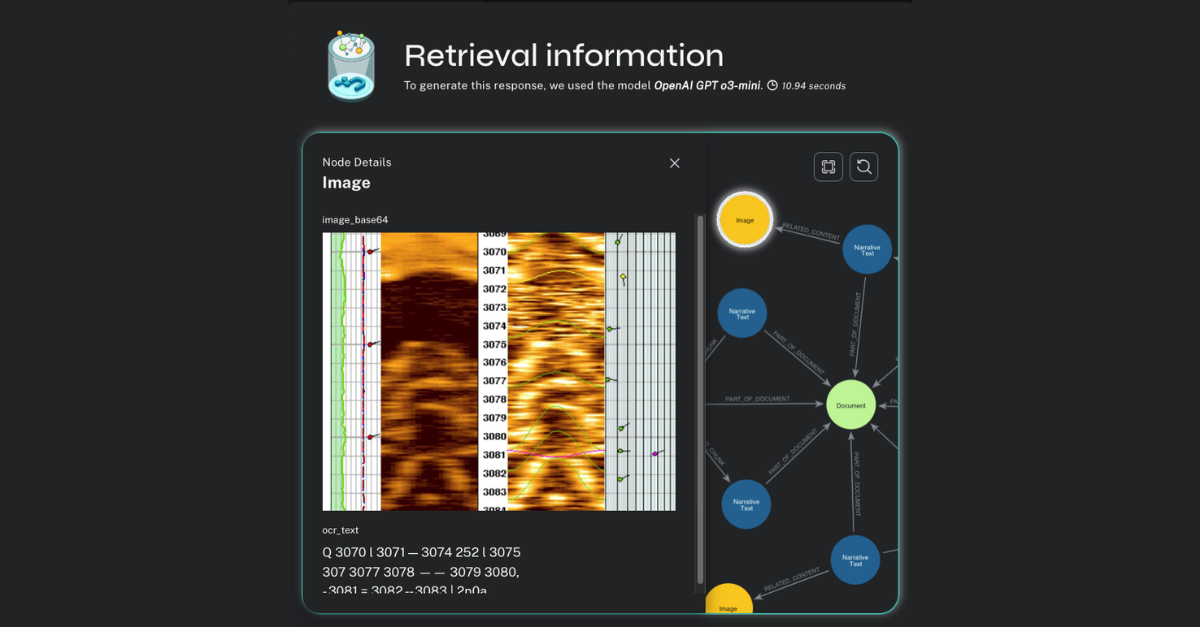

As LLMs improve their ability to follow instructions and as context windows grow larger, the “traditional” process of chunking documents, creating embeddings, and using vector-based semantic search to find similar results is starting to look like using a sledgehammer to crack a nut.
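For reference, the traditional pipeline described above can be sketched in a few lines. This is a minimal, self-contained illustration only. It assumes a bag-of-words term-frequency vector as a stand-in for a real embedding model, and a linear scan in place of a vector index such as Neo4j's. The function names `chunk`, `embed`, `cosine`, and `search` are hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, stop, step)]


def embed(text: str) -> Counter:
    """Stand-in embedding (assumption): term frequencies of lower-cased tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (a linear scan, not an index)."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


docs = [
    "Neo4j stores data as nodes and relationships.",
    "Vector indexes support similarity search over embeddings.",
    "Cypher is the query language for Neo4j.",
]
print(search("How do vector indexes work?", docs, k=1))
# ['Vector indexes support similarity search over embeddings.']
```

Every stage here (chunk size, overlap, embedding model, similarity metric) is a separate tuning decision, which is part of why the pipeline can feel heavyweight for small, well-structured content.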

As the industry evolves, new techniques keep emerging to solve the problems GenAI poses. For example, the same LLMs that excel at text summarization can be used to create an llms.txt file: a summary short enough to fit within a context window that gives the LLM the succinct instructions it needs to solve a task. Given a list of these files and their titles, an LLM is more than capable of selecting the right one from within its context, without needing an external data source.
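Selecting a file from within the context can be as simple as listing the titles in the prompt and asking the model to choose. The sketch below assumes a generic text-in, text-out model call, which is not shown. It only builds the prompt and parses the model's reply. The titles and helper names are hypothetical.

```python
import re
from typing import Optional


def build_selection_prompt(question: str, titles: list[str]) -> str:
    """List the available llms.txt files by number and ask the model to pick one."""
    numbered = "\n".join(f"{i}. {title}" for i, title in enumerate(titles, start=1))
    return (
        "The following llms.txt files are available:\n"
        f"{numbered}\n\n"
        f"Question: {question}\n"
        "Reply with the number of the single most relevant file."
    )


def parse_selection(reply: str, titles: list[str]) -> Optional[str]:
    """Map the model's reply back to a title, or None if it is not a valid choice."""
    match = re.search(r"\d+", reply)
    if match is None:
        return None
    index = int(match.group()) - 1
    return titles[index] if 0 <= index < len(titles) else None


titles = ["Neo4j Python driver", "Cypher query language", "Graph data modeling"]
print(build_selection_prompt("How do I write a MATCH clause?", titles))
# The chosen file's full text would then be added to the context for the next call.
print(parse_selection("2", titles))  # Cypher query language
```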

So while RAG certainly isn’t dead, the conversation has changed, both literally and figuratively.

Our RAG-Based Chatbot

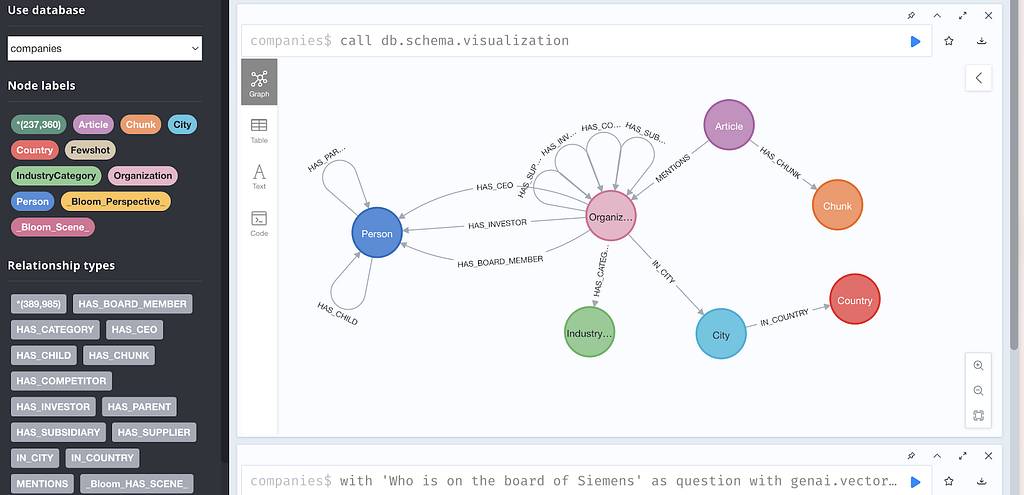

Like many, we were eager to jump on the bandwagon, releasing an educational chatbot on GraphAcademy in 2023. The chatbot used Neo4j’s vector indexes to look up relevant results and generate a reasonable answer for the user. This solved the problem of static information retrieval and grounded the LLM’s responses in real information, but it still fell short in several respects.

If you’ve used GraphAcademy for any length of time, you may have noticed intermittent issues with our integrated query window. This can happen for many reasons: The server may still be provisioning the Neo4j instance, the browser may be blocking a port, or there may be a problem within the instance itself.

This topic came up often in the conversation history, and while semantic search could surface a document with potential tips to resolve the problem, it couldn’t say for sure what the problem was.

Another key source of frustration was our hands-on challenges. Throughout our courses, we challenge learners to get hands-on, performing actions like creating and deleting nodes. The GraphAcademy website then connects to the database and runs a Cypher statement to verify that the challenge has been completed successfully.

For all of the reasons above and more, a learner may not be able to complete the challenge, or we may not be able to verify that they have from within the website.
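Verification of this kind typically comes down to a read-only Cypher query whose result says whether the learner's change is present. A minimal sketch follows. The challenge, the `outcome` column, and the `run_query` callable are hypothetical. The stub runner stands in for a real database call; with the official Neo4j Python driver, `run_query` could wrap `driver.execute_query`.

```python
from typing import Any, Callable

# Hypothetical check for a "create a (:Person {name: 'Alice'}) node" challenge.
VERIFY_PERSON_CREATED = """
MATCH (p:Person {name: $name})
RETURN count(p) > 0 AS outcome
"""

# A callable that runs Cypher with parameters and returns rows as dictionaries.
QueryRunner = Callable[[str, dict[str, Any]], list[dict[str, Any]]]


def verify_challenge(run_query: QueryRunner, query: str, params: dict[str, Any]) -> bool:
    """Return True only if the verification query reports a truthy `outcome`."""
    rows = run_query(query, params)
    return bool(rows) and bool(rows[0].get("outcome"))


def stub_runner(query: str, params: dict[str, Any]) -> list[dict[str, Any]]:
    """Pretend database in which only Alice has been created."""
    return [{"outcome": params.get("name") == "Alice"}]


print(verify_challenge(stub_runner, VERIFY_PERSON_CREATED, {"name": "Alice"}))  # True
print(verify_challenge(stub_runner, VERIFY_PERSON_CREATED, {"name": "Bob"}))    # False
```

A check like this only reports pass or fail. It cannot say *why* a learner's attempt failed, which is the gap described above.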

From Knowing to Doing

Modern agent architectures let us solve these problems and more by giving an agent a list of tools that enable it to act on the user’s behalf. The agent acts as an additional interface on top of the data: it triggers functionality that already exists on the website when needed and gives the underlying LLM the context it needs to provide additional support.

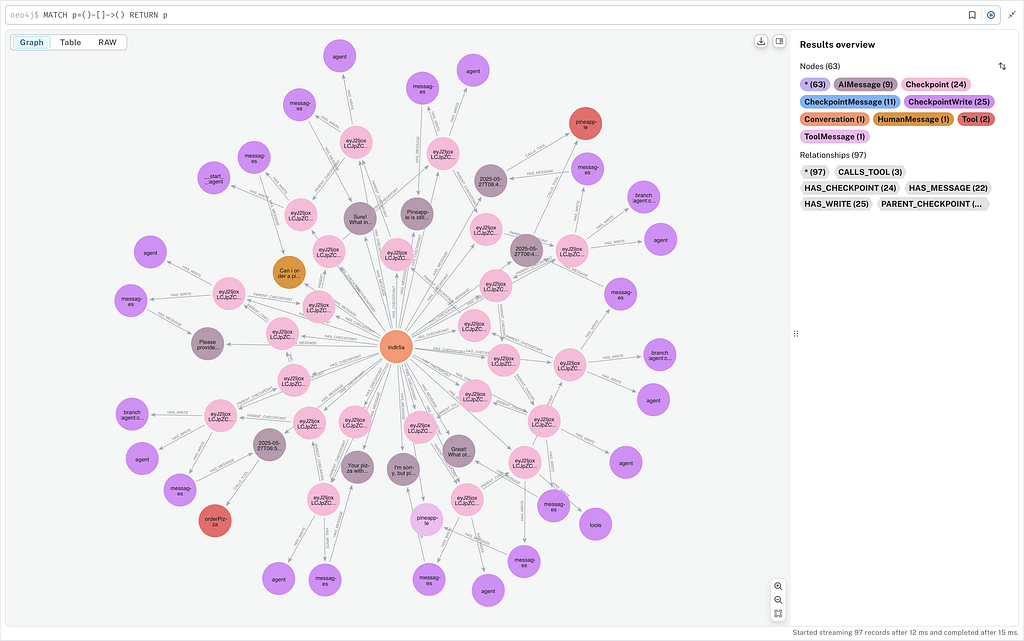

The most common pattern to solve open-ended problems is ReAct: Reason about the problem and use tools to act. The agent will continue in a loop, calling tools as necessary, until the task is complete.
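The loop itself is small. The sketch below assumes a model call that returns either a tool request or a final answer, represented by a hypothetical `Step` record. In practice that decision comes from an LLM, so a scripted stand-in is used here. The tool name `get_instance_status` is also hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One model turn: a thought plus either a tool call or a final answer."""
    thought: str
    action: Optional[str] = None
    action_input: Optional[dict] = None
    answer: Optional[str] = None


def react(llm: Callable[[list[str]], Step], tools: dict[str, Callable[..., str]],
          question: str, max_steps: int = 5) -> str:
    """Reason, act, observe; repeat until the model answers or the step budget runs out."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = llm(transcript)
        transcript.append(f"Thought: {step.thought}")
        if step.answer is not None:
            return step.answer
        args = step.action_input or {}
        tool = tools.get(step.action)
        observation = tool(**args) if tool else f"Unknown tool: {step.action}"
        transcript.append(f"Action: {step.action} {json.dumps(args)}")
        transcript.append(f"Observation: {observation}")
    return "Stopped: step limit reached without an answer."


def scripted_llm(transcript: list[str]) -> Step:
    """Stand-in for an LLM: check the instance once, then answer from the observation."""
    if not any(line.startswith("Observation:") for line in transcript):
        return Step("I should check the learner's instance first.",
                    action="get_instance_status", action_input={"user_id": "u1"})
    return Step("The instance is not ready yet.",
                answer="Your database is still being provisioned. Try again in a minute.")


tools = {"get_instance_status": lambda user_id: "provisioning"}
print(react(scripted_llm, tools, "Why won't the query window connect?"))
```

The `max_steps` budget is a deliberate design choice: without it, a model that never produces a final answer would keep the loop running indefinitely.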

If a learner reported that they couldn’t access their database instance, or that the integrated browser window refused to connect, the old chatbot could only provide generic instructions.

Now, the ReAct-based learning agent can call tools to get the learner’s database credentials, verify the connection locally, and even provide a working code sample that they can copy and paste to test the connection from their end.
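As an illustration of one such tool, the sketch below turns a learner's connection details into a copy-and-paste script. It assumes a hypothetical `Credentials` record and a hypothetical `connection_snippet` tool. The generated script uses the official Neo4j Python driver's `GraphDatabase.driver` and `verify_connectivity`. The URI and credentials shown are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Credentials:
    """Hypothetical shape of a learner's sandbox connection details."""
    uri: str
    username: str
    password: str


def connection_snippet(creds: Credentials) -> str:
    """Build a standalone Python script the learner can run to test their connection."""
    return (
        "from neo4j import GraphDatabase\n"
        "\n"
        f"URI = {creds.uri!r}\n"
        f"AUTH = ({creds.username!r}, {creds.password!r})\n"
        "\n"
        "with GraphDatabase.driver(URI, auth=AUTH) as driver:\n"
        "    driver.verify_connectivity()\n"
        "    print('Connected to', URI)\n"
    )


print(connection_snippet(Credentials("neo4j://localhost:7687", "neo4j", "secret")))
```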

If the learner is stuck on a challenge, the agent can inspect the database and, with full context of the lesson objective, guide the user toward the solution.

If the learning agent detects a hint of frustration, and the user has demonstrated the knowledge needed to pass the lesson, it has the agency to complete the lesson on the learner’s behalf. After all, we’re here to aid learning, not to force the learner down a specific path.

Learning on the Fly

Anyone who’s released a public-facing AI application will know that evaluating responses and learning on the fly are important.

The learner’s conversation history is tied to the lesson and stored as a scratchpad for future reference. From this history, we can detect common patterns of user intent and tool calls, then turn a recurring pattern into a dedicated tool that performs the action end to end, without a round trip to the LLM after every step.
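One simple way to spot such patterns is to count recurring runs of tool calls across stored conversations. This sketch assumes each conversation has already been reduced to an ordered list of tool names; the names are hypothetical. A sequence that recurs often is a candidate for a single end-to-end tool.

```python
from collections import Counter


def frequent_sequences(conversations: list[list[str]], length: int = 3,
                       min_count: int = 2) -> list[tuple[tuple[str, ...], int]]:
    """Count each run of `length` consecutive tool calls once per conversation."""
    counts: Counter = Counter()
    for calls in conversations:
        runs = {tuple(calls[i:i + length]) for i in range(len(calls) - length + 1)}
        counts.update(runs)
    return [(run, n) for run, n in counts.most_common() if n >= min_count]


history = [
    ["get_credentials", "check_connection", "send_snippet"],
    ["get_lesson", "get_credentials", "check_connection", "send_snippet"],
    ["get_lesson", "inspect_database"],
]
print(frequent_sequences(history))
# [(('get_credentials', 'check_connection', 'send_snippet'), 2)]
```

Counting each run once per conversation, rather than once per occurrence, keeps a single looping conversation from inflating a pattern that other learners never hit.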

Yeah, But What About MCP?

No GenAI article in 2025 would be complete without mentioning the Model Context Protocol (MCP), a standard for defining and consuming tools. MCP gives hosts a way to consume tools written by third parties and is a giant leap forward for both online and desktop-based AI applications.
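To make this concrete: an MCP server advertises each tool with a `name`, a `description`, and a JSON Schema `inputSchema` in response to a `tools/list` request, and it runs the tool on `tools/call`. The in-process sketch below shows only those JSON-RPC message shapes, with no transport or SDK. The `get_lesson_objective` tool is hypothetical.

```python
from typing import Any

# Hypothetical tool registry: MCP metadata plus a local handler.
TOOLS: dict[str, dict[str, Any]] = {
    "get_lesson_objective": {
        "description": "Return the learning objective for a lesson.",
        "inputSchema": {
            "type": "object",
            "properties": {"lesson_id": {"type": "string"}},
            "required": ["lesson_id"],
        },
        "handler": lambda args: f"Objective for {args['lesson_id']}: create a Person node.",
    },
}


def error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    """Build a JSON-RPC error response."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer a single JSON-RPC request for the tools/list or tools/call methods."""
    req_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        tools = [{"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for name, t in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        name = params.get("name")
        tool = TOOLS.get(name)
        if tool is None:
            return error(req_id, -32602, f"Unknown tool: {name}")
        text = tool["handler"](params.get("arguments", {}))
        content = [{"type": "text", "text": text}]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"content": content, "isError": False}}
    return error(req_id, -32601, "Method not found")


call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_lesson_objective", "arguments": {"lesson_id": "cypher-101"}}}
print(handle(call)["result"]["content"][0]["text"])
```

Because any MCP host can discover and call tools through these same two methods, a tool published by a third party, such as Neo4j, can be dropped into an existing agent without custom glue code.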

While there is a serious discussion to be had about the position of MCP tools within proprietary applications, the protocol does allow us to use MCP tools released by Neo4j to improve the learner experience and provide up-to-date guidance and best practices.

For example, the Neo4j Data Modeling MCP Server provides resources and prompts that teach graph data modeling best practices. These tools sit perfectly within our stack and give the learner the guidance needed to create and refactor an optimal data model.

This tool will be combined with our own persistence tools to save the data models to the database and expose them via a REST API to other Neo4j services. Other popular third-party open-source tools, such as Stripe and Google Drive integrations, won’t make it into our stack.

Learning With GraphAcademy

If you’d like to learn more about any of the topics mentioned here, I recommend visiting Neo4j’s GraphAcademy, where we use GenAI and knowledge graphs to teach developers and data scientists about GenAI and knowledge graphs.

We have a comprehensive knowledge graph and GraphRAG learning path that covers the entire process, from GraphRAG fundamentals to building knowledge graphs for LLMs to applied GraphRAG courses, including developing with Neo4j MCP tools.

Why I’m Replacing My RAG-Based Chatbot With Agents was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.