What Is Agentic AI? The Shift Toward Autonomous, Goal-Driven AI Systems


We’re entering the next major shift in AI. GenAI amazed us with fluent text, creative code, and natural conversation. But a new class of systems is emerging that goes beyond writing words: these systems act with intent. Instead of stopping at a generated output, they pursue goals independently, making decisions and coordinating actions across tools and data.

This is the rise of agentic AI.

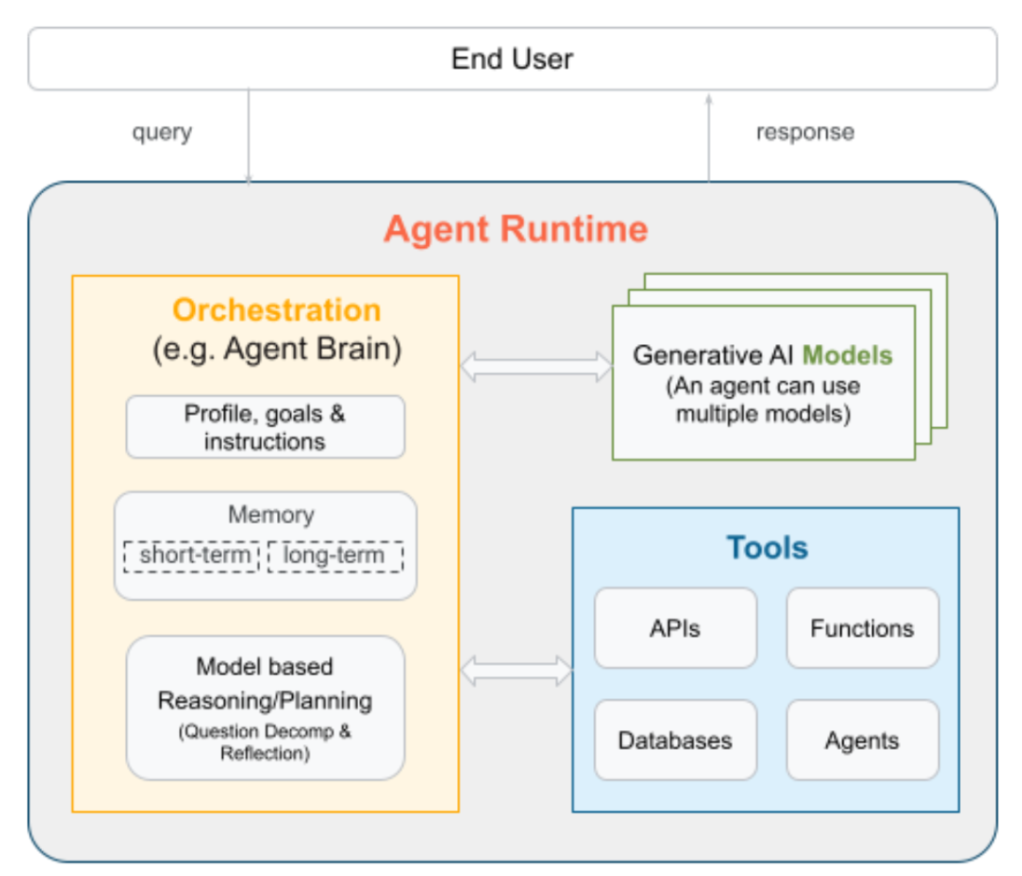

Agentic AI is software that can plan steps, use tools, observe results, and iterate until it achieves a goal, with reasoning, memory, and guardrails built in. It doesn’t wait for the next prompt; it decides what to do next based on its goal and what it has observed so far.

Our article covering AI agents explored the anatomy of a single agent and how it works. Now, we’re zooming out to help you understand what it means for AI to become agentic, why this changes your architecture and guardrails, and how to move from demos to dependable and scalable outcomes.

What Makes AI Agentic?

Picture your engineering team on a Tuesday morning. Overnight, errors spiked for a premium customer. Before anyone opens a dashboard, your agent has already pulled the incident history, checked the customer’s entitlements, compared the stack trace to known fixes, and drafted a ticket with the right severity and owner. It keeps following up until someone resolves it or it runs out of safe options. That is agentic behavior.

Agentic software keeps a clear objective in its internal state, reasons about the next best step, picks a tool, checks the result, and adapts until it finishes. It acts more like a thoughtful teammate, ready to help, than a chatbot waiting for the next prompt.

AI Agent vs. Agentic AI

They may sound like the same thing, yet the difference changes your application and data architecture. You move from a single AI agent in one interface to an agentic system with shared state, orchestrated tools, graph-backed memory, and explicit guardrails. Here’s the key difference:

- An AI agent is a single unit—a concrete implementation that acts on a task with a defined interface.

- Agentic AI is the system and philosophy. Think multiple agents, tools, and services coordinating around a shared goal and shared state.

In practice, a single AI agent can choose the next action, call a tool, check the result, and continue until it reaches the goal or a safe stop. For example, in billing, the agent would pull the contract, check the policy, draft a compliant credit memo, and open a task for finance with references.

An agentic system goes further by coordinating multiple specialized agents around a shared goal and state. One agent plans the steps, another checks policy, a third calls the ledger API, a fourth validates the output, and a fifth handles notifications. They hand off work through a common memory, keep a decision trace, and escalate to a human when risk or ambiguity rises.
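To make the handoff concrete, here is a minimal sketch in Python of the credit-memo workflow above. It assumes each specialized agent is a plain function (no LLM calls) and that the shared memory is a single in-process object. The agent names, the `policy_limit` fact, and the escalation rule are hypothetical. It shows the three mechanics the paragraph describes: agents hand off through common state, every decision lands in a trace, and the orchestrator stops and escalates when risk rises.

```python
from dataclasses import dataclass, field

@dataclass
class SharedState:
    """Common memory that every agent reads from and writes to."""
    goal: str
    facts: dict = field(default_factory=dict)
    trace: list = field(default_factory=list)  # decision trace: (agent, note) pairs
    escalated: bool = False

# Hypothetical specialized agents; each does one job and reports back via shared state.
def planner(state):
    state.facts["steps"] = ["check_policy", "post_ledger", "validate", "notify"]
    return "planned 4 steps"

def policy_checker(state):
    amount, limit = state.facts["credit_amount"], state.facts["policy_limit"]
    state.facts["risk"] = "high" if amount > limit else "low"
    return f"credit {amount} vs policy limit {limit}: risk {state.facts['risk']}"

def ledger_agent(state):
    # Stand-in for a ledger API call.
    state.facts["ledger_entry"] = {"amount": state.facts["credit_amount"], "status": "posted"}
    return "posted credit to ledger"

def validator(state):
    entry = state.facts["ledger_entry"]
    state.facts["validated"] = (entry["status"] == "posted"
                                and entry["amount"] == state.facts["credit_amount"])
    return "validation passed" if state.facts["validated"] else "validation failed"

def notifier(state):
    state.facts["notified"] = ["finance"]
    return "notified finance"

AGENTS = {"check_policy": policy_checker, "post_ledger": ledger_agent,
          "validate": validator, "notify": notifier}

def needs_human(state):
    """Escalate when risk is high or a validation check failed."""
    return state.facts.get("risk") == "high" or state.facts.get("validated") is False

def orchestrate(state):
    state.trace.append(("planner", planner(state)))
    for step in state.facts["steps"]:
        state.trace.append((step, AGENTS[step](state)))
        if needs_human(state):
            state.escalated = True
            state.trace.append(("orchestrator", "escalated to a human reviewer"))
            break
    return state

result = orchestrate(SharedState(goal="issue credit memo",
                                 facts={"credit_amount": 900, "policy_limit": 500}))
for agent, note in result.trace:
    print(f"{agent}: {note}")
```

With a credit of 900 against a limit of 500, the run stops after the policy check, before anything touches the ledger. In a real system, the shared state would live in a durable store rather than in memory.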

Before you connect the agents to tools and APIs, establish a minimal control loop so you can test and audit each step. Start with the following:

- Objectives with pass or fail tests

- A planner that breaks work into steps

- Small, well-scoped tools with clear contracts

- Memory for traces and long-lived facts

- Guardrails that validate inputs and outputs at every step

This setup lets a single agent complete simple flows and allows the agentic system to coordinate roles on more complex work while keeping results explainable.
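Here is one way those five pieces can fit together for the billing example, as a minimal single-agent sketch in Python. It assumes stand-in tool functions instead of real contract and ticketing APIs, and a rule-based planner instead of an LLM; `get_contract`, `draft_memo`, `open_task`, and the credit cap of 500 are all hypothetical. The objective tests decide when to stop, each tool declares its inputs, output, and an output guardrail, and the memory list records every step.

```python
# Objective: named pass/fail tests over the agent's state (hypothetical billing goal).
OBJECTIVE = {
    "contract loaded": lambda s: "contract" in s,
    "memo drafted": lambda s: "memo" in s,
    "finance task opened": lambda s: "task_id" in s,
}

# Small tools with stand-in bodies; real versions would call contract, policy, and ticketing APIs.
def get_contract(s):
    return {"customer": s["customer"], "credit_cap": 500}

def draft_memo(s):
    amount = min(s["requested_credit"], s["contract"]["credit_cap"])
    return {"amount": amount, "refs": ["contract", "credit-policy"]}

def open_task(s):
    return "FIN-1"

# Tool contracts: (function, required input keys, output key, output guardrail).
TOOLS = {
    "get_contract": (get_contract, ["customer"], "contract", lambda r, s: r["credit_cap"] > 0),
    "draft_memo": (draft_memo, ["contract", "requested_credit"], "memo",
                   lambda r, s: 0 < r["amount"] <= s["contract"]["credit_cap"]),
    "open_task": (open_task, ["memo"], "task_id", lambda r, s: bool(r)),
}

def plan(state):
    """Planner: pick the first tool whose output is still missing."""
    for name, (_, _, output_key, _) in TOOLS.items():
        if output_key not in state:
            return name
    return None

def run_agent(state, max_steps=5):
    memory = []  # step-by-step trace for audits and replays
    for _ in range(max_steps):
        if all(test(state) for test in OBJECTIVE.values()):
            return "done", memory
        name = plan(state)
        if name is None:
            return "stuck", memory
        fn, inputs, output_key, output_ok = TOOLS[name]
        missing = [k for k in inputs if k not in state]
        if missing:  # input guardrail: refuse to call a tool without its contract inputs
            memory.append((name, f"blocked: missing {missing}"))
            return "stopped", memory
        result = fn(state)
        if not output_ok(result, state):  # output guardrail: never store a bad result
            memory.append((name, f"rejected output {result!r}"))
            return "stopped", memory
        state[output_key] = result
        memory.append((name, result))
    status = "done" if all(test(state) for test in OBJECTIVE.values()) else "step limit"
    return status, memory

status, trace = run_agent({"customer": "acme", "requested_credit": 800})
print(status)
for step, outcome in trace:
    print(step, "->", outcome)
```

Because the objective is a set of named tests, the loop can report exactly which condition is unmet when it stops, and the step limit gives it a safe stop even if the planner misbehaves.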

The Journey to Agentic AI: From Deterministic Code to Tool‑Using Systems

Five years ago, most teams automated with hard‑coded if-then rules, then added narrow ML models. When LLMs arrived, teams layered on retrieval-augmented generation (RAG) so answers could draw on private data. That delivered helpful chat but still didn’t close the loop on real workflows. To do that, models need to plan and act, not just answer.

Here’s how we got to agentic systems:

- Deterministic automations: Cron jobs, webhooks, and BPMN workflows kick off a fixed sequence of steps without model‑driven decisions.

- ML-in-the-loop: Models score or classify items, but humans still move work between systems and make the final decisions.

- RAG for answers: Hybrid retrieval brings in passages from documents and tables so the model can generate grounded responses to user questions.

- Reasoning loop: A planner breaks the goal into steps, calls tools with structured inputs, evaluates results, and writes back to your systems.

- Multi‑agent setups: Several small, specialized agents coordinate through shared state to plan, act, and verify work across roles.

The following standards and approaches made it possible to move through these stages, from helpful chat to reasoning loops and multi-agent systems:

- Model Context Protocol (MCP) exposes tools, prompts, and resources through one standard instead of bespoke adapters.

- Context engineering as a discipline designs the instructions, memory, retrieval, and guardrails for each phase, so the model works from the context it needs instead of guessing.

- Graph‑aware retrieval or GraphRAG adds entities and relationships, so agents choose the right records and people, not just similar text.
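To show what a single standard looks like on the wire, here is a stripped-down sketch of the two MCP tool methods, `tools/list` and `tools/call`, handled as JSON-RPC 2.0 messages in plain Python. It skips the transport, the initialization handshake, and the official SDKs, so treat it as an illustration of the message shapes rather than a working server; the `get_entitlements` tool and its data are hypothetical.

```python
import json

# A tool described the way an MCP server advertises it in a tools/list result:
# a name, a human-readable description, and a JSON Schema for the input.
TOOLS = [{
    "name": "get_entitlements",
    "description": "Return the support entitlements for a customer account.",
    "inputSchema": {
        "type": "object",
        "properties": {"account_id": {"type": "string"}},
        "required": ["account_id"],
    },
}]

ENTITLEMENTS = {"acme": {"tier": "premium", "sla_minutes": 30}}  # hypothetical backing data

def reply(rid, result=None, error=None):
    msg = {"jsonrpc": "2.0", "id": rid}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return msg

def handle(request):
    """Answer one JSON-RPC 2.0 request for the MCP tool methods (no transport, no handshake)."""
    rid, method = request.get("id"), request.get("method")
    if method == "tools/list":
        return reply(rid, {"tools": TOOLS})
    if method == "tools/call":
        params = request.get("params", {})
        if params.get("name") != "get_entitlements":
            return reply(rid, error={"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
        account = params.get("arguments", {}).get("account_id")
        data = ENTITLEMENTS.get(account)
        if data is None:
            # Tool-level failures are reported in the result with isError, not as protocol errors.
            return reply(rid, {"content": [{"type": "text", "text": f"No entitlements for {account}"}],
                               "isError": True})
        return reply(rid, {"content": [{"type": "text", "text": json.dumps(data)}], "isError": False})
    return reply(rid, error={"code": -32601, "message": "Method not found"})

print(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})["result"]["tools"][0]["name"])
call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_entitlements", "arguments": {"account_id": "acme"}}}
print(handle(call)["result"]["content"][0]["text"])
```

Any MCP-capable client discovers the tool’s name, description, and JSON Schema the same way, which is what replaces one-off adapters. A real server would also validate `arguments` against `inputSchema` before running the tool.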

This unlocks runbooks that execute end to end, agents that can justify actions with provenance, and a path from one agent to a small team without a rewrite. With that journey in mind, the differences between traditional ML, GenAI, and agentic AI become concrete and testable.

Traditional ML vs. GenAI vs. Agentic AI

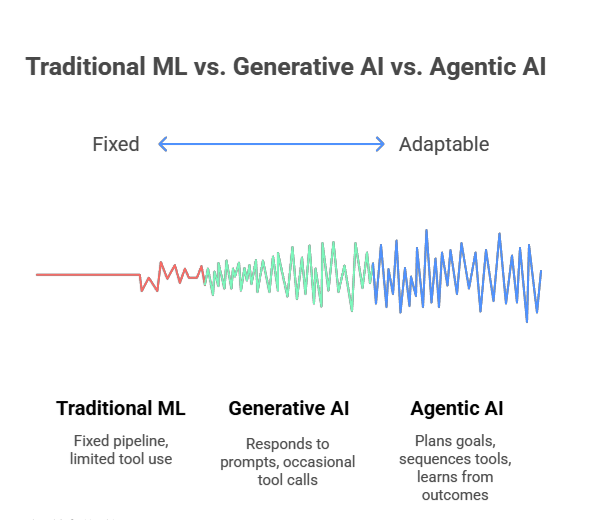

Before you decide whether a chat assistant is enough or if you need agents, align on what each approach actually delivers. The table below compares traditional ML, GenAI, and agentic AI across goals, tool use, context, adaptability, and collaboration so you can choose the right architecture for your workflow.

| Capability | Traditional ML | GenAI | Agentic AI |
| --- | --- | --- | --- |
| Goal handling | Fixed pipeline | Responds to prompts | Plans multi‑step goals and stops on success |
| Tool use | Limited | Occasional tool calls | Chooses and sequences tools across steps |
| Context | Features and models | Prompt plus retrieval | Structured memory, GraphRAG, metadata, provenance |
| Adaptability | Retrain to change | Prompt to adapt | Learns from outcomes and revises plan |
| Collaboration | N/A | Single assistant | Multi‑agent roles with shared state |

Core Characteristics of Agentic Systems

GenAI chatbots wait for prompts; agentic systems don’t. Alerts fire, logs change, builds fail, policies update. Agentic systems create value in those moments by taking the next step and moving the workflow forward. Here are their core characteristics:

- Proactive: The system takes the first step toward the goal instead of just answering a prompt. For example, when a high-priority issue hits your system, it can act on its own by opening the ticket, setting the severity, and pinging the owner.

- Adaptable: Plans are updated with new context. The reasoning loop allows the system to learn from outcomes, store what worked, and reuse it the next time.

- Collaborative: Agents talk to agents. Use an explicit agent‑to‑agent protocol and shared state so specialized agents can reliably hand off work.

- Organized like human teams: Depending on the architecture, agentic systems can mirror how departments ship outcomes together, each with clear ownership and SLAs.

- Multi‑agent and specialized: The system has specialized roles (planner, retriever, tool caller, validator, and reviewer) so each role does one thing well and reports back.

- Beyond chat: Some agents run in the background and react to events, while others integrate into existing workflows (tickets, PRs, dashboards), so conversation is optional.

These traits are key for shifting from chat assistants to goal-driven systems that plan and act. When workflows trigger events on their own, you need software that takes the first step, coordinates with other agents, and records every decision.

The journey described earlier, from deterministic code to LLMs and RAG plus standards and practices like MCP and context engineering, is what makes these traits practical at work.


Real‑World Use Cases of Agentic AI

Now, let’s make it real. Below are four everyday workflows that show how agentic systems deliver measurable value. For each one, you’ll see industry-specific scenarios, what the agent reads (“Inputs”), what it does (“Tools”), and how we know it’s done (“Done when”).

Support Triage in Customer Service

On‑call engineers face late‑night alert spikes, with evidence scattered across logs and the customer relationship management (CRM) system, and service‑level agreements (SLAs) that keep ticking.

It’s 2:11 a.m. when a premium customer’s error rate jumps. By 2:13 a.m., the agent links prior incidents, checks the customer’s entitlements, sets the correct severity, and routes the ticket to the right owner with the supporting evidence attached.

- Inputs: Ticket text, customer tier, recent incidents

- Tools: GraphRAG search, entitlement checker, and incident/ticket API

- Done when: Severity is set, related incidents are linked, and the ticket is assigned

- Outcome: Faster response times, a complete audit trail, and early detection of complaint patterns with proactive escalation to product owners
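The “Done when” line is the part worth turning into code. The sketch below assumes a hypothetical keyword match in place of GraphRAG search, a made-up ownership map, and an illustrative severity rule; only the shape matters. `unmet()` expresses the completion criteria as checks, so the agent knows whether to stop, keep working, or hand off.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Ticket:
    text: str
    customer_tier: str
    severity: Optional[str] = None
    linked_incidents: list = field(default_factory=list)
    assignee: Optional[str] = None

# Hypothetical stand-ins for GraphRAG search results and an ownership lookup.
RECENT_INCIDENTS = [{"id": "INC-101", "service": "checkout"}, {"id": "INC-099", "service": "search"}]
OWNERS = {"checkout": "payments-oncall", "search": "search-oncall", "default": "triage-queue"}

def triage(ticket, incidents, owners):
    words = set(re.findall(r"[a-z]+", ticket.text.lower()))
    related = [i for i in incidents if i["service"] in words]
    ticket.linked_incidents = [i["id"] for i in related]
    # Illustrative severity rule: related incidents raise severity; premium tier raises it again.
    severity = 2 if related else 3
    if ticket.customer_tier == "premium" and related:
        severity -= 1
    ticket.severity = f"SEV{severity}"
    ticket.assignee = owners.get(related[0]["service"]) if related else owners["default"]
    return ticket

def unmet(ticket):
    """'Done when' as executable checks: returns the conditions that still fail."""
    checks = {
        "severity set": ticket.severity is not None,
        "related incidents linked": bool(ticket.linked_incidents),
        "ticket assigned": ticket.assignee is not None,
    }
    return [name for name, ok in checks.items() if not ok]

t = triage(Ticket("Checkout error rate spiking since 2am", "premium"), RECENT_INCIDENTS, OWNERS)
print(t.severity, t.linked_incidents, t.assignee, unmet(t))
```

For a ticket with no related incidents, `unmet()` returns `['related incidents linked']`, which is the signal to search further or escalate rather than declare success.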

Updating Care Plans in Healthcare

Clinicians need evolving plans that combine patient history, new lab results, and current research.

New lab results arrive. The agent updates the treatment plan, cites the supporting studies, and alerts the care team when a threshold is crossed.

- Inputs: Electronic health record (EHR) history, lab results, research index

- Tools: EHR API, GraphRAG search, alerting service

- Done when: The plan is updated with citations and required alerts are delivered

- Outcome: Timely interventions with traceable reasoning

Contract Review in Legal and Compliance

Contract reviews stall across jurisdictions with shifting rules.

A supplier contract includes a risky clause. The agent compares it to company policy, proposes compliant language, and logs the change with references for audit.

- Inputs: Contract text, policy library, jurisdiction data

- Tools: Clause classifier, policy checker, document editor

- Done when: The contract includes approved language with an audit trail

- Outcome: Faster reviews and fewer manual escalations
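The same “Done when” discipline applies to contract review. This sketch assumes a small, hypothetical policy library and uses regular-expression matching as a stand-in for a trained clause classifier. The policy IDs and approved wording are invented for illustration, and the jurisdiction is only recorded in the audit entry rather than used to select policies.

```python
import re
from datetime import datetime, timezone

# Hypothetical policy library: clause type -> (risky pattern, approved language, policy id).
POLICY_LIBRARY = {
    "liability": (r"unlimited liability",
                  "Each party's liability is capped at the fees paid in the prior 12 months.",
                  "POL-LIAB-01"),
    "auto_renewal": (r"renews? automatically",
                     "This agreement renews only by written agreement of both parties.",
                     "POL-RENEW-02"),
}

def classify(clause):
    """Keyword stand-in for a clause classifier: returns (type, approved text, policy id) or None."""
    for clause_type, (pattern, approved, policy_id) in POLICY_LIBRARY.items():
        if re.search(pattern, clause, re.IGNORECASE):
            return clause_type, approved, policy_id
    return None

def review(clauses, jurisdiction, audit_log):
    """Replace risky clauses with approved language and log every change with its policy reference."""
    revised = []
    for index, clause in enumerate(clauses):
        hit = classify(clause)
        if hit is None:
            revised.append(clause)
            continue
        clause_type, approved, policy_id = hit
        revised.append(approved)
        audit_log.append({"clause": index, "type": clause_type, "before": clause, "after": approved,
                          "policy": policy_id, "jurisdiction": jurisdiction,
                          "at": datetime.now(timezone.utc).isoformat()})
    return revised

def done(original, revised, audit_log):
    """Done when: no risky clause remains and every changed clause has an audit entry."""
    changed = sum(1 for before, after in zip(original, revised) if before != after)
    return all(classify(c) is None for c in revised) and changed == len(audit_log)

contract = ["Supplier accepts unlimited liability for all claims.", "Payment is due within 30 days."]
log = []
revised = review(contract, "DE", log)
print(revised[0])
print(log[0]["policy"], done(contract, revised, log))
```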

Rerouting Around Disruptions in Supply Chain

Disruptions from weather, port closures, or supplier delays require quick rerouting.

A port closes unexpectedly. The agent reroutes shipments, updates estimated times of arrival (ETAs), and notifies affected stakeholders.

- Inputs: IoT telemetry, carrier status, weather feeds, contracts

- Tools: Route optimizer, supplier API, notification service

- Done when: Orders are rerouted with updated ETAs and stakeholders are notified

- Outcome: Fewer stockouts and more on‑time deliveries
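A route optimizer can start as a shortest-path search once the lanes are modeled as a weighted graph. The sketch below uses Dijkstra’s algorithm over transit days on a hypothetical lane network. Real optimizers also weigh cost, capacity, and contract terms, so treat the ports, transit times, owner names, and departure date as placeholders.

```python
import heapq
from datetime import date, timedelta

# Hypothetical lane network: transit time in days between locations.
LANES = {
    "Shanghai": {"Rotterdam": 30, "Hamburg": 32, "Antwerp": 31},
    "Rotterdam": {"Warehouse-DE": 2},
    "Hamburg": {"Warehouse-DE": 1},
    "Antwerp": {"Warehouse-DE": 3},
}

def fastest_route(origin, dest, closed=frozenset()):
    """Dijkstra's shortest path by transit days, skipping closed locations."""
    queue = [(0, origin, [origin])]
    visited = set()
    while queue:
        days, node, path = heapq.heappop(queue)
        if node == dest:
            return days, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, transit in LANES.get(node, {}).items():
            if nxt not in closed and nxt not in visited:
                heapq.heappush(queue, (days + transit, nxt, path + [nxt]))
    return None  # no open route

def reroute(shipments, closed_port, departure):
    """Reroute shipments that pass through a closed port, update ETAs, and draft notices."""
    notices = []
    for s in shipments:
        if closed_port not in s["route"]:
            continue
        found = fastest_route(s["route"][0], s["route"][-1], closed=frozenset({closed_port}))
        if found is None:
            notices.append((s["owner"], f"{s['id']}: no open route, escalating"))
            continue
        days, path = found
        s["route"], s["eta"] = path, departure + timedelta(days=days)
        notices.append((s["owner"], f"{s['id']}: rerouted via {path[1]}, new ETA {s['eta']}"))
    return notices

shipments = [{"id": "SHP-1", "owner": "ops-eu",
              "route": ["Shanghai", "Rotterdam", "Warehouse-DE"], "eta": None}]
for owner, message in reroute(shipments, "Rotterdam", departure=date(2025, 1, 1)):
    print(owner, "<-", message)
```

When every alternative is closed, `fastest_route` returns `None` and the shipment is flagged for a human planner instead of being silently dropped.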

Agentic AI’s Reliability, Safety, and ROI Challenges

Demos tend to look great because they run under ideal, pre-scripted conditions where everything goes right. Production, however, is messy: alerts fire, inputs drift, tools fail, and people change data underneath you. When that happens, weak context and loose tooling fail in predictable ways. Naming those failures (and fixing them) keeps your agent from getting stuck or doing the wrong thing.

Use the lists below as checkpoints during design reviews and incident postmortems. They identify the failures you’ll see in logs and traces and turn them into tests and guardrails you can enforce.

Common Failure Modes

Use this as a quick diagnostic list. These are the patterns you’ll see in logs and traces when agents fail in production:

- Goals are vague and you have no success tests, so the loop cannot decide when to stop.

- Tools lack clear contracts, timeouts, and idempotency, so retries waste tokens and can take unsafe actions.

- Retrieval fetches look‑alike passages and misses entities or time windows, so the agent targets the wrong records.

- You don’t capture provenance, so you can’t explain or reproduce a decision.

- Plans run for many steps without checkpoints, so one bad step cascades into larger failures.

How to De‑Risk in Production

The checks below will help you turn those failures into tests, guardrails, and safe defaults:

- Write goals as executable tests that a machine can pass or fail.

- Define tool schemas with strict input and output contracts, and enforce timeouts.

- Add pre‑flight validations and post‑flight verifications around every tool call.

- Ground actions in retrieved facts with citations and enforce least‑privilege scopes.

- Track unit economics per task and alert on drift in tokens, latency, and failure rate.
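Several of these checks can live in one wrapper around every tool call. The following is a minimal Python sketch, assuming an in-process thread pool for timeouts and an in-memory dictionary for idempotency keys; `issue_credit`, `slow_tool`, and the key format are hypothetical. It covers strict input contracts, timeouts, idempotent retries, and post-flight verification. Citations, scopes, and cost tracking could sit in the same wrapper but are left out for brevity.

```python
import concurrent.futures as cf
import time

class ToolError(Exception):
    """Raised when a tool call violates its contract, fails, times out, or fails verification."""

_POOL = cf.ThreadPoolExecutor(max_workers=4)
_RESULTS = {}  # idempotency cache: key -> first result

def call_tool(fn, args, *, schema, idempotency_key, timeout_s, postcheck):
    # Pre-flight: enforce the input contract (exact keys, expected types).
    if set(args) != set(schema):
        raise ToolError(f"pre-flight: expected arguments {sorted(schema)}, got {sorted(args)}")
    for name, expected in schema.items():
        if not isinstance(args[name], expected):
            raise ToolError(f"pre-flight: {name} must be {expected.__name__}")
    # Idempotency: a retry with the same key reuses the first result instead of acting twice.
    # Ideally the key is also forwarded to the downstream API so it can deduplicate too.
    if idempotency_key not in _RESULTS:
        future = _POOL.submit(fn, **args)
        try:
            _RESULTS[idempotency_key] = future.result(timeout=timeout_s)
        except cf.TimeoutError:
            # The worker thread is not killed; we just stop waiting and report the failure.
            raise ToolError(f"timeout after {timeout_s}s") from None
        except Exception as exc:
            raise ToolError(f"tool failed: {exc}") from exc
    result = _RESULTS[idempotency_key]
    # Post-flight: verify the output before anything downstream relies on it.
    if not postcheck(args, result):
        raise ToolError(f"post-flight: unexpected result {result!r}")
    return result

# Hypothetical ledger tool; CALLS records side effects so retries are visible.
CALLS = []
def issue_credit(account, amount):
    CALLS.append((account, amount))
    return {"account": account, "amount": amount, "status": "posted"}

def slow_tool(account, amount):
    time.sleep(0.2)  # simulates a hung downstream API
    return {"account": account, "amount": amount, "status": "posted"}

SCHEMA = {"account": str, "amount": int}
def posted(args, result):
    return result.get("status") == "posted" and result.get("amount") == args["amount"]

first = call_tool(issue_credit, {"account": "acme", "amount": 120}, schema=SCHEMA,
                  idempotency_key="credit-acme-0042", timeout_s=2.0, postcheck=posted)
retry = call_tool(issue_credit, {"account": "acme", "amount": 120}, schema=SCHEMA,
                  idempotency_key="credit-acme-0042", timeout_s=2.0, postcheck=posted)
print(first == retry, len(CALLS))  # the retry did not post a second credit
try:
    call_tool(slow_tool, {"account": "acme", "amount": 5}, schema=SCHEMA,
              idempotency_key="credit-acme-0043", timeout_s=0.05, postcheck=posted)
except ToolError as err:
    print("blocked:", err)
```

Reusing a key with different arguments returns the cached result, so keys should be derived from the task and its inputs.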

While technical safeguards are critical, they’re only part of the picture. Human trust, collaboration, and accountability also determine whether agentic systems succeed. Teams must decide when humans approve actions, how agents explain reasoning, and how outcomes are audited. As agents gain autonomy, questions of ethics, governance, and explainability move from theory to design requirements.

While interest in agentic AI is growing, many projects may never reach production. Gartner predicts that more than 40 percent of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls. Today’s LLM-based agents still don’t achieve 100-percent task accuracy, and those errors compound as workflows grow longer and more complex. These realities don’t diminish the opportunity; they underscore what it takes to capture it. To move beyond demos, teams need stronger context engineering, governance, and graph-based memory to make agentic AI reliable at scale.
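To see why errors compound, assume each step succeeds independently with the same probability p; a workflow of n steps then succeeds with probability p raised to the power n. The 95 percent figure below is illustrative, not a measurement of any model, and the retry variant assumes a checker that catches every failed step, which real validators only approximate.

```python
def workflow_success(step_accuracy, steps):
    """Probability that all steps succeed, assuming independent steps with equal accuracy."""
    return step_accuracy ** steps

def with_verified_retry(step_accuracy):
    """Per-step success if a checker catches every failure and allows one independent retry."""
    return 1 - (1 - step_accuracy) ** 2

for n in (1, 5, 10, 20):
    plain = workflow_success(0.95, n)
    checked = workflow_success(with_verified_retry(0.95), n)
    print(f"{n:>2} steps: {plain:.1%} unchecked, {checked:.1%} with checkpoint + one retry")
```

At 95 percent per step, a 10-step workflow succeeds only about 60 percent of the time under these assumptions, which is why per-step checkpoints matter more as plans get longer.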

The Role of Knowledge Graphs in Agentic AI

Agentic systems operate on networks of information that span many layers—from how agents share context and memory to how they observe, reason, and decide. To make those loops reliable, they need structured data that can be queried, contextualized, and explained. That’s what knowledge graphs provide: a shared map of entities, relationships, and lineage that gives agents both context and accountability. Try asking your agent a hard, real question:

“Is this outage related to the SSO changes we shipped last Friday for our EMEA premium tenant?”

To answer, it must connect customers, entitlements, services, deploys, and incidents. A knowledge graph gives you those connections with lineage and policy in one place.
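Here is a sketch of that multi-hop question in plain Python, over a handful of hypothetical nodes and relationships (`HAS_ENTITLEMENT`, `USES_SERVICE`, `CHANGED`, `AFFECTS`) and an assumed ship date. In a graph database, this would be a single pattern match; the point is that the answer comes back as an evidence path the agent can cite, not as a similarity score.

```python
from datetime import date

# Hypothetical graph as (source, relationship, target) triples.
EDGES = [
    ("tenant:emea-premium", "HAS_ENTITLEMENT", "ent:premium-sso"),
    ("ent:premium-sso", "USES_SERVICE", "svc:sso"),
    ("tenant:emea-premium", "HAS_ENTITLEMENT", "ent:premium-support"),
    ("ent:premium-support", "USES_SERVICE", "svc:ticketing"),
    ("deploy:4812", "CHANGED", "svc:sso"),
    ("deploy:4799", "CHANGED", "svc:ticketing"),
    ("incident:2231", "AFFECTS", "svc:sso"),
]
SHIPPED = {"deploy:4812": date(2025, 6, 6), "deploy:4799": date(2025, 5, 20)}  # assumed dates

def outgoing(node, rel):
    return [dst for src, r, dst in EDGES if src == node and r == rel]

def incoming(node, rel):
    return [src for src, r, dst in EDGES if dst == node and r == rel]

def related_changes(tenant, incident, since):
    """Return evidence paths linking the incident to deploys shipped on or after `since`.

    Roughly the pattern a Cypher MATCH would express in one query:
    (t)-[:HAS_ENTITLEMENT]->()-[:USES_SERVICE]->(s)<-[:AFFECTS]-(i), (d)-[:CHANGED]->(s)
    """
    evidence = []
    for ent in outgoing(tenant, "HAS_ENTITLEMENT"):
        for svc in outgoing(ent, "USES_SERVICE"):
            if incident not in incoming(svc, "AFFECTS"):
                continue
            for dep in incoming(svc, "CHANGED"):
                if SHIPPED[dep] >= since:
                    evidence.append([(tenant, "HAS_ENTITLEMENT", ent), (ent, "USES_SERVICE", svc),
                                     (incident, "AFFECTS", svc), (dep, "CHANGED", svc)])
    return evidence

for path in related_changes("tenant:emea-premium", "incident:2231", since=date(2025, 6, 6)):
    for hop in path:
        print(*hop)
```

Moving `since` one day later returns no paths, so the agent can report that the incident is not linked to a deploy in that window instead of guessing.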

Knowledge graphs help most when:

- You depend on multi‑hop relationships.

- You want retrieval that returns facts, neighbors, and citations, not just similar text.

- You need entity resolution across systems.

- You need the analytical capabilities of graph algorithms, such as path finding or community detection.

- You must prove why the agent acted.

Pair GraphRAG with your graph store so the agent can query entities, traverse relationships, and attach evidence to each step. Neo4j Enterprise offers ACID transactions and role‑based access control (RBAC), so decisions are recorded consistently and access to them is controlled, which makes them auditable.

What Lies Ahead? From Apps to Agent Workspaces

Over the next year, many enterprise applications are likely to evolve into agentic systems: dynamic environments where humans and AI collaborate. Instead of fixed dashboards and forms, you’ll have agent workspaces that watch signals, plan next steps, and ask for approval when risk rises.

Humans will set the direction and review high-stakes actions while agents will handle routine, multi-step work across systems. The shift from tools you operate to teammates you supervise is already under way. And, while many major platforms now ship agent runtimes, Neo4j provides the connective layer that makes them reliable.

How Neo4j Powers Agentic AI

Neo4j gives agents a single source of truth for context and memory. You can model accounts, entitlements, services, deployments, and incidents as a graph in Neo4j so agents can retrieve not only similar text but also the entities and relationships that drive decisions.

- Use Neo4j GraphRAG to build the graph index and return entities, relationships, and citations alongside passages.

- Run vector search and hybrid search side by side, then use Cypher to traverse multi‑hop paths at low latency.

- Keep provenance and long‑term memory in one store with ACID transactions and RBAC.

- Connect your runtime through MCP tools or LangGraph so agents can read and write safely.

- Run it in Neo4j AuraDB for a managed service, or deploy Neo4j yourself if you need full control.
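The retrieval pattern in the first two bullets can be sketched without a database: vector similarity picks the seed passages, and a one-hop graph expansion adds the entities around them, with every passage carrying its source as a citation. Everything here is an assumption for illustration, including the toy 3-dimensional vectors, the chunk IDs, and the neighbor map; in Neo4j, the similarity step would typically use a vector index and the expansion a Cypher traversal.

```python
import math

# Toy 3-dimensional embeddings stand in for a real embedding model and vector index.
CHUNKS = {
    "runbook-7#2": {"text": "If SSO logins fail after a deploy, roll back the identity provider config.",
                    "vec": [0.9, 0.1, 0.0], "mentions": ["svc:sso"]},
    "postmortem-31#1": {"text": "Login errors followed a change to token lifetimes in the SSO service.",
                        "vec": [0.7, 0.3, 0.1], "mentions": ["svc:sso", "deploy:4812"]},
    "faq-2#4": {"text": "Invoices are generated on the first business day of the month.",
                "vec": [0.0, 0.2, 0.9], "mentions": ["svc:billing"]},
}
# One-hop neighbors in the (hypothetical) knowledge graph.
NEIGHBORS = {
    "svc:sso": ["team:identity", "deploy:4812"],
    "deploy:4812": ["svc:sso"],
    "svc:billing": ["team:finance-eng"],
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def graph_rag(query_vec, k=2):
    """Vector search picks seed chunks; graph expansion adds the entities around them."""
    ranked = sorted(CHUNKS.items(), key=lambda item: cosine(query_vec, item[1]["vec"]), reverse=True)
    passages, entities = [], set()
    for chunk_id, chunk in ranked[:k]:
        passages.append({"text": chunk["text"], "citation": chunk_id})
        for entity in chunk["mentions"]:
            entities.add(entity)
            entities.update(NEIGHBORS.get(entity, []))
    return {"passages": passages, "entities": sorted(entities)}

context = graph_rag([1.0, 0.2, 0.0])
for p in context["passages"]:
    print(f"[{p['citation']}] {p['text']}")
print("entities:", context["entities"])
```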


Agentic AI FAQs

What is agentic AI?

Agentic AI is software that keeps a clear objective, plans steps, calls tools, checks results, and repeats until it reaches the goal or a safe stop.

Insight: Think of it as an outcome‑driven automation powered by language models, not just a chatbot that replies to prompts.

How is agentic AI different from GenAI?

GenAI produces content in response to a prompt, while agentic AI plans and executes actions across tools with memory and checkpoints.

Insight: Use agentic AI when the task needs multi‑step plans, external systems, and success tests rather than a single answer.

What makes a system agentic?

Non‑agentic systems react once to an input; agentic systems hold objectives, choose the next action, observe the result, and continue until done. The loop matters because it lets the system recover from errors, adapt to new context, and show how it reached the outcome.

Is agentic AI just hype?

No, it’s not, and interest is growing fast. PagerDuty’s 2025 report found that more than half of companies have deployed AI agents and expect agentic AI to have faster adoption and higher ROI than GenAI. However, reliability and governance still lag in many deployments.

What is an example of agentic AI?

The GitHub Copilot coding agent can analyze a repository, propose a fix, run tests, and open a pull request for review.

Insight: Keep a human in the loop for merges and production changes so the system stays safe and auditable.

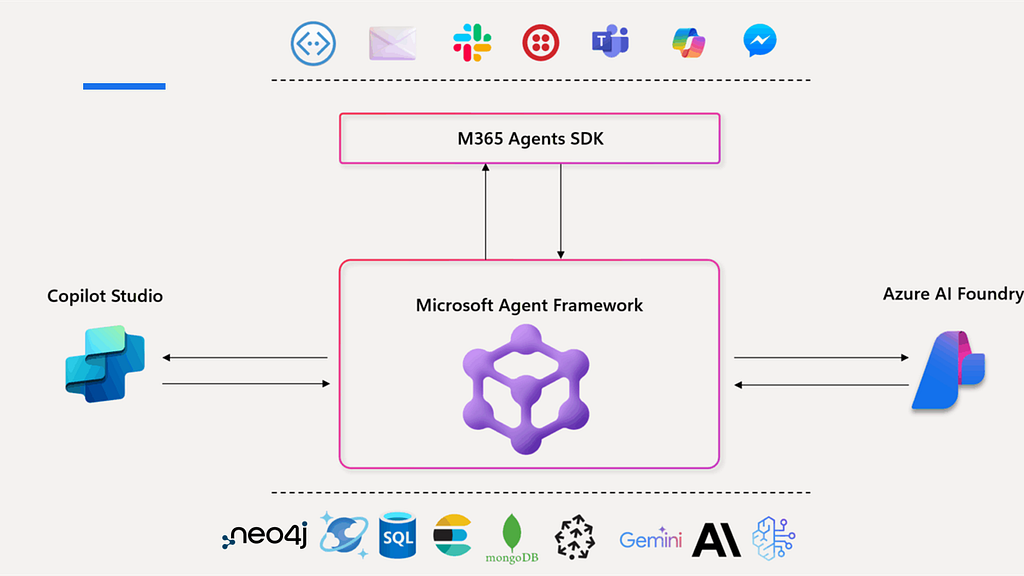

Which platforms offer agentic AI capabilities?

Major platforms now ship agent capabilities: Microsoft 365 Copilot includes multi‑agent orchestration and an agent store, Google Agentspace targets agent‑driven work, and Amazon Q Business adds agentic RAG for multi‑step retrieval. Open standards like MCP and frameworks like LangGraph also accelerate adoption.

Insight: Vendors set the runtime, but your data and graph‑based memory decide reliability, which is why many teams pair agents with Neo4j GraphRAG.

What is the future of agentic AI?

Teams are moving toward agent workspaces where specialized agents coordinate through shared state, graph‑native memory, and stronger evaluations.

Insight: Expect tighter safety controls, task‑level ROI tracking, and deeper graph integration so agents explain decisions with citations and lineage.