Getting Started with Apache Spark and Neo4j Using Docker Compose

Developer Relations


[This post was originally published on Kenny Bastani’s blog and was used with permission.]

I’ve received a lot of interest since announcing Neo4j Mazerunner.

People from around the world have reached out to me, excited about the possibilities of using Apache Spark and Neo4j together, from authors who are writing new books about big data to PhD researchers who need it to solve the world’s most challenging problems.

I’m glad to see such a wide range of needs for a simple integration like this. Spark and Neo4j are two great open source projects, each focused on doing one thing very well. Integrating the two makes for an awesome result.

Less Is Always More, Simpler Is Always Better

Apache Spark and Neo4j are both tremendously useful tools.

I’ve seen how both tools give their users a way to transform problems that start out large and complex into problems that are simpler and easier to solve. That’s what the companies behind these platforms are getting at. They are two sides of the same coin.

Neo4j handles scaling the size, complexity and retrieval of data, while Spark handles the complexity of processing enormous volumes of data through distributed computation. Both products achieve this without sacrificing ease of use.

Inspired by this, I’ve been working to make the integration in Neo4j Mazerunner easier to install and deploy. I believe I’ve taken a step forward in this and I’m excited to announce it in this blog post.

Announcing Spark Neo4j for Docker

I’ll start by saying that I’m not announcing yet another new open source project. Spark Neo4j is a Docker image that uses the new Compose tool to make it easier to deploy and eventually scale both Neo4j and Spark into their own clusters using Docker Swarm.

Docker Compose is something I’ve been waiting a while for. It’s one pillar of Docker’s answer to cluster computing using containers.

This was previously a not-so-easy thing to do on Docker and is now completely doable – in a simple way. That’s really exciting.

Now let’s get on to this business of processing graphs.

Installing Spark Neo4j

The tutorial below is meant for Mac users. If you’re on Linux, I’ve got you covered: Spark Neo4j Linux install guide.

This tutorial will walk you through:

- Setting up a Spark Neo4j cluster using Docker

- Streaming log output from Spark and Neo4j

- Using Spark GraphX to calculate PageRank and Closeness Centrality on a celebrity graph

- Querying the results in Neo4j to find the most influential actor in Hollywood

Requirements

Get Docker: https://docs.docker.com/installation/

After you’ve installed Docker on Mac OS X with boot2docker, make sure that the DOCKER_HOST environment variable points to the URL of the Docker daemon.

$ export DOCKER_HOST=tcp://$(boot2docker ip 2>/dev/null):2375

Spark Neo4j uses the DOCKER_HOST environment variable to manage multiple containers with Docker Compose.

Run the following command in your current shell to generate the other necessary Docker configurations:

$ $(boot2docker shellinit)

You’ll need to repeat this process if you open a new shell. Spark Neo4j requires the following environment variables: DOCKER_HOST, DOCKER_CERT_PATH, and DOCKER_TLS_VERIFY.
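Since these variables have to be set again in every new shell, a quick check can save a confusing failure later. The following is a minimal Python sketch, not part of Spark Neo4j itself; the function name `missing_docker_vars` is made up for illustration.

```python
import os

# The three variables this post lists as required by Spark Neo4j.
REQUIRED_VARS = ("DOCKER_HOST", "DOCKER_CERT_PATH", "DOCKER_TLS_VERIFY")


def missing_docker_vars(env=None):
    """Return the required variable names that are unset or empty.

    `env` defaults to the current process environment; passing a plain
    dict makes the check easy to try out.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

Calling `missing_docker_vars()` in a fresh shell should return all three names until the export and shellinit commands above have been run.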

Start the Spark Neo4j Cluster

In your current shell, run the following command to download and launch the Spark Neo4j cluster.

$ docker run --env DOCKER_HOST=$DOCKER_HOST \
  --env DOCKER_TLS_VERIFY=$DOCKER_TLS_VERIFY \
  --env DOCKER_CERT_PATH=/docker/cert \
  -v $DOCKER_CERT_PATH:/docker/cert \
  -ti kbastani/spark-neo4j up -d

This command will pull down multiple Docker images the first time you run it. Grab a beer or coffee. You’ll soon be taking over the world with your newfound graph processing skills.

Stream Log Output from the Cluster

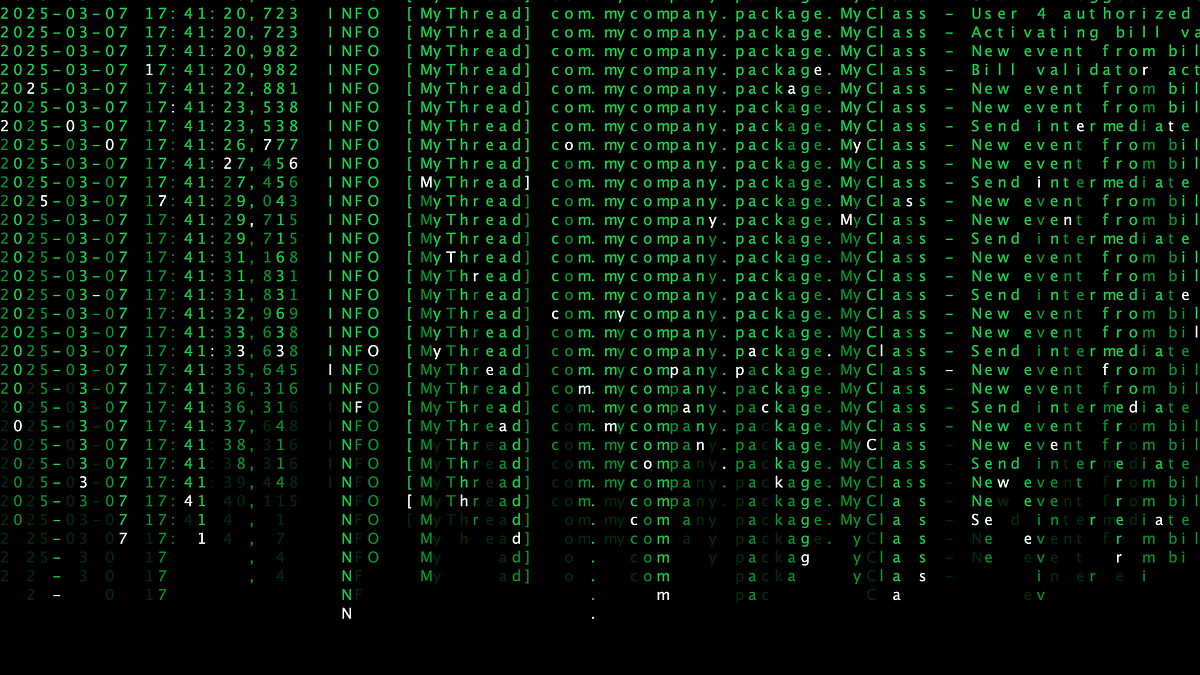

After the Docker images are installed and configured, you will be able to access the Neo4j browser. To know whether or not Neo4j has been started, you can stream the log output from your Spark Neo4j cluster by running this command in your current shell:

$ docker run --env DOCKER_HOST=$DOCKER_HOST \
  --env DOCKER_TLS_VERIFY=$DOCKER_TLS_VERIFY \
  --env DOCKER_CERT_PATH=/docker/cert \
  -v $DOCKER_CERT_PATH:/docker/cert \
  -ti kbastani/spark-neo4j logs graphdb

This command will stream the log output from Neo4j to your current shell.

...

graphdb_1 | 20:18:36.736 [main] INFO o.e.jetty.server.ServerConnector - Started ServerConnector@788ddc1f{HTTP/1.1}{0.0.0.0:7474}

graphdb_1 | 20:18:36.908 [main] INFO o.e.jetty.server.ServerConnector - Started ServerConnector@24d61e4{SSL-HTTP/1.1}{0.0.0.0:7473}

graphdb_1 | 2015-03-08 20:18:36.908+0000 INFO [API] Server started on: https://0.0.0.0:7474/

graphdb_1 | 2015-03-08 20:18:36.909+0000 INFO [API] Remote interface ready and available at [https://0.0.0.0:7474/]

Confirm that Neo4j has started before continuing.

CTRL-C will exit the log view and bring you back to your current shell. You can alter the above command to stream log output from all service containers simultaneously by removing graphdb from the last line.
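The docker run commands in this tutorial differ only in their trailing arguments (up -d, logs graphdb, or just logs). As a rough sketch of that pattern, here is a small Python helper that assembles the argument list; the helper name `spark_neo4j_command` and the two constants are illustrative and not part of the image.

```python
import os

IMAGE = "kbastani/spark-neo4j"
CERT_MOUNT = "/docker/cert"  # path the certificates are mounted at inside the container


def spark_neo4j_command(*compose_args, env=None):
    """Build the `docker run` argument list used throughout this tutorial.

    `compose_args` are the trailing arguments, for example ("up", "-d"),
    ("logs", "graphdb") or ("logs",). `env` defaults to os.environ.
    """
    env = os.environ if env is None else env
    return [
        "docker", "run",
        "--env", "DOCKER_HOST=" + env["DOCKER_HOST"],
        "--env", "DOCKER_TLS_VERIFY=" + env["DOCKER_TLS_VERIFY"],
        "--env", "DOCKER_CERT_PATH=" + CERT_MOUNT,
        "-v", env["DOCKER_CERT_PATH"] + ":" + CERT_MOUNT,
        "-ti", IMAGE,
        *compose_args,
    ]
```

From an interactive terminal, the list could be passed to `subprocess.call(spark_neo4j_command("logs", "graphdb"))`. Returning a list rather than a shell string avoids quoting problems if the certificate path contains spaces.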

Open the Neo4j browser

Now that you’ve confirmed Neo4j is running as a container in your Docker host, let’s open up Neo4j’s browser and test running PageRank on actors in a movie dataset.

Run the following command to open a browser window that navigates to Neo4j’s URL.

$ open $(echo "$(echo $DOCKER_HOST)" |
  sed 's/tcp:\/\//http:\/\//g' |
  sed 's/[0-9]\{4,\}/7474/g' |
  sed 's/"//g')

This command uses the $DOCKER_HOST environment variable to generate the URL of Neo4j’s browser. On Linux, this would be http://localhost:7474.
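The same transformation (swap the tcp scheme for http and replace the Docker port with 7474) can be written without regular expressions. This is a minimal Python sketch; the function name `neo4j_browser_url` is made up here, and the IP address in the usage note is only an example value.

```python
from urllib.parse import urlparse


def neo4j_browser_url(docker_host, port=7474):
    """Turn a DOCKER_HOST value into the URL of the Neo4j browser.

    Keeps only the host part of DOCKER_HOST, then applies the http
    scheme and Neo4j's HTTP port.
    """
    host = urlparse(docker_host).hostname
    if host is None:
        raise ValueError("unexpected DOCKER_HOST value: %r" % (docker_host,))
    return "http://%s:%d" % (host, port)
```

For example, `neo4j_browser_url("tcp://192.168.59.103:2375")` returns `"http://192.168.59.103:7474"`, which could then be handed to `webbrowser.open`.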

Import the Movie Graph

In the Neo4j console, type :play movies and press Enter. Follow the directions to import the movie sample dataset.

We’ll use this dataset to test the Spark integration by running PageRank on the “Celebrity Graph” of actors.

Now that the movie dataset has been imported, let’s create new relationships between actors who appeared together in the same movie. Copy and paste the following command into the Neo4j console and press CTRL+Enter to execute.

MATCH (p1:Person)-[:ACTED_IN]->(m:Movie),
      (p2:Person)-[:ACTED_IN]->(m)
CREATE (p1)-[:KNOWS]->(p2)

PageRank measures the probability of finding a node on the graph by randomly following links from one node to another node. It’s a measure of a node’s importance.
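To make the random-walk idea concrete, here is a minimal Python sketch of PageRank by power iteration on a tiny made-up graph. It is a textbook formulation, not necessarily the exact variant that Spark GraphX runs; the damping factor of 0.85 and the fixed iteration count are assumptions, and the node names are placeholders.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Estimate PageRank for a directed graph given as (source, target) pairs.

    Each score approximates the probability that a random walker, who
    follows an outgoing link with probability `damping` and otherwise
    jumps to a random node, is found on that node.
    """
    nodes = sorted({node for edge in edges for node in edge})
    out_links = {node: [] for node in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    rank = {node: 1.0 / len(nodes) for node in nodes}
    for _ in range(iterations):
        # Every node first receives its share of the random jumps.
        new_rank = {node: (1.0 - damping) / len(nodes) for node in nodes}
        for src in nodes:
            # A node without outgoing links spreads its rank over all nodes.
            targets = out_links[src] or nodes
            share = damping * rank[src] / len(targets)
            for dst in targets:
                new_rank[dst] += share
        rank = new_rank
    return rank


# Placeholder graph: A has worked with B, C and D, stored in both directions.
edges = [("A", "B"), ("B", "A"), ("A", "C"), ("C", "A"), ("A", "D"), ("D", "A")]
ranks = pagerank(edges)
```

In this toy graph the scores sum to 1 and A, the node everyone else links to, ends up with the highest rank.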

Calculate PageRank on the Celebrity Graph

Now that we’ve generated our “Celebrity Graph” by inferring the :KNOWS relationship between co-actors, we can run PageRank on all nodes connected by this new relationship.

In the Neo4j console, copy and paste the following command:

:GET /service/mazerunner/analysis/pagerank/KNOWS

and press enter.

If everything ran correctly, we should have a result of:

{
  "result": "success"
}

This means that the graph was exported to Spark for processing.
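The :GET command in the Neo4j browser sends an HTTP GET request to the server, so the same job could in principle be started from a script. The Python sketch below assumes the endpoint is reachable without authentication at the browser’s base URL, which this post does not confirm; the function names are made up for illustration.

```python
from urllib.request import urlopen


def analysis_url(base_url, algorithm, relationship):
    """Build the Mazerunner analysis URL for an algorithm and relationship type."""
    return "%s/service/mazerunner/analysis/%s/%s" % (
        base_url.rstrip("/"), algorithm, relationship)


def start_analysis(base_url, algorithm, relationship):
    """Send the GET request and return the response body as text.

    Needs a running Spark Neo4j cluster; assumes no authentication.
    """
    with urlopen(analysis_url(base_url, algorithm, relationship)) as response:
        return response.read().decode("utf-8")
```

With a base URL such as the one opened in the browser earlier, `analysis_url(base, "pagerank", "KNOWS")` yields the same path as the :GET command above.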

Monitor Spark’s Log Output

We can monitor the log output from Spark by returning to the terminal we used during setup. From that shell, run the following command:

$ docker run --env DOCKER_HOST=$DOCKER_HOST \
  --env DOCKER_TLS_VERIFY=$DOCKER_TLS_VERIFY \
  --env DOCKER_CERT_PATH=/docker/cert \
  -v $DOCKER_CERT_PATH:/docker/cert \
  -ti kbastani/spark-neo4j logs

You’ll now be able to monitor the real-time log output from the Spark Neo4j cluster as you submit new graph processing jobs.

Calculate Closeness Centrality

Return to the Neo4j browser and run the following command to calculate the Closeness Centrality of our “Celebrity Graph.”

:GET /service/mazerunner/analysis/closeness_centrality/KNOWS

If your log output from the terminal is visible, you’ll see a flurry of activity from Spark as it calculates this new metric. Don’t blink or you might miss it.

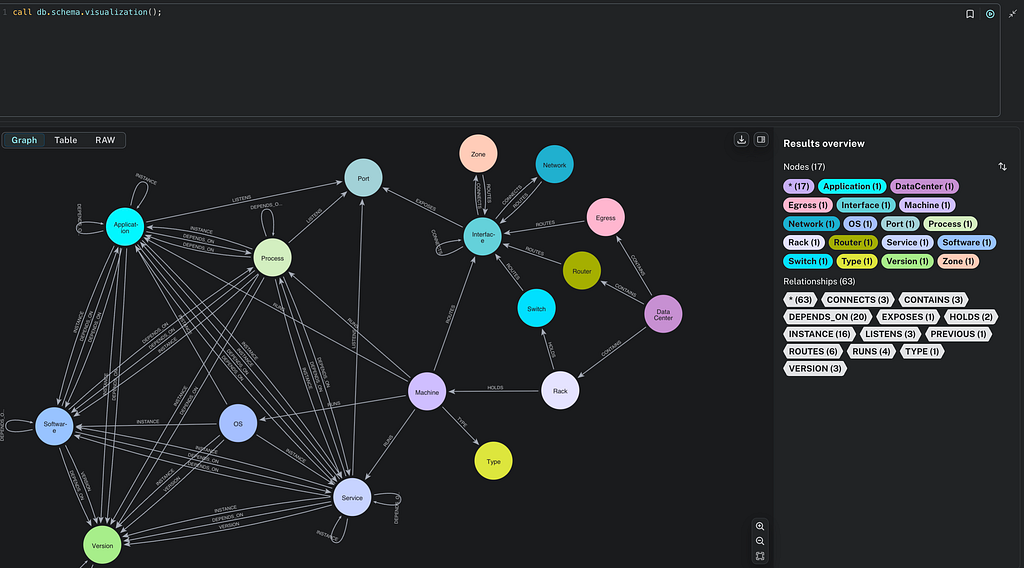
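This post does not spell out how closeness centrality is defined. One common definition scores a node by the number of nodes it can reach divided by the sum of its shortest-path distances to them, so nodes that are close to everyone score highest. The Python sketch below implements that definition with breadth-first search on a tiny made-up graph; the exact formula and normalization used by the Spark job may differ.

```python
from collections import deque


def closeness_centrality(edges):
    """Closeness per node: reachable node count / sum of shortest distances.

    `edges` is a list of directed (source, target) pairs. A node that
    cannot reach any other node gets a score of 0.0.
    """
    neighbors = {}
    for src, dst in edges:
        neighbors.setdefault(src, set()).add(dst)
        neighbors.setdefault(dst, set())

    scores = {}
    for start in neighbors:
        # Breadth-first search gives shortest hop counts from `start`.
        distance = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in neighbors[node]:
                if nxt not in distance:
                    distance[nxt] = distance[node] + 1
                    queue.append(nxt)
        total = sum(distance.values())
        reachable = len(distance) - 1
        scores[start] = reachable / total if total else 0.0
    return scores


# Placeholder graph: A has worked with B, C and D, stored in both directions.
edges = [("A", "B"), ("B", "A"), ("A", "C"), ("C", "A"), ("A", "D"), ("D", "A")]
closeness = closeness_centrality(edges)
```

Here A is one hop from every other node, while B, C and D need two hops to reach each other, so A receives the highest score.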

Querying the Metrics from Neo4j

You can now query the newly calculated metrics from Neo4j. In the Neo4j browser, run the following command:

MATCH (p:Person) WHERE has(p.pagerank) AND has(p.closeness_centrality) RETURN p.name, p.pagerank as pagerank, p.closeness_centrality ORDER BY pagerank DESC

The results show which of the celebrities have the most influence in Hollywood.

Go forth and process graphs.
