Machine Learning, Graphs and the Fake News Epidemic (Part 1)

Developer Relations

Regardless of your political affiliation or demographic, it’s difficult to deny the effects of the recent fake news boom.

The Pew Research Center conducted a survey that found that two in three adults in the U.S. believe that the upsurge of fake news has left Americans confused about even basic facts. They also found that a quarter of adults admitted to sharing fake news themselves, with nearly equal parts doing so knowingly and inadvertently.

Many have attributed this upsurge of falsified information to social media outlets, and in fact, an analysis by BuzzFeed News found that in the final three months leading up to the 2016 presidential election, the 20 top-performing fake news articles on Facebook resulted in more shares, reactions and comments than the top 20 articles from major news sources such as the New York Times.

Fake News Isn’t Just a Technology Problem

Since then, many have taken action, trying to mitigate the damage caused by fake news. While some look to artificial intelligence and machine learning as a way to automate the process of debunking fake articles, experts like Carnegie Mellon’s Dean Pomerleau and Facebook’s Yann LeCun stress that the problem at hand is too complex for technology alone.

Even the sub-problem of defining the criteria under which to classify news as “fake” creates ambiguity that requires human judgement. Pomerleau even went so far as to state that the ability to build such a machine “would mean AI has reached human-level intelligence,” which is currently not the case.

Additionally, it is difficult even for trained humans to distinguish intentionally misleading news from genuine mistakes, satirical pieces or even real articles that only sound absurd. (Try it for yourself via this quiz created by the BBC.)

Determining the Role of Machine Learning

So then, what is the role of machine learning and artificial intelligence in the battle against fake news? Pomerleau and others believe that rather than replacing human fact checkers, intelligent algorithms – which can recognize news as potentially fake – will be used alongside humans in order to flag and quarantine possible misinformation before it can spread en masse.

As an example, Pomerleau prompted the AI community with the Fake News Challenge, where participants were asked to create a model that could categorize the stance of an article’s body towards a specific claim as either agreeing, disagreeing, discussing or unrelated.

This, amongst several other tasks, is an essential part of the process that human fact checkers work through when investigating the validity of an article. The goal is to gather as much relevant information as possible about all sides of a claim, so as to make an informed decision about its credibility. Machine learning serves as a fast and scalable means of gathering this information.
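To make the four labels concrete, here is a deliberately naive sketch of a stance classifier. It is not one of the models built for the Fake News Challenge; it is a word-overlap heuristic, and the cue-word lists, the `classify_stance` function name and the `overlap_threshold` parameter are all assumptions made up for illustration.

```python
import re

# Hypothetical cue-word lists, chosen only for illustration.
REFUTING_WORDS = {"fake", "hoax", "false", "debunked", "denies", "not"}
HEDGING_WORDS = {"reportedly", "allegedly", "claims", "unconfirmed", "may"}


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def classify_stance(claim, body, overlap_threshold=0.5):
    """Return 'agree', 'disagree', 'discuss' or 'unrelated' (toy heuristic)."""
    claim_words = tokenize(claim)
    body_words = tokenize(body)
    if not claim_words:
        return "unrelated"
    # Fraction of the claim's words that also appear in the body.
    overlap = len(claim_words & body_words) / len(claim_words)
    if overlap < overlap_threshold:
        return "unrelated"
    if body_words & REFUTING_WORDS:
        return "disagree"
    if body_words & HEDGING_WORDS:
        return "discuss"
    return "agree"


claim = "The mayor resigned on Monday"
print(classify_stance(claim, "Reports that the mayor resigned on Monday are a hoax."))
print(classify_stance(claim, "The local team won the championship game."))
```

A real system would replace the cue-word rules with a trained model, but the input (a claim plus an article body) and the output (one of four labels) would keep the same shape.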

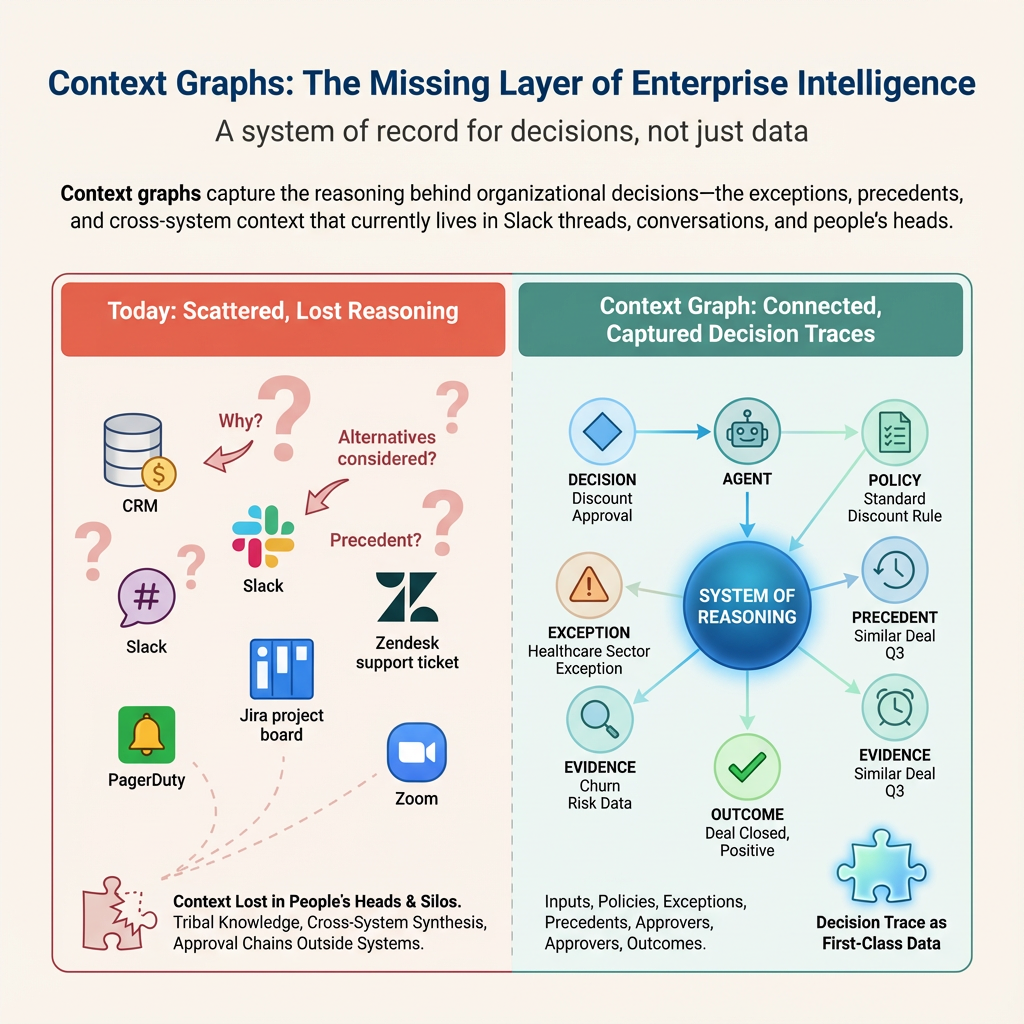

However, extracting this information is only half the battle. The ability to determine whether an article is real or fake requires more than just information about the article; it requires an understanding of the article’s position within the context of the greater news realm.

Thus, in order to reach any meaningful conclusions, we must also connect and structure our data in a way that empowers us to leverage underlying relationships within it.

Take Pomerleau’s stance detection task, for example: distinguishing the bias of an article towards a specific claim adds some clarity as to the article’s motives, but imagine if we could also, in real time, compare the article’s bias to the biases of thousands of other articles and even weigh and compare the credibility of the publications of articles which contradict it.

Such a tool would not only be useful for fact checkers, but also for researchers looking to explore all sides of a topic. With such a strong correlation between utility and connectivity, there is a clear advantage in building a database that best supports connected data.
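As a rough sketch of what that comparison might look like, the snippet below models publications, articles and claims as a tiny in-memory edge list. Everything in it is an assumption for illustration: the publication names, the credibility scores and the helper functions are made up, and a real system would run these lookups as traversals in a graph database rather than over Python lists.

```python
from collections import defaultdict

# Hypothetical credibility scores in [0, 1]; the publication names are made up.
PUBLICATION_CREDIBILITY = {
    "Daily Example": 0.9,
    "Example Tribune": 0.8,
    "Clickbait Central": 0.2,
}

# Edges of a tiny graph: publication -> article -> claim, labeled with a stance.
# Each tuple is (article_id, publication, claim_id, stance).
STANCE_EDGES = [
    ("a1", "Daily Example", "c1", "agree"),
    ("a2", "Example Tribune", "c1", "agree"),
    ("a3", "Clickbait Central", "c1", "disagree"),
    ("a4", "Clickbait Central", "c2", "agree"),
]


def weighted_stances(edges, credibility):
    """Sum publication credibility for every (claim, stance) pair."""
    totals = defaultdict(lambda: defaultdict(float))
    for _article, publication, claim, stance in edges:
        # Unknown publications contribute no weight.
        totals[claim][stance] += credibility.get(publication, 0.0)
    return totals


def contradicting_articles(edges, article_id):
    """Return ids of articles taking the opposite stance on the same claim."""
    opposite = {"agree": "disagree", "disagree": "agree"}
    by_id = {edge[0]: edge for edge in edges}
    _, _, claim, stance = by_id[article_id]
    return [
        other_id
        for other_id, _pub, other_claim, other_stance in edges
        if other_claim == claim and other_stance == opposite.get(stance)
    ]


totals = weighted_stances(STANCE_EDGES, PUBLICATION_CREDIBILITY)
print(dict(totals["c1"]))
print(contradicting_articles(STANCE_EDGES, "a3"))
```

Even in this toy form, the useful answers come from following relationships (which articles address the same claim, and who published them) rather than from inspecting any single article in isolation.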

How Graph Technology + Machine Learning Will Help

Graph database technology is the most powerful way of both recognizing and leveraging connections in large amounts of data.

In this article, John Swain discusses how he used a graph to detect major influencers on Twitter and the networks that they influence. Additionally, organizations like the International Consortium of Investigative Journalists (ICIJ) have been using graphs to detect fraud and corruption on a massive scale.

Google even uses a knowledge graph to enhance its search engine and map out the entire web. Is it really so bold to think that graph technology could also be the answer to detecting fake news?

By using several machine learning algorithms to build and feed a “news graph,” we can then use this as our basis for analyzing news from multiple perspectives, providing additional insight into the bias and credibility of both individual articles and the sources of those articles.

In next week’s post, I will lay down a foundation for such a solution and give an overview of both the structure of our news graph and the algorithms used to obtain the data which populates it.
