Polyglot Persistence for Microservices Using Spring Cloud and Neo4j

Editor’s Note: Last October at GraphConnect San Francisco, Kenny Bastani and Josh Long — Spring Developer Advocates at Pivotal — delivered this presentation on polyglot persistence for microservices using Spring Cloud and Neo4j.

For more videos from GraphConnect SF and to register for GraphConnect Europe, check out graphconnect.com.

Kenny Bastani: I’m a Spring Developer Advocate for Pivotal. Josh Long and I are writing a book, Cloud Native Java, about building Java applications for the cloud (on a platform like Cloud Foundry, with Spring Boot); it will come out in January of this year.

Josh Long: If you have any questions after reading or watching our talk, you can reach out to me on Twitter or by email.

Pivotal is a small Silicon Valley startup, spun off in 2013 from EMC Corporation and VMware. We have a lot of great open source technology at our disposal, including Spring Cloud, Tomcat, RabbitMQ and Redis. All of it serves our goal of helping customers deliver code to production as quickly, efficiently and safely as possible.

Agile vs. Waterfall Workflows

There are a few different reasons why some customers are held back from moving code into production. Consider the example of Netflix, which realized that in the typical IT organization — in which work flows from product management, to user experience, to developers, to QA and then on to the various administrators — you end up with a gated conveyance of work across different groups.

In lean manufacturing, you reduce inventory, which here means the work queued between workstations. A project may need only an aggregate of one or two weeks of actual work, yet it could take months to complete because it has to wait in queues. This obviously frustrates your ability to move quickly.

Modern developers don’t see this as very controversial because they are typically agile and move code quickly into production. Where developers are agile and the rest of the organization is waterfall, you end up with a trend called Water-Scrum-Fall.

Netflix famously realized this problem and moved to a structure called feature teams.

Instead of having different workstations, they put one team on one set of features and deliverables, for which that team was responsible in its entirety. If a feature is extremely large, it can be broken down into smaller pieces.

But this poses a new problem — if you spend too much time synchronizing, stabilizing and integrating code, you lose the benefits of this agile delivery process.

The Benefits of Microservices

This naturally leads people to microservices: small, singly focused features delivered as independently deployable services. Small teams work on small features, build them as microservices and automate everything through to production. Each team then delivers value and functionality on its own.

There is a great talk by Chris Richardson about the benefits of microservices. It draws on the scale cube from the book The Art of Scalability, written by Martin Abbott and Michael Fisher. They have used the scale cube when consulting with a number of organizations, including eBay.

The cube provides three axes on which to scale out your application. The axes are:

- Functional decomposition, the idea that you break apart an application into small functional blocks of microservices

- Horizontal duplication, which is load balancing across multiple identical nodes

- Data partitioning, the idea that you can shard data with lookup-oriented splits and use scalable data access technologies such as NoSQL databases

If you embrace all three, you get near-infinite scale.
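Two of the three axes can be sketched in a few lines of Java. This is an illustrative sketch, not code from the book or the talk. The functional-decomposition axis is simply the split into separate services, so the code shows only the other two: horizontal duplication as round-robin selection among identical replicas, and data partitioning as hash-based sharding. Replica names and the shard count are hypothetical.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative sketch of two scale-cube axes; replica names and shard counts are hypothetical.
final class ScaleCubeSketch {

    private ScaleCubeSketch() {}

    // Horizontal duplication: identical replicas share load in round-robin order.
    static final class RoundRobinBalancer {
        private final List<String> replicas;
        private final AtomicInteger next = new AtomicInteger();

        RoundRobinBalancer(List<String> replicas) {
            if (replicas.isEmpty()) {
                throw new IllegalArgumentException("need at least one replica");
            }
            this.replicas = List.copyOf(replicas);
        }

        String pick() {
            // floorMod keeps the index non-negative even after the counter overflows
            int i = Math.floorMod(next.getAndIncrement(), replicas.size());
            return replicas.get(i);
        }
    }

    // Data partitioning: a lookup on the key decides which shard owns the record.
    static int shardFor(String key, int shardCount) {
        return Math.floorMod(key.hashCode(), shardCount);
    }
}
```

Plain modulo hashing remaps most keys whenever the shard count changes. For that reason, production data stores often use consistent hashing or an explicit lookup table instead.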

One of the largest benefits of microservices is that when you break things out into such small services, you are forced to formalize the boundaries of these services and define the domain within each service.

This is because you no longer have distributed transactions, so you can’t keep different shards of the domain model consistent with one another. It also requires crisper, clearer definitions of the model’s terms.

Martin Fowler’s bliki entry on the subject illustrates this with a sales context and a support context that share parts of a domain model.

Both the Sales Context and the Support Context include customers and products, but those concepts mean very different things depending on the context that contains them. In the Sales Context, you try to incentivize a customer to buy, while in the Support Context, you try to keep somebody who is threatening to leave. Persuading vs. saving.

When there is only one entity-relationship diagram (ERD) or graph, the defining characteristics of both “customers” and “products” become muddy. It’s therefore useful to formally separate them into different domains and contexts. Eric Evans introduced this idea most effectively in his classic tome Domain-Driven Design.
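Here is a minimal Java sketch of the bounded-context idea, in which each context defines its own `Customer` type instead of sharing one. The field names and the business rules (`eligibleForUpsell`, `atRisk`) are hypothetical. They were chosen only to mirror the sales-versus-support example.

```java
import java.math.BigDecimal;
import java.util.List;

// Hypothetical sketch: two bounded contexts, each with its own notion of "Customer".
final class BoundedContexts {

    private BoundedContexts() {}

    // Sales context: a customer is someone we want to persuade to buy.
    static final class Sales {
        record Customer(String id, String name, BigDecimal lifetimeSpend) {}

        // Hypothetical rule: customers who have spent at least 1000 are offered upgrades
        static boolean eligibleForUpsell(Customer c) {
            return c.lifetimeSpend().compareTo(new BigDecimal("1000")) >= 0;
        }
    }

    // Support context: the same person is someone we want to keep.
    static final class Support {
        record Customer(String id, List<String> openTickets, boolean threatenedToCancel) {}

        // Hypothetical rule: a cancellation threat or many open tickets signals churn risk
        static boolean atRisk(Customer c) {
            return c.threatenedToCancel() || c.openTickets().size() > 2;
        }
    }
}
```

The two types share only an identifier. Each context can evolve its model without negotiating a single shared schema.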

This idea of a bounded context fits very naturally in the microservices world, because microservices give us the ability to choose our database technology. We have now separated the different domain models behind network boundaries and APIs, so services communicate with each other through those APIs, not through the database.

We can no longer reach behind another service and ask its database new questions; we need to communicate through its REST API. Once data access is divorced from the databases, the type of database behind the REST API no longer matters to its consumers. This provides the freedom to use the right database technology for a given purpose.
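The sketch below uses a hypothetical `RatingApi` to show why the storage behind an API stops mattering. Two implementations lay out the same ratings data differently, and the consumer cannot tell them apart. For brevity, the boundary is an in-process Java interface. In the architecture described here, it would be a REST endpoint, and each implementation would sit on a real database rather than in-memory maps.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical service API: consumers depend on this, never on the storage behind it.
interface RatingApi {
    void rate(String userId, String movieId, int stars);

    double averageFor(String movieId);
}

// One team might store ratings indexed by user (cheap "what did this user rate?" queries).
final class UserIndexedRatingService implements RatingApi {
    private final Map<String, Map<String, Integer>> byUser = new HashMap<>();

    public void rate(String userId, String movieId, int stars) {
        byUser.computeIfAbsent(userId, u -> new HashMap<>()).put(movieId, stars);
    }

    public double averageFor(String movieId) {
        // Full scan: this layout is poor at per-movie questions, but callers never see that
        return byUser.values().stream()
                .filter(m -> m.containsKey(movieId))
                .mapToInt(m -> m.get(movieId))
                .average()
                .orElse(0.0);
    }
}

// Another team might index by movie instead; the API contract is unchanged.
final class MovieIndexedRatingService implements RatingApi {
    private final Map<String, Map<String, Integer>> byMovie = new HashMap<>();

    public void rate(String userId, String movieId, int stars) {
        byMovie.computeIfAbsent(movieId, m -> new HashMap<>()).put(userId, stars);
    }

    public double averageFor(String movieId) {
        return byMovie.getOrDefault(movieId, Map.of()).values().stream()
                .mapToInt(Integer::intValue)
                .average()
                .orElse(0.0);
    }
}

// A consumer that only knows the API, so the storage behind it can change freely.
final class RecommendationClient {
    private final RatingApi ratings;

    RecommendationClient(RatingApi ratings) {
        this.ratings = ratings;
    }

    boolean isWellRated(String movieId) {
        return ratings.averageFor(movieId) >= 4.0;
    }
}
```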

This is a very big win. In traditional organizations we see a lot of “database gravity,” which is the tendency to make the database you’re currently using work for your data, even though it may not be the best tool for the job you’re trying to perform.

With this formal network boundary — this modularization — we no longer have any reason not to use the right tool for the job.

Polyglot Persistence

This gets to what we refer to as polyglot persistence — this idea that you can use the right technology for the right job.

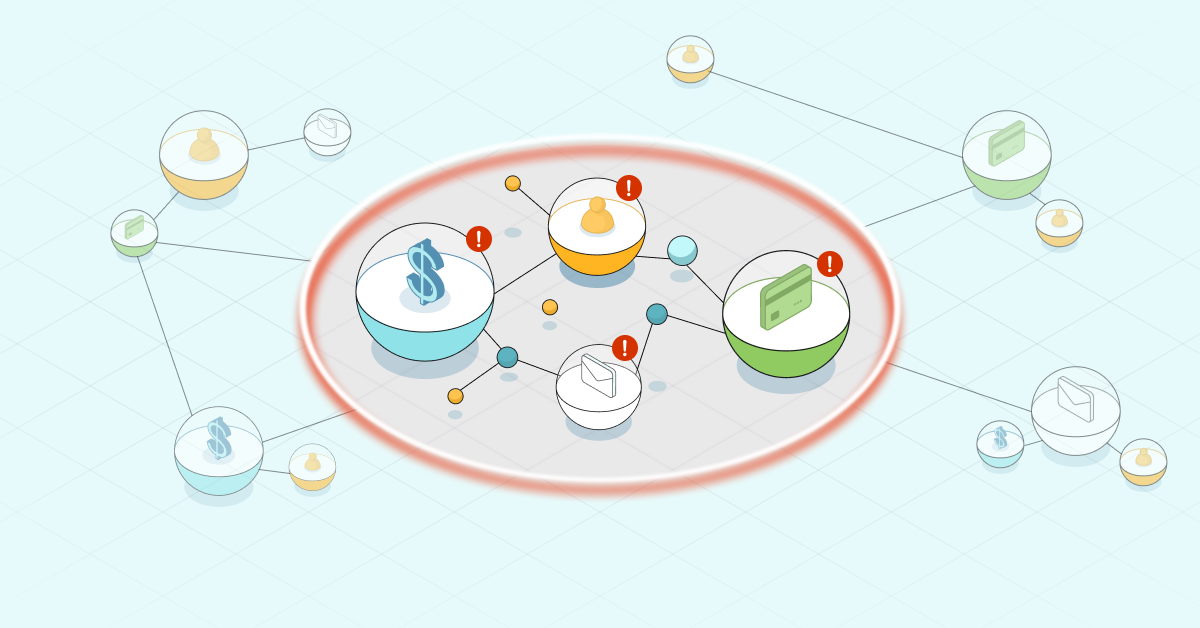

Kenny: With microservices, each team gets its own database, which can be different from the databases the other teams are using.

The above image refers to a demo available later in this post, which is running on Lattice on EC2. The user service is connected to MySQL. The recommendation service uses MongoDB. The rating service uses Neo4j, which is connected to a Hadoop cluster. Our analysis service runs Spark as a microservice within Spring Boot, and our movie service also uses MySQL.

Josh: To recap, in order to move code into production quickly, we need to be able to break off the smallest piece possible and essentially build an API around it. Another benefit of the microservices model is that it lets you adopt new technologies as they emerge, each exposed through an API.

If you’re using the JVM, we recommend Spring Boot, a convenient tool that helps you stand up APIs and applications. Spring Boot cofounder Phil Webb described it this way: Spring proper is a set of different tools that you can use in any application, and Spring Boot is the final product, the cake.

Kenny: The Spring framework includes your ingredients (like security, etc.). You can also choose the flavor of your cake, and how to decorate it.

Josh: Assembling all these features yourself can be tedious. Spring Boot provides the integration for a lot of other things above and beyond the Spring ecosystem.

Spring Boot has become increasingly popular with companies such as Netflix, Ticketmaster, Baidu and Alibaba. In July of 2015 alone, there were 1.6 million downloads. Spring Boot makes it very easy to pull together various parts of the Spring ecosystem, including Spring Data Neo4j.

Spring Data is an umbrella project under which there are modules that service different types of data access technology. Michael Hunger gave an excellent talk on Spring Data and Neo4j, which explains how Spring Data provides easy access to the Neo4j database from the Spring ecosystem, which is then easy to pull into the Spring Boot project.

Spring Boot and REST API

The ability to describe a REST API is very powerful.

Typical REST APIs take advantage of HTTP idioms and headers, but are lacking because they don’t provide any information to clients about what they can do and where to go when given a certain resource. To support that pattern, we have something called Hypermedia — or HATEOAS — which uses hypertext as the engine of application state.

To learn more about hypermedia, read REST in Practice by Dr. Jim Webber, Savas Parastatidis and Ian Robinson.

With hypermedia as the engine of application state, a response carries a payload with metadata. That metadata is expressed as links that tell you where you can go from the current payload.

As human users, we can see links on a website (Amazon.com, for example) and understand the context around them. We know that to move to the next stage of purchasing an item, we should click a certain link.

Taken together, these navigations create a protocol. On the other hand, machines need to be told what to do, which is done by providing links, or metadata. The machine gets a payload and looks at the links, which tell it what steps to follow to achieve a certain result. Spring Data REST makes this very simple.
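The following sketch models a resource as a payload plus named links. It renders the resource in a HAL-style layout with a `_links` object, similar to the format Spring Data REST produces by default. The sketch is hand-rolled rather than built on Spring HATEOAS, and the order resource and its link relations are hypothetical. It also does no JSON escaping.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical hypermedia resource: a payload plus named links (link relations).
final class HypermediaResource {
    private final Map<String, String> fields = new LinkedHashMap<>();
    private final Map<String, String> links = new LinkedHashMap<>();

    HypermediaResource field(String name, String value) {
        fields.put(name, value);
        return this;
    }

    HypermediaResource link(String rel, String href) {
        links.put(rel, href);
        return this;
    }

    // A machine client discovers its next step by relation name instead of hard-coding URLs.
    Optional<String> follow(String rel) {
        return Optional.ofNullable(links.get(rel));
    }

    // Renders HAL-style JSON; values are assumed to need no escaping.
    String toJson() {
        StringBuilder sb = new StringBuilder("{");
        fields.forEach((k, v) -> sb.append('"').append(k).append("\":\"").append(v).append("\","));
        sb.append("\"_links\":{");
        String sep = "";
        for (Map.Entry<String, String> e : links.entrySet()) {
            sb.append(sep).append('"').append(e.getKey())
              .append("\":{\"href\":\"").append(e.getValue()).append("\"}");
            sep = ",";
        }
        return sb.append("}}").toString();
    }
}
```

A client holding this order follows the `payment` relation rather than constructing `/orders/1/payment` itself. Once the order is paid, the server can simply omit that link, and the client learns that the step is no longer available.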

Watch the following demo to see how to describe a REST API using Spring Boot:

REST API Challenges in Distributed Systems

Building a number of different REST APIs in a distributed system is not easy. A number of concerns arise, along with the need to address non-functional requirements.

An example is service registration and discovery: the ability to find a service by its logical ID, decouple the availability of a service from the host it runs on, and report how many instances of that service are running. Some great technologies that help with this process are Apache ZooKeeper, etcd and Netflix’s Eureka.
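The core contract of a registry can be sketched in plain Java. This is a toy in the spirit of Eureka’s lease renewals, not Eureka’s API. Instances register under a logical service ID by sending heartbeats, and lookups return only instances whose lease has not lapsed. The class name, method names and lease length are assumptions.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

// Toy registry (hypothetical API): instances are found by logical ID, not by host.
final class ServiceRegistry {
    private final Duration leaseDuration;
    // serviceId -> (instance URL -> time of last heartbeat)
    private final Map<String, Map<String, Instant>> instances = new ConcurrentHashMap<>();

    ServiceRegistry(Duration leaseDuration) {
        this.leaseDuration = leaseDuration;
    }

    // Called when an instance starts, and periodically afterward to renew its lease.
    void heartbeat(String serviceId, String instanceUrl, Instant now) {
        instances.computeIfAbsent(serviceId, id -> new ConcurrentHashMap<>()).put(instanceUrl, now);
    }

    // Returns live instances only; an instance whose lease lapsed is silently dropped.
    List<String> lookup(String serviceId, Instant now) {
        Instant cutoff = now.minus(leaseDuration);
        return instances.getOrDefault(serviceId, Map.of()).entrySet().stream()
                .filter(e -> e.getValue().isAfter(cutoff))
                .map(Map.Entry::getKey)
                .sorted()
                .collect(Collectors.toList());
    }
}
```

The current time is passed in explicitly so the expiry logic is deterministic and easy to test. A real registry would also evict stale entries in the background and replicate its state across nodes.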

Configuration is another non-functional requirement. It involves keys and values such as database locators, credentials and other properties. You can decouple the configuration of a service from the process that is running that service.
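Here is a minimal sketch of that decoupling, using hypothetical `neo4j.*` keys. The same build resolves its settings from the environment first, then from a properties file, then from a default. The precedence and the `NEO4J_URI`-style environment naming loosely resemble Spring Boot’s externalized configuration, but this is not its implementation.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;
import java.util.Properties;

// Hypothetical layered config: environment variables override file properties, which override defaults.
final class ExternalConfig {
    private final Map<String, String> env;
    private final Properties fileProps;

    ExternalConfig(Map<String, String> env, Properties fileProps) {
        this.env = env;
        this.fileProps = fileProps;
    }

    // In production, pass the real process environment and a loaded properties file.
    static ExternalConfig fromProcess(Properties fileProps) {
        return new ExternalConfig(System.getenv(), fileProps);
    }

    String get(String key, String defaultValue) {
        // "neo4j.uri" is looked up in the environment as "NEO4J_URI"
        String envKey = key.replace('.', '_').replace('-', '_').toUpperCase(Locale.ROOT);
        return Optional.ofNullable(env.get(envKey))
                .or(() -> Optional.ofNullable(fileProps.getProperty(key)))
                .orElse(defaultValue);
    }
}
```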

With gateways, you need the ability to stand up a façade, or front door, into a system so that clients don’t have to compose calls to individual services manually. We use Netflix’s Zuul through Spring Cloud. Spring Cloud provides cohesive integration of different pieces (such as Apache ZooKeeper, Amazon Web Services and Consul) and supports common distributed-systems patterns.
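The routing half of a gateway can be sketched as a table from path prefixes to logical service IDs. The resolved ID would then be looked up through service discovery. This is not Zuul’s code, and the prefixes and service names are hypothetical.

```java
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Result of routing: which logical service to call, and the path to forward to it.
record RouteMatch(String serviceId, String forwardPath) {}

// Hypothetical gateway routing table: path prefix -> logical service ID.
final class GatewayRoutes {
    private final Map<String, String> routes = new LinkedHashMap<>();

    GatewayRoutes route(String prefix, String serviceId) {
        routes.put(prefix, serviceId);
        return this;
    }

    Optional<RouteMatch> resolve(String path) {
        return routes.entrySet().stream()
                // Match whole path segments so "/users" does not capture "/usersettings"
                .filter(e -> path.equals(e.getKey()) || path.startsWith(e.getKey() + "/"))
                // Prefer the most specific (longest) matching prefix
                .max(Comparator.comparingInt(e -> e.getKey().length()))
                .map(e -> {
                    String rest = path.substring(e.getKey().length());
                    return new RouteMatch(e.getValue(), rest.isEmpty() ? "/" : rest);
                });
    }
}
```

Clients see a single front door, and the services behind it can be added, moved or scaled without changing any client.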

Neo4j Deployment

Kenny: On the platform side, you can deploy Neo4j to a platform using Docker. Lattice, a cloud-native platform for deploying and scaling containers in production, is a platform-as-a-service that you can download and host yourself. It provides a cluster of containers in the cloud that are connected via Spring Cloud, and it allows for a polyglot persistence architecture. You can also choose your cloud provider.

In the cloud, everything scales elastically based on demand, and having your VMs scale up and down helps control costs. Each virtual machine can run multiple containers with our applications, one of which could be Neo4j.

Autoscaling is elastic scaling based on demand. It can look at a spike in traffic and predict whether it is going to need to scale up or down.
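A demand-based scaling rule might look like the following sketch. It sizes the fleet so each instance stays under a target request rate. It also projects the most recent trend one interval ahead, so a spike triggers scale-up before it peaks. The linear projection and all thresholds are assumptions for illustration, not how Lattice or any particular autoscaler works.

```java
// Hypothetical demand-based scaling rule; thresholds and the projection are illustrative only.
final class AutoscalePolicy {
    private final double requestsPerInstance;
    private final int minInstances;
    private final int maxInstances;

    AutoscalePolicy(double requestsPerInstance, int minInstances, int maxInstances) {
        if (requestsPerInstance <= 0 || minInstances < 1 || minInstances > maxInstances) {
            throw new IllegalArgumentException("invalid policy bounds");
        }
        this.requestsPerInstance = requestsPerInstance;
        this.minInstances = minInstances;
        this.maxInstances = maxInstances;
    }

    // previousRps and currentRps are request-rate samples taken one interval apart.
    int desiredInstances(double previousRps, double currentRps) {
        // Linear projection: assume the next interval changes by as much as the last one did
        double projected = Math.max(0.0, currentRps + (currentRps - previousRps));
        // Provision for the higher of current and projected load; scale-down follows current load
        double planFor = Math.max(currentRps, projected);
        int needed = (int) Math.ceil(planFor / requestsPerInstance);
        // Clamp to the configured fleet size
        return Math.max(minInstances, Math.min(maxInstances, needed));
    }
}
```

For example, with 100 requests per second per instance, a jump from 200 to 400 requests per second projects 600 and asks for 6 instances. The current load alone would only require 4.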

For this to work correctly with Docker containers, you need composition that connects these pieces together. Service discovery, which Spring Cloud provides with Spring Boot, lets applications register themselves as their containers spin up.

Watch the demo below to learn more about polyglot persistence for microservices using Spring Cloud and Neo4j. Prior to the demo, Kenny created a cluster using Lattice; you can learn how to do that here.

Inspired by Kenny and Josh’s talk? Register for GraphConnect Europe on April 26, 2016, for more industry-leading presentations and workshops on the evolving world of graph database technology.