Unifying LLMs & Knowledge Graphs for GenAI: Use Cases & Best Practices

Senior Developer Marketing Manager


The development of Generative AI tools and technologies has changed the AI landscape forever.

Just a few years ago, intelligent machines that understand and respond to you were the stuff of science fiction, something you could only find in Star Trek. Today, large language models (LLMs) like the ones behind ChatGPT are at our fingertips, waiting for our prompts. The future is now.

Knowledge graphs are another trending technology, known for complex analysis, structured representation, and semantic querying. They complement LLMs by supplying explicit, structured facts that make AI output more accurate and reliable.

This blog post examines how knowledge graphs and LLMs can be used together, covering top use cases, examples, and advice on getting started with this powerful combination.

What Is a Large Language Model (LLM)?

Creating a company chatbot in early 2022 often required assembling a team of machine learning experts to build and train a custom AI model from scratch. With the introduction of LLMs, the barrier to entry has dropped significantly. Now you can perform advanced AI tasks simply by prompting ChatGPT.

An LLM, such as OpenAI’s GPT series, is a machine learning model that performs natural language processing on a massive scale. LLMs are a crucial part of Generative AI, capable of understanding and generating human-like text.

LLMs are trained on diverse and extensive text corpora that span a wide spectrum of human knowledge and communication.

The real power of LLMs lies in their deep learning architecture, often based on transformer models, which excel at processing sequential data such as text. This makes LLMs adept at understanding context and nuance in language, enabling applications like content creation, text summarization, language translation, chatbots, and technical assistance.
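To make "understanding context" a little more concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation inside a transformer. It is heavily simplified, under stated assumptions: a single attention head, no learned query/key/value projection matrices (the raw embeddings are reused for all three), and hand-picked 2-dimensional toy embeddings. Each token's output is a weighted average of every token's vector, which is how context from the rest of the sequence flows into each position.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention, one head, no learned projections.

    Each output vector is a weighted average of all value vectors, where the
    weights come from how strongly the query matches each key.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors using the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy example (assumption): three "tokens" with hand-picked 2-d embeddings,
# reused as queries, keys, and values.
tokens = ["bank", "river", "money"]
embeddings = [[1.0, 0.5], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(embeddings, embeddings, embeddings)
for tok, w in zip(tokens, weights):
    print(tok, "attends to", dict(zip(tokens, (round(x, 2) for x in w))))
```

In a real transformer, learned projections, many heads, and many stacked layers let the model decide which kinds of context matter; the weighted-average mechanism stays the same.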

However, managing bias and hallucinations (confident but false or fabricated output) remains a sticking point for those who develop and deploy these models.

What Is a Knowledge Graph?

A knowledge graph is a data structure that represents information as a network of interlinked entities: entities are nodes, and the relationships between them are edges. It builds on a long history of research in knowledge representation, an area of artificial intelligence.

Enriching a knowledge graph with an ontology, which describes the types of entities and relationships it contains, is common practice. This semantic layer describes the contents of the graph consistently and in one place, providing crucial context that makes the data easier to understand. (Learn more in The Developer’s Guide: How to Build a Knowledge Graph.)
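The sketch below illustrates entities, relationships, and an ontology in plain Python. It is not how a graph database actually stores data; the `ONTOLOGY` dictionary, the entity names, and the `KnowledgeGraph` class are hypothetical, chosen only to show how an ontology constrains which relationships may connect which types of entities.

```python
from dataclasses import dataclass, field

# Hypothetical ontology: allowed entity types and, for each relationship
# type, the (source type, target type) pair it may connect.
ONTOLOGY = {
    "entity_types": {"Person", "Company", "Product"},
    "relationships": {
        "WORKS_FOR": ("Person", "Company"),
        "MAKES": ("Company", "Product"),
    },
}


@dataclass
class KnowledgeGraph:
    ontology: dict
    entities: dict = field(default_factory=dict)  # entity name -> entity type
    triples: list = field(default_factory=list)   # (subject, relation, object)

    def add_entity(self, name, etype):
        if etype not in self.ontology["entity_types"]:
            raise ValueError(f"Unknown entity type: {etype}")
        self.entities[name] = etype

    def add_relation(self, subj, rel, obj):
        # The ontology says which entity types a relationship may link.
        expected = self.ontology["relationships"].get(rel)
        if expected is None:
            raise ValueError(f"Unknown relationship type: {rel}")
        actual = (self.entities.get(subj), self.entities.get(obj))
        if actual != expected:
            raise ValueError(f"{rel} expects {expected}, got {actual}")
        self.triples.append((subj, rel, obj))

    def neighbors(self, name):
        """(relation, other entity) pairs touching `name`, outgoing then incoming."""
        outgoing = [(r, o) for s, r, o in self.triples if s == name]
        incoming = [(r, s) for s, r, o in self.triples if o == name]
        return outgoing + incoming


kg = KnowledgeGraph(ONTOLOGY)
kg.add_entity("Alice", "Person")
kg.add_entity("Acme", "Company")
kg.add_entity("Widget", "Product")
kg.add_relation("Alice", "WORKS_FOR", "Acme")
kg.add_relation("Acme", "MAKES", "Widget")
print(kg.neighbors("Acme"))  # [('MAKES', 'Widget'), ('WORKS_FOR', 'Alice')]
```

Because the ontology rejects a triple such as `("Widget", "WORKS_FOR", "Acme")`, every relationship in the graph carries a predictable meaning, which is the kind of context an LLM can later rely on.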

Knowledge Graph + LLM: Retrieval Augmented Generation

LLMs simplify information retrieval from knowledge graphs. They give users friendly access to complex data without requiring a data expert. Instead of querying a database with a programming or query language, anyone can ask questions directly and get summaries back.
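As an illustration of how natural language questions can stand in for hand-written queries, here is a minimal sketch of a schema-aware prompt that asks an LLM to translate a question into Cypher, plus a crude guard that rejects generated queries containing write clauses. The schema, the prompt wording, and the helper names are assumptions; the LLM call itself is left out because it depends on your provider, and the regex guard is a rough heuristic rather than a real Cypher parser.

```python
import re

# Hypothetical schema summary; in practice you would derive it from your database.
SCHEMA = (
    "Node labels: Person(name), Company(name), Product(name)\n"
    "Relationships: (:Person)-[:WORKS_FOR]->(:Company), (:Company)-[:MAKES]->(:Product)"
)

# Crude heuristic: any of these keywords suggests the query modifies the graph.
WRITE_CLAUSES = re.compile(
    r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b", re.IGNORECASE
)


def build_text_to_cypher_prompt(schema: str, question: str) -> str:
    """Assemble a prompt asking an LLM to translate a question into Cypher."""
    return (
        "You translate questions into Cypher queries for a Neo4j database.\n"
        "Use only the labels, properties, and relationships in this schema:\n"
        f"{schema}\n\n"
        "Return only the Cypher query, with no explanation.\n"
        f"Question: {question}\n"
        "Cypher:"
    )


def is_read_only(cypher: str) -> bool:
    """Reject generated queries that appear to contain write clauses."""
    return WRITE_CLAUSES.search(cypher) is None


prompt = build_text_to_cypher_prompt(SCHEMA, "Who works for Acme?")
# Send `prompt` to the LLM of your choice; suppose it returns this query:
generated = "MATCH (p:Person)-[:WORKS_FOR]->(:Company {name: 'Acme'}) RETURN p.name"
print(is_read_only(generated))  # True
```

Putting the schema in the prompt keeps the model from inventing labels or relationships, and checking the result before running it is a cheap safety net when end users, not developers, are asking the questions.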

Unlike vector databases, knowledge graphs can represent both structured and unstructured data, which makes them a reliable option for grounding LLMs. Supplying an LLM with retrieved external data when it generates a response is called retrieval augmented generation (RAG).

First, relevant information is retrieved from the knowledge graph using vector and semantic search. Then, the LLM’s prompt is augmented with that contextual data, so the model generates its response from it. This GraphRAG process produces more precise, accurate, and contextually relevant output and helps prevent false information, also known as LLM hallucination.
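The retrieve-then-augment flow can be sketched in a few dozen lines of Python. This is a toy, under loud assumptions: the node embeddings are made-up 3-dimensional vectors (a real system would use an embedding model and a vector index), semantic search is approximated by a one-hop walk over the graph's relationships, and the final generation step is omitted because it is just a call to whichever LLM you use.

```python
import math

# Toy graph (assumption): node -> (made-up embedding, text description).
NODES = {
    "Neo4j": ([0.9, 0.1, 0.0], "Neo4j is a graph database."),
    "Cypher": ([0.8, 0.3, 0.1], "Cypher is the query language of Neo4j."),
    "Bedrock": ([0.1, 0.9, 0.2], "Amazon Bedrock offers foundation models."),
}
EDGES = [
    ("Neo4j", "QUERIED_WITH", "Cypher"),
    ("Neo4j", "INTEGRATES_WITH", "Bedrock"),
]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec, k=1):
    """Step 1a: vector search for the k nodes most similar to the query."""
    ranked = sorted(NODES, key=lambda n: cosine(query_vec, NODES[n][0]), reverse=True)
    return ranked[:k]


def expand(seeds):
    """Step 1b: follow relationships one hop out to collect connected facts."""
    return [f"({s})-[:{rel}]->({o})" for s, rel, o in EDGES if s in seeds or o in seeds]


def build_prompt(question, query_vec):
    """Step 2: augment the prompt with retrieved node text and graph facts."""
    seeds = retrieve(query_vec)
    context = [NODES[n][1] for n in seeds] + expand(seeds)
    return "Answer using only this context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"


# Pretend this vector is the embedding of the question below.
query_vec = [0.7, 0.4, 0.1]
prompt = build_prompt("What language is used to query Neo4j?", query_vec)
print(prompt)
# Step 3 (not shown): send `prompt` to an LLM to generate the grounded answer.
```

The graph expansion is what distinguishes this from plain vector RAG: the retrieved node brings its explicit relationships along, so the LLM sees how facts connect rather than isolated text chunks.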

Generative AI Use Cases: Top LLM + Knowledge Graph Sessions

If you’re curious to understand what all this looks like in practice, I’ve curated my top five favorite sessions from Neo4j’s annual developer and data science conference, NODES 2023. Feel free to poke around these generative AI examples and applications, and let me know what you think. These sessions are highly technical, covering best practices for combining LLMs with knowledge graphs.

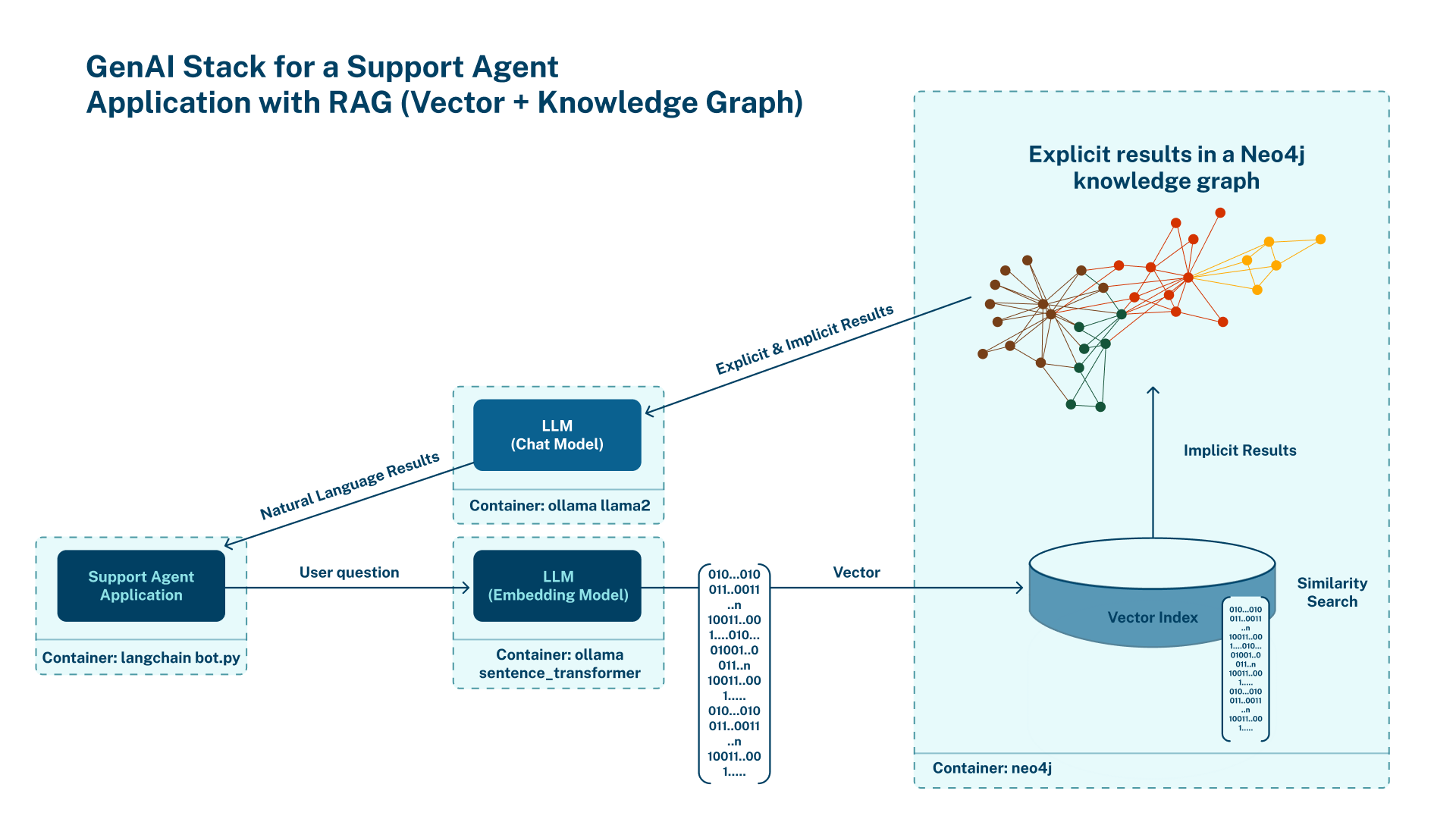

1. Build Apps With the New GenAI Stack from Docker, LangChain, Ollama, and Neo4j

Oskar and Harrison go behind the scenes of the GenAI Stack’s containers, showing how they work together and how to implement Python apps with LangChain, a knowledge graph, and Streamlit. They build and run their own GenAI application using data from Stack Overflow.

2. Using LLMs to Convert Unstructured Data to Knowledge Graphs

Noah demonstrates how LLMs can perform entity extraction, semantic relationship recognition, and context inference to generate interconnected knowledge graphs. It’s great inspiration for harnessing LLMs on your own unstructured data.
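A minimal sketch of such an extraction loop, assuming the LLM is asked to return triples as JSON: the code builds an extraction prompt, parses a hypothetical LLM response, and turns each triple into a parameterized Cypher `MERGE` statement. The `Entity` label, the prompt text, and the sample response are illustrative, not taken from the session. Relationship types can't be supplied as Cypher parameters, so the sketch sanitizes them before interpolating them into the query.

```python
import json
import re

NON_IDENT = re.compile(r"[^A-Za-z0-9]+")


def build_extraction_prompt(text: str) -> str:
    """Ask the LLM for (subject, relation, object) triples as JSON."""
    return (
        "Extract entities and the relationships between them from the text below.\n"
        'Respond only with JSON of the form {"triples": '
        '[{"subject": "...", "relation": "...", "object": "..."}]}.\n\n'
        f"Text: {text}"
    )


def to_cypher(llm_response: str):
    """Turn the LLM's JSON triples into (query, params) pairs using MERGE."""
    data = json.loads(llm_response)
    statements = []
    for t in data.get("triples", []):
        # Relationship types cannot be parameters, so normalize the type to an
        # UPPER_SNAKE_CASE identifier and wrap it in backticks.
        rel = NON_IDENT.sub("_", t["relation"]).strip("_").upper()
        if not rel:
            continue  # skip triples whose relation is empty after sanitizing
        query = (
            "MERGE (a:Entity {name: $subject}) "
            "MERGE (b:Entity {name: $object}) "
            f"MERGE (a)-[:`{rel}`]->(b)"
        )
        statements.append((query, {"subject": t["subject"], "object": t["object"]}))
    return statements


prompt = build_extraction_prompt("Alice works for Acme, which makes widgets.")
# Hypothetical LLM response to the prompt above:
response = (
    '{"triples": [{"subject": "Alice", "relation": "works for", "object": "Acme"}, '
    '{"subject": "Acme", "relation": "makes", "object": "widgets"}]}'
)
for query, params in to_cypher(response):
    print(query, params)
```

Each `(query, params)` pair could then be executed, for example with `session.run(query, params)` in the official Neo4j Python driver. Using `MERGE` rather than `CREATE` keeps repeated extractions from duplicating nodes and relationships.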

3. Create Graph Dashboards With LLM-Powered Natural Language Queries

Niels demonstrates how to use NeoDash and OpenAI to build Neo4j dashboards from natural language queries. Using a brand new plugin, you can visualize Neo4j data in tables, graphs, maps, and more, without writing any Cypher!

4. Cyberattack Countermeasures Generation With LLMs & Knowledge Graphs

Gal showcases an innovative solution that automatically identifies concrete countermeasures from vulnerability descriptions and generates comprehensive step-by-step guides. It takes a hybrid approach, combining generative LLMs with knowledge graphs powered by NeoSemantics.

5. Fine-Tuning an Open-Source LLM for Text-to-Cypher Translation

Jonas demonstrates how to fine-tune a large language model to generate Cypher statements from natural language input, allowing users to interact with Neo4j databases intuitively and without knowledge of Cypher.
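Fine-tuning starts with a dataset of question and Cypher pairs. The sketch below writes such pairs as JSON Lines in a chat-style layout (system, user, and assistant messages), which many fine-tuning tools accept; the exact format your training framework expects may differ, so treat the record shape, the schema string, and the example pairs as assumptions.

```python
import json

# Hypothetical schema and question/Cypher pairs; a real dataset needs many more,
# ideally validated by running each query against the database.
SCHEMA = "(:Person {name})-[:WORKS_FOR]->(:Company {name})"
PAIRS = [
    ("Who works for Acme?",
     "MATCH (p:Person)-[:WORKS_FOR]->(c:Company {name: 'Acme'}) RETURN p.name"),
    ("How many people work for each company?",
     "MATCH (p:Person)-[:WORKS_FOR]->(c:Company) RETURN c.name, count(p) AS employees"),
]


def to_record(schema, question, cypher):
    """One training example: schema in the system message, Cypher as the target."""
    return {
        "messages": [
            {"role": "system", "content": "Translate questions into Cypher. Schema: " + schema},
            {"role": "user", "content": question},
            {"role": "assistant", "content": cypher},
        ]
    }


def to_jsonl(schema, pairs):
    """Serialize every pair as one JSON object per line."""
    return "\n".join(json.dumps(to_record(schema, q, c)) for q, c in pairs)


dataset = to_jsonl(SCHEMA, PAIRS)
print(dataset.splitlines()[0])
```

Including the schema in every example is meant to teach the model to condition its queries on whatever schema it is given, rather than memorizing a single database.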

And if these are not enough, check out the full NODES 2023 playlist with 100+ technical videos on knowledge graphs and AI.

Read our other blog post to learn how Neo4j and LLMs work together.

Getting Started With Neo4j & LLMs

Free Course: Neo4j & LLM Fundamentals

We’re offering a free GraphAcademy course on how to integrate a Neo4j knowledge graph with Generative AI models using LangChain.

Neo4j + AWS for GenAI

Neo4j has officially partnered with AWS to solve GenAI challenges like LLM hallucination. Neo4j AuraDB Pro now integrates seamlessly with Amazon Bedrock, one of the simplest and most powerful ways to build and scale GenAI apps using foundation models.

The Developer’s Guide: How to Build a Knowledge Graph

This developer’s guide walks you through everything you need to know to start building a knowledge graph with confidence.