Real Examples of Why We Need Context for Responsible AI

Graph Analytics & AI Program Director

8 min read

This was not on my radar 12 months ago. My good friend and smart colleague, Leena Bengani, suggested we look a little more closely into AI standards and tools. Part of the impetus was a White House request of the National Institute for Standards and Tools to engage with the AI community at large and consider what tools and standards NIST should develop.

My first thought was, “Oh my goodness, this just big and hairy and a lightning rod, and I don’t want to do this.” But Leena’s instincts are always on point, and she really spurred us to consider what Neo4j can do as good corporate and global citizens to help guide the AI community.

That’s when I did a blog series on Responsible AI and am now presenting this material at events, while gathering insights and feedback from the AI community.

In this post, I’ll cover AI myths, biased AI, unknowable AI, and inappropriate AI, each step of the way illustrating the case for why context is necessary for achieving responsible AI.

The presentation these posts are based on was also recorded (posted just above), which some additional information.

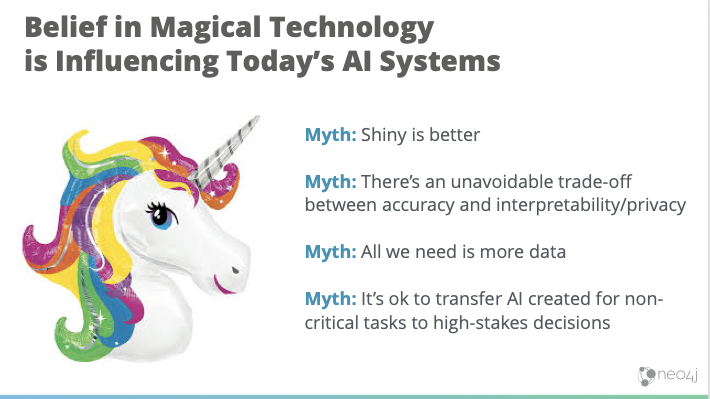

Technology Myths About AI

There are a few myths negatively impacting the way we implement AI today.

The first is that “new is better.” It’s very human for us to want to see new technology and say, “This is going to solve all my problems.” If it’s shiny, people get really excited, they want to do news articles about or take classes or fund new technology in the hope of a silver bullet. Unfortunately, that means we often overlook things that currently exist, or could be expanded on, that may even be better than this newfangled thing.

If find this second myth really surprising, that there’s an unavoidable trade-off between accuracy and interpretability or privacy. But that’s actually not true. If you carefully select and craft your models, then often you don’t have to give up goals like interpretability for accuracy. The assumption that this trade-off is inevitable keeps people from pushing for better ways to create AI.

The other myth that’s interesting is “all I need is more data.” I don’t know how many times I’ve heard from data scientists, “If I just had more data, I could be accurate and things would be perfect.” But if you think about things like bias in data; if I’m just adding more biased data, then I’m not really fixing anything. I still have a problem. So it’s not just about volume, but also about the quality and dimensions of data as well.

The fourth myth I see impacting us now is the idea that we can take something that was originally developed for, let’s say, a movie recommendation and use it elsewhere.

If I’m recommending movies to somebody and I have a 5 percent error rate, okay, maybe I can live with that. And maybe because I have cost-sensitive issues, that’s okay. But if I’m trying to predict patient care or patient outcomes, 5 percent may not be okay. Also, if I’m in a field like healthcare, I want to know why a decision was made. I really don’t care why a decision was made regarding a movie recommendation, but I do care when it comes to a diagnosis or treatment plan.

Biased AI

I would say magical thinking about AI is causing us to see a lot of uncomfortable results. One example that I think most of us are probably familiar with, is biased AI.

For example, Amazon had a recruiting tool they shut down after realizing it was biased against women. They hadn’t intended for that. They actually would like to see more balance, but they were training their AI recruiting based on current employees.

They didn’t explicitly know or look at a gender, but it turns out when you look at LinkedIn profiles and resumes of men and women, men and women have a tendency to choose different sports, social activities or clubs, and adjectives to describe our accomplishments. So the AI was picking up on these subtle differences and trying to find recruits that matched what they internally identified as successful.

So yes, bias in data can unintentionally leak into our results.

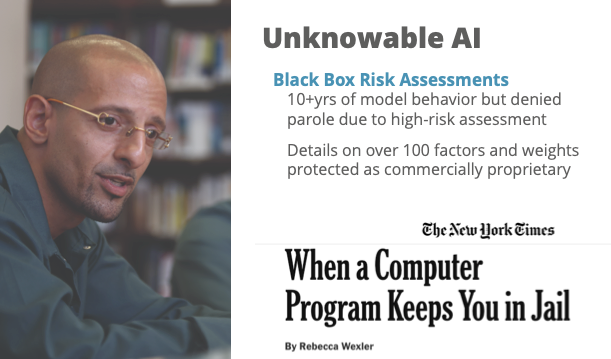

Unknowable AI

Beyond bias, there’s also another concerning area: unknowable AI.

Many of us have heard about the idea of the Black Box. It’s very hard to explain what happens, but if it’s accurate, who cares. So there’s software called COMPAS, which is used by multiple cities around the U.S. and Canada, that does risk assessments.

In this particular example, Glenn Rodriguez, who’s in the picture here, was sentenced after participating in a robbery when he was 16. He spent 10 years being a model inmate. He did volunteer programs. He tried to mentor other people on how to do better. And when it came time to go before the hearing to learn the fate of his parole, everybody, including himself and his lawyers and even the parole board, was in favor of initially granting Rodriguez parole.

But they denied him parole, stating that his COMPAS assessment rendered a high-risk score. They were surprised, but they weren’t willing to go against the software.

And you can imagine, of course, Rodriguez was disappointed. The lawyer said, okay well, tell us how this assessment happened. Tell me how you came up with this score. There’s over 100 different factors that go into how the software assessed risk, as well as lots of different weights.

Turns out, the assessment process was deemed protected because of commercial proprietary, so the company wasn’t required to share that information. So imagine how you’d feel if you’re denied something – insurance, credit, parole – and not even have the right to understand how that score came up.

On a third subsequent parole hearing, he eventually did get parole. After looking at the data, and comparing his scores and to others, there was one question in particular that stood out. It was a subjective question, but one that would’ve changed his risk score significantly. They suspected that’s what it was, but they can’t see the data so they can never know.

So, when I think about unknowable AI, there’s really just two different types of unknowable situations. There’s the software type that’s really complex and difficult to understand. And then there’s the type that we’re not allowed to look at. Both of these types are a problem.

Inappropriate AI

The third type of concern that I come across a lot is inappropriate AI. This is where AI is working exactly like it was intended to but maybe the outcomes are questionable. In other words, just because we can, doesn’t mean we should. That’s the essence of inappropriate AI.

The Chinese have implemented a social credit system that will credit both your financial credit worthiness and also your social worthiness. The system takes into account things you might normally consider, like were you late paying a bill.

Then there’s also things that you normally wouldn’t consider (at least in this country) that are more behavioral, like jaywalking. They use facial recognition to see and track if you jaywalk. They can do the same if you smoke in a non-smoking area, which would negatively affect your social score.

Your score impacts everything, from whether you get a mortgage to whether you can get high-speed internet. So there’s a lot of ramifications to personal rights, and they believe by next year, there will be 1.4 billion people who live in China and have a social credit score.

For me, this is an example of “can but I don’t believe we should.”

Context for Responsible AI

The idea of bias in data and algorithms, unknowable AI, and inappropriate AI, has really set in my bones that we have a responsibility. Creators of artificial intelligence and those that implement systems that rely on AI, have a duty to guide how they’re developed and deployed, and we have a responsibility to do it in a way that aligns with our social values.

Now, responsible AI may be different across countries and cultures. In the U.S., I think about things like accountability, which requires some sort of transparency. I think about fairness, which is a lot about appropriateness.

I think about public trust, which has two components: Is the system operating as intended, and is it operating as expected by the end users?

I’d like to do a double click into why AI and context together make sense. But first, to just clarify when I talk about AI and AI systems, I’m talking about the what. The processes that have been developed to have some kind of action similar to the way a human thinks, and that is very probabilistic. So if I think about how I make a decision, I’m never 100% sure. I’m hopeful it’s a good solution, but it’s very probabilistic. We never know for sure.

So we have the what (AI systems), and then we have the how, which is machine learning. Machine learning is about the algorithms trying to iterate to optimize a solution based on a set of training or examples its been given. But I don’t have to tell it how to do that.

Now, machine learning actually takes quite a bit of data, and it’s really not surprising because think about how we make decisions.

We make tens of thousands of decisions every day. You do that by looking around your surrounding circumstances. You grab information and likely don’t realize even know how much or what all you’re taking in. You mentally make connections, and then you try to make the best decision you can with the context and the information you have at the time. Then you move on.

It shouldn’t be any different for AI. AI requires that same type of information – the same context and connections – so it can learned based on the context. With all that adjacent information, AI systems can make necessary adjustments, just as we do, as circumstances change. And circumstances always change, don’t they?

Conclusion

Graphs are actually the best, fastest and most reliable way to add context to whatever you’re doing. It’s a natural fit.

AI without context is really limited, and there’s a funny example: These four little words, “We saw her duck.” You can interpret this many different ways. What does that really mean? Does it mean I ducked? (Hopefully nobody threw anything at me.) But maybe it means that we saw a friend’s pet bird. It might mean somebody named “We” saw a pet bird. Or maybe even that we went over to a friends house for a duck dinner and we had to cut the duck in half. (That’s not so pleasant.) There’s probably some other interpretations as well.

Without context, AI is narrowly focused on exactly what we’ve trained it to focus on, and the result is subpar predictions. You also have less transparency – of course, if you can’t explain how a decision was made, then you can’t hold people accountable and you’re not going to trust those decisions.

In next week’s installment of this two-part series on responsible AI, I’ll take a look at what context consists of when considering AI.

Read the white paper, Artificial Intelligence & Graph Technology: Enhancing AI with Context & Connections