Constructing Knowledge Graphs From Unstructured Text Using LLMs

Software Engineer, Neo4j

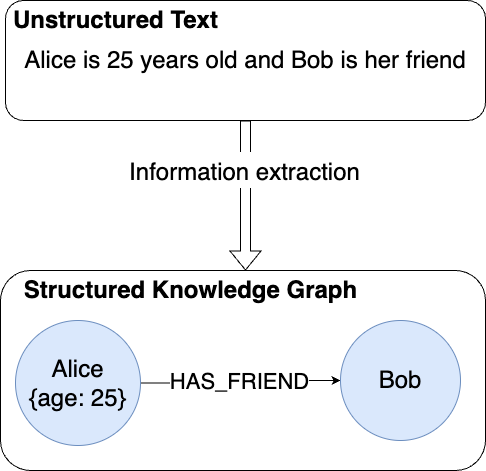

Unstructured data, like text documents and web pages, holds a wealth of valuable information. The challenge is figuring out how to tap into those insights and connect the dots across disparate sources.

Knowledge graphs turn this unstructured data into a structured representation. They map out the key entities, relationships, and patterns, enabling advanced semantic analysis, reasoning, and inference.

But how do you go from a pile of unstructured text documents to an organized knowledge graph? Previously, it would have required time-consuming manual work, but large language models (LLMs) have made it possible to automate most of this process.

In this blog, we’ll explore a simple yet powerful approach to building knowledge graphs from unstructured data using LLMs in 3 steps:

- Extracting nodes and edges from the text using LLMs.

- Performing entity disambiguation to merge duplicate entities.

- Importing the data into Neo4j to store and analyze the knowledge graph.

The code for this project can be found on GitHub.

1. Extracting Nodes and Relationships

We take the simplest possible approach, passing the input data to the LLM and letting it decide which nodes and relationships to extract. We ask the LLM to return the extracted entities in a specific format, including a name, a type, and properties. This allows us to extract nodes and edges from the input text.
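
As a rough illustration of what "a specific format" can look like, here is a minimal sketch that assumes the LLM is asked to answer in JSON. The prompt wording, the JSON layout, and the names `Node`, `Relationship`, and `parse_extraction` are all assumptions made for this example, not the format used in the project.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    type: str
    properties: dict = field(default_factory=dict)


@dataclass
class Relationship:
    source: str
    type: str
    target: str
    properties: dict = field(default_factory=dict)


# Hypothetical prompt; the wording used in the actual project may differ.
EXTRACTION_PROMPT = (
    "Extract the entities and relationships from the text below. "
    'Answer with JSON of the form {"nodes": [{"name", "type", "properties"}], '
    '"relationships": [{"source", "type", "target", "properties"}]}.\n\n'
    "Text:\n"
)


def parse_extraction(raw: str) -> tuple[list[Node], list[Relationship]]:
    """Turn the model's JSON answer into Node and Relationship objects."""
    data = json.loads(raw)
    nodes = [
        Node(n["name"], n["type"], n.get("properties", {}))
        for n in data.get("nodes", [])
    ]
    rels = [
        Relationship(r["source"], r["type"], r["target"], r.get("properties", {}))
        for r in data.get("relationships", [])
    ]
    return nodes, rels
```

Keeping the parsed result in small typed objects makes the later steps (merging duplicates, writing CSV files) independent of whatever text format the model was asked to produce.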

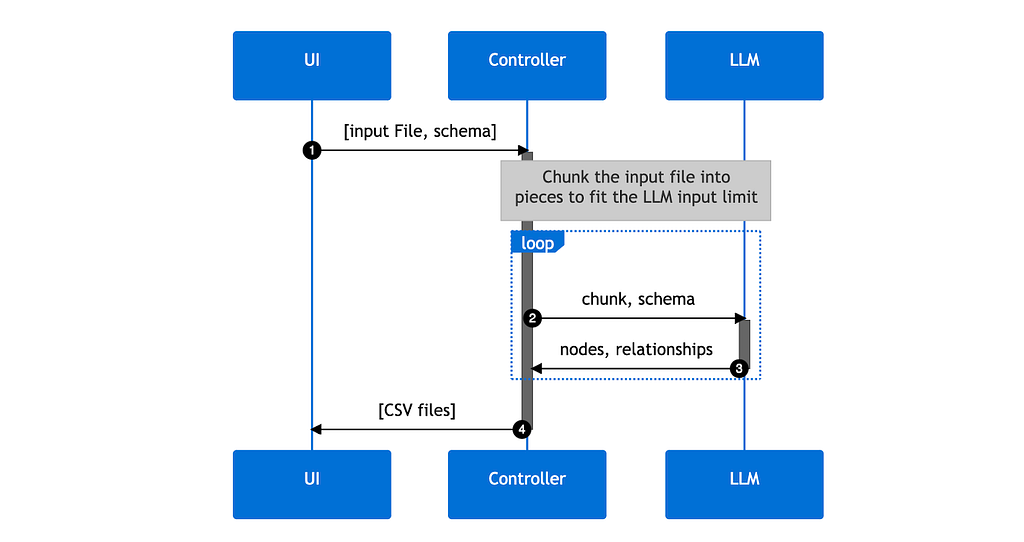

However, LLMs have a limitation known as the context window (between 4,000 and 16,000 tokens for most LLMs), which larger inputs can easily exceed. To overcome this limitation, we divide the input text into smaller, more manageable chunks that fit within the context window.

Determining the optimal splitting points for the text is a challenge of its own. To keep things simple, we’ve chosen to divide the text into chunks of maximum size, maximizing the utilization of the context window per chunk.

We also introduce some overlap from the previous chunk to account for cases where a sentence or description spans multiple chunks. This approach allows us to extract nodes and edges from each chunk, representing the information contained within it.
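
The chunking with overlap described above can be sketched as follows. This is a simplified illustration: it counts whitespace-separated words as a stand-in for real model tokens, and the function name and parameters are made up for the example.

```python
def split_into_chunks(text: str, max_tokens: int, overlap: int) -> list[str]:
    """Split text into chunks of at most `max_tokens` words.

    Every chunk after the first starts with the last `overlap` words of the
    previous chunk. Words are only an approximation of model tokens.
    """
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be non-negative and smaller than max_tokens")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```

In practice the chunk size would be measured with the tokenizer of the model in use, leaving room in the context window for the prompt and the answer.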

To maintain consistency in labeling different types of entities across chunks, we provide the LLM with a list of node types extracted in the previous chunks. Those start forming the extracted “schema.” We’ve observed that this approach enhances the uniformity of the final labels. For example, instead of the LLM generating separate types for “Company” and “Gaming Company,” it consolidates all types of companies under a “Company” label.
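
One way to carry the node types forward from chunk to chunk is sketched below. The prompt text is invented for the example, and `call_llm` is a placeholder for whatever function sends a prompt to the model and returns the extracted nodes as `(name, type)` pairs.

```python
def build_prompt(chunk: str, known_types: set[str]) -> str:
    """Compose a prompt that tells the model which node types already exist."""
    hint = ""
    if known_types:
        hint = (
            "Reuse these node types where they fit: "
            + ", ".join(sorted(known_types))
            + ".\n"
        )
    return f"{hint}Extract nodes and relationships from this text:\n{chunk}"


def extract_all(chunks, call_llm):
    """Process chunks in order, growing the set of known node types.

    `call_llm` is a hypothetical callable: it takes a prompt string and
    returns a list of (name, type) pairs for the nodes it extracted.
    """
    known_types: set[str] = set()
    all_nodes = []
    for chunk in chunks:
        nodes = call_llm(build_prompt(chunk, known_types))
        all_nodes.extend(nodes)
        known_types.update(node_type for _, node_type in nodes)
    return all_nodes, known_types
```

Because each prompt depends on the types found so far, the chunks have to be processed in sequence, which is one reason the chunks are only semi-independent.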

One notable hurdle in our approach is the problem of duplicate entities. Since each chunk is processed semi-independently, information about the same entity found in different chunks will create duplicates when we combine the results. Naturally, this issue brings us to our next step.

2. Entity Disambiguation

We now have a set of entities. To address duplication, we employ LLMs once again. First, we organize the entities into sets based on their type. Subsequently, we provide each set to the LLM, enabling it to merge duplicate entities while simultaneously consolidating their properties.

We use LLMs for this since we don’t know what name each entity was given. For example, the initial extraction could have ended up with two nodes: (Alice {name: “Alice Henderson”}) and (Alice Henderson {age: 25}).

These reference the same entity and should be merged into a single node containing both the name and age properties. We use LLMs to accomplish this since they’re great at quickly understanding which nodes reference the same entity.

By iteratively performing this procedure for all entity groups, we obtain a structured dataset ready for further processing.
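
The grouping and the property consolidation around the LLM call can be sketched in plain Python. In this illustration the LLM's decision about which names refer to the same entity is represented by an `aliases` mapping; that mapping, the dictionary layout of an entity, and the function names are assumptions for the example.

```python
from collections import defaultdict


def group_by_type(entities: list[dict]) -> dict[str, list[dict]]:
    """Bucket entities by their type so each bucket can be sent to the LLM."""
    groups = defaultdict(list)
    for entity in entities:
        groups[entity["type"]].append(entity)
    return dict(groups)


def merge_duplicates(entities: list[dict], aliases: dict[str, str]) -> list[dict]:
    """Merge entities whose names map to the same canonical name.

    `aliases` stands in for the LLM's answer: it maps each duplicate name to
    the name that should be kept. Names missing from it are left unchanged.
    """
    merged: dict[str, dict] = {}
    for entity in entities:
        name = aliases.get(entity["name"], entity["name"])
        target = merged.setdefault(
            name, {"name": name, "type": entity["type"], "properties": {}}
        )
        # Later properties overwrite earlier ones that share a key.
        target["properties"].update(entity["properties"])
    return list(merged.values())
```

Applied to the Alice example, an alias of `{"Alice": "Alice Henderson"}` yields a single node named "Alice Henderson" that carries both the `name` and `age` properties.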

3. Importing the Data Into Neo4j

In the final step, we focus on importing the results from the LLM into a Neo4j database. This requires a format that Neo4j can understand. To accomplish this, we parse the generated text from the LLM and transform it into separate CSV files, corresponding to the various node and relationship types.
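
As an illustration of the CSV step, the sketch below builds one CSV document per node type using Python's standard `csv` module. The column layout (an `id` column followed by the property names) is an assumption for the example, as is the premise that no property is itself called `id`.

```python
import csv
import io
from collections import defaultdict


def nodes_to_csv(nodes: list[dict]) -> dict[str, str]:
    """Return one CSV document per node type, keyed by the type name.

    Assumes no node has a property named "id", since that column holds
    the node's name.
    """
    by_type = defaultdict(list)
    for node in nodes:
        by_type[node["type"]].append(node)

    documents = {}
    for node_type, group in by_type.items():
        # Use the union of all property keys so every row has the same columns.
        columns = sorted({key for node in group for key in node["properties"]})
        buffer = io.StringIO()
        writer = csv.DictWriter(
            buffer, fieldnames=["id"] + columns, lineterminator="\n"
        )
        writer.writeheader()
        for node in group:
            # Properties a node lacks are written as empty cells.
            writer.writerow({"id": node["name"], **node["properties"]})
        documents[node_type] = buffer.getvalue()
    return documents
```

Relationships can be handled the same way, with one document per relationship type and columns for the source and target node names.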

These CSV files are then mapped to a format compatible with the Neo4j Data Importer tool. This conversion lets us preview the data in Data Importer before importing it into a Neo4j database.

Together, we’ve now created an application that consists of three parts: a UI to input a file, a controller that executes the previously explained process, and an LLM that the controller talks to. The source code can be found on GitHub.

We also created a version of this pipeline that works essentially the same, but with the option to include a schema. This schema works like a filter where the user can restrict which types of nodes, relationships, and properties the LLM should include in its result.
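
To make the filtering idea concrete, here is a minimal sketch that applies a schema as a post-processing filter on already extracted data. The project may instead pass the schema to the LLM in the prompt; the schema layout and the function name below are assumptions for illustration only.

```python
def apply_schema(nodes: list[dict], relationships: list[dict], schema: dict):
    """Keep only nodes, relationships, and properties allowed by the schema.

    `schema` is a hypothetical structure such as
        {"nodes": {"Person": ["age"]}, "relationships": ["WROTE"]}
    mapping each allowed node type to its allowed property names.
    """
    kept_nodes = []
    for node in nodes:
        allowed = schema["nodes"].get(node["type"])
        if allowed is None:
            continue  # node type is not part of the schema
        props = {k: v for k, v in node["properties"].items() if k in allowed}
        kept_nodes.append({**node, "properties": props})

    # Drop relationships of other types or with a filtered-out endpoint.
    kept_names = {node["name"] for node in kept_nodes}
    kept_rels = [
        rel
        for rel in relationships
        if rel["type"] in schema["relationships"]
        and rel["source"] in kept_names
        and rel["target"] in kept_names
    ]
    return kept_nodes, kept_rels
```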

If you’re interested in knowledge graphs and LLMs, I suggest checking out the Neo4j GenAI page for further learning and resources.

Demonstration

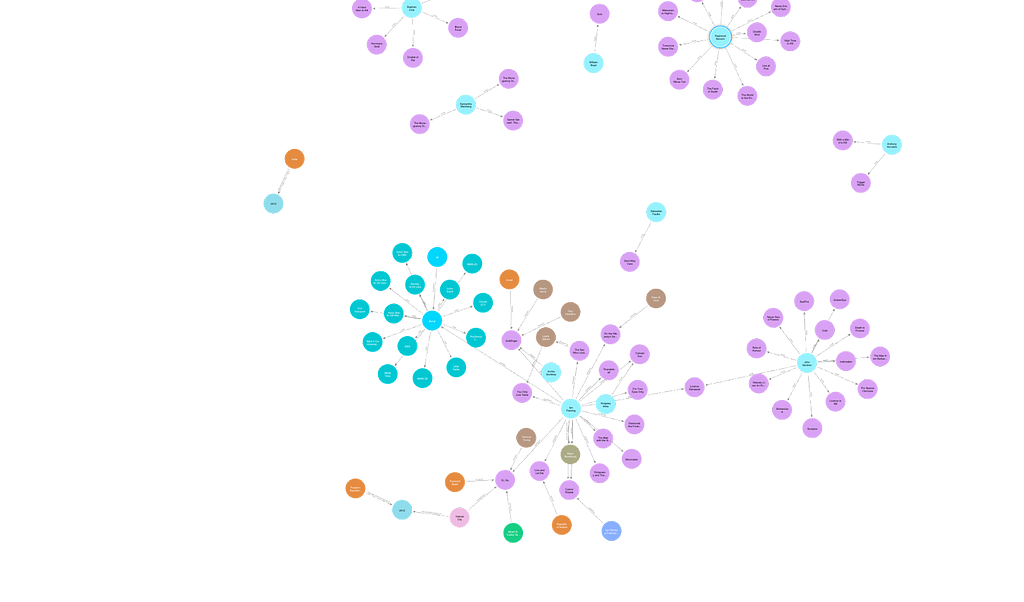

I tested the application by giving it the Wikipedia page for the James Bond franchise and then inspected the knowledge graph it generated.

The subset shown above gives, in my opinion, a reasonably accurate depiction of the Wikipedia article. The graph primarily consists of nodes representing books and individuals associated with those books, such as authors and publishers.

However, there are a few issues with the graph. For instance, Ian Fleming is labeled as a publisher rather than an author for most of the books he wrote. This discrepancy may be attributed to the difficulty the language model had in comprehending that particular aspect of the Wikipedia article.

Another problem is the inclusion of relationships between book nodes and directors of films with the same titles, instead of creating separate nodes for movies.

Finally, it’s worth noting that the LLM appears to be quite literal in its interpretation of relationships, as evidenced by using the relationship type “used” to connect the James Bond character with the cars he drives. This literal approach may stem from the article’s usage of the verb “used” rather than “drove.”

Challenges

This approach worked fairly well for a demonstration, and we think it shows that it’s possible to use LLMs to create knowledge graphs. However, certain issues with this approach must be addressed:

- Unpredictable output: This is inherent to the nature of LLMs. We do not know how an LLM will format its results. Even if we ask it to output in a specific format, it might not obey, which can cause problems when we try to parse what it generates. We saw one instance of this while chunking the data: Most of the time, the LLM generated a simple list of nodes and edges, but sometimes the LLM numbered the list. Tools like Guardrails and OpenAI’s function calling API are being released to help work around this. It’s still early in the world of LLM tooling, so we anticipate this will not be a problem for long.

- Speed: This approach is slow and often takes several minutes for just a single reasonably large web page. There might be a fundamentally different approach that can make the extraction go faster.

- Lack of accountability: There’s no way to know why the LLM decided to extract some information from the source documents or if the information even exists in the source. The data quality of the resulting knowledge graph is, therefore, much lower than that of graphs created by processes that don’t use LLMs.
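
For the unpredictable output issue, one simple mitigation is to normalize the model's answer before parsing it. The sketch below strips list numbering and bullets from each line; it is a generic workaround written for this example, not the handling used in the project.

```python
import re

# Matches list markers such as "1. ", "2) ", "- " or "* " at the start of a line.
_LIST_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*])\s+")


def normalize_lines(raw: str) -> list[str]:
    """Strip list numbering and bullets so each line can be parsed uniformly."""
    lines = []
    for line in raw.splitlines():
        cleaned = _LIST_MARKER.sub("", line).strip()
        if cleaned:  # skip blank lines
            lines.append(cleaned)
    return lines
```

A numbered answer and an unnumbered one then produce the same list of lines, so the downstream parser only has to handle one shape.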

Unlocking Insights from Unstructured Data

By turning raw text into a structured knowledge graph representation, we can surface hidden connections and patterns.

With this three-step approach, anyone can build a knowledge graph using LLMs and efficiently analyze large corpora of unstructured data. Whether working with documents, web pages, or other forms of text, we can automate the construction of knowledge graphs and discover new insights easily.

We should also keep in mind the challenges of this approach, such as unpredictable LLM output formatting, speed limitations, and potential lack of accountability.

The code for this project can be found on GitHub.

Learning Resources

To learn more about building smarter LLM applications with knowledge graphs, check out the other posts in this blog series.

- LangChain Library Adds Full Support for Neo4j Vector Index

- Implementing Advanced Retrieval RAG Strategies With Neo4j

- Harnessing Large Language Models With Neo4j

- Knowledge Graphs & LLMs: Multi-Hop Question Answering

- Using a Knowledge Graph to Implement a RAG Application