My Eastern European grandfather had a very old TV in his room, built back in the Soviet era. On occasion the picture went blurry, sometimes the sound got distorted, and once in a while the telly seemed to drift off the channel halfway through a movie.

My grandpa’s classic solution to any of these problems was the same: he gave the side of the telly an actual, physical slap, and eventually the quality of service was restored.

Many decades later, I find myself looking at Kubernetes pods that run certain microservices. When one of them starts misbehaving, I sometimes give it a virtual kick by restarting it and, weirdly, the quality of service just restores itself.

I’d like to think that I am not just a weird repetition of history, carrying some special genes in my cells that I inherited from my grandad. Rather, it is a phenomenon that plagues the everyday lives of thousands of engineers; no wonder some people think that SRE stands for Service Restarting Expert.

Is that really the best thing we can do as engineers to mitigate certain hard-to-catch misbehaviors? That’s the question we asked ourselves in the Neo4j Aura Team, and we found a very satisfying alternative, one that ended up saving the business a LOT of toil and felt worth writing an article about.

At Neo4j Aura, we are hosting thousands of scalable, reliable Neo4j graph databases that our users can use as they like, scale as they like, or pause as they like.

For us, databases are pretty much like cars in a factory. They come in different models, shapes, and sizes, they go out on a conveyor belt to their rightful owners, and we keep a close eye on their metrics while the drivers are putting thousands of miles (nodes and relationships) into them.

Sometimes some of the owners are so excited about their new toy, and the lack of maintenance needed from them, that they decide to take their precious car to a drifting competition.

In the old times, their enjoyment of the heavy use of resources could turn the squeaky noise of the wearing tire into a painful ringing sound: a page from Opsgenie hitting my phone on a Saturday evening.

As easy as it is for a user to set up a database, maintaining one used to be a pain for an engineer. To achieve high resilience, we run the graph databases in a redundant way on Kubernetes.

If you are an SRE reading this, you may think the automation is easy: just make sure that the pod restarts automatically every time we notice an error of any kind. I know that many businesses do exactly that.

But you cannot restart your way out of every problem, because you can’t predict the nature of every problem that may arise. Also, just imagine what would happen if a single error restarted an entire stateful database cluster.

It would cause unavailability, which is the worst scenario that can happen to the business and the user. And finally, a simple pod restart does not always guarantee that the service will run well when the new pods come up.

The thing is, we are not just a company hosting software in a resilient way. We are also the company that writes the software that we are hosting.

And having all this data about potential issues and scenarios that can go wrong is by far the most useful tool that we can utilise to make our graph database even better.

So as tempting as it may have been, running a restart.sh script on a cron schedule was not an option.

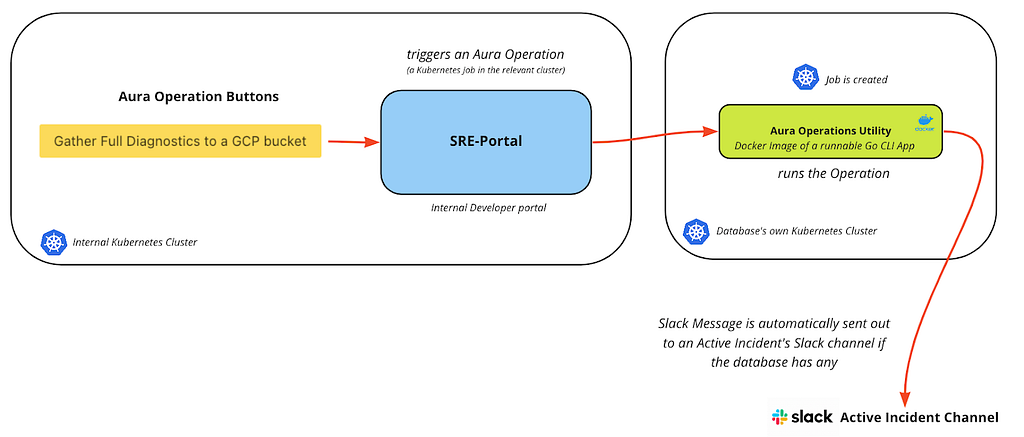

To gather as much information as possible about any issue that can occur, whether it relates to Neo4j itself or to its configuration, we started creating an internal portal which we humbly named The SRE Portal. (Because not all heroes wear capes.)

You can imagine it as the Tinder of Neo4j Aura databases. Thousands of profiles, showing all intricate details of each and every database that is living in hundreds of different locations (Kubernetes clusters). And as it is with most Tinder profiles, most of them will never be checked during their lifetimes. (Sorry, not sorry)

However, if we notice an issue with any one of them, we can immediately check its stats, look for discrepancies, and act on them by raising an incident directly from the SRE Portal.

That’s great: engineers no longer have to go to the length of connecting to a Kubernetes cluster, checking the Custom Resources and the StatefulSets, or describing a pod’s status. All of the relevant information is presented on the page itself! 🎉

However, we quickly realized that our role as SREs within the business should not be about firefighting, but about giving all engineers the tools to analyze the issues themselves and respond to them appropriately, instead of reacting hastily.

And for that reason, we decided to put shiny, colorful HTML buttons on the portal, each representing a complex series of actions.

We collectively named these buttons The Aura Operations.

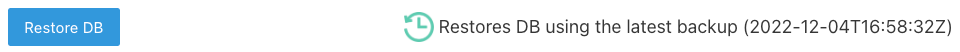

Do you want to create a backup, based on a certain Neo4j Cluster member? Just click the button!

Would you like to revert the database to an older state? No worries, do a quick click… you guessed it… on the button!

Would you like a Kubernetes job to spawn into the cluster, triggering a Java Heap Dump for analysis? You don’t even know what it means? Don’t worry about it, JUST CLICK THAT SHINY BUTTON WE MADE FOR YOU.

Jokes aside, we of course made sure that certain buttons can only be used by certain engineers within the business.

The idea is to take frequently needed processes and automate them away, abstracting and grouping them all behind these buttons.

We have built a Go CLI app with the help of Cobra and Viper. Every time someone clicks on a button, the app’s container image is pulled from the container registry into the relevant Kubernetes cluster and run there as a Kubernetes Job, with the appropriate parameters for the requested process.
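To make the button-to-Job idea a bit more concrete, here is a minimal sketch of how a click could be turned into a Job manifest. It only uses the Go standard library and a tiny subset of the `batch/v1` Job schema. The helper `newOperationJob`, the `aura-op-` naming scheme, and the image path are all hypothetical, made up for illustration; they are not how the Aura team's tooling is actually written.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Minimal subset of the batch/v1 Job schema, just enough for this sketch.
type container struct {
	Name  string   `json:"name"`
	Image string   `json:"image"`
	Args  []string `json:"args"`
}

type podSpec struct {
	RestartPolicy string      `json:"restartPolicy"`
	Containers    []container `json:"containers"`
}

type podTemplate struct {
	Spec podSpec `json:"spec"`
}

type jobSpec struct {
	BackoffLimit int         `json:"backoffLimit"`
	Template     podTemplate `json:"template"`
}

type metadata struct {
	Name string `json:"name"`
}

type job struct {
	APIVersion string   `json:"apiVersion"`
	Kind       string   `json:"kind"`
	Metadata   metadata `json:"metadata"`
	Spec       jobSpec  `json:"spec"`
}

// newOperationJob (hypothetical) maps one button click to one Job:
// the operation becomes the CLI subcommand, the rest become its flags.
func newOperationJob(operation, dbid, image string, extraArgs ...string) job {
	args := append([]string{operation, "--dbid", dbid}, extraArgs...)
	return job{
		APIVersion: "batch/v1",
		Kind:       "Job",
		Metadata:   metadata{Name: fmt.Sprintf("aura-op-%s-%s", operation, dbid)},
		Spec: jobSpec{
			BackoffLimit: 0, // run once; do not retry a failed operation
			Template: podTemplate{Spec: podSpec{
				RestartPolicy: "Never",
				Containers: []container{{
					Name:  "aura-operations-utility",
					Image: image,
					Args:  args,
				}},
			}},
		},
	}
}

func main() {
	// The image path is a placeholder, not a real registry.
	j := newOperationJob("gather", "abcdef12",
		"registry.example.com/aura-operations-utility:latest", "--core", "1,2,3")
	out, err := json.MarshalIndent(j, "", "  ")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(string(out))
}
```

The printed JSON is the kind of document that could be handed to the Kubernetes API; a real implementation would more likely use the official client library and typed objects.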

Down to the Technicalities

Viper and Cobra are such a nice duo when one creates a CLI app. They are to Go what Bud Spencer and Terence Hill were to comedic television. A real pleasure to have around.

One of the amazing things about Viper is how it allows you to take a set of environment variables and turn them into a Go struct with a one-liner.

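```go
import (
	"log"

	"github.com/spf13/viper"
)

// Config lists every setting the App reads from its environment.
type Config struct {
	Environment      string `mapstructure:"environment"`
	SlackToken       string `mapstructure:"slack_token"`
	SlackChannel     string `mapstructure:"slack_channel"`
	OperationCaller  string `mapstructure:"operation_caller"`
	StartedTimestamp string `mapstructure:"started_timestamp"`
}

// config holds the App's global config in one place
var config Config

func initConfig() {
	// storing the whole config in a single struct;
	// Unmarshal returns an error that should not be ignored
	if err := viper.Unmarshal(&config); err != nil {
		log.Fatalf("unable to decode config: %v", err)
	}
}
```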

You can also easily validate their content, and come back with an error if anything seems amiss.
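As a rough illustration of that validation step, the sketch below adds a `Validate` method to the same struct. It deliberately uses only the standard library so it runs on its own. The method name and the choice of which fields count as required are assumptions made for this example, not something the original code confirms.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Config mirrors the struct shown above.
type Config struct {
	Environment      string `mapstructure:"environment"`
	SlackToken       string `mapstructure:"slack_token"`
	SlackChannel     string `mapstructure:"slack_channel"`
	OperationCaller  string `mapstructure:"operation_caller"`
	StartedTimestamp string `mapstructure:"started_timestamp"`
}

// Validate is a hypothetical helper: it returns an error naming
// every required setting that is empty, or nil if all are present.
func (c Config) Validate() error {
	// Assumption: these four settings are mandatory.
	required := []struct{ name, value string }{
		{"environment", c.Environment},
		{"slack_token", c.SlackToken},
		{"slack_channel", c.SlackChannel},
		{"operation_caller", c.OperationCaller},
	}
	var missing []string
	for _, f := range required {
		if strings.TrimSpace(f.value) == "" {
			missing = append(missing, f.name)
		}
	}
	if len(missing) > 0 {
		return errors.New("missing required config: " + strings.Join(missing, ", "))
	}
	return nil
}

func main() {
	// An incomplete config: the token and the caller are not set.
	cfg := Config{Environment: "staging", SlackChannel: "#aura-ops"}
	if err := cfg.Validate(); err != nil {
		fmt.Println("refusing to start:", err)
		return
	}
	fmt.Println("config OK")
}
```

Calling something like this right after the config is loaded means a misconfigured Job fails immediately with a readable message, instead of halfway through an operation.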

At the same time, Cobra allows you to define subcommands, so you can easily call the App as:

```shell
./aura-operations-utility gather --dbid abcdef12 --core 1,2,3 --full-diagnostics
```

That runs the function you attached to this subcommand.

```go
import "github.com/spf13/cobra"

// gather collects diagnostics from the members of a database cluster.
var gather = &cobra.Command{
	Use:     "gather",
	Short:   "gathers diagnostics from a core's member",
	Long:    "Grabs logs, and a heapDump and uploads them into a bucket. The URL to the bucket is printed to stdout.",
	Example: "aura-operations-utility gather --dbid abcdef12 --core 1,2,3 --full-diagnostics",
	Run: func(cmd *cobra.Command, args []string) {
		// Implementation of the analysis gathering...
	},
}
```

The framework generates help text for each subcommand automatically, so you can really focus on the business logic itself. The structure above also makes it incredibly easy to scale a CLI application, because the implementation of every subcommand stays neatly separated from the others.

So at the end of the day, finding the appropriate cluster in a terminal, getting permissions sorted, connecting to the cluster, then looking up and editing Kubernetes resources in place… all of these can be the concerns of a dystopian era of the past.

And what remains is nothing more than the Magic Remote Control that I wish my grandfather had: a button click for every scenario that can happen, one that allows our business not just to react, but to respond, gather information, and analyze issues, so that we can learn from them without having to go through the same complex processes over and over again.
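For readers curious about what happens to the flags from the example command line, here is a small sketch of parsing them. To keep it runnable without dependencies it swaps Cobra for the standard library's `flag` package, so it is not the Cobra API; the `gatherOptions` type, the `parseGather` helper, and the rule that `--dbid` is mandatory are assumptions made for illustration.

```go
package main

import (
	"flag"
	"fmt"
	"strconv"
	"strings"
)

// gatherOptions holds the parsed flags of the gather subcommand.
type gatherOptions struct {
	DBID            string
	Cores           []int
	FullDiagnostics bool
}

// parseGather parses the arguments that follow "gather" on the command line.
func parseGather(args []string) (gatherOptions, error) {
	var opts gatherOptions
	var cores string

	fs := flag.NewFlagSet("gather", flag.ContinueOnError)
	fs.StringVar(&opts.DBID, "dbid", "", "ID of the database")
	fs.StringVar(&cores, "core", "", "comma-separated core member numbers")
	fs.BoolVar(&opts.FullDiagnostics, "full-diagnostics", false, "collect the full set of diagnostics")
	if err := fs.Parse(args); err != nil {
		return opts, err
	}

	// Assumption: a database ID is always required.
	if opts.DBID == "" {
		return opts, fmt.Errorf("--dbid is required")
	}

	// Turn "1,2,3" into []int{1, 2, 3}.
	if cores != "" {
		for _, part := range strings.Split(cores, ",") {
			n, err := strconv.Atoi(strings.TrimSpace(part))
			if err != nil {
				return opts, fmt.Errorf("invalid --core value %q: %w", part, err)
			}
			opts.Cores = append(opts.Cores, n)
		}
	}
	return opts, nil
}

func main() {
	// The example arguments from the article; a real binary would
	// read them from os.Args after matching the "gather" subcommand.
	args := []string{"--dbid", "abcdef12", "--core", "1,2,3", "--full-diagnostics"}
	opts, err := parseGather(args)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", opts)
}
```

With Cobra, the same flags would be declared on the command itself and the parsing, help output, and dispatch to the right subcommand come for free, which is exactly the convenience described above.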

If you’re curious about what these databases look like from a user’s point of view, check out Neo4j AuraDB.

Creating the Magic Remote Control was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.