What Is Data Lineage? Tracking Data Through Enterprise Systems

Content Strategist


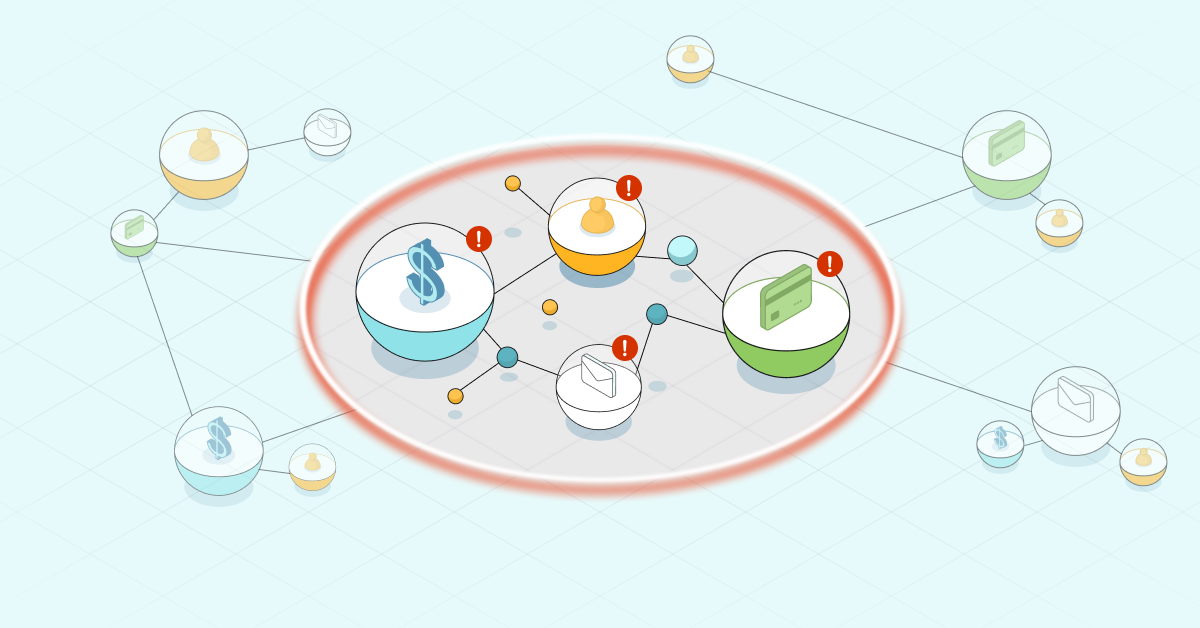

Data lineage documents how data flows through an organization’s systems, from its origin to its final use. It records how data is transformed along the way, including in ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes. A data lineage system provides a meta-view of data flow, which lets data teams trace dependencies and anomalies. Enterprises use it to strengthen data governance, supporting data quality, control, and regulatory compliance.

In dynamic data environments, knowledge graphs have emerged as an effective solution for storing and analyzing data lineage. Built on graph database architecture, these systems store data as interconnected networks rather than traditional tables. This approach naturally captures how information flows and transforms across systems. The underlying structure allows teams to add and modify data types without redesigning the entire schema, supporting integration across multiple sources.
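To make the idea concrete, here is a minimal in-memory sketch of lineage stored as a property graph: typed nodes with free-form properties, connected by typed relationships. It is not a graph database, and the class and label names (`LineageGraph`, `Table`, `Job`, `MLModel`, `READ_BY`, `WRITES`, `FEEDS`) are illustrative assumptions. Note that adding a new kind of asset only needs a new label, not a schema change.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    label: str                                  # e.g. "Table", "Job", "Dashboard"
    props: dict = field(default_factory=dict)   # free-form properties per node


@dataclass
class LineageGraph:
    nodes: dict = field(default_factory=dict)   # node_id -> Node
    edges: list = field(default_factory=list)   # (src_id, relationship_type, dst_id)

    def add_node(self, node_id, label, **props):
        self.nodes[node_id] = Node(node_id, label, props)

    def add_edge(self, src, rel, dst):
        # Both endpoints must exist; there is no table schema to migrate.
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError(f"unknown node in edge {src!r} -> {dst!r}")
        self.edges.append((src, rel, dst))


g = LineageGraph()
g.add_node("crm.customers", "Table", system="Postgres")
g.add_node("nightly_etl", "Job", owner="data-eng")
g.add_node("dw.dim_customer", "Table", system="Snowflake")
g.add_edge("crm.customers", "READ_BY", "nightly_etl")
g.add_edge("nightly_etl", "WRITES", "dw.dim_customer")

# A new asset type later on is just another label with its own properties.
g.add_node("churn_model", "MLModel", framework="xgboost")
g.add_edge("dw.dim_customer", "FEEDS", "churn_model")
```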


Is Data Lineage Important?

The short answer is yes, because data lineage helps ensure data quality and effective data management. When teams discover discrepancies in reports, lineage lets them trace issues back to their source instead of conducting lengthy, system-by-system investigations.

Lineage also serves as a crucial tool for change management: before modifying any data structures or processes, teams can understand the full downstream impact. This level of transparency and traceability is particularly vital in regulated industries like finance and healthcare, where organizations must document and certify how sensitive data is handled throughout its life cycle.

Data Provenance vs. Data Lineage

Data provenance and data lineage are sometimes used interchangeably, but provenance focuses on the origins of data, while lineage describes the full data life cycle.

Data provenance tracks the sourcing of data – whether through creation or acquisition – and might also capture the modifications that have occurred along the way. It answers the question, “Where does this data come from?”

Data lineage provides a more comprehensive view, encompassing where data came from, where it moves next, how it is used, and relevant dependencies. A data lineage pipeline maps data from its upstream sources through various systems and processes to its final destinations downstream. With this broad remit, lineage systems should be able to answer questions at any point of the data’s journey, including:

- Where does this data come from?

- How does this data move through different systems?

- How has the data been modified?

- Where is the data used?

- Who has accessed or changed this data?

- What is the impact of a failure in the data pipeline?

Answering these questions helps engineering teams settle further ones, such as whether data is reliable or appropriate for a given use case. Data lineage also improves data integrity and supports compliance by providing an auditable trail.
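Several of the questions in the list above ("Where does this data come from?", "Where is the data used?", "What is the impact of a failure?") reduce to walking lineage edges upstream or downstream. The sketch below assumes lineage is available as simple (upstream, downstream) pairs; the dataset names are hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical lineage edges: (upstream, downstream)
EDGES = [
    ("crm.customers", "staging.customers"),
    ("erp.orders", "staging.orders"),
    ("staging.customers", "dw.customer_orders"),
    ("staging.orders", "dw.customer_orders"),
    ("dw.customer_orders", "bi.revenue_dashboard"),
    ("dw.customer_orders", "ml.churn_features"),
]


def build_index(edges):
    """Index edges in both directions so we can walk upstream or downstream."""
    down, up = defaultdict(set), defaultdict(set)
    for src, dst in edges:
        down[src].add(dst)
        up[dst].add(src)
    return down, up


def reachable(start, adjacency):
    """Breadth-first search returning every node reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        for nxt in adjacency[queue.popleft()]:
            if nxt not in seen:          # the seen set also guards against cycles
                seen.add(nxt)
                queue.append(nxt)
    return seen


down, up = build_index(EDGES)
# "Where does this data come from?"
print(sorted(reachable("dw.customer_orders", up)))
# ['crm.customers', 'erp.orders', 'staging.customers', 'staging.orders']
# "What is affected if staging.orders breaks?"
print(sorted(reachable("staging.orders", down)))
# ['bi.revenue_dashboard', 'dw.customer_orders', 'ml.churn_features']
```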

How Data Lineage Works

Any data lineage system has several parts: lineage capture (as data is created, transformed, and used), storage of the lineage metadata, and tools for understanding and analyzing the lineage information.

Lineage capture typically occurs through two main methods. The first is using ETL tools like AWS Glue, which automatically track lineage as data moves through a pipeline. The second method involves writing custom code to capture lineage manually. This requires instrumenting ETL scripts (e.g., Python with Pandas or PySpark) to log source tables and transformations.
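Custom instrumentation can be as light as a decorator around each transformation. The sketch below is one possible approach, not a standard API: `track_lineage` and `LINEAGE_LOG` are hypothetical names, the log is an in-memory list standing in for a real lineage store, and plain lists of dicts stand in for Pandas or PySpark DataFrames.

```python
import functools
from datetime import datetime, timezone

LINEAGE_LOG = []  # stand-in for a real lineage store


def track_lineage(sources, target):
    """Record which source tables a transform reads and which table it writes."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            result = func(*args, **kwargs)
            # Only successful runs are logged; a failure raises before this point.
            LINEAGE_LOG.append({
                "transform": func.__name__,
                "sources": list(sources),
                "target": target,
                "row_count": len(result),
                "run_at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator


@track_lineage(sources=["raw.orders"], target="clean.orders")
def drop_cancelled(orders):
    return [o for o in orders if o["status"] != "cancelled"]


rows = drop_cancelled([
    {"id": 1, "status": "shipped"},
    {"id": 2, "status": "cancelled"},
])
```

Because the sources and target are declared by hand, this approach is only as accurate as the annotations developers keep up to date.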

Once the lineage information is captured, it must be stored in a way that makes it easy to retrieve and analyze. Because lineage can change significantly over time as data is transformed across platforms, a lineage database needs a flexible data model and must scale as the number of tracked assets grows.

Common Lineage Capture Methods

Modern data lineage tools employ several methods to track data movement through enterprise systems. Here are the four most common capture methods used in production environments:

Query Parsing

Organizations can capture data lineage by analyzing SQL queries. These queries reveal how data moves through systems, showing exactly how information is selected, combined, transformed, and stored in new tables.

Automated tools such as Apache Atlas and Collibra can scan query execution logs to determine how data flows. However, this method may struggle with dynamically generated queries, stored procedures, or complex transformations where additional context is needed beyond static SQL analysis.
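A deliberately naive sketch shows the basic idea of query parsing: find the table an `INSERT INTO ... SELECT` writes to and the tables it reads via `FROM` and `JOIN`. This regex approach is an illustration only. It misses subqueries and CTEs, can misfire on syntax such as `EXTRACT(YEAR FROM col)`, and cannot see dynamically generated SQL, which is why production tools rely on full SQL parsers.

```python
import re

# Simplified patterns: only INSERT INTO ... SELECT ... FROM/JOIN is handled.
TARGET_RE = re.compile(r"\binsert\s+into\s+([\w.]+)", re.IGNORECASE)
SOURCE_RE = re.compile(r"\b(?:from|join)\s+([\w.]+)", re.IGNORECASE)


def extract_lineage(sql):
    """Return (sorted source tables, target table or None) for one SQL statement."""
    target = TARGET_RE.search(sql)
    sources = sorted(set(SOURCE_RE.findall(sql)))
    return sources, target.group(1) if target else None


sql = """
INSERT INTO dw.customer_orders
SELECT c.id, o.total
FROM staging.customers c
JOIN staging.orders o ON o.customer_id = c.id
"""
print(extract_lineage(sql))
# (['staging.customers', 'staging.orders'], 'dw.customer_orders')
```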

Log-Based Lineage Tracking

In environments where direct query parsing isn’t practical, you can use log-based tracking to infer data lineage. Many relational databases maintain write-ahead logs or binary logs that record all changes made to the data. By analyzing these logs, tools like Debezium (a change data capture framework) can track modifications in real time, making it possible to reconstruct lineage.
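As a rough illustration of working with change data capture output, the sketch below folds change events into a per-row history of what changed and when. It assumes a simplified, already-deserialized Debezium-style envelope using only the `op`, `ts_ms`, `source`, `before`, and `after` fields, and it assumes every table has a primary key column named `id`; real events carry more metadata and connector-specific `source` fields.

```python
from collections import defaultdict

# Simplified Debezium-style change events (hypothetical data).
events = [
    {"op": "c", "ts_ms": 1000, "source": {"db": "crm", "table": "customers"},
     "before": None, "after": {"id": 7, "email": "a@example.com"}},
    {"op": "u", "ts_ms": 2000, "source": {"db": "crm", "table": "customers"},
     "before": {"id": 7, "email": "a@example.com"},
     "after": {"id": 7, "email": "b@example.com"}},
]

OPS = {"c": "create", "u": "update", "d": "delete", "r": "snapshot read"}


def change_history(events):
    """Group change events by (table, primary key) into a time-ordered history."""
    history = defaultdict(list)
    for ev in sorted(events, key=lambda e: e["ts_ms"]):
        before, after = ev["before"] or {}, ev["after"] or {}
        row = after or before                      # deletes only carry "before"
        key = (f'{ev["source"]["db"]}.{ev["source"]["table"]}', row["id"])
        changed = sorted(k for k in row if before.get(k) != after.get(k))
        history[key].append((ev["ts_ms"], OPS[ev["op"]], changed))
    return dict(history)


print(change_history(events)[("crm.customers", 7)])
# [(1000, 'create', ['email', 'id']), (2000, 'update', ['email'])]
```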

In event-driven architectures, Apache Kafka enables lineage tracking by capturing message producers and consumers across a pipeline. Cloud platforms such as AWS Glue Data Catalog and Google Data Catalog integrate log-based tracking to extract lineage from cloud storage and data warehouse activity. This approach provides valuable insights into how data moves, even when transformation logic is not explicitly captured in queries.
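In an event-driven pipeline, lineage edges can be derived by linking each topic's producers to its consumers. The sketch assumes you already have (application, role, topic) observations, for example gathered from client configuration or monitoring; how those are collected is outside its scope, and all names are hypothetical.

```python
from collections import defaultdict

# Hypothetical observations: (application, role, topic)
observations = [
    ("orders-service", "producer", "orders.created"),
    ("fraud-checker", "consumer", "orders.created"),
    ("fraud-checker", "producer", "orders.scored"),
    ("warehouse-sink", "consumer", "orders.scored"),
]


def topic_lineage(observations):
    """Derive producer -> topic and topic -> consumer lineage edges."""
    producers, consumers = defaultdict(set), defaultdict(set)
    for app, role, topic in observations:
        (producers if role == "producer" else consumers)[topic].add(app)
    edges = set()
    for topic in producers.keys() | consumers.keys():
        edges |= {(p, topic) for p in producers[topic]}
        edges |= {(topic, c) for c in consumers[topic]}
    return sorted(edges)


for edge in topic_lineage(observations):
    print(edge)
```

Chaining these edges (orders-service to orders.created to fraud-checker to orders.scored to warehouse-sink) recovers the end-to-end flow even though no SQL was ever parsed.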

Instrumentation in Data Pipelines

Lineage can be captured directly within data processing pipelines, including ETL and ELT frameworks. Platforms like Apache Airflow, dbt, and Talend provide built-in mechanisms to track dependencies and execution history. In dbt, for example, lineage is automatically generated based on SQL model dependencies, allowing a clear view of how data moves between transformations in warehouses such as Snowflake or BigQuery.
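dbt derives its dependency graph from `{{ ref('...') }}` calls in model SQL, and records the result in its generated project artifacts. The sketch below imitates that idea on a few hypothetical models: it extracts single-argument `ref()` calls with a regex (two-argument package refs are not handled) and orders the models with Python's `graphlib`. It is an approximation for illustration, not how dbt itself parses projects.

```python
import re
from graphlib import TopologicalSorter  # Python 3.9+

# Matches {{ ref('model') }} or {{ ref("model") }}.
REF_RE = re.compile(r"""\{\{\s*ref\(\s*['"](\w+)['"]\s*\)\s*\}\}""")

models = {
    "stg_orders": "select * from {{ source('shop', 'orders') }}",
    "stg_customers": "select * from {{ source('shop', 'customers') }}",
    "customer_orders": """
        select c.id, count(o.id) as n_orders
        from {{ ref('stg_customers') }} c
        left join {{ ref('stg_orders') }} o on o.customer_id = c.id
        group by c.id
    """,
}


def model_dag(models):
    """Map each model to the set of models it depends on via ref()."""
    return {name: set(REF_RE.findall(sql)) for name, sql in models.items()}


dag = model_dag(models)
print(sorted(dag["customer_orders"]))  # ['stg_customers', 'stg_orders']
# Upstream models always come before the models that depend on them.
print(list(TopologicalSorter(dag).static_order()))
```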

Metadata Extraction from APIs

Many modern data platforms expose lineage metadata through APIs, offering a programmatic approach to lineage capture. Solutions like Google Data Catalog API, AWS Glue Data Catalog API, and Databricks Unity Catalog provide endpoints that return lineage details based on table relationships, schema changes, and query execution history.

What Is the Best Database for Storing Lineage?

A data lineage database stores metadata that tracks how data moves and changes across systems. Traditional relational databases often struggle with lineage storage because they require complex JOIN operations to reconstruct relationships between datasets, making lineage queries slow and difficult to scale.
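For comparison, this is roughly what a downstream-impact query looks like in a relational store, using SQLite's recursive common table expressions. Each iteration joins the edge table against the rows found so far, so a deep lineage chain means many repeated joins. The `lineage_edge` table is a hypothetical schema; `UNION` (rather than `UNION ALL`) removes duplicates, which also stops the recursion if the lineage contains a cycle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE lineage_edge (upstream TEXT, downstream TEXT)")
conn.executemany(
    "INSERT INTO lineage_edge VALUES (?, ?)",
    [
        ("crm.customers", "staging.customers"),
        ("staging.customers", "dw.customer_orders"),
        ("dw.customer_orders", "bi.revenue_dashboard"),
    ],
)

# Every additional hop is another join of lineage_edge against the partial result.
DOWNSTREAM_SQL = """
WITH RECURSIVE downstream(name) AS (
    SELECT downstream FROM lineage_edge WHERE upstream = ?
    UNION
    SELECT e.downstream
    FROM lineage_edge e JOIN downstream d ON e.upstream = d.name
)
SELECT name FROM downstream ORDER BY name
"""
print(conn.execute(DOWNSTREAM_SQL, ("crm.customers",)).fetchall())
# [('bi.revenue_dashboard',), ('dw.customer_orders',), ('staging.customers',)]
```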

A graph database provides a more efficient approach by storing lineage as direct relationships rather than building and inferring them through query joins. This structure allows lineage dependencies, transformation steps, and downstream impacts to be represented explicitly, enabling faster traversal and analysis.

Instead of scanning multiple tables, graph queries can trace relationships in real time, helping teams quickly assess the impact of changes, identify root causes, and visualize data flows.

Use Cases in Data Lineage

Data lineage serves multiple purposes in an organization, including:

Impact Analysis and Change Management – Before making changes to data systems or processes, teams can use lineage maps to understand downstream dependencies and potential impacts. This helps prevent unintended disruptions when modifying data pipelines, schemas, or business logic.

Migration Planning – During system migrations, lineage maps reveal the interdependencies that must be preserved, so critical data flows aren’t disrupted when moving between systems.

Data Quality and Trust – By tracking data’s journey from source to consumption, teams can identify where quality issues emerge and implement controls at critical points. Understanding lineage helps build trust in data assets by providing transparency into their origins and transformations.

Root Cause Analysis – When data issues arise, lineage helps teams quickly trace back through transformations to identify the root cause. Rather than investigating each system individually, lineage provides a map to efficiently pinpoint where problems originated.

Data Governance – Lineage documentation supports governance by helping organizations inventory their data assets, establish ownership, and enforce policies around data usage and transformation. It provides the visibility needed for effective data stewardship.

Regulatory Compliance and Auditing – Data lineage helps organizations demonstrate compliance with regulations like GDPR, CCPA, and HIPAA by tracking how sensitive data moves and transforms throughout systems. When auditors request evidence of data handling practices, lineage documentation provides a clear audit trail.

Analytics and Machine Learning – Data scientists use lineage to understand input data sources, verify transformation logic, and ensure model features are properly derived. This supports reproducibility and helps maintain model quality over time.
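For the root cause analysis use case, one simple heuristic is to look for failing datasets whose direct upstream inputs are all healthy: those are where a problem most likely entered the pipeline. The sketch assumes you have lineage edges plus the set of datasets currently failing a data quality check; the names and the heuristic itself are illustrative, and real incidents may need more context than check status alone.

```python
# Hypothetical lineage (upstream -> downstream) and failing quality checks.
EDGES = [
    ("erp.orders", "staging.orders"),
    ("staging.orders", "dw.customer_orders"),
    ("crm.customers", "dw.customer_orders"),
    ("dw.customer_orders", "bi.revenue_dashboard"),
]
FAILING = {"staging.orders", "dw.customer_orders", "bi.revenue_dashboard"}


def root_cause_candidates(edges, failing):
    """Failing datasets none of whose direct upstream inputs are failing."""
    parents = {}
    for up, down in edges:
        parents.setdefault(down, set()).add(up)
    return sorted(n for n in failing if not (parents.get(n, set()) & failing))


print(root_cause_candidates(EDGES, FAILING))
# ['staging.orders']
```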

How to Get Started With a Knowledge Graph

To gain practical experience with data lineage and knowledge graphs, consider starting with Neo4j Aura Free, a cloud-hosted platform that lets you experiment with graph databases. You can use Cypher queries to trace data movement, identify dependencies, and analyze downstream impact, all of which are essential skills for effective data governance.
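As a starting point, a downstream-impact query in Cypher can be run from Python with the official Neo4j driver. The graph model here, `(:Dataset {name})-[:FEEDS]->(:Dataset)`, is a hypothetical assumption, and the function expects a driver object from the `neo4j` package (version 5.x, which provides `Driver.execute_query`). The unbounded `*1..` pattern is fine for a small experiment; on large graphs you may want an upper bound on the path length.

```python
# Assumed (hypothetical) graph model: (:Dataset {name})-[:FEEDS]->(:Dataset)
DOWNSTREAM_IMPACT = """
MATCH (:Dataset {name: $name})-[:FEEDS*1..]->(dep:Dataset)
RETURN DISTINCT dep.name AS name
ORDER BY name
"""


def downstream_impact(driver, name):
    """Return the names of all datasets downstream of `name`.

    `driver` is expected to be a neo4j.Driver, for example:
        from neo4j import GraphDatabase
        with GraphDatabase.driver(uri, auth=(user, password)) as driver:
            print(downstream_impact(driver, "crm.customers"))
    """
    # Extra keyword arguments to execute_query are passed as query parameters.
    records, _summary, _keys = driver.execute_query(DOWNSTREAM_IMPACT, name=name)
    return [record["name"] for record in records]
```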
