Explore the Neo4j Data Modeling MCP Server

Consulting Engineer, Neo4j

Enhance your graph data modeling workflow

Introduction

In this blog, I’ll discuss the benefits of the Model Context Protocol (MCP), what the Neo4j Data Modeling MCP server provides to your agentic applications, and how to implement it in an end-to-end workflow with other MCP servers.

MCP provides a powerful way for agents to easily gain access to standardized tooling. It’s a protocol that defines how applications can provide context in the form of tools, resources, and prompts to an LLM. This blog details how you can use the Neo4j Data Modeling MCP server to generate graph data models for your Neo4j-backed applications.

Graph data modeling consists of identifying entities within your data and connecting them with relationships. Both entities and relationships may also have properties that provide additional information.

When creating graph data models, it’s important to first understand the use cases they will address. This informs the queries we’ll write and, ultimately, how our data should be represented in the graph.

Neo4j is a schemaless database, so refactoring the data model is easy to do. This matters because it’s fairly common to refactor your data model to improve query performance or support additional use cases. Since the data modeling step may be revisited multiple times throughout a project’s development cycle, the Neo4j Data Modeling MCP server is a useful asset to have.

The current release of the Neo4j Data Modeling MCP Server is v0.1.1.

Check it out on GitHub.

Configuration

Add the Neo4j Data Modeling MCP server to your client application of choice with the following configuration:

    {
      "mcpServers": {
        "neo4j-graph-data-modeling": {
          "command": "uvx",
          "args": [ "mcp-neo4j-data-modeling@0.1.1" ]
        }
      }
    }

There are many MCP-compatible clients, such as Claude Desktop and the Cursor IDE. Check out a comprehensive list of MCP clients.
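
If your client already has other MCP servers configured, you may prefer to merge the entry into the existing file rather than paste over it. The following is a minimal sketch of that idea, assuming the client stores its configuration as a JSON file with a top-level "mcpServers" object like the one above. The function name `add_mcp_server` and the file path you pass in are hypothetical; check your client's documentation for the real location of its config file.

```python
import json
from pathlib import Path


def add_mcp_server(config_path: Path, name: str, command: str, args: list[str]) -> dict:
    """Add or update one entry under "mcpServers", leaving other entries intact."""
    # Start from the existing config if the file is there, otherwise from an empty one.
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    # Same shape as the configuration shown above: a command plus its arguments.
    servers[name] = {"command": command, "args": args}
    config_path.write_text(json.dumps(config, indent=2))
    return config
```

Calling `add_mcp_server(path, "neo4j-graph-data-modeling", "uvx", ["mcp-neo4j-data-modeling@0.1.1"])` would write the same entry as the snippet above while keeping any servers that were already listed.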

Data Modeling Resources

The Neo4j Data Modeling MCP server provides a few agent resources that assist in data modeling and data ingestion. These include the structure of nodes, relationships, and properties used to define a graph data model within the MCP server.

The MCP server also exposes a resource that lays out the process of ingesting data into Neo4j so you can use the Data Modeling MCP server in combination with other ingestion tools.

Data Modeling Tools

There are various tools exposed by the MCP server, including validation, import/export, visualization, and code-generation tools.

The MCP server offers validation tools for nodes, relationships, and whole data models. These tools take a Python dictionary as input that adheres to the structure defined by the resources discussed above. The dictionary is then converted to Pydantic models, and any errors are returned to the agent so the data model can be corrected.

The tools currently check several things, including:

- Key property existence on nodes

- Duplicate properties, nodes, or relationships

- Relationship source and target existence
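
To make these checks concrete, here is a rough sketch of the same three ideas in plain Python. It is not the server's implementation (which converts the input to Pydantic models), and the dictionary keys used here (`label`, `key_property`, `properties`, `start`, `end`, `type`) are assumptions for illustration rather than the schema the server's resources define.

```python
def validate_data_model(model: dict) -> list[str]:
    """Return human-readable errors; an empty list means every check passed."""
    errors = []
    labels = set()
    for node in model.get("nodes", []):
        label = node["label"]
        # Duplicate nodes
        if label in labels:
            errors.append(f"Duplicate node label: {label}")
        labels.add(label)
        # Key property existence
        if not node.get("key_property"):
            errors.append(f"Node {label} has no key property")
        # Duplicate properties on the same node
        names = [prop["name"] for prop in node.get("properties", [])]
        for name in sorted({n for n in names if names.count(n) > 1}):
            errors.append(f"Node {label} has duplicate property: {name}")
    seen_patterns = set()
    for rel in model.get("relationships", []):
        # Duplicate relationships
        pattern = (rel["start"], rel["type"], rel["end"])
        if pattern in seen_patterns:
            errors.append(f"Duplicate relationship: {pattern}")
        seen_patterns.add(pattern)
        # Relationship source and target existence
        for endpoint in (rel["start"], rel["end"]):
            if endpoint not in labels:
                errors.append(
                    f"Relationship {rel['type']} references unknown node: {endpoint}"
                )
    return errors


# Hypothetical model with three deliberate mistakes.
model = {
    "nodes": [
        {"label": "Contestant", "key_property": "name",
         "properties": [{"name": "age"}, {"name": "age"}]},
        {"label": "Tribe", "properties": []},
    ],
    "relationships": [
        {"type": "MEMBER_OF", "start": "Contestant", "end": "Tribe"},
        {"type": "VOTED_FOR", "start": "Contestant", "end": "Episode"},
    ],
}
for error in validate_data_model(model):
    print(error)
```

Returning the errors as a list, rather than raising on the first one, mirrors the idea that the agent receives the problems and corrects the model in one pass.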

The MCP server also provides a tool that generates a configuration you can use to create a Mermaid diagram of the data model. Many MCP clients support Mermaid diagrams, so this is useful for quickly visualizing and understanding your data models.
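
As an illustration of what such a configuration can look like, the sketch below turns a small model into Mermaid flowchart text. The input shape and the function name `to_mermaid` are hypothetical, and the output of the server's own tool may well be structured differently.

```python
def to_mermaid(model: dict) -> str:
    """Render nodes and relationships as a Mermaid flowchart definition."""
    lines = ["graph TD"]
    for node in model["nodes"]:
        label = node["label"]
        props = ", ".join(node.get("properties", []))
        # Show the property names under the label when there are any.
        text = f"{label}<br/>{props}" if props else label
        lines.append(f'    {label}["{text}"]')
    for rel in model["relationships"]:
        # A labeled arrow from the start node to the end node.
        lines.append(f"    {rel['start']} -->|{rel['type']}| {rel['end']}")
    return "\n".join(lines)


# Hypothetical two-node model.
model = {
    "nodes": [
        {"label": "Contestant", "properties": ["name", "age"]},
        {"label": "Tribe", "properties": ["name"]},
    ],
    "relationships": [
        {"type": "MEMBER_OF", "start": "Contestant", "end": "Tribe"},
    ],
}
print(to_mermaid(model))
```

Pasting the printed text into any Mermaid renderer should draw two boxes joined by a MEMBER_OF arrow.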

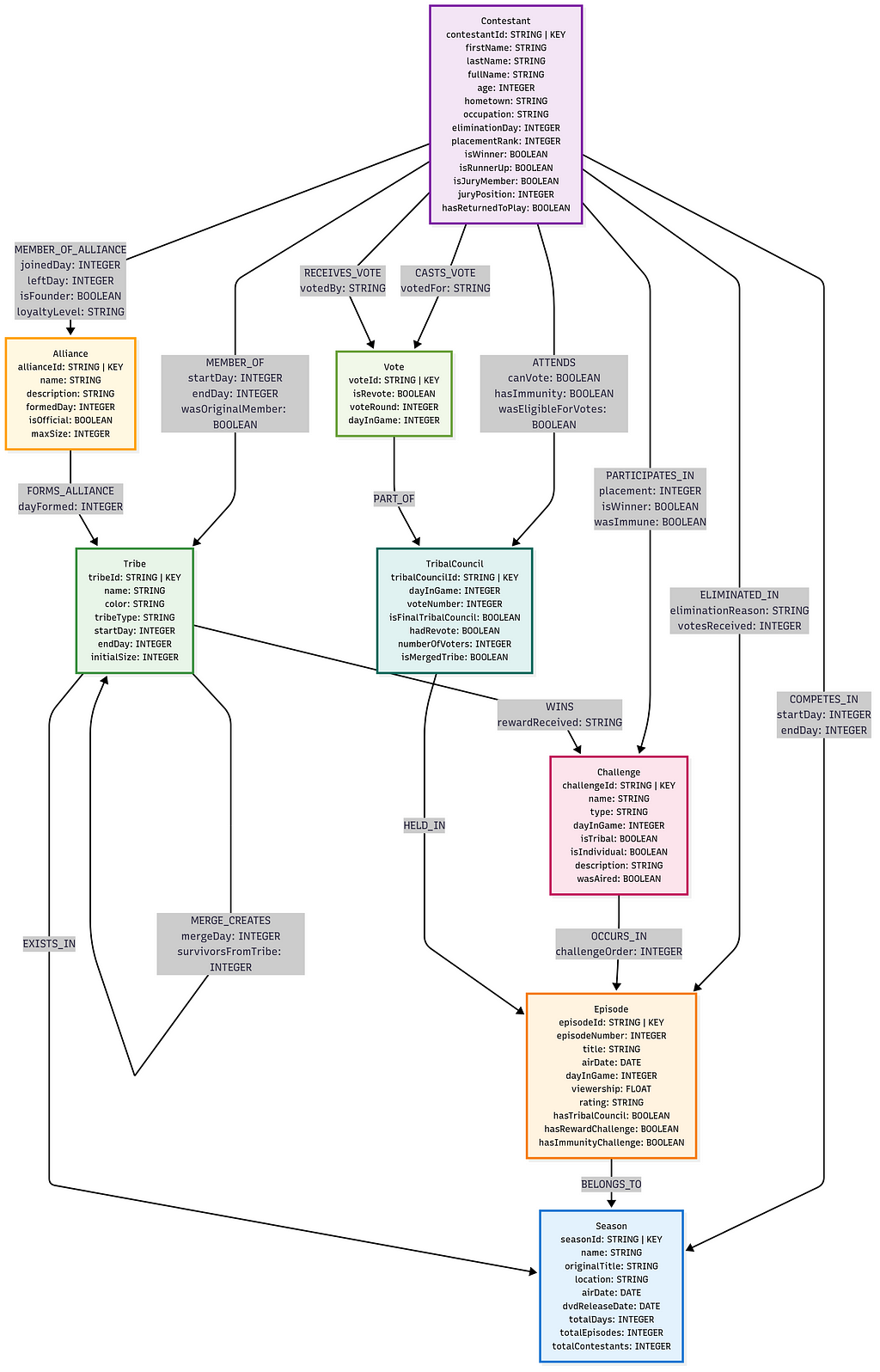

The data models below were generated from the Survivor: Borneo Wiki.

Use the Arrows.app drawing tool to manually create data models that can then be imported and modified within the client application. AI-generated data models can also be exported to Arrows format via a tool call so you can edit manually within Arrows.

Note the property format in the Arrows data model. This is done to maintain the information generated in the client application. Each property has the following format:

    <property-name>: <neo4j-type> | <description> | OPTIONAL<key-identifier>

The KEY portion is optional and should only be provided on a single property for each node or relationship. This is a constraint of the current Data Modeling MCP server build.
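
If you ever need to read this format outside the MCP server, a small parser could look like the sketch below. It assumes the key identifier is the literal text KEY and that descriptions never contain a `|` character; both are assumptions on my part, and `ArrowsProperty` and `parse_arrows_property` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ArrowsProperty:
    name: str
    neo4j_type: str
    description: str
    is_key: bool


def parse_arrows_property(text: str) -> ArrowsProperty:
    """Parse '<name>: <type> | <description> | KEY' (the last two parts are optional here)."""
    # Split on the first colon only, so colons inside the description are preserved.
    name, _, rest = text.partition(":")
    parts = [part.strip() for part in rest.split("|")]
    if not name.strip() or not parts[0]:
        raise ValueError(f"Expected '<name>: <type> | <description>', got: {text!r}")
    description = parts[1] if len(parts) > 1 else ""
    is_key = len(parts) > 2 and parts[2].upper() == "KEY"
    return ArrowsProperty(name.strip(), parts[0], description, is_key)


print(parse_arrows_property("name: STRING | Full name of the contestant | KEY"))
print(parse_arrows_property("age: INTEGER | Age at time of filming"))
```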

The MCP server also provides tools that generate Cypher code to ingest data according to the data model. This code includes the necessary indexes and constraints, as well as the code for ingesting nodes, relationships, and properties.
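
To give a feel for the kind of Cypher involved, here is a sketch that builds a uniqueness constraint and batched `UNWIND`/`MERGE` ingest statements from a few model details. This is my own illustration using standard Neo4j 5 syntax, not the server's actual output; the function names and the `$records`, `start_key`, and `end_key` parameter names are assumptions.

```python
def constraint_query(label: str, key_property: str) -> str:
    """Uniqueness constraint on a node's key property (Neo4j 5 syntax)."""
    name = f"{label.lower()}_{key_property.lower()}_unique"
    return (
        f"CREATE CONSTRAINT {name} IF NOT EXISTS "
        f"FOR (n:{label}) REQUIRE n.{key_property} IS UNIQUE"
    )


def node_ingest_query(label: str, key_property: str, properties: list[str]) -> str:
    """Batched upsert: one MERGE per record in the $records parameter."""
    lines = [
        "UNWIND $records AS record",
        f"MERGE (n:{label} {{{key_property}: record.{key_property}}})",
    ]
    # Set the non-key properties after the MERGE so the key alone decides identity.
    others = [p for p in properties if p != key_property]
    if others:
        lines.append("SET " + ", ".join(f"n.{p} = record.{p}" for p in others))
    return "\n".join(lines)


def relationship_ingest_query(rel_type: str, start: tuple[str, str], end: tuple[str, str]) -> str:
    """Batched relationship upsert; start and end are (label, key_property) pairs."""
    return "\n".join([
        "UNWIND $records AS record",
        f"MATCH (a:{start[0]} {{{start[1]}: record.start_key}})",
        f"MATCH (b:{end[0]} {{{end[1]}: record.end_key}})",
        f"MERGE (a)-[:{rel_type}]->(b)",
    ])


print(constraint_query("Contestant", "name"))
print(node_ingest_query("Contestant", "name", ["name", "age"]))
print(relationship_ingest_query("MEMBER_OF", ("Contestant", "name"), ("Tribe", "name")))
```

Creating the constraint before loading also gives `MERGE` an index to use when it looks up existing nodes, which is why constraints usually run first.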

If the agent also has access to Cypher execution tools, it may use the Neo4j Data Ingest Process resource to inform its ingestion logic.

An End-To-End Developer Flow

Development with the Data Modeling MCP server tends to follow a common process. It starts with some data discovery within the client application and can optionally bring in the Neo4j Cypher MCP server for loading and querying the resulting graph.

In this example, I’ll walk through a quick development session where I used Claude Desktop and the Neo4j Cypher and Data Modeling MCP servers to create a graph of Survivor data from season 1.

We’ll be creating a different graph data model from the one above.

The Claude configuration file I used:

    {
      "mcpServers": {
        "mcp-data-modeling": {
          "command": "uvx",
          "args": [ "mcp-neo4j-data-modeling@0.1.1" ]
        },
        "mcp-cypher": {
          "command": "uvx",
          "args": [ "mcp-neo4j-cypher@0.2.3" ],
          "env": {
            "NEO4J_URL": "bolt://localhost:7687",
            "NEO4J_USERNAME": "neo4j",
            "NEO4J_PASSWORD": "password",
            "NEO4J_DATABASE": "neo4j"
          }
        }
      }
    }

I used a local Neo4j instance via Neo4j Desktop to store my resulting graph.

You first need to provide the agent with data and some use cases. This data can come from a website, a provided CSV file, or MCP tool call results. Once the agent analyzes the data and understands your use cases, it can begin the modeling process.

I provided Claude with a link to the Survivor: Borneo Wiki and some use cases I wanted to solve with the graph.

Once Claude understands our data, it will then reference the schema resources exposed by the Data Modeling MCP server to construct a JSON representation of the data model. This will then be passed to the data model validation tool, which will catch any errors in the model. Claude should then fix these errors and prompt for any missing information.

Once a valid data model is generated, Claude can use the Mermaid configuration tool to receive a valid Mermaid configuration. You can then use this to generate a visualization of the data model.

Many client applications, such as Claude Desktop and Cursor, support Mermaid diagrams.

Once we have the first draft visualized, we can request modifications from Claude. Each request loops back through the data model correction → validation → visualization steps.

We’re happy with this data model for our demo purposes, so now we can move on to the ingest phase. The code generation tools provided by the Data Modeling MCP server can be used to ingest data into a Neo4j instance. While the Data Modeling MCP server doesn’t currently connect to a database, you can use the Neo4j Cypher MCP server to run any ingest code in your client application.

Note that ingesting data via Claude Desktop is not recommended, but can be good for quick proofs of concept.

Notice here that Claude chose to use only the constraint Cypher queries that the Data Modeling MCP server provides. Sometimes Claude may choose to write its own Cypher code if we don’t explicitly ask it to use the MCP tools.

Finally, you can query your Neo4j database to ensure that data was loaded correctly. You can do this using the Neo4j Cypher MCP server or manually via the Neo4j Aura console or the Neo4j Desktop application.

We can then get detailed answers to our use cases.

NOTE: Major Survivor Borneo spoilers below

Summary and Next Steps

Data modeling can sometimes be a hurdle when it comes to migrating data to the graph, and the Neo4j Data Modeling MCP server alleviates some of the pain points. You can now easily get a proof of concept complete or iterate on different data model versions with the ability to validate and visualize within the same application. Other MCP servers can also be combined with the Data Modeling MCP server to create end-to-end development workflows that allow you to quickly explore your data as a graph.

You can learn more about the Neo4j Data Modeling MCP server by watching Jason Koo’s video tutorial.

And be sure to check out the GitHub repos:

Explore the Neo4j Data Modeling MCP Server was originally published in Neo4j Developer Blog on Medium.