RDF vs. Property Graphs: Choosing the Right Approach for Implementing a Knowledge Graph

Chief Scientist, Neo4j


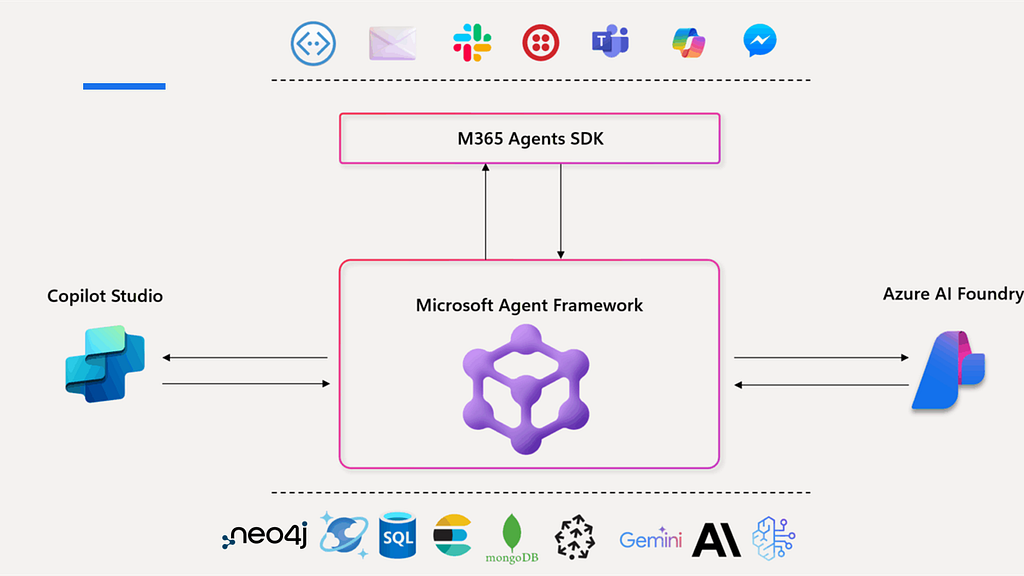

The generative AI market has grown by a staggering 3,600% year over year according to O’Reilly, fueling renewed demand for knowledge graphs.

The technology of choice for highly connected, heterogeneous data, knowledge graphs work well for grounding large language models (LLMs). In fact, independent research from data.world and Microsoft has highlighted the benefits of using knowledge graphs for retrieval-augmented generation (RAG) use cases.

Building and operating a knowledge graph brings a plethora of design decisions. This article compares two methods: RDF from the original 1990s Semantic Web research and the property graph model from the modern graph database.

What Is a Knowledge Graph?

A knowledge graph is a semantically rich data model for storing, organizing, and understanding connected entities. A knowledge graph contains three essential elements:

- Entities, which represent the data of the organization or domain area.

- Relationships, which show how the data entities interact with or relate to each other. Relationships provide context for the data.

- An organizing principle that captures meta-information about core concepts relevant to the business.

Put together, these elements create a self-describing data model that enhances the data’s fidelity and potential for reuse. The organizing principle serves as a meta-layer, acting as a contract between the data and its users.

Recent research has demonstrated that the organizing principle can operate on a range of complexity levels, from simple labels on nodes with named relationships to intricate product hierarchies (e.g., Product Line -> Product Category -> Product) to a comprehensive ontology that stores a business vocabulary. The key is to select the appropriate level of complexity for the job at hand.

What Is RDF?

RDF stands for Resource Description Framework, a W3C standard for data exchange on the Web. It is also often (mis)used to describe a particular approach to managing data.

As a framework for representing the Web, RDF captures structure using a triple, the basic unit in RDF. A triple is a statement with three elements: two nodes connected by an edge (also known as a relationship). The elements of a triple are identified by Uniform Resource Identifiers (URIs) and arranged as subject-predicate-object:

- The subject is a resource (node) in the graph;

- The predicate represents an edge (relationship); and

- The object is another node or a literal value.

Consider an RDF model that represents a brother and sister, Daniel and Sunita. Sunita owns and drives a Volvo she purchased on January 10, 2011. Daniel has also driven Sunita’s car since March 15, 2013.
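One way to write this example down is as a set of plain Python tuples. This is only a minimal sketch: the `example.org` namespace, the `ex` helper, and every predicate name are hypothetical and not taken from any published vocabulary. Because a triple cannot carry a date, the ownership and Daniel's driving are modeled here as intermediate nodes.

```python
# All identifiers below (the example.org namespace and every predicate name)
# are hypothetical and chosen only for illustration.
EX = "http://example.org/"


def ex(name: str) -> str:
    """Return a URI in the hypothetical example namespace."""
    return EX + name


# An RDF graph is a set of (subject, predicate, object) triples.
# Objects are either URIs (other nodes) or plain literal values.
triples = {
    (ex("Daniel"), ex("siblingOf"), ex("Sunita")),
    (ex("Sunita"), ex("drives"), ex("Volvo")),
    # A triple cannot carry a date, so the ownership becomes its own node.
    (ex("ownership1"), ex("owner"), ex("Sunita")),
    (ex("ownership1"), ex("vehicle"), ex("Volvo")),
    (ex("ownership1"), ex("since"), "2011-01-10"),
    # The same applies to Daniel driving the car since a given date.
    (ex("driving1"), ex("driver"), ex("Daniel")),
    (ex("driving1"), ex("vehicle"), ex("Volvo")),
    (ex("driving1"), ex("since"), "2013-03-15"),
}


def objects(subject: str, predicate: str) -> set:
    """Collect every object stated for a given subject and predicate."""
    return {o for s, p, o in triples if s == subject and p == predicate}


print(objects(ex("driving1"), ex("since")))
```

A real deployment would more likely use an RDF library and an agreed vocabulary. The tuples are only meant to make the subject-predicate-object shape visible.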

The RDF model became well-known around the same time as knowledge graphs under the umbrella of the Semantic Web. The goal of the Semantic Web was to add machine-readable data with well-defined semantics to the public web using W3C standards. This would allow software agents to treat the web as a vast distributed data structure. By combining metadata (such as ontologies) and query languages (such as SPARQL), these agents would be able to infer knowledge from the web’s data.

Sadly, this excellent vision never came to pass. The technologies developed under the umbrella of the Semantic Web found their way into the database world as “triple stores,” where the same techniques were applied to local databases (rather than the Web). However, SQL databases were dominant at the time, largely edging out triple stores.

With the rise of the graph database, triple stores began to market themselves as a type of graph database. Triple stores typically use SPARQL as their query language. As with the grand vision of the queryable Web, SPARQL allows users to reason over sets of triples in the database, guided by the rules defined in an ontology. However, the RDF approach has significant drawbacks.

The Practical Challenges of Implementing RDF

Implementing a knowledge graph with RDF can be challenging because RDF wasn’t designed with database systems in mind. For one, it’s not possible to identify unique relationships of the same type between two nodes in RDF. When a pair of nodes is connected by multiple relationships of the same type, they are represented by a single RDF triple. The expressiveness of the data model is limited since you can’t capture scenarios where multiple distinct relationships of the same type exist.

Because relationships in RDF don’t really exist as first-class citizens, being hidden inside triples, additional effort is often required to maintain the fidelity and utility of models. Unfortunately, this also makes the models more verbose and complicated to use and maintain. In a social network, for example, users typically follow many other users and, in turn, are followed by others. To store these multiple relationships, RDF must introduce more triples, with new nodes, to model the properties of each relationship. This workaround is analogous to using join tables in a relational database.
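The collapsing behavior and the intermediate-node workaround can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any particular triple store is implemented: the `ex:` identifiers, the `add_relationship_node` helper, and the second trip date are all hypothetical.

```python
# Hypothetical "ex:" identifiers, used only for illustration.
triples = set()  # an RDF graph is a set, so duplicate statements collapse

# Daniel drives the Volvo on two separate occasions.
triples.add(("ex:Daniel", "ex:drives", "ex:Volvo"))
triples.add(("ex:Daniel", "ex:drives", "ex:Volvo"))
direct_count = len(triples)  # the two statements are indistinguishable


def add_relationship_node(rel_id, source, rel_type, target, properties):
    """Workaround: promote one relationship instance to a node of its own."""
    triples.add((rel_id, "ex:source", source))
    triples.add((rel_id, "ex:relationshipType", rel_type))
    triples.add((rel_id, "ex:target", target))
    for key, value in properties.items():
        triples.add((rel_id, key, value))


# Each occasion now needs four triples instead of one.
add_relationship_node("ex:trip1", "ex:Daniel", "ex:drives", "ex:Volvo",
                      {"ex:date": "2013-03-15"})
add_relationship_node("ex:trip2", "ex:Daniel", "ex:drives", "ex:Volvo",
                      {"ex:date": "2013-04-02"})

print(direct_count, len(triples))
```

The extra triples per relationship instance are the verbosity the text describes, and the intermediate node plays the same role as a row in a join table.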

While ontologies make RDF data “smarter” in the sense that they advertise a processing model for that data, there’s no guarantee that a SPARQL query that reasons over an expressive ontology will ever terminate. This leaves the user waiting, potentially indefinitely, for an answer. Computability of queries isn’t the only downside, however. Ontology-driven approaches like RDF also pose practical challenges for implementations:

- Skill building. Acquiring the expertise to create standard ontologies takes considerable time and effort. This investment brings risks and inefficiencies, as the highly specialized nature of these skills limits their transferability across the organization.

- Resource-heavy. Building an ontology is a time-consuming process, and the ontology must be complete to deploy the knowledge graph. This significantly delays time to business value.

- Fidelity to the business reality. Ontologies perpetually lag behind the business domains they support because the business moves more quickly than ontology updates. As a result, the knowledge graph becomes a reflection of the business as it was in the past, not its current state.

There are situations when it makes sense to invest in an ontology approach like RDF. An ontology that bridges the gap between two local domains is useful when those domains are individually well understood and information exchange is the goal. Importantly, ontologies can also be used outside of the Semantic Web stack since they are just graphs, after all.

What Are Property Graphs?

Information is organized as nodes, relationships, and properties in a property graph. Nodes are tagged with one or more labels, identifying their role in the network. Nodes can also store any number of properties as key-value pairs.

Relationships provide directed, named connections between two nodes. Relationships always have a direction, a type, a start node, and an end node, and they can have properties, just like nodes. Although relationships are always directed, they can be navigated efficiently in either direction.

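The same brother and sister we looked at earlier, Daniel and Sunita, can also be modeled as a property graph. Daniel, Sunita, and the Volvo become nodes, while owning and driving become relationships that carry their dates as properties.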
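To make the model concrete, here is a minimal in-memory sketch in Python. It is not how Neo4j or any other database stores data. The `Node` and `Relationship` classes, the label and relationship type names, and the `outgoing` and `incoming` helpers are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    labels: set                                      # one or more labels
    properties: dict = field(default_factory=dict)   # key-value pairs


@dataclass
class Relationship:
    rel_type: str                                    # the relationship's name
    start: Node                                      # direction: start -> end
    end: Node
    properties: dict = field(default_factory=dict)   # relationships hold data too


daniel = Node({"Person"}, {"name": "Daniel"})
sunita = Node({"Person"}, {"name": "Sunita"})
volvo = Node({"Car"}, {"brand": "Volvo"})

relationships = [
    Relationship("SIBLING_OF", daniel, sunita),
    Relationship("OWNS", sunita, volvo, {"since": "2011-01-10"}),
    Relationship("DRIVES", sunita, volvo),
    Relationship("DRIVES", daniel, volvo, {"since": "2013-03-15"}),
]


def outgoing(node: Node) -> list:
    """Relationships that start at the given node."""
    return [r for r in relationships if r.start is node]


def incoming(node: Node) -> list:
    """Relationships that end at the given node (navigating 'backwards')."""
    return [r for r in relationships if r.end is node]


for rel in incoming(volvo):
    print(rel.start.properties["name"], rel.rel_type, rel.properties)
```

Compared with the triple-based version, the dates sit directly on the `OWNS` and `DRIVES` relationships, so no intermediate nodes are needed.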

The property graph model was designed to be a flexible and efficient way to store connected data, allowing for fast querying and traversal. It follows the important principle of storing data in a format that closely resembles the logical model – like how we might sketch out the data model on a whiteboard.

The property graph model reflects the logical data model that has been created to represent a company’s data and its relationships. In a native property graph like Neo4j, the physical storage model is isomorphic to the logical model. What you draw is what you store.

Property graphs have their own ISO standard query language called GQL, with Cypher being the most widely used implementation. Cypher and GQL differ from SPARQL as they are declarative languages that focus on pattern matching and not reasoning. In most practical scenarios, pattern matching suffices, and queries written in these languages will finish executing in a time proportional to the size of the graph being explored.
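The idea of pattern matching can be illustrated with a toy matcher in Python. It is a sketch of the concept only and says nothing about how Cypher or GQL engines actually plan or execute queries. The data layout and the `match` function are hypothetical. The comment shows a roughly equivalent Cypher pattern for comparison.

```python
# Toy data: node ids map to labels and properties; relationships are
# (start, type, end, properties) tuples. All names are illustrative.
nodes = {
    "daniel": {"labels": {"Person"}, "name": "Daniel"},
    "sunita": {"labels": {"Person"}, "name": "Sunita"},
    "volvo": {"labels": {"Car"}, "brand": "Volvo"},
}
relationships = [
    ("sunita", "OWNS", "volvo", {"since": "2011-01-10"}),
    ("sunita", "DRIVES", "volvo", {}),
    ("daniel", "DRIVES", "volvo", {"since": "2013-03-15"}),
]


def match(start_label, rel_type, end_label):
    """Yield (start node, relationship properties, end node) for each fit.

    Roughly comparable to the Cypher pattern:
        MATCH (p:Person)-[d:DRIVES]->(c:Car) RETURN p, d, c
    Each relationship is inspected once, so the work grows with the number
    of relationships explored and the loop always finishes.
    """
    for start, rtype, end, props in relationships:
        if (rtype == rel_type
                and start_label in nodes[start]["labels"]
                and end_label in nodes[end]["labels"]):
            yield nodes[start], props, nodes[end]


drivers = sorted(person["name"] for person, _, _ in match("Person", "DRIVES", "Car"))
print(drivers)
```

The matcher only finds what is explicitly stored. It does not infer new facts, which is the trade-off the paragraph above describes.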

The Advantages of Using a Property Graph

Building a knowledge graph with a property graph database is straightforward compared to the alternatives. Unlike RDF, property graphs were designed as a database model (rather than data exchange format) for applications and analytics. Property graphs easily handle many-to-many relationships or multiple relationships of the same type between the same two nodes. They also provide much greater flexibility throughout the development process because they aren’t limited to a predefined structure. A property graph makes it possible for the business to build, expand, and enrich the knowledge graph over time. The main pros of using a property graph include:

- Simple: Property graphs are simple and quick to set up and use. Knowledge graphs built with property graphs have low complexity for new and experienced users.

- Detailed: User data can easily be stored in both nodes and relationships, ensuring that entities and their connections accurately reflect the business domain.

- Interoperable: Neo4j can consume and produce RDF for interoperability with legacy triple stores and integrate with any modern database system, including relational and document stores.

- Standards Compliant: With ISO GQL, implementers can have the same confidence for graph implementations that they have for relational databases that use ISO SQL.

The ROI of Implementing a Property Graph

The results of using a property graph for knowledge graph implementation speak for themselves. From biotechnology to pharmaceuticals to space exploration, organizations in different industries solve complex problems, reduce costs, and achieve breakthroughs thanks to the flexibility and scalability of the property graph model.

NASA: Unlocking Decades of Project Data for Faster, Smarter Space Exploration

Though NASA has project data going back to the late 1950s, organizational silos kept valuable insights hidden until a knowledge graph was implemented.

NASA converted its database into a property graph, which now stores millions of historical documents. The property graph database gives engineers access to information about past projects, enabling them to identify trends, prevent disasters, and incorporate lessons learned into new projects.

The property graph strategy has already saved NASA millions, along with years of research and development, in its Mission to Mars planning.

Basecamp Research: Mapping Earth’s Biodiversity for Biotechnological Breakthroughs

Basecamp Research built the world’s largest knowledge graph of Earth’s natural biodiversity using Neo4j’s property graph database. By collecting and connecting biological, chemical, and environmental data from across the globe, including proprietary protein and genome sequences, Basecamp has expanded known proteins by 50% and documented over 5 billion biological relationships.

This knowledge graph reveals intricate biological networks and has become a superior resource for protein design applications and generative AI models, fostering the development of improved drugs, food products, and diagnostics.

Novo Nordisk: Streamlining Clinical Trials with a Connected Data Landscape

Novo Nordisk uses a Neo4j-based knowledge graph to power StudyBuilder, streamlining the process of clinical data collection and reporting in healthcare.

The property graph helps navigate the highly connected landscape of data standards (CDISC, SNOMED, UCUM, etc.), allowing StudyBuilder to ensure end-to-end consistency, built-in compliance, automation, and content reuse. This innovative approach to handling study specifications has been shared with the pharmaceutical community as an open-source project.

Property Graph or RDF for My Knowledge Graph?

While graphs are powerful, not all graphs are equal. The property graph model offers the most advanced approach for analytics and application development. Despite this, the RDF model has kept a toehold in some industries since its Semantic Web heyday.

| Capability | Property Graph | RDF |
|---|---|---|
| Store as native graphs | ⚫ | ◯ |
| Efficient, low-friction modeling | ⚫ | ◯ |
| Ease of getting started and incremental changes | ⚫ | ◯ |
| Efficient query of entity->property and relationship->property | ⚫ | ◯ |
| Query Language | Cypher/GQL | SPARQL |
| Speed/performance | ⚫ | ◑ |
| Analyze and enrich with data science | ⚫ | ◯ |
| Store transactional data natively | ⚫ | ◯ |
| Store semantic data natively | ⚫ | ⚫ |
| Leverage pre-built ontologies | ⚫ | ⚫ |

The model you choose will, to an extent, be defined by circumstance. When free to choose, most folks will pick the property graph model for its simplicity and agility. Due to its wide use, the property graph model enjoys a large ecosystem of tooling, literature, training, a large pool of professionals, and ISO Standards support. By contrast, the RDF community inhabits a world where up-front design of ontologies and standards intended for interoperability on the Web are repurposed as database tools.

Graphs have moved on considerably since the Semantic Web era and contemporary best practices draw from both schools of thought. It’s more practical to use property graphs by default and layer in the organizing principles from the RDF world (taxonomies, ontologies) when your system needs them, not as a technical prerequisite.