APOC Hidden Gems: What You Could Have Missed

CTO, Larus BA Italy

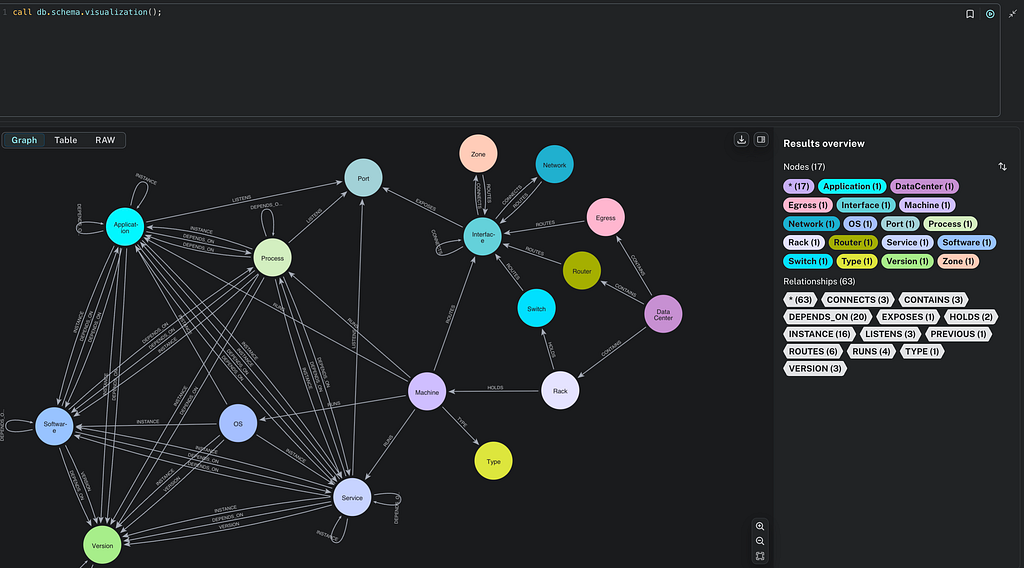

APOC contains about 500 procedures and functions. Here we’ll discuss a few particularly interesting ones and the use cases they fit. Enjoy!

Started in 2016 as a very simple collection of useful procedures and functions, the APOC project grew in popularity very quickly and now offers roughly 500 of them, covering about 90% of use cases in the Neo4j ecosystem.

In the past, I wrote articles about how to leverage useful hidden gems like:

- Transform MongoDB collections automagically into Graphs

- Efficient Neo4j Data Import Using Cypher-Scripts

Now I’ll talk about others that we recently added to the project and that can be useful in several scenarios.

apoc.load.directory

Sometimes you need to ingest files spread across sub-directories using the same business rules (that is, the same Cypher query). In the past you had to execute the query once for each specific file; now you can use the apoc.load.directory procedure to search a directory recursively. So instead of doing this:

WITH 'path/to/directory/' AS baseUrl

CALL apoc.load.csv(baseUrl + 'myfile.csv', {results:['map']}) YIELD map

MERGE (p:Person{id: map.id}) SET p.name = map.name

RETURN count(p);

WITH 'path/to/directory/sub/' AS baseUrl

CALL apoc.load.csv(baseUrl + 'myfile1.csv', {results:['map']}) YIELD map

MERGE (p:Person{id: map.id}) SET p.name = map.name

RETURN count(p);

WITH 'path/to/directory/sub/sub1/' AS baseUrl

CALL apoc.load.csv(baseUrl + 'myfile2.csv', {results:['map']}) YIELD map

MERGE (p:Person{id: map.id}) SET p.name = map.name

RETURN count(p);

You can simply do this:

CALL apoc.load.directory('*.csv', 'path/to/directory', {recursive: true}) YIELD value AS url

CALL apoc.load.csv(url, {results:['map']}) YIELD map

MERGE (p:Person{id: map.id}) SET p.name = map.name

RETURN count(p)

Very nice, right?!

As you can see the procedure takes 3 parameters:

- The file name pattern

- The root directory

- A configuration map that, at the time of writing (May 2021), supports just one property, recursive=true/false, which controls whether sub-directories of the root directory are searched as well

It returns a list of URLs that match the input parameters, so you can easily chain it with other import/load procedures.
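To make the three parameters concrete, here is a rough Python model of the matching described above. It is only a conceptual sketch under stated assumptions, not APOC’s implementation: the helper name find_files and the temporary directory layout are made up for illustration, and the real procedure returns URLs resolved against Neo4j’s import settings rather than plain relative paths.

```python
import tempfile
from fnmatch import fnmatch
from pathlib import Path


def find_files(pattern: str, root: str, recursive: bool = True) -> list[str]:
    """Return files under `root` whose name matches `pattern`, sorted.

    Hypothetical helper that mirrors the three arguments of
    apoc.load.directory: file name pattern, root directory, recursive flag.
    """
    base = Path(root)
    # rglob walks all sub-directories, glob only looks at the root itself.
    candidates = base.rglob("*") if recursive else base.glob("*")
    return sorted(
        p.relative_to(base).as_posix()
        for p in candidates
        if p.is_file() and fnmatch(p.name, pattern)
    )


def demo() -> tuple[list[str], list[str]]:
    """Recreate the directory layout of the example and search it twice."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "sub" / "sub1").mkdir(parents=True)
        for rel in ("myfile.csv", "sub/myfile1.csv",
                    "sub/sub1/myfile2.csv", "sub/notes.txt"):
            (root / rel).write_text("id,name\n1,John\n")
        return (find_files("*.csv", tmp, recursive=True),
                find_files("*.csv", tmp, recursive=False))


if __name__ == "__main__":
    recursive_hits, flat_hits = demo()
    print("recursive:", recursive_hits)
    print("non-recursive:", flat_hits)
```

With recursive set to true all three CSV files are found; with false only the one directly inside the root directory matches, which is the difference the configuration flag is meant to control.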

apoc.load.directory.async.*

Please raise your hand if you hate Cron and other similar mechanisms!

Unfortunately, they are widely used when you need to ingest data from files. To overcome this, we built a “proactive” feature that lets you define a listener on the file system that is executed every time a file is created, updated, or deleted.

These procedures are extremely helpful when you’re ingesting data via files such as CSV, JSON, GraphML, Cypher and so on, because they don’t rely on a Cron job but are constantly active. This has several upsides: if a job fails for some reason (for example, network issues that block the upload of the file into the import directory), you don’t need to wait for the next Cron run. You can upload the file whenever you want, and the ingestion starts right after!

It works in a very similar way to APOC Triggers so the first thing to do is define the listener:

CALL apoc.load.directory.async.add(

'insert_person',

'

CALL apoc.load.csv($filePath, {results:['map']}) YIELD map

MERGE (p:Person{id: map.id}) SET p.name = map.name

',

'*.csv',

'path/to/directory',

{ listenEventType: ['CREATE', 'MODIFY'] })

As you can see the procedure takes 5 parameters:

- The listener name

- The Cypher query that is executed every time the listener catches a new event

- The file name pattern

- The root directory

- A configuration map that, at the time of writing (May 2021), supports just one property, listenEventType, a list of the file system event types (CREATE, MODIFY, DELETE) that you want to listen for

An important thing to know is that we inject the following parameters into the Cypher query (the second argument), so you can use them there:

- fileName: the name of the file which triggered the event

- filePath: the absolute path of the file which triggered the event if apoc.import.file.use_neo4j_config=false, otherwise the relative path starting from Neo4j’s Import Directory

- fileDirectory: the absolute path of the directory containing the file which triggered the event if apoc.import.file.use_neo4j_config=false, otherwise the relative path starting from Neo4j’s Import Directory

- listenEventType: the triggered event (“CREATE”, “DELETE” or “MODIFY”). The event “CREATE” happens when a file is added to the folder, “DELETE” when a file is removed from the folder, and “MODIFY” when a file in the folder is changed. (Note that renaming a file triggers two events: first “DELETE” and then “CREATE”.)
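The difference between the absolute and relative paths is easier to see with concrete values. The following Python sketch models the four injected parameters as described in the list above; it is not APOC code, the helper name listener_params is hypothetical, and the import directory /var/lib/neo4j/import is just an assumed example location.

```python
from pathlib import PurePosixPath

EVENT_TYPES = {"CREATE", "MODIFY", "DELETE"}


def listener_params(file_path: str, import_dir: str, event_type: str,
                    use_neo4j_config: bool) -> dict[str, str]:
    """Model the parameters injected into the listener query.

    Hypothetical helper: `use_neo4j_config` stands in for the
    apoc.import.file.use_neo4j_config setting mentioned above.
    """
    if event_type not in EVENT_TYPES:
        raise ValueError(f"unknown event type: {event_type}")
    path = PurePosixPath(file_path)
    if use_neo4j_config:
        # Paths are reported relative to Neo4j's import directory.
        path = path.relative_to(import_dir)
    return {
        "fileName": path.name,
        "filePath": str(path),
        "fileDirectory": str(path.parent),
        "listenEventType": event_type,
    }


if __name__ == "__main__":
    # Assumed example locations, not taken from a real installation.
    import_dir = "/var/lib/neo4j/import"
    changed = import_dir + "/csvFolder/people.csv"
    print(listener_params(changed, import_dir, "CREATE", use_neo4j_config=True))
    print(listener_params(changed, import_dir, "MODIFY", use_neo4j_config=False))
```

Whichever form you get, $filePath is the value to hand to the load procedure inside the listener query, as the insert_person example does.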

These other procedures help you manage the listener lifecycle:

- apoc.load.directory.async.list(): returns a list of all running listeners

- apoc.load.directory.async.remove('<listener_name>'): removes one listener

- apoc.load.directory.async.removeAll(): removes all the listeners

apoc.periodic.truncate

The procedure is useful when you’re in the prototyping phase and still defining your graph model or your ingestion strategies, because it lets you wipe the entire database very easily:

CALL apoc.periodic.truncate({dropSchema: true})

As you can see, the procedure takes a configuration map that, at the time of writing (May 2021), supports just one property, dropSchema=true/false, which controls whether indexes and constraints are dropped as well.

apoc.util.(de)compress

Sometimes you need to store large string values in nodes or relationships. For this case we added two functions that convert between a string and a compressed byte array in a very easy way.

You can compress the data in the following way:

CREATE (p:Person{name: event.name,

bigStringCompressed: apoc.util.compress(event.bigString, { compression: 'FRAMED_SNAPPY'})})

You can decompress the data in the following way:

MATCH (p:Person{name: 'John'})

RETURN apoc.util.decompress(p.bigStringCompressed, { compression: 'FRAMED_SNAPPY'}) AS bigString

Supported compression types are:

- GZIP

- BZIP2

- DEFLATE

- BLOCK_LZ4

- FRAMED_SNAPPY

Another option is to compress the source data (e.g. gigabytes of JSON) on the client, send the compressed byte arrays (a few MB) to the server via Bolt as parameters, and then decompress, parse and process the JSON on the server.
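A minimal sketch of the client side of that idea in Python, using only the standard library. The parameter name $payload, the helper names and the sample records are assumptions made for this example. The server-side decompression is imitated locally so the snippet runs without a database, and it assumes that the gzip bytes Python produces are what the GZIP compression type expects.

```python
import gzip
import json

# Query to run on the server. apoc.util.decompress and the GZIP type come
# from the section above; the parameter name $payload is made up here.
DECOMPRESS_QUERY = (
    "RETURN apoc.util.decompress($payload, {compression: 'GZIP'}) AS json"
)


def compress_payload(records: list[dict]) -> bytes:
    """Serialize the records to JSON and gzip them on the client."""
    return gzip.compress(json.dumps(records).encode("utf-8"))


def decompress_payload(payload: bytes) -> list[dict]:
    """Local stand-in for the server-side decompress + JSON parsing step."""
    return json.loads(gzip.decompress(payload).decode("utf-8"))


# Made-up sample data, just to have something repetitive to compress.
records = [{"id": i, "name": f"person-{i}"} for i in range(10_000)]
payload = compress_payload(records)
raw_size = len(json.dumps(records).encode("utf-8"))

print(f"raw JSON: {raw_size} bytes, compressed: {len(payload)} bytes")
```

With the official Neo4j Python driver, the bytes value would then be passed as the payload parameter of DECOMPRESS_QUERY (for example through session.run); that call is left out here because it needs a running server with APOC installed.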

Other Minor Changes

We also made tons of minor changes. Here are some of them:

- We updated the Couchbase procedures to the latest driver version in order to have state-of-the-art support for the database.

- We added stats for the computation of apoc.periodic.iterate: you now get the number of entities and properties affected by the procedure.

- The UUID and TTL features now work per database.

The Road Ahead

APOC’s growth is not stopping! We’re working hard to add new cool features that you can leverage in the near future:

- Support for reading and writing the Apache Arrow file format!

- Support for reading and writing data from/to Redis!

And many more!

By the way, if you need a feature, please file an issue in our GitHub repository. We’re very happy to hear what you need!

APOC Hidden Gems: What You Could Have Missed was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.