Efficiently Monitor Neo4j and Identify Problematic Queries

Big Data Engineer

10 min read

Monitoring is an essential element of every database, tool, or component. In this post, I will explain how I monitor the Neo4j database in a clustered environment using several tools, such as Dynatrace and Kibana. I will also share some best practices. A future post will show the practices I used to optimize bad queries.

Query Optimization

Before delving into the most useful operations for reducing memory allocation and improving efficiency, it’s important to understand how Neo4j provides the opportunity to evaluate queries.

Query Profile

Neo4j provides two statements to analyze the execution plan of a query:

- EXPLAIN — This command added before the query gives all the operations that would be performed during its execution so the query is not launched, and there are no changes in the database.

- PROFILE — This command gives the execution plan and returns some statistics, such as DB hits, the estimated number of rows of each subunit of the query, etc. For further details, see the Neo4j Cypher Manual Execution plans.

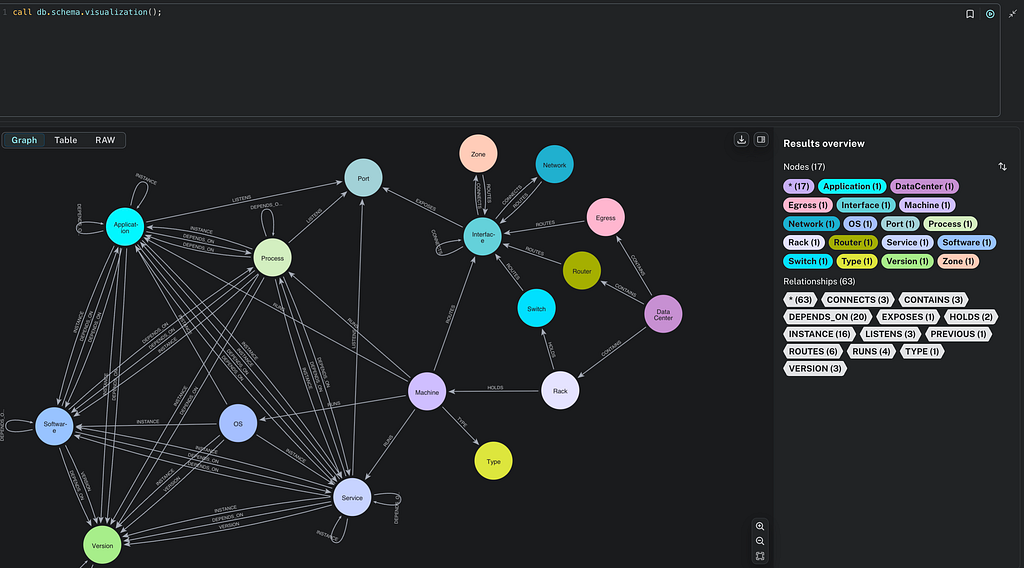

Domain Knowledge

Having a deep understanding of the domain and the data stored within the Neo4j database is crucial for writing efficient queries. I would say that the optimization process is made up of two parts:

- Network topology — Understanding the network topology and structure of your data is crucial for optimizing the performance of your Neo4j database.

- Query syntax — The syntax and structure of your queries play a vital role in optimizing their execution. Writing efficient queries involves understanding the query syntax, leveraging Cypher language features, and applying best practices.

Efficiently Traverse the Graph

Starting with Neo4j 5.9, Quantified Path Patterns (QPP) were introduced to match paths to efficiently traverse the graph. Prior to the introduction of QPP, there were two main possibilities:

- Use of APOC path-expanding procedures — The utilization of APOC path-expanding procedures offers a limited range of options. For instance, it isn’t possible to filter a node based on its properties. It is possible to customize the APOC procedures, but this entails a higher maintenance cost of continuously aligning the custom APOC with the bug fixes and new releases that emerge from the official branch.

- Use of Neo4j Traversal API — This framework enables the creation of a bespoke plugin for path traversing, tailored to the domain’s business logic requirements. This approach enables the alignment of APOC procedures and the removal of the overhead associated with the customization of a large library. This simplifies the code, improves the performance, and increases its maintainability. Unfortunately, custom plugins are not supported in AuraDB, and memory tracking doesn’t work when using these functions, so you may run out of memory and have to manually manage it.

Given the unfeasibility of utilizing QPP in this context, I have developed a plugin using the Neo4j Traversal API that replaces the previous custom APOC, which has consistently resulted in enhanced performance.

More Subqueries and Fewer List Comprehensions

By utilizing subqueries, you can break complex queries into smaller, more manageable units, which can then be executed in parallel. This parallel execution takes advantage of the available cores in the machine, enabling faster and more efficient query processing.

Neo4j’s query optimizer is designed to optimize the execution of subqueries, ensuring efficient evaluation and minimizing resource consumption:

//UNION wrapped in subquery

CALL {

MATCH (:Person)

RETURN 'person' AS type, count(*) AS count

UNION ALL MATCH (:Movie) RETURN 'movie' AS type, count(*) AS count

}// post union processing

WITH * WHERE count > 100 RETURN type, count ORDER BY count DESC LIMIT 5

There is a subcategory of subqueries, the existential subquery, in which partial subqueries are used to test for the existence of certain graph patterns:

WHERE EXISTS { MATCH (node)-[:REL]->…(:Label) WHERE expression … }

I often use existential subqueries since you can offload the evaluation of the existence condition to a separate parallel execution, which can significantly speed up the overall query.

Conversely, it’s generally not recommended to use list comprehension as it might not be optimized well by the query planner, potentially leading to slower query performance.

Avoid Useless Aggregation

When dealing with databases that contain millions of nodes and relationships, aggregation operations, like collect, can potentially consume a significant amount of heap memory. To avoid excessive memory usage, it is advisable to return results as a stream to the microservice, allowing it to manage the aggregation load off the cluster memory.

Returning results as a stream enables the microservice to process the data in a more controlled and efficient manner. Instead of loading the entire result set into memory at once, the microservice can process the data in a streaming fashion, reducing memory footprint and improving overall performance.

Combine Multiple Queries in One When Possible

The execution of a single query to perform multiple operations can often be more beneficial than executing multiple separate queries. Consolidating multiple operations into a single query offers several advantages in terms of memory usage, query optimization, and response-time reduction. When executing multiple queries separately, each requires memory allocation and cache space. By combining these operations into a single query, the overall memory consumed by query-specific resources, such as the plan cache, is reduced.

Use Cypher Queries Instead of APOC When Possible

Before, I suggested using a custom plugin. However, it is recommended to use an external plugin when absolutely necessary. This recommendation is primarily driven by maintenance considerations. When using custom plugins, the deployment of a new version require the restarting of all Neo4j processes, which can result in service interruption.

Load Test

Did you know that it’s possible to test query performance with JMeter? With JMeter, you can check if the query you refactored performs better or worse than the previous one. You can configure it to send queries to the Neo4j database and analyze performance metrics, such as response time, throughput, and error rates. I used this tool to test all the queries I rewrote.

Performance Comparison

I used all of the above to refactor the customer’s queries.

The Scenario

Suppose we have a water distribution network consisting of pipelines, reservoirs, water towers, etc. The network owner needs to:

- Extract parts of the network at any level, from the primary source to the end user.

- Know which pumping station supplies a particular service line.

- Get all the information about any element in the network, from a valve to a water tower. If an element in the network contains other elements, extract them, too. For example, a water tower contains the tank, the support structure, the inlet pipe, the outlet pipe, etc.

Each network element is modeled on the graph as a node, and for each element, there’s a history associated with it in the list using the HAS_VERSION relationship, which represents the version of an element at a particular time. For example, a valve might be open or closed at a particular time, or a pipe might have a problem. So the traverser needs at least a date and a pressure_level as main inputs.

We have two other important relationships: CLOSE_PIPE, OPEN_PIPE, which represent two network elements where there’s a flow of water; and CONTAINS, which represents a network element that contains other elements. For example, there’s a CONTAINS relationship between a water tower and its tank.

Microservice M1

Old query:

MATCH (n:Network{ID: $id})

CALL apoc.path.subgraphAllCustom(n, {

minLevel:$skip,

maxLevel:$limit,

relationshipFilter: 'OPEN_PIPE',

labelFilter: 'Network',

pressureLevel:$pressureLevel,

date:$date

})

YIELD relationships, nodes

unwind relationships AS r

match (s)-[r]->(e)

CALL {

WITH s

CALL apoc.path.subgraphNodesCustom(s, {

minLevel: 0,

relationshipFilter: '<CONTAINS|>HAS_VERSION',

labelFilter: 'Network|Version',

pressureLevel:'H|M|L',

filterOpenValve: false,

date:$date

}) YIELD node

where node:Version

MATCH hierarchyPath=()-->(node)

WITH s, COLLECT(hierarchyPath) as listHierarchyPath

RETURN {ID:s.ID, hierarchyPaths:listHierarchyPath} as startHierarchy

}

CALL {

WITH e

CALL apoc.path.subgraphNodesCustom(e, {

minLevel: 0,

relationshipFilter: '<CONTAINS|>HAS_VERSION',

labelFilter: 'Network|Version',

pressureLevel:'H|M|L',

filterOpenValve: false,

date:$date

}) YIELD node

where node:Version

MATCH hierarchyPath=()-->(node)

WITH e, collect(hierarchyPath) as listHierarchyPath

RETURN {ID:e.ID, hierarchyPaths:listHierarchyPath} as endHierarchy

}

RETURN startHierarchy, endHierarchy

New query:

MATCH (n:Network{ID: $id})

CALL waterWalk.subgraphRelsWithHierarchyPaths(n, {

minLevel:$skip,

maxLevel:$limit,

relationshipFilter: 'OPEN_PIPE',

labelFilter: 'Network',

pressureLevel: $pressureLevel,

date:$date

})

YIELD relationship, startHierarchy, endHierarchy

RETURN

startNode(relationship).ID AS startNodeId, nodes(HEAD(startHierarchy))[1] AS startNode, startHierarchy,

endNode(relationship).ID AS endNodeId, nodes(HEAD(endHierarchy))[1] AS endNode, endHierarchy

+-----------+-----------+---------+--------+----------+----------+----------+-----+---------+---------+------------+-----------------+-------------+

| Label | # Samples | Average | Median | 90% Line | 95% Line | 99% Line | Min | Maximum | Error % | Throughput | Received KB/sec | Sent KB/sec |

+===========+===========+=========+========+==========+==========+==========+=====+=========+=========+============+=================+=============+

| Old Query | 40 | 83 | 54 | 153 | 206 | 256 | 48 | 256 | 0.00% | 38.8/sec | 9.18 | 0.00 |

| New Query | 40 | 55 | 52 | 55 | 61 | 129 | 47 | 129 | 0.00% | 48.3/sec | 17.44 | 0.00 |

+-----------+-----------+---------+--------+----------+----------+----------+-----+---------+---------+------------+-----------------+-------------+

Results: Average: -70.26%. Maximum: -36.8%

In the old query, there’s a hidden aggregation, which is tricky. It’s the apoc.custom that does a collect because it returns a list of nodes and relationships. Then there’s an unwind. This is not very efficient. In the new version, there’s no aggregation, which drastically reduces memory usage on the Neo4j side.

Microservice M2

Old query:

MATCH (h:HeadingVersion:Version)

WHERE h.BEGIN <= localdatetime($date) AND h.END > localdatetime($date)

AND h.SERVICE_LINE= $lineId

AND h.IS_ACTIVE = TRUE

WITH h

OPTIONAL MATCH (h)<--(t:Heading)<-[r:CONTAINS]-(n:Network)-[r2:HAS_VERSION]->(hn)

WHERE hn.BEGIN <= localdatetime($date) AND hn.END > localdatetime($date)

AND hn.HEADING_START_NODE = TRUE

WITH n, hn

CALL apoc.path.subgraphAllCustom(n,

{

minLevel:0,

relationshipFilter: 'OPEN_PIPE|HAS_VERSION',

labelFilter:'Network|Version|-Pump',

pressureLevel: hn.PRESSURE_LEVEL,

date:$date,

serviceLine:$lineId,

traverseStartingValve:true

}) YIELD relationships UNWIND [r in relationships | ()-[r:OPEN_PIPE]->()] as lp UNWIND lp as p RETURN distinct p

New query:

MATCH (hv:HeadingVersion:Version)<-[:HAS_HISTORY]-(t)<-[rf:CONTAINS]-(n)-[:HAS_VERSION]->(hn)

WHERE hv.BEGIN <= localdatetime($date) AND hv.END > localdatetime($date)

AND rf.BEGIN <= localdatetime($date) AND rf.END > localdatetime($date)

AND hn.BEGIN <= localdatetime($date) AND hn.END > localdatetime($date)

AND hv.SERVICE_LINE = $lineId

AND hv.IS_ACTIVE = TRUE

CALL waterWalk.subgraphRelsWithHierarchyPaths(n, {

relationshipFilter: 'OPEN_PIPE',

labelFilter: 'Network',

serviceLine: $lineId,

pressureLevel: hn.PRESSURE_LEVEL,

date: $date

})

YIELD relationship, startHierarchy, endHierarchy

WITH relationship, startNode(relationship) AS startNode, startHierarchy, endNode(relationship) AS endNode, endHierarchy, apoc.date.parse($date, 'ms', "yyyy-MM-dd'T'HH:mm:ss") AS dateMillis

OPTIONAL MATCH (endNode:Valve)-[r_rs:HAS_VERSION]->(valveVersion)

WHERE r_rs.from <= dateMillis < r_rs.to

RETURN startNode, startHierarchy, endNode, endHierarchy, valveVersion.STATUS AS STATUS, relationship.IS_OPEN AS OPEN_FLG

+-----------+-----------+---------+--------+----------+----------+----------+-----+---------+---------+------------+-----------------+-------------+

| Label | # Samples | Average | Median | 90% Line | 95% Line | 99% Line | Min | Maximum | Error % | Throughput | Received KB/sec | Sent KB/sec |

+===========+===========+=========+========+==========+==========+==========+=====+=========+=========+============+=================+=============+

| Old Query | 40 | 83 | 54 | 153 | 206 | 256 | 48 | 256 | 0.00% | 38.8/sec | 9.18 | 0.00 |

| New Query | 40 | 55 | 52 | 55 | 61 | 129 | 47 | 129 | 0.00% | 48.3/sec | 17.44 | 0.00 |

+-----------+-----------+---------+--------+----------+----------+----------+-----+---------+---------+------------+-----------------+-------------+Average: -33%. Maximum: -50%

Results: Average: -33%. Maximum: -50%

In the old query, there’s not only the APOC that does an aggregation but there’s also a path comprehension that’s absolutely avoidable.

Microservice M3

This microservice receives millions of requests every day. The test takes into account the 500 slowest calls with the old query.

Old query:

OPTIONAL MATCH (t:Network)-[:HAS_VERSION]->(node:Version)

WHERE (node.NAME IN $nameList OR node.ID IN $ids)

AND node.BEGIN<= localdatetime($date) AND node.END > localdatetime($date)

WITH collect(node) as n

CALL apoc.path.subgraphAllCustom(n, {

minLevel: 0,

relationshipFilter: 'CONTAINS>|HAS_VERSION',

labelFilter: $labelFilter,

pressureLevel:$pressureLevel,

filterOpenSwitch:false,

date:$date

}) yield nodes, relationships

UNWIND nodes as nd WITH nd WHERE nd:Version WITH nd

OPTIONAL MATCH (nd)-[r:HAS_VERSION]-(n1)

WITH nd, n1

OPTIONAL MATCH (n1)-[ro:OPEN_PIPE|CLOSE_PIPE]-(n2)

WHERE ro.BEGIN <= localdatetime($date) AND ro.END > localdatetime($date)

WITH nd, n1, ro, n2

OPTIONAL MATCH p=(n2)-[r2:HAS_VERSION]->(v2)

WHERE v2.BEGIN <= localdatetime($date) AND v2.END > localdatetime($date)

RETURN n1 as identityNode, nd, COLLECT(ro) as pipes, COLLECT(p) as connectedPipes skip $skip limit $limit

New query:

MATCH (n:Network)-[:HAS_VERSION]->(version:Version)

WHERE (version.NAME IN $nameList OR version.ID IN $ids)

AND version.BEGIN<= localdatetime($date) AND version.END > localdatetime($date)

RETURN n

CALL waterWalk.subgraphNodesHistory(n, {

relationshipFilter: 'CONTAINS>',

labelFilter: $labelFilter,

pressionLevel: $pressionLevel,

date: $date

})

YIELD node AS identityNode, version AS nodeVersion

OPTIONAL MATCH (identityNode)-[ro:OPEN_PIPE|CLOSE_PIPE]-(n2)

WHERE ro.BEGIN <= localdatetime($date) AND ro.END > localdatetime($date)

WITH identityNode, nodeVersion, ro, n2

OPTIONAL MATCH p=(n2)-[r2:HAS_VERSION]->(v2)

WHERE v2.BEGIN <= localdatetime($date) AND v2.END > localdatetime($date)

RETURN identityNode, nodeVersion, COLLECT(ro) AS pipes, COLLECT(p) AS connectedPipes SKIP $skip LIMIT $limit

+-----------+-----------+---------+--------+----------+----------+----------+-----+---------+---------+------------+-----------------+-------------+

| Label | # Samples | Average | Median | 90% Line | 95% Line | 99% Line | Min | Maximum | Error % | Throughput | Received KB/sec | Sent KB/sec |

+===========+===========+=========+========+==========+==========+==========+=====+=========+=========+============+=================+=============+

| Old Query | 500 | 4233 | 158 | 9932 | 10048 | 13007 | 50 | 13857 | 0.00% | 4.4/sec | 8.14 | 0.00 |

| New Query | 500 | 75 | 75 | 99 | 104 | 117 | 49 | 159 | 0.00% | 203.6/sec | 381.71 | 0.00 |

+-----------+-----------+---------+--------+----------+----------+----------+-----+---------+---------+------------+-----------------+-------------+

Results: Average: -98%. Maximum: -98%

The big improvement here is that with the new plugin, it’s much easier to spot bugs and monitor every step.

Conclusions

By monitoring the memory usage of each query and rewriting it using best practices, and by changing the traverser, we achieved 99.99 percent availability in production, demonstrating the resilience of Neo4j.

References

https://neo4j.com/docs/operations-manual/current/performance/gc-tuning/

https://neo4j.com/docs/operations-manual/current/performance/memory-configuration/

https://neo4j.com/developer/kb/understanding-memory-consumption/

https://neo4j.com/docs/cypher-manual/4.4/execution-plans/

https://neo4j.com/docs/operations-manual/current/monitoring/logging/

https://medium.com/neo4j/the-power-of-subqueries-in-neo4j-4-x-4f1888739bec

https://medium.com/neo4j/load-testing-neo4j-procedures-with-apache-jmeter-caff4c0d9d5a

Efficiently Monitor Neo4j and Identify Problematic Queries was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.