Create an Apache Spark external data source connection

This feature is experimental and not ready for use in production. It is only available as part of an Early Access Program and may undergo breaking changes until general availability.

-

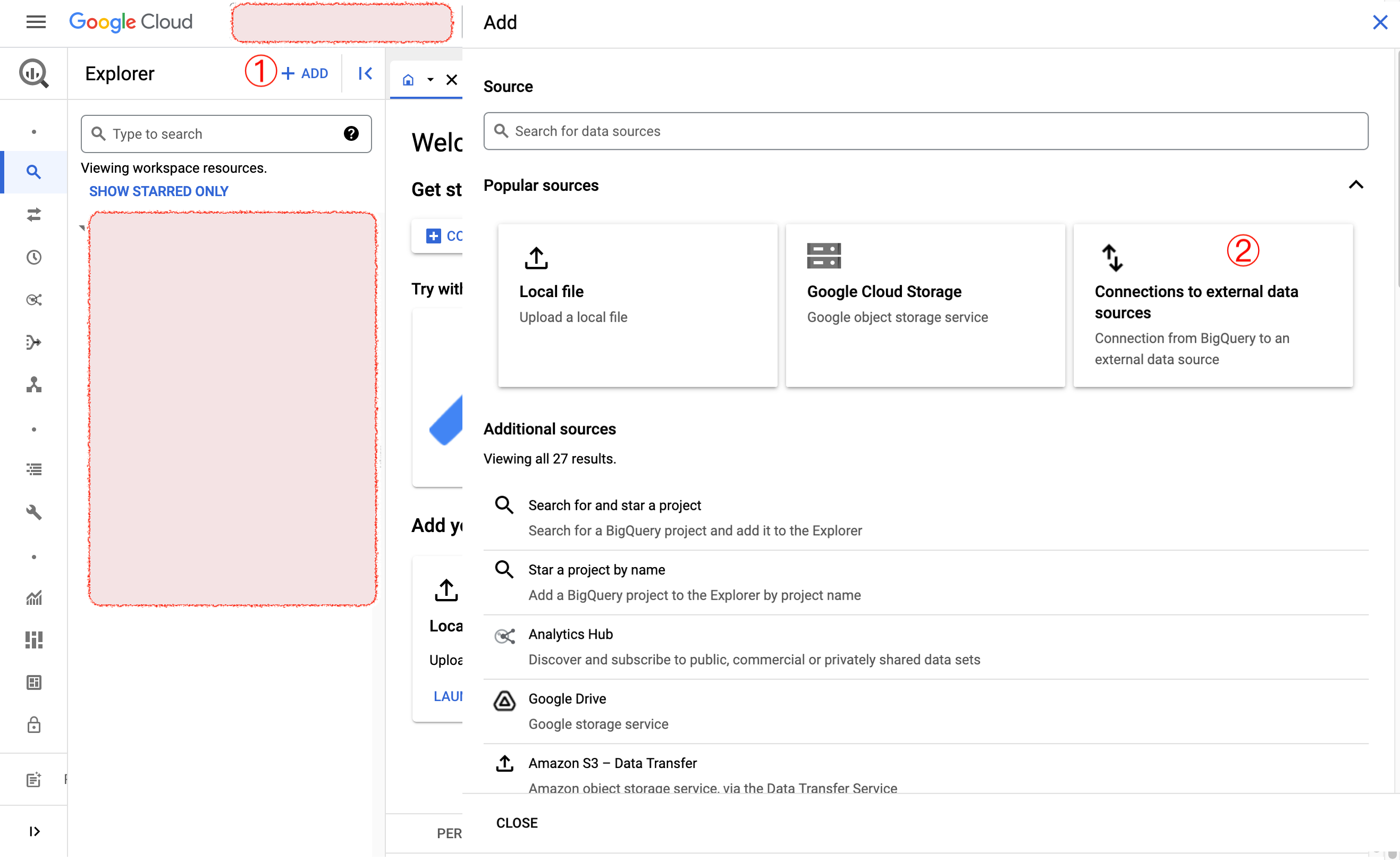

Navigate to BigQuery SQL Workspace Explorer and click

Add. Figure 1. Add new external data source

Figure 1. Add new external data source -

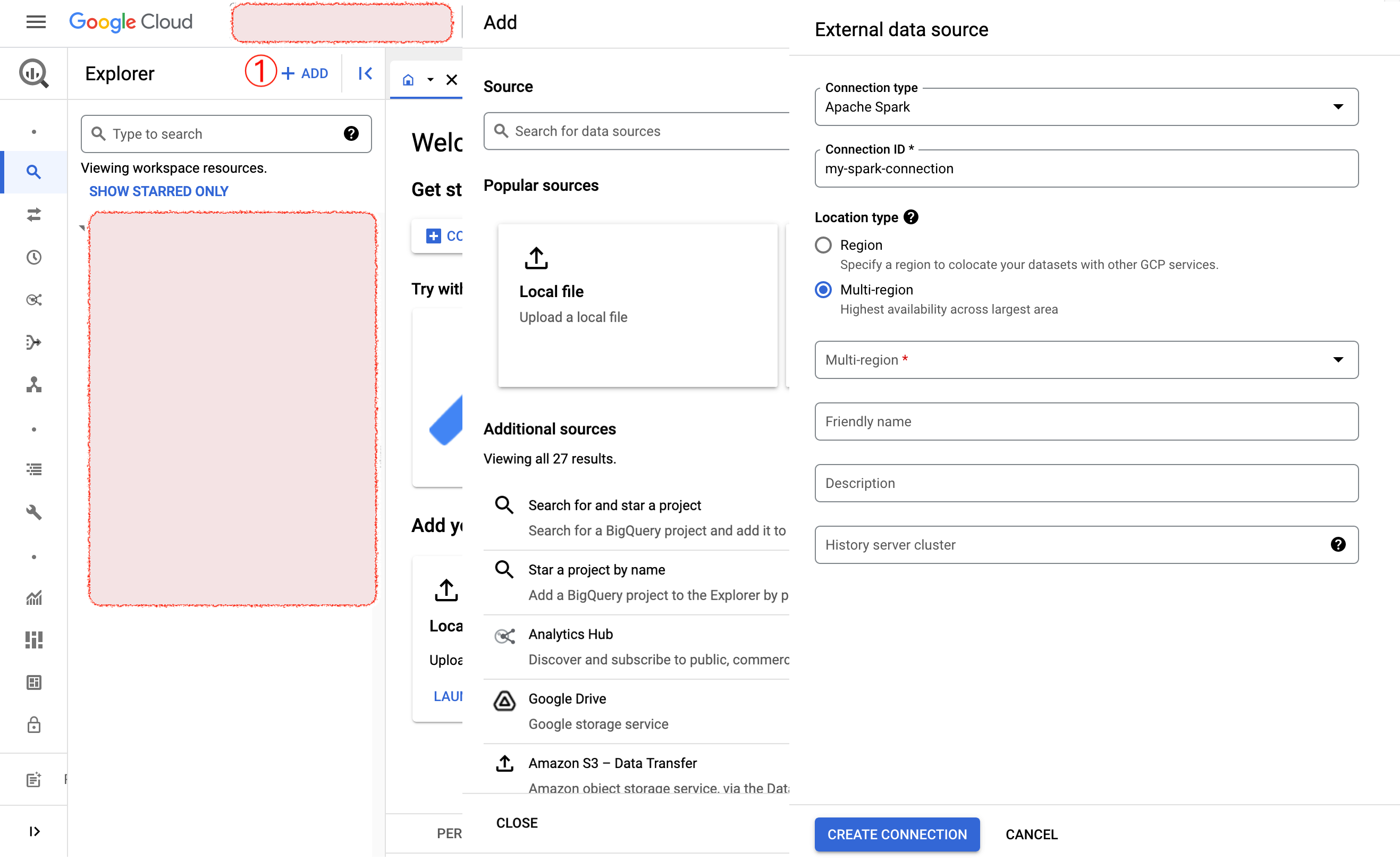

Enter details for your new data source connection and create the connection. Make sure to set the connection type to

Apache Sparkand the region close to your AuraDS or Neo4j GDS instance. Figure 2. Enter data source details

Figure 2. Enter data source details -

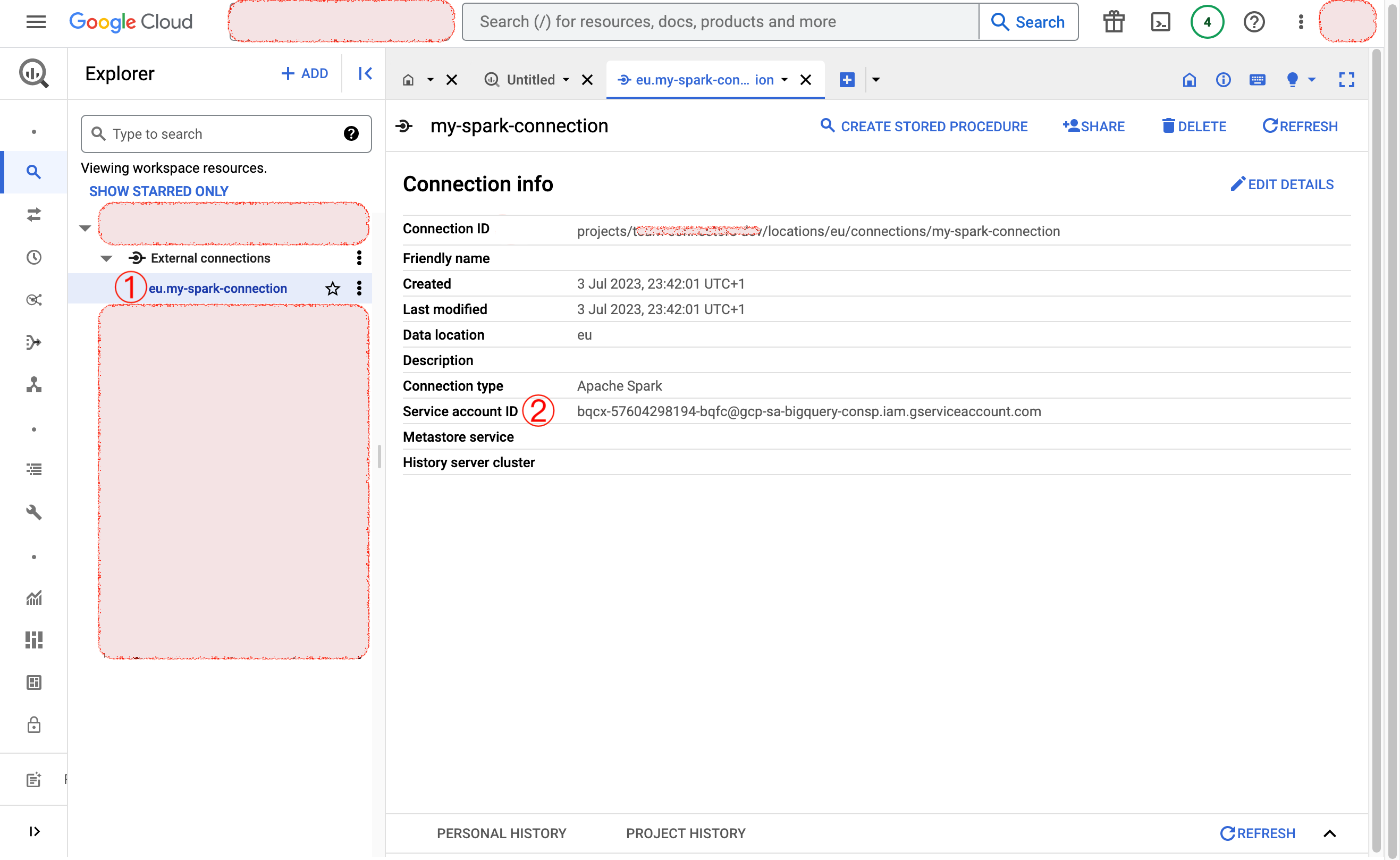

The new connection will be listed under

External Connectionsin BigQuery Explorer. Make note of both the "Connection Name" (1) and "Service Account ID" (2) information as we will use it later. Figure 3. View created data source

Figure 3. View created data source

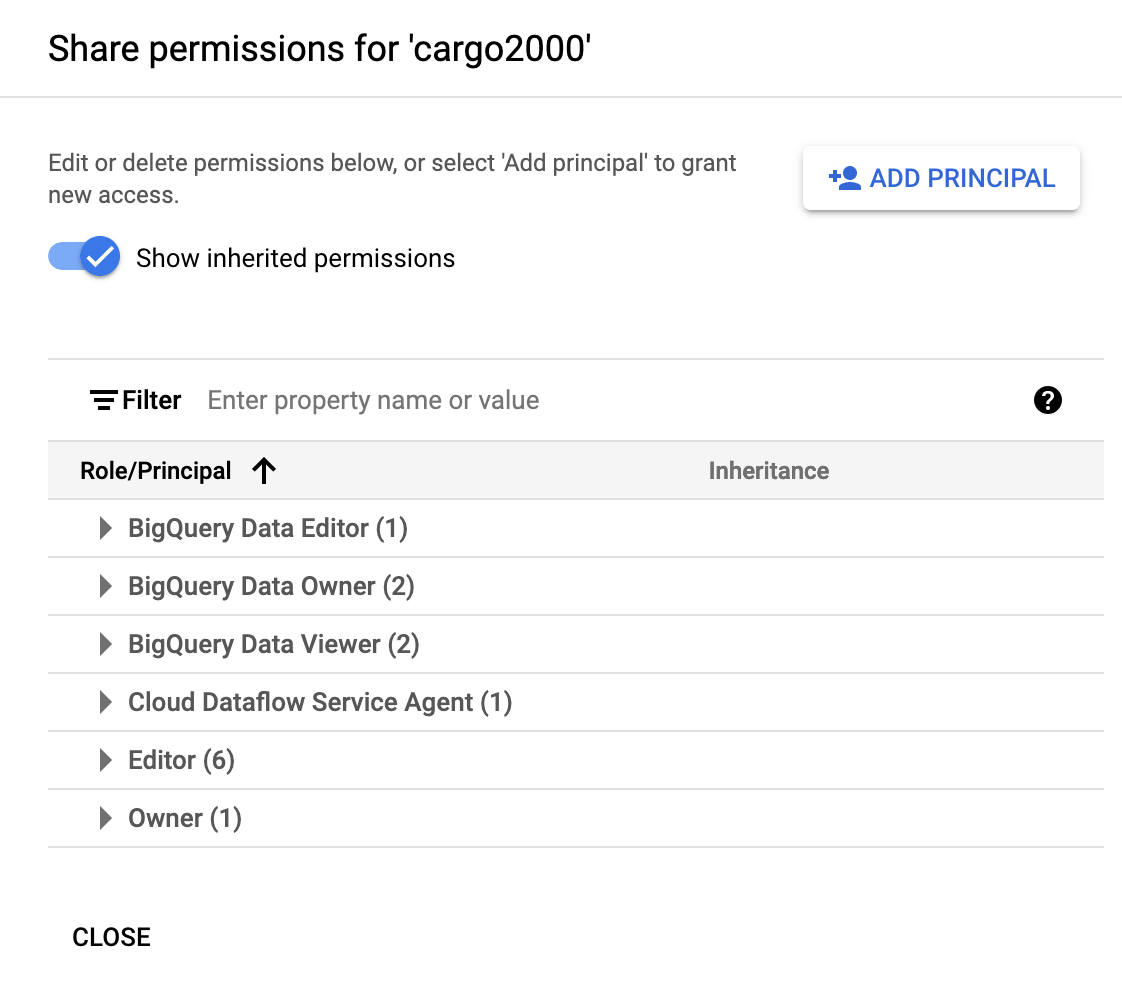
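If you prefer the command line to the console, the steps above roughly correspond to a `bq mk --connection` call. The following is a minimal sketch that only assembles and prints that command rather than running it. The project ID, location, and connection ID are placeholder values, and the sketch leaves out the `--properties` flag on the assumption that it is optional for a basic Spark connection; check the flags against the current `bq` documentation before use.

```python
import shlex


def build_create_connection_command(
    project_id: str, location: str, connection_id: str
) -> list[str]:
    """Assemble a `bq mk --connection` command for an Apache Spark connection.

    All three arguments are placeholders supplied by the caller.
    """
    return [
        "bq",
        "mk",
        "--connection",
        "--connection_type=SPARK",
        f"--project_id={project_id}",
        f"--location={location}",  # pick a region close to your AuraDS or GDS instance
        connection_id,
    ]


# Hypothetical values, for illustration only.
command = build_create_connection_command(
    "my-project", "europe-west1", "neo4j-spark-connection"
)
print(shlex.join(command))
```

The printed line can be reviewed and then pasted into a shell where the Google Cloud CLI is installed and authenticated.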

Grant roles to Service Account ID

Next, grant the Service Account ID the permissions it needs to access the external data source created above.

Select Add principal, then add the following roles for the Service Account ID:

- Artifact Registry Reader
- BigQuery Data Viewer (if read-only) or BigQuery Data Editor (if writing back to BigQuery)
- BigQuery Read Session User
- Secret Manager Secret Accessor
- Storage Object Viewer

Additionally, grant the BigQuery Data Viewer or BigQuery Data Editor role on your BigQuery dataset to the same service account through the Dataset Permissions page.
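The same grants can also be scripted. The sketch below is one possible approach, not the procedure this page prescribes: it maps the role names listed above to their IAM role IDs, builds one `gcloud projects add-iam-policy-binding` command per role, and builds a BigQuery `GRANT` statement for the dataset-level role. It only prints the commands. The project ID, dataset name, and service account address are placeholders, and the helper names are made up for this example.

```python
import shlex

# IAM role IDs for the roles that apply regardless of read or write access.
BASE_ROLES = [
    "roles/artifactregistry.reader",       # Artifact Registry Reader
    "roles/bigquery.readSessionUser",      # BigQuery Read Session User
    "roles/secretmanager.secretAccessor",  # Secret Manager Secret Accessor
    "roles/storage.objectViewer",          # Storage Object Viewer
]


def data_role(write_back: bool) -> str:
    """Data Editor when writing back to BigQuery, Data Viewer when read-only."""
    return "roles/bigquery.dataEditor" if write_back else "roles/bigquery.dataViewer"


def build_grant_commands(
    project_id: str, service_account_id: str, write_back: bool = False
) -> list[list[str]]:
    """Build one project-level gcloud command per required role."""
    member = f"serviceAccount:{service_account_id}"
    roles = BASE_ROLES + [data_role(write_back)]
    return [
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member={member}", f"--role={role}"]
        for role in roles
    ]


def build_dataset_grant(
    project_id: str, dataset: str, service_account_id: str, write_back: bool = False
) -> str:
    """Build a BigQuery GRANT statement for the dataset-level role."""
    return (
        f"GRANT `{data_role(write_back)}` ON SCHEMA `{project_id}`.`{dataset}` "
        f'TO "serviceAccount:{service_account_id}";'
    )


# Placeholder values; use the Service Account ID noted from the connection details.
SERVICE_ACCOUNT = "bqcx-000000000000-xxxx@gcp-sa-bigquery-condel.iam.gserviceaccount.com"

for cmd in build_grant_commands("my-project", SERVICE_ACCOUNT):
    print(shlex.join(cmd))
print(build_dataset_grant("my-project", "my_dataset", SERVICE_ACCOUNT))
```

Pass `write_back=True` to both helpers if your jobs write results back to BigQuery, so that BigQuery Data Editor is granted instead of BigQuery Data Viewer.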