apoc.export.cypher.query

This procedure is not considered safe to run from multiple threads. It is therefore not supported by the parallel runtime. For more information, see the Cypher Manual → Parallel runtime.

Syntax |

|

||

Description |

Exports the |

||

Input arguments |

Name |

Type |

Description |

|

|

The query used to collect the data for export. |

|

|

|

The name of the file to which the data will be exported. The default is: ``. |

|

|

|

|

|

Return arguments

| Name | Type | Description |
|---|---|---|
| file | | The name of the file to which the data was exported. |
| batches | | The number of batches the export was run in. |
| source | | A summary of the exported data. |
| format | | The format the file is exported in. |
| nodes | | The number of exported nodes. |
| relationships | | The number of exported relationships. |
| properties | | The number of exported properties. |
| time | | The duration of the export. |
| rows | | The number of rows returned. |
| batchSize | | The size of the batches the export was run in. |
| cypherStatements | | The executed Cypher Statements. |
| nodeStatements | | The executed node statements. |
| relationshipStatements | | The executed relationship statements. |
| schemaStatements | | The executed schema statements. |
| cleanupStatements | | The executed cleanup statements. |

Config parameters

The procedure supports the following config parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| | BOOLEAN | false | if true export properties too. |
| | BOOLEAN | false | stream the json directly to the client into the |
| | | cypher-shell | Export format. The following values are supported: |
| | | create | Cypher update operation type. The following values are supported: |
| useOptimizations | MAP | | Optimizations to use for Cypher statement generation. |
| | | 300 | Timeout to use for |
| | | false | If true adds the keyword |

Exporting to a file

By default exporting to the file system is disabled.

We can enable it by setting the following property in apoc.conf:

apoc.export.file.enabled=true

For more information about accessing apoc.conf, see the chapter on Configuration Options.

If we try to use any of the export procedures without having first set this property, we’ll get the following error message:

Failed to invoke procedure: Caused by: java.lang.RuntimeException: Export to files not enabled, please set apoc.export.file.enabled=true in your apoc.conf.

Otherwise, if you are running in a cloud environment without filesystem access, use the |

Export files are written to the import directory, which is defined by the server.directories.import property.

This means that any file path that we provide is relative to this directory.

If we try to write to an absolute path, such as /tmp/fileName, the path is still resolved against the import directory, and we’ll get an error message similar to the following one:

Failed to invoke procedure: Caused by: java.io.FileNotFoundException: /path/to/neo4j/import/tmp/fileName (No such file or directory)

We can enable writing to anywhere on the file system by setting the following property in apoc.conf:

apoc.import.file.use_neo4j_config=false

Neo4j will now be able to write anywhere on the file system, so be sure that this is your intention before setting this property.

Exporting a stream

If we don’t want to export to a file, we can stream the results back in the cypherStatements column instead by passing a file name of null and providing the stream: true config.

Usage Examples

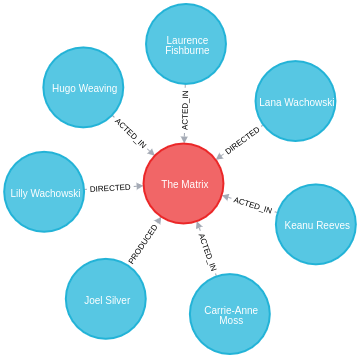

The examples in this section are based on the following sample graph:

CREATE (TheMatrix:Movie {title:'The Matrix', released:1999, tagline:'Welcome to the Real World'})

CREATE (Keanu:Person {name:'Keanu Reeves', born:1964})

CREATE (Carrie:Person {name:'Carrie-Anne Moss', born:1967})

CREATE (Laurence:Person {name:'Laurence Fishburne', born:1961})

CREATE (Hugo:Person {name:'Hugo Weaving', born:1960})

CREATE (LillyW:Person {name:'Lilly Wachowski', born:1967})

CREATE (LanaW:Person {name:'Lana Wachowski', born:1965})

CREATE (JoelS:Person {name:'Joel Silver', born:1952})

CREATE

(Keanu)-[:ACTED_IN {roles:['Neo']}]->(TheMatrix),

(Carrie)-[:ACTED_IN {roles:['Trinity']}]->(TheMatrix),

(Laurence)-[:ACTED_IN {roles:['Morpheus']}]->(TheMatrix),

(Hugo)-[:ACTED_IN {roles:['Agent Smith']}]->(TheMatrix),

(LillyW)-[:DIRECTED]->(TheMatrix),

(LanaW)-[:DIRECTED]->(TheMatrix),

(JoelS)-[:PRODUCED]->(TheMatrix);The Neo4j Browser visualization below shows the imported graph:

Exporting a stream

The following query is an example of using apoc.export.cypher.query to export a stream:

CALL apoc.export.cypher.query(

"MATCH ()-[r]->()

RETURN *",

null,

{ format: "cypher-shell", stream: true })

| file | batches | source | format | nodes | relationships | properties | time | rows | batchSize | cypherStatements |
|---|---|---|---|---|---|---|---|---|---|---|
| null | 1 | "statement: nodes(8), rels(7)" | "cypher" | 8 | 7 | 21 | 1 | 15 | 2000 | ":begin CREATE CONSTRAINT UNIQUE_IMPORT_NAME FOR (node:`UNIQUE IMPORT LABEL`) REQUIRE (node. |

Exporting to a file

The export to Cypher procedures all support writing to multiple files or multiple columns.

We can enable this mode by passing in the config separateFiles: true.

The following query exports all the ACTED_IN relationships and corresponding nodes into files with an actedIn prefix:

CALL apoc.export.cypher.query(

"MATCH ()-[r:ACTED_IN]->()

RETURN *",

"actedIn.cypher",

{ format: "cypher-shell", separateFiles: true })

YIELD file, batches, source, format, nodes, relationships, time, rows, batchSize

RETURN file, batches, source, format, nodes, relationships, time, rows, batchSize;

| file | batches | source | format | nodes | relationships | time | rows | batchSize |
|---|---|---|---|---|---|---|---|---|
| "actedIn.cypher" | 1 | "statement: nodes(10), rels(8)" | "cypher" | 10 | 8 | 3 | 18 | 20000 |

This will result in the following files being created:

| Name | Size in bytes | Number of lines |
|---|---|---|
| actedIn.cleanup.cypher | 234 | 6 |
| actedIn.nodes.cypher | 893 | 6 |
| actedIn.relationships.cypher | 757 | 6 |
| actedIn.schema.cypher | 109 | 3 |

Each of those files contains one particular part of the graph. Let’s have a look at their content:

actedIn.cleanup.cypher:

:begin

MATCH (n:`UNIQUE IMPORT LABEL`) WITH n LIMIT 20000 REMOVE n:`UNIQUE IMPORT LABEL` REMOVE n.`UNIQUE IMPORT ID`;

:commit

:begin

DROP CONSTRAINT uniqueConstraint;

:commit

actedIn.nodes.cypher:

:begin

UNWIND [{_id:28, properties:{tagline:"Welcome to the Real World", title:"The Matrix", released:1999}}, {_id:37, properties:{tagline:"Welcome to the Real World", title:"The Matrix", released:1999}}] AS row

CREATE (n:`UNIQUE IMPORT LABEL`{`UNIQUE IMPORT ID`: row._id}) SET n += row.properties SET n:Movie;

UNWIND [{_id:31, properties:{born:1961, name:"Laurence Fishburne"}}, {_id:30, properties:{born:1967, name:"Carrie-Anne Moss"}}, {_id:42, properties:{born:1964, name:"Keanu Reeves"}}, {_id:0, properties:{born:1960, name:"Hugo Weaving"}}, {_id:29, properties:{born:1964, name:"Keanu Reeves"}}, {_id:38, properties:{born:1960, name:"Hugo Weaving"}}, {_id:43, properties:{born:1967, name:"Carrie-Anne Moss"}}, {_id:57, properties:{born:1961, name:"Laurence Fishburne"}}] AS row

CREATE (n:`UNIQUE IMPORT LABEL`{`UNIQUE IMPORT ID`: row._id}) SET n += row.properties SET n:Person;

:commit

actedIn.relationships.cypher:

:begin

UNWIND [{start: {_id:31}, end: {_id:28}, properties:{roles:["Morpheus"]}}, {start: {_id:42}, end: {_id:37}, properties:{roles:["Neo"]}}, {start: {_id:38}, end: {_id:37}, properties:{roles:["Agent Smith"]}}, {start: {_id:0}, end: {_id:28}, properties:{roles:["Agent Smith"]}}, {start: {_id:29}, end: {_id:28}, properties:{roles:["Neo"]}}, {start: {_id:43}, end: {_id:37}, properties:{roles:["Trinity"]}}, {start: {_id:30}, end: {_id:28}, properties:{roles:["Trinity"]}}, {start: {_id:57}, end: {_id:37}, properties:{roles:["Morpheus"]}}] AS row

MATCH (start:`UNIQUE IMPORT LABEL`{`UNIQUE IMPORT ID`: row.start._id})

MATCH (end:`UNIQUE IMPORT LABEL`{`UNIQUE IMPORT ID`: row.end._id})

CREATE (start)-[r:ACTED_IN]->(end) SET r += row.properties;

:commit

actedIn.schema.cypher:

:begin

CREATE CONSTRAINT uniqueConstraint FOR (node:`UNIQUE IMPORT LABEL`) REQUIRE (node.`UNIQUE IMPORT ID`) IS UNIQUE;

:commit

We can then apply these files to our destination Neo4j instance, either by streaming their contents into Cypher Shell or by using the procedures described in Running Cypher fragments.

We can also use the separateFiles config when returning a stream of export statements.

The results will appear in columns named nodeStatements, relationshipStatements, cleanupStatements, and schemaStatements rather than cypherStatements.

The following query returns a stream of all the ACTED_IN relationships and corresponding nodes:

CALL apoc.export.cypher.query(

"MATCH ()-[r:ACTED_IN]->()

RETURN *",

null,

{ format: "cypher-shell", separateFiles: true })

YIELD nodes, relationships, properties, nodeStatements, relationshipStatements, cleanupStatements, schemaStatements

RETURN nodes, relationships, properties, nodeStatements, relationshipStatements, cleanupStatements, schemaStatements;

| nodes | relationships | properties | nodeStatements | relationshipStatements | cleanupStatements | schemaStatements |
|---|---|---|---|---|---|---|
| 10 | 8 | 30 | ":begin UNWIND [{_id:28, properties:{tagline:\"Welcome to the Real World\", title:\"The Matrix\", released:1999}}, {_id:37, properties:{tagline:\"Welcome to the Real World\", title:\"The Matrix\", released:1999}}] AS row CREATE (n:`UNIQUE IMPORT LABEL`{ | ":begin UNWIND [{start: {_id:31}, end: {_id:28}, properties:{roles:[\"Morpheus\"]}}, {start: {_id:38}, end: {_id:37}, properties:{roles:[\"Agent Smith\"]}}, {start: {_id:0}, end: {_id:28}, properties:{roles:[\"Agent Smith\"]}}, {start: {_id:30}, end: {_id:28}, properties:{roles:[\"Trinity\"]}}, {start: {_id:29}, end: {_id:28}, properties:{roles:[\"Neo\"]}}, {start: {_id:43}, end: {_id:37}, properties:{roles:[\"Trinity\"]}}, {start: {_id:42}, end: {_id:37}, properties:{roles:[\"Neo\"]}}, {start: {_id:57}, end: {_id:37}, properties:{roles:[\"Morpheus\"]}}] AS row MATCH (start:`UNIQUE IMPORT LABEL`{ | ":begin MATCH (n:`UNIQUE IMPORT LABEL`) WITH n LIMIT 20000 REMOVE n:`UNIQUE IMPORT LABEL` REMOVE n. | ":begin CREATE CONSTRAINT uniqueConstraint FOR (node:`UNIQUE IMPORT LABEL`) REQUIRE (node. |

We can then copy/paste the content of each of these columns (excluding the double quotes) into a Cypher Shell session, or into a local file that we stream into a Cypher Shell session.

If we want to export Cypher statements that can be pasted into the Neo4j Browser query editor, we need to use the config format: "plain".
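As a minimal sketch of that option, the query below reuses the ACTED_IN query from the earlier examples and changes only the format value. It assumes the sample graph above and streams the result instead of writing a file. The statements returned in cypherStatements should then omit the :begin and :commit lines, which are Cypher Shell commands.

```cypher
// Sketch: stream the ACTED_IN subgraph as plain Cypher statements.
// A null file name combined with stream: true returns the statements
// in the cypherStatements column instead of writing them to a file.
CALL apoc.export.cypher.query(
  "MATCH ()-[r:ACTED_IN]->() RETURN *",
  null,
  { format: "plain", stream: true })
YIELD nodes, relationships, properties, cypherStatements
RETURN nodes, relationships, properties, cypherStatements;
```

To write the same output to a file instead, replace null with a file name and drop stream: true. Exporting to a file requires apoc.export.file.enabled=true, as described in the section on exporting to a file.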