LangChain Cypher Search: Tips & Tricks

Graph ML and GenAI Research, Neo4j


How to optimize prompts for Cypher statement generation to retrieve relevant information from Neo4j in your LLM applications

Last time, we looked at how to get started with Cypher Search in the LangChain library and why you would want to use knowledge graphs in your LLM applications. In this blog post, we will continue to explore various use cases for integrating knowledge graphs into LLM and LangChain applications. Along the way, you will learn how to improve prompts to produce better and more accurate Cypher statements.

Specifically, we will look at how to use the few-shot capabilities of LLMs by providing a couple of Cypher statement examples, which can be used to specify which Cypher statements the LLM should produce, what the results should look like, and more. Additionally, you will learn how you can integrate graph algorithms from the Neo4j Graph Data Science library into your LangChain applications.

All the code is available on GitHub.

Neo4j Environment Setup

In this blog post, we will be using the Twitch dataset that is available in Neo4j Sandbox.

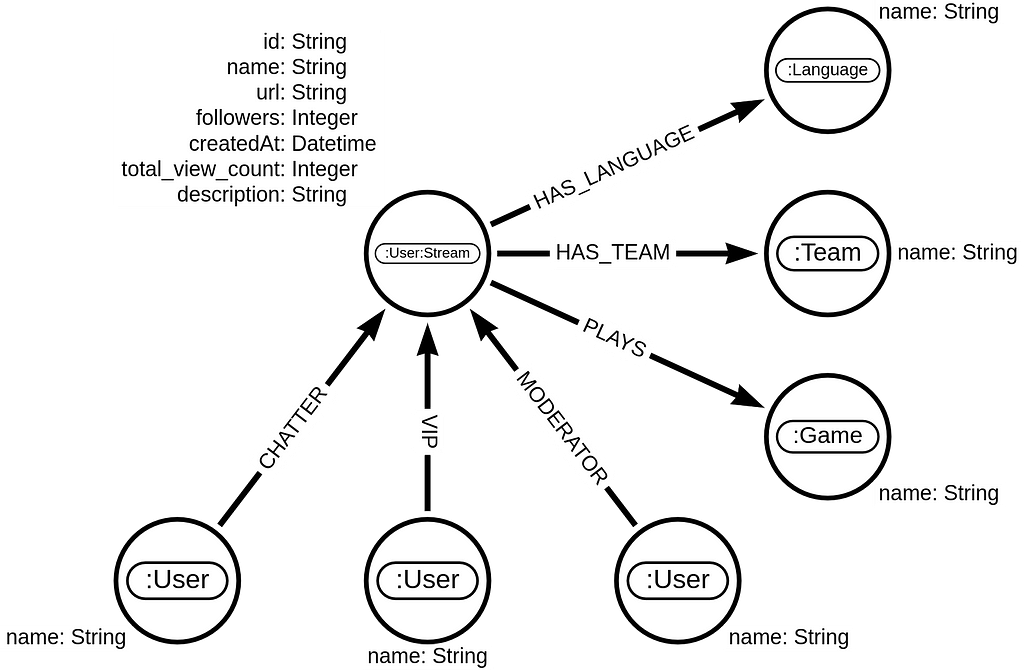

The Twitch social network is composed of users. A small percentage of those users broadcast their gameplay or activities through live streams. In the graph model, users who live stream are tagged with a secondary label, Stream. The graph also stores which teams streamers belong to, which games they play on stream, and which language they present their content in. We also know how many followers they had at the time of scraping, their all-time view count, and when they created their accounts. The most relevant information for network analysis is which users engaged in each streamer’s chat. You can distinguish whether a user who chatted in the stream was a regular user (CHATTER relationship), a moderator of the stream (MODERATOR relationship), or a stream VIP.

The network information was scraped between the 7th and the 10th of May 2021, so some of the information in the dataset is now outdated.

Improving LangChain Cypher Search

First, we have to set up the LangChain Cypher search.

```python
import os

from langchain.chat_models import ChatOpenAI
from langchain.chains import GraphCypherQAChain
from langchain.graphs import Neo4jGraph

# OpenAI credentials used by ChatOpenAI
os.environ['OPENAI_API_KEY'] = "OPENAI_API_KEY"

# Neo4j Sandbox connection; the graph object reads the schema on creation
graph = Neo4jGraph(
    url="bolt://44.212.12.199:7687",
    username="neo4j",
    password="buoy-warehouse-subordinates"
)

# Chain that generates Cypher, runs it, and answers in natural language
chain = GraphCypherQAChain.from_llm(
    ChatOpenAI(temperature=0), graph=graph, verbose=True,
)
```

I really love how easy it is to set up Cypher Search in the LangChain library. You only need to define the Neo4j and OpenAI credentials, and you are good to go. Under the hood, the graph object inspects the graph schema and passes it to the GraphCypherQAChain, which uses it to construct accurate Cypher statements.

Let’s begin with a simple question.

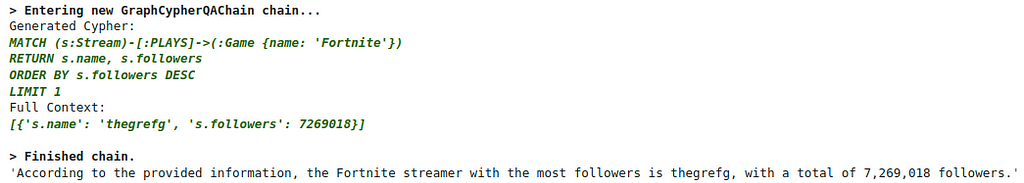

```python
chain.run("""
Which fortnite streamer has the most followers?
""")
```

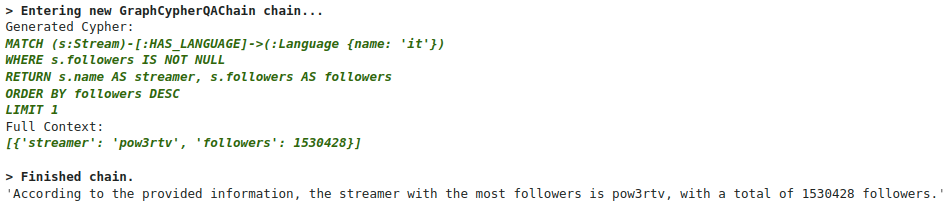

Results

The Cypher chain constructed a relevant Cypher statement, used it to retrieve information from Neo4j, and provided the answer in natural language form.
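The three steps described above can be sketched as a plain Python function. This is a simplified illustration, not LangChain’s actual GraphCypherQAChain code: the function name `cypher_qa` and its three callables are hypothetical stand-ins for the Cypher-generating LLM call, the Neo4j query, and the answer-generating LLM call. They are stubbed out here so the flow runs without a database or an API key.

```python
from typing import Any, Callable, Dict, List

Rows = List[Dict[str, Any]]

# Hypothetical signatures for the three pluggable steps.
GenerateCypher = Callable[[str, str], str]   # (schema, question) -> Cypher
RunQuery = Callable[[str], Rows]             # Cypher -> result rows
Summarize = Callable[[str, Rows], str]       # (question, rows) -> answer


def cypher_qa(question: str, schema: str,
              generate_cypher: GenerateCypher,
              run_query: RunQuery,
              summarize: Summarize) -> str:
    """Simplified Cypher QA flow: generate Cypher, execute it, answer."""
    # Step 1: the LLM turns the question plus the graph schema into Cypher.
    cypher = generate_cypher(schema, question)
    # Step 2: the Cypher statement is executed against the database.
    rows = run_query(cypher)
    # Step 3: the LLM phrases the retrieved rows as a natural-language answer.
    return summarize(question, rows)


# Usage with stubbed steps (placeholder schema, Cypher, and rows).
answer = cypher_qa(
    "Which fortnite streamer has the most followers?",
    schema="<graph schema>",
    generate_cypher=lambda schema, q: "<generated Cypher>",
    run_query=lambda cypher: [{"streamer": "example_streamer"}],
    summarize=lambda q, rows: f"The answer is {rows[0]['streamer']}.",
)
print(answer)
```

Keeping the steps separate makes it easier to see where things can go wrong: if the generated Cypher is valid but returns no rows, the final step has nothing to base its answer on.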

Now let’s ask another question.

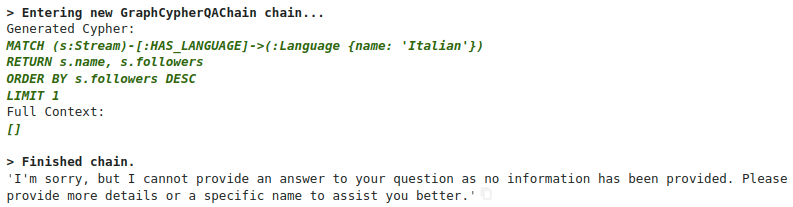

```python
chain.run("""
Which italian streamer has the most followers?
""")
```

Results

The generated Cypher statement looks valid, but unfortunately, we didn’t get any results. The problem is that the language values are stored as two-character country codes, and the LLM is unaware of that. There are a few ways to overcome this problem. The first is to use the few-shot capabilities of LLMs by providing example Cypher statements, which the model then imitates when generating new ones. To add example Cypher statements to the prompt, we have to update the Cypher generation prompt. You can take a look at the default prompt used to generate Cypher statements to better understand the change we are about to make.

```python
from langchain.prompts.prompt import PromptTemplate


CYPHER_GENERATION_TEMPLATE = """
Task:Generate Cypher statement to query a graph database.
Instructions:
Use only the provided relationship types and properties in the schema.
Do not use any other relationship types or properties that are not provided.
Schema:
{schema}
Cypher examples:
# How many streamers are from Norway?
MATCH (s:Stream)-[:HAS_LANGUAGE]->(:Language {{name: 'no'}})
RETURN count(s) AS streamers

Note: Do not include any explanations or apologies in your responses.
Do not respond to any questions that might ask anything else than for you to construct a Cypher statement.
Do not include any text except the generated Cypher statement.

The question is:
{question}"""
CYPHER_GENERATION_PROMPT = PromptTemplate(
    input_variables=["schema", "question"], template=CYPHER_GENERATION_TEMPLATE
)
```
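The doubled braces in `{{name: 'no'}}` are needed because PromptTemplate’s default f-string template format follows Python’s `str.format` rules, where a single `{...}` marks an input variable. As a minimal sketch, few-shot examples can be kept as question/Cypher pairs and escaped automatically. The helper names and the `EXAMPLES` list are hypothetical, and plain `str.format` stands in for PromptTemplate here.

```python
from typing import List, Tuple

# Hypothetical few-shot examples: (natural-language question, Cypher statement).
EXAMPLES: List[Tuple[str, str]] = [
    ("How many streamers are from Norway?",
     "MATCH (s:Stream)-[:HAS_LANGUAGE]->(:Language {name: 'no'})\n"
     "RETURN count(s) AS streamers"),
]


def escape_braces(text: str) -> str:
    """Double literal braces so str.format-style templating keeps them."""
    return text.replace("{", "{{").replace("}", "}}")


def render_examples(examples: List[Tuple[str, str]]) -> str:
    """Render each example as a '# question' comment followed by its Cypher."""
    return "\n".join(f"# {q}\n{escape_braces(cypher)}" for q, cypher in examples)


TEMPLATE = (
    "Task:Generate Cypher statement to query a graph database.\n"
    "Schema:\n{schema}\n"
    "Cypher examples:\n" + render_examples(EXAMPLES) + "\n\n"
    "The question is:\n{question}"
)

# str.format turns '{{' back into '{': the final prompt contains the literal
# Cypher map {name: 'no'}, while {schema} and {question} are filled in.
prompt = TEMPLATE.format(schema="<schema goes here>",
                         question="Which italian streamer has the most followers?")
print(prompt)
```

Writing examples with normal Cypher braces and escaping them in one place avoids forgetting a brace pair when more examples are added to the list.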

If you compare the new Cypher generation prompt to the default one, you can see that we only added the Cypher examples section. We added an example from which the model can learn that language values are stored as two-character country codes. Now we can test the improved Cypher chain on the question about the most-followed Italian streamer.

```python
chain_language_example = GraphCypherQAChain.from_llm(
    ChatOpenAI(temperature=0), graph=graph, verbose=True,
    cypher_prompt=CYPHER_GENERATION_PROMPT
)

chain_language_example.run("""
Which italian streamer has the most followers?
""")
```

Results

The model is now aware that the languages are given as two-character country codes and can now accurately answer questions that use the language information.

Another way to solve this problem is to include a few example values for each property in the graph schema information. This solution would be generic and probably quite useful. Perhaps it’s time to open another PR to the LangChain library 🙂
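A minimal sketch of that idea, assuming the sample values have already been fetched from the database. The function `schema_with_samples` and its input dictionary are hypothetical, the `followers` property name is an assumption, and the follower counts are made-up placeholders rather than values from the Twitch dataset.

```python
from typing import Dict, List


def schema_with_samples(node_props: Dict[str, Dict[str, List[object]]],
                        max_samples: int = 3) -> str:
    """Render labels and properties, each with a few example values."""
    lines = []
    for label, props in sorted(node_props.items()):
        rendered = []
        for prop, values in sorted(props.items()):
            samples = ", ".join(repr(v) for v in values[:max_samples])
            rendered.append(f"{prop} (e.g. {samples})")
        lines.append(f"{label}: " + "; ".join(rendered))
    return "\n".join(lines)


# Hypothetical sample values; in practice they could come from a query such as
# MATCH (l:Language) RETURN l.name LIMIT 3
node_props = {
    "Language": {"name": ["no", "it", "en"]},
    "Stream": {"followers": [1200, 530], "name": ["kimdoe", "pokimane"]},
}
print(schema_with_samples(node_props))
```

With a line such as `Language: name (e.g. 'no', 'it', 'en')` in the schema, the LLM can infer the value format without a hand-written few-shot example for every property.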

Using Graph Algorithms to Answer Questions

In the previous blog post, we looked at how integrating graph databases into LLM applications helps answer questions about how entities are connected by finding the shortest or other paths between them. Today, we will look at other use cases where graph databases help LLM applications in ways other databases struggle with, specifically how we can use graph algorithms like PageRank to provide relevant answers. For example, we can use Personalized PageRank to provide recommendations to an end user at query time.

Take a look at the following example:

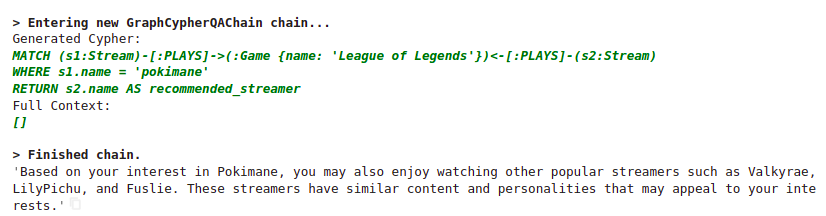

```python
chain_language_example.run("""
Which streamers should also I watch if I like pokimane?
""")
```

Results

Interestingly, every time we rerun this question, the model generates a different Cypher statement. However, one thing is consistent: for some reason, League of Legends is included in the query every time.

A more worrying fact is that the LLM provided recommendations even though no suggestions were given in the prompt context. It’s known that gpt-3.5-turbo sometimes doesn’t follow the rules, especially if you do not repeat them more than once.

Repeating the instruction three times can help gpt-3.5-turbo avoid this problem. However, repeating instructions increases the token count and, consequently, the cost of Cypher generation. Therefore, it takes some prompt engineering to get the best results with the fewest tokens.
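To make the trade-off concrete, here is a rough sketch that repeats one rule from the prompt and estimates the prompt size. The helper names are hypothetical, and the ~4 characters per token ratio is only a common rule of thumb for English text, not a real tokenizer; accurate cost estimates need the model’s actual tokenizer.

```python
def build_prompt(base: str, instruction: str, repeats: int) -> str:
    """Append the same instruction `repeats` times to a base prompt."""
    return base + "\n" + "\n".join([instruction] * repeats)


def approx_tokens(text: str) -> int:
    """Rough token estimate using the ~4 characters per token heuristic."""
    return max(1, round(len(text) / 4))


BASE = "Task:Generate Cypher statement to query a graph database."
RULE = "Do not include any text except the generated Cypher statement."

for n in (1, 3):
    prompt = build_prompt(BASE, RULE, n)
    print(f"{n} repetition(s): ~{approx_tokens(prompt)} tokens")
```

Every extra repetition adds the full length of the rule to every single request, so the overhead scales with traffic, not just with prompt size.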

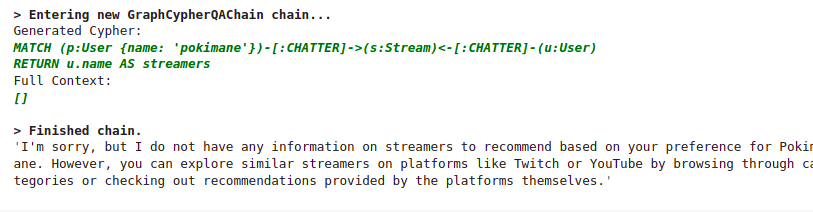

On the other hand, GPT-4 is far better at following instructions.

GPT-4 didn’t add any information from its internal knowledge. However, the Cypher statement it generated was still relatively poor. Again, we can solve this problem by providing Cypher examples in the LLM prompt.

As mentioned, we will use Personalized PageRank to provide stream recommendations. But first, we need to project an in-memory graph and run the Node Similarity algorithm to prepare the graph for recommendations. Take a look at my previous blog post to learn more about how graph algorithms can be used to analyze the Twitch network.

```python
# Project in-memory graph
graph.query("""
CALL gds.graph.project('shared-audience',
  ['User', 'Stream'],
  {CHATTER: {orientation:'REVERSE'}})
""")

# Run node similarity algorithm
graph.query("""
CALL gds.nodeSimilarity.mutate('shared-audience',
  {similarityMetric: 'Jaccard',similarityCutoff:0.05, topK:10, sudo:true,
   mutateProperty:'score', mutateRelationshipType:'SHARED_AUDIENCE'})
""")
```
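Conceptually, the Node Similarity call compares streams by the sets of users who chatted in them (the reversed CHATTER orientation makes each Stream point to its chatters) and scores each pair with the Jaccard index, |A ∩ B| / |A ∪ B|. The pure-Python sketch below illustrates that scoring together with the `similarityCutoff` and `topK` settings on toy data. It is not how GDS implements the algorithm, the inclusive cutoff comparison is a choice made for this sketch, and the stream and user names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity: shared elements divided by all distinct elements."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def node_similarity(audiences: Dict[str, Set[str]],
                    cutoff: float = 0.05,
                    top_k: int = 10) -> Dict[str, List[Tuple[str, float]]]:
    """For each stream, keep its top_k most similar streams scoring >= cutoff."""
    scores: Dict[str, List[Tuple[str, float]]] = {s: [] for s in audiences}
    for s1, s2 in combinations(audiences, 2):
        score = jaccard(audiences[s1], audiences[s2])
        if score >= cutoff:
            # Similarity is symmetric, so record the pair in both directions.
            scores[s1].append((s2, score))
            scores[s2].append((s1, score))
    return {s: sorted(pairs, key=lambda p: -p[1])[:top_k]
            for s, pairs in scores.items()}


# Hypothetical toy data: stream -> set of users who chatted in it.
audiences = {
    "stream_a": {"u1", "u2", "u3"},
    "stream_b": {"u2", "u3", "u4"},
    "stream_c": {"u9"},
}
print(node_similarity(audiences))
```

The returned pairs play the role of the SHARED_AUDIENCE relationships (with their `score` property) that the `mutate` call adds to the in-memory graph.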

The Node Similarity algorithm takes about 30 seconds to complete, as the database has almost five million users. The Cypher statement to provide recommendations using Personalized PageRank is the following:

```cypher
MATCH (s:Stream)
WHERE s.name = "kimdoe"
WITH collect(s) AS sourceNodes
CALL gds.pageRank.stream("shared-audience",
  {sourceNodes:sourceNodes, relationshipTypes:['SHARED_AUDIENCE'],
   nodeLabels:['Stream']})
YIELD nodeId, score
WITH gds.util.asNode(nodeId) AS node, score
WHERE NOT node in sourceNodes
RETURN node.name AS streamer, score
ORDER BY score DESC LIMIT 3
```
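To build intuition for what `gds.pageRank.stream` with `sourceNodes` computes, here is a small pure-Python power-iteration sketch of Personalized PageRank. At each step, a random walker either follows an outgoing SHARED_AUDIENCE edge (with probability equal to the damping factor, 0.85 here) or jumps back to a source node. Edges are unweighted because the Cypher above passes no relationship weight property. The toy graph, the `recommend` helper, and the choice to send dangling-node scores back to the sources are assumptions for illustration; GDS’s own implementation and score scaling may differ, but the ranking idea matches the query: score everything relative to the source, drop the source, keep the top three.

```python
from typing import Dict, Iterable, List, Tuple


def personalized_pagerank(graph: Dict[str, List[str]],
                          sources: Iterable[str],
                          damping: float = 0.85,
                          iterations: int = 20) -> Dict[str, float]:
    """Unweighted PageRank whose random jumps always restart at the sources."""
    nodes = set(graph) | {v for targets in graph.values() for v in targets}
    src = {s for s in sources if s in nodes}
    if not src:
        raise ValueError("at least one source node must be in the graph")
    restart = {n: (1.0 / len(src) if n in src else 0.0) for n in nodes}
    rank = dict(restart)  # start with all probability mass on the sources
    for _ in range(iterations):
        nxt = {n: (1 - damping) * restart[n] for n in nodes}
        for u in nodes:
            targets = graph.get(u, [])
            if targets:
                # Spread u's damped score evenly over its outgoing edges.
                share = damping * rank[u] / len(targets)
                for v in targets:
                    nxt[v] += share
            else:
                # Dangling node: return its damped score to the sources.
                for s in src:
                    nxt[s] += damping * rank[u] * restart[s]
        rank = nxt
    return rank


def recommend(graph: Dict[str, List[str]], source: str,
              k: int = 3) -> List[Tuple[str, float]]:
    """Mirror the Cypher: score nodes, drop the source, keep the top k."""
    scores = personalized_pagerank(graph, [source])
    ranked = sorted(((n, s) for n, s in scores.items() if n != source),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical SHARED_AUDIENCE edges between streams (toy data).
shared_audience = {
    "kimdoe": ["a", "b"],
    "a": ["kimdoe", "c"],
    "b": ["kimdoe"],
    "c": ["a"],
    "d": ["c"],
}
print(recommend(shared_audience, "kimdoe"))
```

Stream `d` ends up with a score of zero: no walk that starts at `kimdoe` can ever reach it, which is exactly why Personalized PageRank yields recommendations tailored to the source streamer rather than globally popular streams.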

The OpenAI LLMs are not very good at using the Graph Data Science library, as their knowledge cutoff is September 2021, and version 2 of the Graph Data Science library was released in April 2022. Therefore, we need to provide another example in the prompt to show the LLM how to use Personalized PageRank to give recommendations.

```python
# Literal Cypher map braces are doubled ({{...}}) so that PromptTemplate does
# not mistake them for input variables; only {schema} and {question} are filled.
CYPHER_RECOMMENDATION_TEMPLATE = """Task:Generate Cypher statement to query a graph database.
Instructions:
Use only the provided relationship types and properties in the schema.
Do not use any other relationship types or properties that are not provided.
Schema:
{schema}
Cypher examples:
# How many streamers are from Norway?
MATCH (s:Stream)-[:HAS_LANGUAGE]->(:Language {{name: 'no'}})
RETURN count(s) AS streamers
# Which streamers do you recommend if I like kimdoe?
MATCH (s:Stream)
WHERE s.name = "kimdoe"
WITH collect(s) AS sourceNodes
CALL gds.pageRank.stream("shared-audience",
  {{sourceNodes:sourceNodes, relationshipTypes:['SHARED_AUDIENCE'],
   nodeLabels:['Stream']}})
YIELD nodeId, score
WITH gds.util.asNode(nodeId) AS node, score
WHERE NOT node in sourceNodes
RETURN node.name AS streamer, score
ORDER BY score DESC LIMIT 3

Note: Do not include any explanations or apologies in your responses.
Do not respond to any questions that might ask anything else than for you to construct a Cypher statement.
Do not include any text except the generated Cypher statement.

The question is:
{question}"""
CYPHER_RECOMMENDATION_PROMPT = PromptTemplate(
    input_variables=["schema", "question"], template=CYPHER_RECOMMENDATION_TEMPLATE
)
```

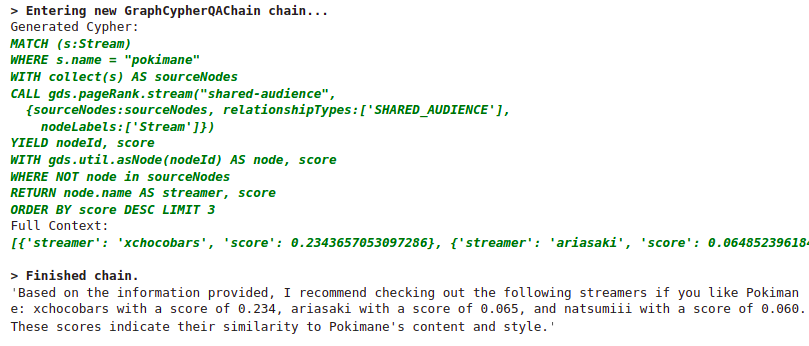

We can now test the Personalized PageRank recommendations.

```python
chain_recommendation_example = GraphCypherQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name='gpt-4'), graph=graph, verbose=True,
    cypher_prompt=CYPHER_RECOMMENDATION_PROMPT,
)

chain_recommendation_example.run("""
Which streamers do you recommend if I like pokimane?
""")
```

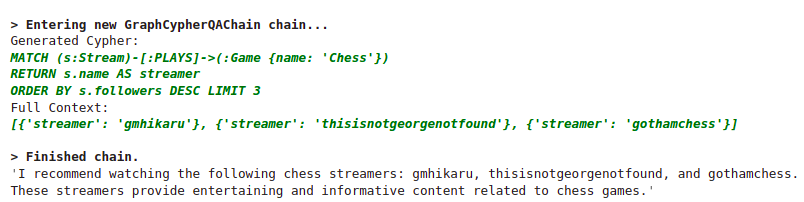

Results

Unfortunately, here we have to use the GPT-4 model, as gpt-3.5-turbo is stubborn and won’t imitate the complex Personalized PageRank example.

We can also test whether the GPT-4 model will generalize the Personalized PageRank recommendation to other use cases.

```python
chain_recommendation_example.run("""
Which streamers do you recommend to watch if I like Chess games?
""")
```

Results

The LLM took a more straightforward route to providing recommendations and simply returned the three chess streamers with the highest follower count. We can’t really blame it for choosing this option.

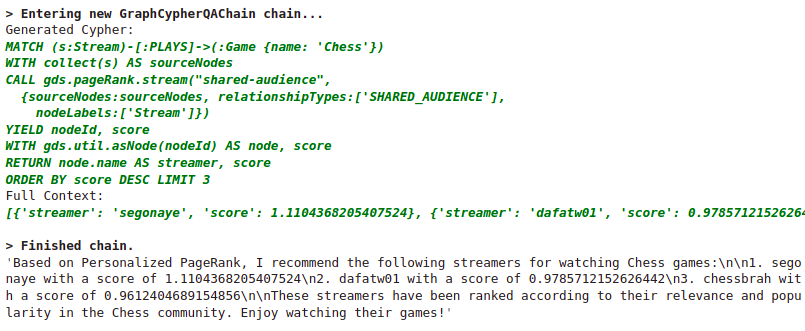

However, LLMs are quite good at following hints:

```python
chain_recommendation_example.run("""
Which streamers do you recommend to watch if I like Chess games?
Use Personalized PageRank to provide recommendations.
Do not exclude sourceNodes in the answer
""")
```

Results

Summary

In this blog post, we expanded on using knowledge graphs in LangChain applications, focusing on improving prompts for better Cypher statements. The main opportunity to improve Cypher generation accuracy is to use the few-shot capabilities of LLMs, offering Cypher statement examples that dictate the type of statements an LLM should produce. This helps both when the LLM doesn’t correctly guess the property values and when it doesn’t produce the Cypher statements we would like it to generate. Additionally, we looked at how graph algorithms like Personalized PageRank can be used in LLM applications to provide better and more relevant answers.

As always, the code is available on GitHub.

LangChain Cypher Search: Tips & Tricks was originally published in Neo4j Developer Blog on Medium.